Describe any image, then let a computer create it for you. What sounded futuristic only a few years ago has become reality with advances in neural networks and latent diffusion models (LDM). DALL·E by OpenAI has made a splash through the amazing generative art and realistic images that people create with it.

OpenAI allows access to DALL·E through their API, which means that you can incorporate its functionality into your Python applications.

In this tutorial, you’ll:

- Get started using the OpenAI Python library

- Explore API calls related to image generation

- Create images from text prompts

- Create variations of your generated image

- Convert Base64 JSON responses to PNG image files

You’ll need some experience with Python, JSON, and file operations to breeze through this tutorial. You can also study up on these topics while you go along, as you’ll find relevant links throughout the text.

Get Your Code: Click here to download the free sample code that you’ll use to generate stunning images with DALL·E and the OpenAI API.

Take the Quiz: Test your knowledge with our interactive “Generate Images With DALL·E and the OpenAI API” quiz. You’ll receive a score upon completion to help you track your learning progress:

Interactive Quiz

Generate Images With DALL·E and the OpenAI API

In this quiz, you'll test your understanding of generating images with DALL·E by OpenAI using Python. You'll revisit concepts such as using the OpenAI Python library, making API calls for image generation, creating images from text prompts, and converting Base64 strings to PNG image files.

Complete the Setup Requirements

If you’ve seen what DALL·E can do and you’re eager to make its functionality part of your Python applications, then you’re in the right spot! In this first section, you’ll quickly walk through what you need to do to get started using DALL·E’s image creation capabilities in your own code.

Install the OpenAI Python Library

Confirm that you’re running Python version 3.7.1 or higher, create and activate a virtual environment, and install the OpenAI Python library by running python -m pip install openai in your terminal.

The openai package gives you access to the full OpenAI API. In this tutorial, you’ll focus on image generation, which lets you interact with DALL·E models to create and edit images from text prompts. For text generation with ChatGPT, see How to Integrate ChatGPT’s API With Python Projects.

Get Your OpenAI API Key

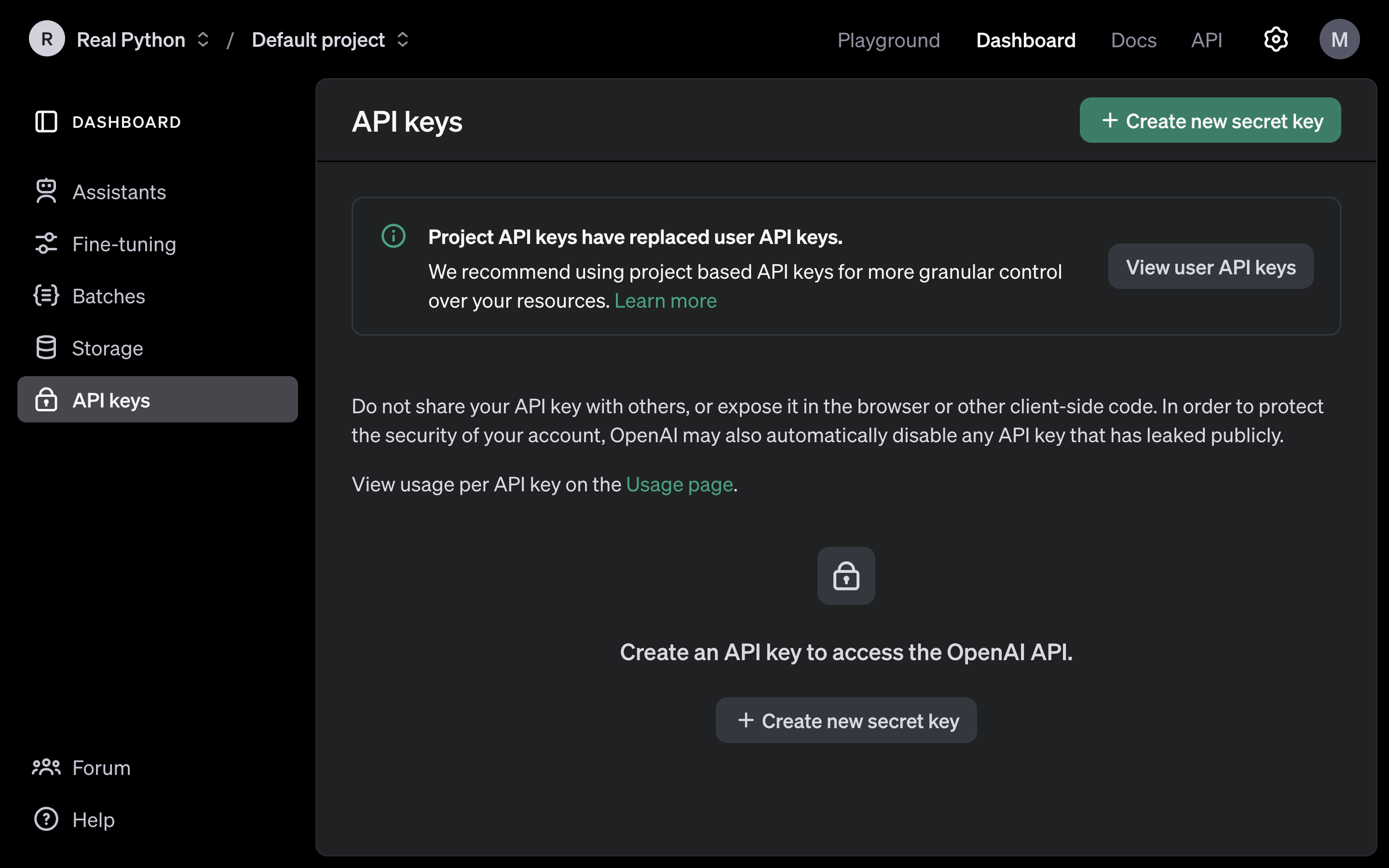

You need an API key to make successful API calls. Sign up with OpenAI and create a new project API key by clicking on the Dashboard menu and then API keys on the bottom left of the navigation menu:

On this page, you can create, manage, and delete the secret keys that give you access to the services that OpenAI offers through their API.

Click on Create new secret key to create a new API key, and copy the value shown in the pop-up window.

Note: OpenAI assigns your API usage through unique key values, so make sure to keep your API key private. The company calculates the pricing of requests to generate images on a per-image basis that depends on the model you use and the resolution of the output image.

Keep in mind that OpenAI’s API services and pricing policies may change. Be sure to check their website for up-to-date information about pricing and offers.

Always keep this key secret! Copy the value of this key so you can later use it in your project. You’ll only see the key value once.

Save Your API Key as an Environment Variable

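A quick way to save your API key and make it available to your Python scripts is to save it as an environment variable. On Linux and macOS, you can run export OPENAI_API_KEY="<your-key-here>" in your terminal. In Windows PowerShell, the equivalent is $ENV:OPENAI_API_KEY = "<your-key-here>".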

With this command, you make the API key accessible under the environment variable OPENAI_API_KEY in your current terminal session. Keep in mind that you’ll lose it if you close your terminal.

You could name your variable however you like, but if you use OPENAI_API_KEY, which is the name suggested by the OpenAI documentation, then you’ll be able to use the provided code examples without needing to do any additional setup.
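Before you make your first request, it can help to confirm that the variable is actually visible to Python. The following is a minimal sketch that only uses the standard library. The function name api_key_is_set() is made up for this illustration and isn’t part of the openai package:

```python
import os


def api_key_is_set(environ=os.environ, name="OPENAI_API_KEY"):
    """Return True if the named environment variable holds a non-empty value."""
    # Hypothetical helper for illustration; it never prints the key itself.
    return bool(environ.get(name, "").strip())


if __name__ == "__main__":
    if api_key_is_set():
        print("OPENAI_API_KEY is set.")
    else:
        print("OPENAI_API_KEY is missing. Set it before calling the API.")
```

If the check reports that the key is missing, then you most likely opened a new terminal session and need to set the variable again.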

With the logistics out of the way and your API key safely stored, you’re now ready to create some images from text prompts.

Create an Image From a Text Prompt With OpenAI’s DALL·E

Start by confirming that you’re set up and ready to go by using the openai library through its command-line interface:

(venv) $ openai api images.generate -p "a vaporwave computer"

This command will send a request to OpenAI’s Images API and create an image from the text prompt "a vaporwave computer". As a result, you’ll receive a JSON response that contains a URL pointing to your freshly created image:

```
{
  "created": 1723549436,
  "data": [
    {
      "b64_json": null,
      "revised_prompt": null,
      "url": "https://oaidalleapiprodscus.blob.core.windows.net/private/org
        ⮑ -H8Satq5hV2dscyutIStbN89m/user-O5O8mpE4TugEI9zqpxx8tGPt/img-4rDZEQ
        ⮑ leCdzAD3fuWowgLb5U.png?st=2024-08-13T10%3A43%3A56Z&se=2024-08-13T1
        ⮑ 2%3A43%3A56Z&sp=r&sv=2024-08-04&sr=b&rscd=inline&rsct=image/png&sk
        ⮑ oid=d505667d-d6c1-4a0a-bac7-5c84a87759f8&sktid=a48cca56-e6da-484e-
        ⮑ a814-9c849652bcb3&skt=2024-08-12T23%3A00%3A04Z&ske=2024-08-13T23%3
        ⮑ A00%3A04Z&sks=b&skv=2024-08-04&sig=c%2BBmroDkC0wkA2Lu8MxkvXH4xjdW5
        ⮑ B18iQ4e4BwJU6k%3D"
    }
  ]
}
```

Click your URL or copy and paste it into your browser to view the image. Here’s the image that DALL·E dreamt up for this request:

Your image will look different. That’s because the diffusion model creates each of these images only when you submit the request.

Note: The URL for your generated image is only valid for one hour, so make sure to save the image to your computer if you like it and want to keep it around.
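If you’d rather save the image from Python than from your browser, then a small download helper is enough. The sketch below uses only the standard library. The function name save_image_from_url() and the example path are assumptions for illustration, and you’d pass in the URL from your own response:

```python
import shutil
from pathlib import Path
from urllib.request import urlopen


def save_image_from_url(url, destination):
    """Download the resource at `url` and write its bytes to `destination`."""
    destination = Path(destination)
    # Create the target folder if it doesn't exist yet.
    destination.parent.mkdir(parents=True, exist_ok=True)
    # Stream the response to disk instead of holding it all in memory.
    with urlopen(url, timeout=30) as response, open(destination, mode="wb") as file:
        shutil.copyfileobj(response, file)
    return destination
```

You could then call save_image_from_url(your_url, "images/vaporwave.png") within the hour that the link stays valid.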

When you submit an API request, you must follow OpenAI’s usage policy. If you send text prompts that conflict with the usage policy, then you’ll receive an error and you might get blocked after repeated violations.

Now that you’ve confirmed that everything is set up correctly and you’ve gotten a glimpse of what you can do when generating images with the OpenAI API, you’ll learn how to integrate it into a Python script.

Call the API From a Python Script

It’s great that you can create an image from the command-line interface (CLI), but it’d be even better to incorporate this functionality into your Python applications. There’s a lot of exciting stuff you could build!

Open your favorite code editor and write a script that you’ll use to create an image from a text prompt, just like you did using the command line before.

However, this time you’ll specify the DALL·E model to use:

create.py

```
 1  from openai import OpenAI
 2
 3  client = OpenAI()
 4
 5  PROMPT = "A vaporwave computer"
 6
 7  response = client.images.generate(
 8      model="dall-e-3",
 9      prompt=PROMPT,
10  )
11
12  print(response.data[0].url)
```

Just like before, this code sends an authenticated request to the API that generates a single image based on the text in PROMPT. Note that this code adds some tweaks that’ll help you to build more functionality into the script:

- Line 3 creates an instance of OpenAI and saves it as client. This object has authentication baked in because you’ve named the environment variable OPENAI_API_KEY. If you follow that naming convention, then it automatically accesses the API key value from your environment. Alternatively, you can pass the API key when instantiating the object through api_key.

- Line 5 defines the text prompt as a constant. Putting this text into a constant at the top of your script makes its value quick to find and edit, and it lets you refactor your code later, for example, to collect the text from user input instead.

- Line 7 calls .images.generate() on the client. The next couple of lines contain some of the parameters that you can pass to the method.

- Line 8 sets the model to the newer DALL·E 3, which not only handles your prompt differently, but also supports different parameters, image sizes, and quality settings than the default DALL·E 2 model.

- Line 9 passes the value of PROMPT to the fittingly named prompt parameter. With that, you give DALL·E the text that it’ll use to create the image. Remember that you also passed a text prompt when you called the API from the command-line interface.
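The refactoring mentioned for the prompt constant could look roughly like the following sketch, which collects the prompt from command-line arguments and falls back to a default. The names get_prompt() and DEFAULT_PROMPT are hypothetical and only serve this example:

```python
import sys

DEFAULT_PROMPT = "A vaporwave computer"


def get_prompt(argv, default=DEFAULT_PROMPT):
    """Join command-line words into a prompt, or fall back to the default."""
    # argv[0] is the script name, so the prompt starts at index 1.
    words = [word for word in argv[1:] if word.strip()]
    return " ".join(words) if words else default


if __name__ == "__main__":
    print(get_prompt(sys.argv))
```

In create.py, you could then replace the constant’s value with get_prompt(sys.argv) and leave the rest of the script unchanged.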

Finally, you also want to get the URL so that you can look at the generated image online. For this, you step through the response object to the .url attribute in line 12 and print its value to your terminal.

When you run this script, you’ll get output that’s similar to before, but now you won’t see the whole JSON response, only the URL:

```
(venv) $ python create.py
https://oaidalleapiprodscus.blob.core.windows.net/private/org-H8Satq5hV2d
  ⮑ scyutIStbN89m/user-O5O8mpE4TugEI9zqpxx8tGPt/img-hMAEPhuqVH73JMRQ6BOV4J
  ⮑ Hz.png?st=2024-08-13T10%3A56%3A54Z&se=2024-08-13T12%3A56%3A54Z&sp=r&sv
  ⮑ =2023-11-03&sr=b&rscd=inline&rsct=image/png&skoid=d505667d-d6c1-4a0a-b
  ⮑ ac7-5c84a87759f8&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2024-0
  ⮑ 8-12T21%3A36%3A11Z&ske=2024-08-13T21%3A36%3A11Z&sks=b&skv=2023-11-03&s
  ⮑ ig=D7K6UF353uO3wJDOovbeyBsn2yaf384kEotVbjJ5SB4%3D
```

Click the link or paste it into your browser to view the generated image. Because you’re now using the DALL·E 3 model, the output will likely look more compelling:

That’s a significant increase in quality and detail, and it seems that the newer model has a better grasp on what makes up the style of vaporwave.

While that’s probably true, something else is happening behind the scenes that also plays a part in creating this increased image detail. When you send a request to generate an image using the DALL·E 3 model, the API rewrites your prompt before generating the image.

If you want to see the revised prompt that DALL·E actually used to generate your image, then you can access it through .revised_prompt:

create.py

```python
# ...

print(response.data[0].revised_prompt)
```

When prompting for image generation, you’ll generally get better results with more detailed prompts. Adding details to your prompts can go quite far! For example, here’s the revised prompt that generated the image above:

A computer set in the aesthetic of vaporwave; imagine a vintage machine from the 1980s, bathed in neon pink and blue hues. Its design evokes feelings of nostalgia with retro typography on its body. Glowing, digital palm trees are embossed on its casing, resembling a scene from an old video game. In the background, Greek busts and glitchy sunset patterns popular in vaporwave design can be seen, encapsulating the perfect blend of modern and retro styles.

That certainly is a lot more detail than you initially provided!

Note: Rewriting prompts only happens for requests to the DALL·E 3 model, so this attribute will be None when you use DALL·E 2.
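If your script should work with either model, then you might want to fall back to your own prompt when no rewritten one is available. Here’s a small sketch of that idea. The helper prompt_used() is hypothetical, and the SimpleNamespace objects are stand-ins for response.data[0], so no API call is made:

```python
from types import SimpleNamespace


def prompt_used(image, original_prompt):
    """Return the rewritten prompt if there is one, else the original prompt."""
    revised = getattr(image, "revised_prompt", None)
    return revised if revised else original_prompt


# Stand-ins for response.data[0] with and without a rewritten prompt.
dalle3_like = SimpleNamespace(revised_prompt="A vintage 1980s computer in neon pink")
dalle2_like = SimpleNamespace(revised_prompt=None)

print(prompt_used(dalle3_like, "A vaporwave computer"))
print(prompt_used(dalle2_like, "A vaporwave computer"))
```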

While DALL·E 3 may produce more stunning images, it gives you less control over the output because you can’t switch off prompt rewriting. Additionally, not all the functionality is available for this model just yet.

So for the rest of this tutorial, you’ll continue to work with DALL·E 2. Start by editing the script and adding a couple of additional parameters that you can set when calling .generate(). And while you’re at it, why not also add a bit more detail to your initial prompt:

create.py

```
 1  from openai import OpenAI
 2
 3  client = OpenAI()
 4
 5  PROMPT = "An eco-friendly computer from the 90s in the style of vaporwave"
 6
 7  response = client.images.generate(
 8      model="dall-e-2",  # Default
 9      prompt=PROMPT,
10      n=1,
11      size="256x256",
12  )
13
14  print(response.data[0].url)
```

You’ve added some detail to your prompt and changed the model to the default DALL·E 2. You also added two new parameters that allow for further customization:

- Line 10 passes the integer 1 to the parameter n. This parameter lets you define how many new images you want to create with the prompt. The value of n needs to be between one and ten and defaults to 1.

- Line 11 sets a value for size. With this, you can define the dimensions of the image that DALL·E should generate. The argument needs to be a string where the available sizes depend on the model that you’re using. For DALL·E 2, it needs to be "256x256", "512x512", or "1024x1024". Each string represents the dimensions in pixels of the image that you’ll receive. It defaults to the largest possible setting, 1024x1024.
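Because each request costs money, you may want to catch invalid values before you send them. The following sketch checks n and size against the DALL·E 2 limits described above. The function check_dalle2_options() is a made-up helper, not part of the openai library, and the API performs its own validation regardless:

```python
DALLE2_SIZES = {"256x256", "512x512", "1024x1024"}


def check_dalle2_options(n=1, size="1024x1024"):
    """Raise ValueError if n or size fall outside the DALL·E 2 limits."""
    # bool is a subclass of int, so rule it out explicitly.
    if not isinstance(n, int) or isinstance(n, bool) or not 1 <= n <= 10:
        raise ValueError(f"n must be an integer from 1 to 10, got {n!r}")
    if size not in DALLE2_SIZES:
        raise ValueError(f"size must be one of {sorted(DALLE2_SIZES)}, got {size!r}")
    return {"n": n, "size": size}
```

You could unpack the returned dictionary into your call, as in client.images.generate(model="dall-e-2", prompt=PROMPT, **check_dalle2_options(1, "256x256")).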

At the end of your script, you again print the URL of the generated image to your terminal. You’ve removed the line that prints .revised_prompt because this will be None when you work with DALL·E 2.

When you run the script, you get another URL as your response.

Click the link or paste it into your browser to view the generated image. Again, your image will look different, but you should see an image that resembles the prompt you used in PROMPT. It’ll be less detailed than your previous run because you’re working with DALL·E 2 again:

You may notice that this image is much smaller than the images you created before. That’s because you asked the API for a 256x256 pixel image through the size parameter. Smaller images are less expensive, so you just saved some money! As a successful saver, maybe you’d like to save something else—your image data.

Save the Image Data to a File

While it’s great that you’re creating images from text using Python, DALL·E, and the OpenAI API, the responses are currently quite fleeting. If you want to continue to work with the generated image within your Python script, it’s probably better to skip the URL and access the image data directly instead:

create.py

```
 1  from openai import OpenAI
 2
 3  client = OpenAI()
 4
 5  PROMPT = "An eco-friendly computer from the 90s in the style of vaporwave"
 6
 7  response = client.images.generate(
 8      model="dall-e-2",
 9      prompt=PROMPT,
10      n=1,
11      size="256x256",
12      response_format="b64_json",
13  )
14
15  print(response.data[0].b64_json[:50])
```

The API allows you to switch the response format from a URL to the Base64-encoded image data. In line 12, you set the value of response_format to "b64_json". The default value of this parameter is "url", which is why you’ve received URLs in the JSON responses up to now.

While the JSON response that you get after applying this change looks similar to before, the key that holds the image data is now "b64_json" instead of "url". On the response object, you access it through the .b64_json attribute. You applied this change in the call to print() on line 15 and limited the output to the first fifty characters.

If you run the script with these settings, then you’ll get the actual data of the generated image. But don’t run the script yet, because the image data will be lost immediately after the script runs, and you’ll never get to see the image!

To avoid losing the one perfect image that got away, you can store the JSON responses in a file instead of printing them to the terminal:

create.py

```
 1  import json
 2  from pathlib import Path
 3
 4  from openai import OpenAI
 5
 6  client = OpenAI()
 7
 8  PROMPT = "An eco-friendly computer from the 90s in the style of vaporwave"
 9  DATA_DIR = Path.cwd() / "responses"
10
11  DATA_DIR.mkdir(exist_ok=True)
12
13  response = client.images.generate(
14      model="dall-e-2",
15      prompt=PROMPT,
16      n=1,
17      size="256x256",
18      response_format="b64_json",
19  )
20
21  file_name = DATA_DIR / f"{PROMPT[:5]}-{response.created}.json"
22
23  with open(file_name, mode="w", encoding="utf-8") as file:
24      json.dump(response.to_dict(), file)
```

With a few additional lines of code, you’ve added file handling to your Python script using pathlib and json:

- Lines 9 and 11 define and create a data directory called "responses/" that’ll hold the API responses as JSON files.

- Line 21 defines a variable for the file path where you want to save the data. You use the beginning of the prompt and the timestamp from the JSON response to create a unique file name.

- Lines 23 and 24 create a new JSON file in the data directory and write the API response as JSON to that file.

With these additions, you can now run your script and generate images, and the image data will stick around in a dedicated file within your data directory.

Did you run the script and inspect the generated JSON file? Looks like gibberish, doesn’t it? So where’s that beautiful image that you know with certainty is the best image ever created by DALL·E?
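Instead of scrolling through the raw file, you could print a short summary of what it contains. This sketch assumes the file has the same "created" and "data" keys that you saw in the JSON response earlier. The function summarize_response() is a hypothetical helper for this illustration:

```python
import json
from pathlib import Path


def summarize_response(json_path):
    """Summarize a saved response without printing the raw image data."""
    response = json.loads(Path(json_path).read_text(encoding="utf-8"))
    images = response.get("data", [])
    return {
        "created": response.get("created"),
        "image_count": len(images),
        # Length of each Base64 string, or 0 if the entry only holds a URL.
        "base64_lengths": [len(image.get("b64_json") or "") for image in images],
    }
```

Calling summarize_response() with the path to one of the files in your responses/ directory gives you the timestamp, the number of images, and the size of each encoded string.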

It’s right there, only it’s currently represented as Base64-encoded bits, which doesn’t make for a great viewing experience if you’re a human. In the next section, you’ll learn how you can convert Base64-encoded image data into a PNG file that you can look at.

Decode a Base64 JSON Response

You just saved a PNG image as a Base64-encoded string in a JSON file. That’s great because it means that your image won’t get lost in the ether of the Internet after one hour, like it does if you keep generating URLs with your API calls.

However, now you can’t look at your image—unless you learn how to decode the data. Fortunately, this doesn’t require a lot of code in Python, so go ahead and create a new script file to accomplish this conversion:

convert.py

```
 1  import json
 2  from base64 import b64decode
 3  from pathlib import Path
 4
 5  DATA_DIR = Path.cwd() / "responses"
 6  JSON_FILE = DATA_DIR / "An ec-1667994848.json"
 7  IMAGE_DIR = Path.cwd() / "images" / JSON_FILE.stem
 8
 9  IMAGE_DIR.mkdir(parents=True, exist_ok=True)
10
11  with open(JSON_FILE, mode="r", encoding="utf-8") as file:
12      response = json.load(file)
13
14  for index, image_dict in enumerate(response["data"]):
15      image_data = b64decode(image_dict["b64_json"])
16      image_file = IMAGE_DIR / f"{JSON_FILE.stem}-{index}.png"
17      with open(image_file, mode="wb") as png:
18          png.write(image_data)
```

The script convert.py will read a JSON file with the filename that you defined in JSON_FILE. Remember that you’ll need to adapt the value of JSON_FILE to match the filename of your JSON file, which will be different.

The script then fetches the Base64-encoded string from the JSON data, decodes it, and saves the resulting image data as a PNG file in a directory. Python will even create that directory for you, if necessary.

Note that this script will also work if you’re fetching more than one image at a time. The for loop will decode each image and save it as a new file.

Note: You can generate JSON files with Base64-encoded data of multiple images by running create.py after passing a value higher than 1 to the n parameter.

Most of the code in this script is about reading and writing files from and into the correct folders. The true star of the code snippet is b64decode(). You import the function in line 2 and put it to work in line 15. It decodes the Base64-encoded string so that you can save the actual image data as a PNG file. Your computer will then be able to recognize it as a PNG image and know how to display it to you.
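If you want some reassurance that the decoded bytes really are PNG data before you write them to disk, then you can check for the eight-byte signature that every PNG file starts with. The following is a minimal sketch. The helper decode_png() is hypothetical, and the sample bytes are a stand-in rather than a real image:

```python
from base64 import b64decode, b64encode

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def decode_png(b64_string):
    """Decode a Base64 string and confirm the bytes start like a PNG file."""
    image_data = b64decode(b64_string)
    if not image_data.startswith(PNG_SIGNATURE):
        raise ValueError("Decoded data doesn't start with the PNG signature")
    return image_data


# Round trip with stand-in bytes; a real response holds a full image.
fake_png = PNG_SIGNATURE + b"not a real image body"
encoded = b64encode(fake_png).decode("ascii")
assert decode_png(encoded) == fake_png
```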

After running the script, you can head into the newly created folder structure and open the PNG file to finally see the ideal generated image that you’ve been waiting for so long:

Is it everything you’ve ever hoped for? If so, then rejoice! However, if the image you got looks kind of like what you’re looking for but not quite, then you can make another call to the API where you pass your image as input and create a couple of variations of it.

Create Variations of an Image

If you have an image—whether it’s a machine-generated image or not—that’s similar to what you’re looking for but doesn’t quite fit the bill, then you can create variations of it using OpenAI’s DALL·E 2 latent diffusion model.

Based on the code that you wrote earlier in this tutorial, you can create a new file that you’ll call vary.py:

vary.py

```
 1  import json
 2  from base64 import b64decode
 3  from pathlib import Path
 4
 5  from openai import OpenAI
 6
 7  client = OpenAI()
 8
 9  DATA_DIR = Path.cwd() / "responses"
10  SOURCE_FILE = DATA_DIR / "An ec-1667994848.json"
11
12  with open(SOURCE_FILE, mode="r", encoding="utf-8") as json_file:
13      saved_response = json.load(json_file)
14      image_data = b64decode(saved_response["data"][0]["b64_json"])
15
16  response = client.images.create_variation(
17      image=image_data,
18      n=3,
19      size="256x256",
20      response_format="b64_json",
21  )
22
23  new_file_name = f"vary-{SOURCE_FILE.stem[:5]}-{response.created}.json"
24
25  with open(DATA_DIR / new_file_name, mode="w", encoding="utf-8") as file:
26      json.dump(response.to_dict(), file)
```

In this script, you send the Base64-encoded image data from the previous JSON response to the Images API and ask for three variations of the image. You save the image data of all three images in a new JSON file in your data directory:

- Line 10 defines a constant that holds the name of the JSON file where you collected the Base64-encoded data of the image that you want to generate variations of. If you want to create variations of a different image, then you’ll need to edit this constant before rerunning the script.

- Line 14 decodes the image data using b64decode() in the same way you did in convert.py and saves it to image_data. Note that the code picks the first image from your JSON file with saved_response["data"][0]. If your saved response contains multiple images and you want to base your variations on another image, then you’ll need to adapt the index accordingly.

- Line 17 passes image_data as an argument to .create_variation(). Note that the image parameter of the method requires valid PNG image data, which is why you need to decode the string from the JSON response before passing it to the method.

- Line 18 defines how many variation images of the original image you want to receive. In this case, you set n to 3, which means that you’ll receive three new images.

If you take a look in your responses/ directory, then you’ll see a new JSON file whose name starts with vary-. This file holds the image data from your new image variations. You can copy the filename and set it as JSON_FILE in convert.py, run the conversion script, and take a look at your image variations.

Note: You don’t need to use Base64-encoded image data as a source. Instead, you can open a square PNG file no larger than four megabytes in binary mode and pass the image data like that to image:

```python
IMAGE_PATH = "images/example.png"

response = client.images.create_variation(
    image=open(IMAGE_PATH, mode="rb"),
    n=3,
    size="256x256",
    response_format="b64_json",
)
```

You can also find this approach in the official API documentation on image variations.

However, if you’re planning to include the functionality in a Python app, then you may want to skip saving a PNG file only to later load the file again. Therefore, it can be useful to know how to handle the image data if it doesn’t come directly from reading an image file.
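Whichever source you use, the image needs to be a square PNG of no more than four megabytes. You could check those constraints on the raw bytes before sending a request, as in the sketch below. It reads the width and height from the PNG header with the standard library. The helper check_variation_source() is hypothetical, and treating four megabytes as 4 * 1024 * 1024 bytes is an assumption:

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MAX_BYTES = 4 * 1024 * 1024  # Assumption: "four megabytes" read as 4 MiB


def check_variation_source(image_data):
    """Return (width, height) if the bytes look like a small, square PNG."""
    if len(image_data) > MAX_BYTES:
        raise ValueError("Image is larger than four megabytes")
    looks_like_png = (
        len(image_data) >= 24
        and image_data.startswith(PNG_SIGNATURE)
        and image_data[12:16] == b"IHDR"
    )
    if not looks_like_png:
        raise ValueError("Data doesn't look like a PNG file")
    # The IHDR chunk stores width and height as big-endian 32-bit integers.
    width, height = struct.unpack(">II", image_data[16:24])
    if width != height:
        raise ValueError(f"Image must be square, got {width}x{height}")
    return width, height
```

In vary.py, you could call check_variation_source(image_data) right before the request so that an unsuitable image fails early and locally.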

How do your image variations look? Maybe one of them sticks out as the best fit for what you were looking for:

If you like one of the images, but it’s still not quite what you’re looking for, then you can adapt vary.py by changing the value for SOURCE_FILE and run it again. If you want to base the variations on an image other than the first one, then you’ll also need to change the index of the image data that you want to use.

Conclusion

It’s fun to dream of eco-friendly computers with great AESTHETICS—but it’s even better to create these images with Python and OpenAI’s Images API!

In this tutorial, you’ve learned how to:

- Set up the OpenAI Python library locally

- Use the image generation capabilities of the OpenAI API

- Create images from text prompts using Python

- Create variations of your generated image

- Convert Base64 JSON responses to PNG image files

Most importantly, you gained practical experience with incorporating API calls to DALL·E into your Python scripts, which allows you to bring stunning image creation capabilities into your own applications.

Next Steps

The OpenAI image generation API has yet another feature that you can explore next. With a similar API call, you can edit parts of your image, thereby implementing inpainting and outpainting functionality from your Python scripts.

Look for a script called edit.py in the provided code examples to give it a try:

Get Your Code: Click here to download the free sample code that you’ll use to generate stunning images with DALL·E and the OpenAI API.

You might want to do further post-processing of your images with Python. For that, you could read up on image processing with Pillow.

To improve the handling and organization of the code that you wrote in this tutorial, you could replace the script constants with entries in a TOML settings file. Alternatively, you could create a command-line interface with argparse that allows you to pass the variables directly from your CLI.
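As a starting point for the argparse option, here’s a rough sketch that turns the prompt, n, and size into command-line arguments. The argument names and defaults mirror the constants and values used in this tutorial, but the exact interface is an assumption that you can adapt:

```python
import argparse


def parse_args(argv=None):
    """Parse the values that are hard-coded in create.py from the command line."""
    parser = argparse.ArgumentParser(description="Generate images from a prompt.")
    parser.add_argument("prompt", help="text prompt describing the image")
    parser.add_argument("-n", type=int, default=1, help="number of images")
    parser.add_argument(
        "--size",
        default="256x256",
        choices=["256x256", "512x512", "1024x1024"],
        help="image dimensions in pixels",
    )
    return parser.parse_args(argv)


# Passing a list makes the example reproducible without a real command line.
args = parse_args(["An eco-friendly computer", "-n", "2", "--size", "512x512"])
print(args.prompt, args.n, args.size)
```

In a real script, you’d call parse_args() without arguments so that it reads from sys.argv, and then pass args.prompt, args.n, and args.size on to .generate().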

You might be curious to dive deeper into latent diffusion models. In this tutorial, you learned to interact with the model through an API, but to learn more about the logic that powers this functionality, you might want to set it up on your own computer. However, if you wanted to run DALL·E on your local computer, then you’re out of luck because OpenAI hasn’t made the model publicly available.

But there are other latent diffusion models that achieve similarly stunning results. As a next step, you could install a project called Stable Diffusion locally, dig into the codebase, and use it to generate images without any content restrictions.

Or you could just continue to create beautiful and weird images with your Python scripts, DALL·E, and the OpenAI API! Which interesting text prompt did you try? What strange or beautiful image did DALL·E generate for you? Share your experience in the comments below, and keep dreaming!
