You’ve likely interacted with large language models (LLMs), like the ones behind OpenAI’s ChatGPT, and experienced their remarkable ability to answer questions, summarize documents, write code, and much more. While LLMs are remarkable by themselves, with a little programming knowledge, you can leverage libraries like LangChain to create your own LLM-powered chatbots that can do just about anything.

In an enterprise setting, one of the most popular ways to create an LLM-powered chatbot is through retrieval-augmented generation (RAG). When you design a RAG system, you use a retrieval model to retrieve relevant information, usually from a database or corpus, and provide this retrieved information to an LLM to generate contextually relevant responses.

In this tutorial, you’ll step into the shoes of an AI engineer working for a large hospital system. You’ll build a RAG chatbot in LangChain that uses Neo4j to retrieve data about the patients, patient experiences, hospital locations, visits, insurance payers, and physicians in your hospital system.

In this tutorial, you’ll learn how to:

- Use LangChain to build custom chatbots

- Design a chatbot using your understanding of the business requirements and hospital system data

- Work with graph databases

- Set up a Neo4j AuraDB instance

- Build a RAG chatbot that retrieves both structured and unstructured data from Neo4j

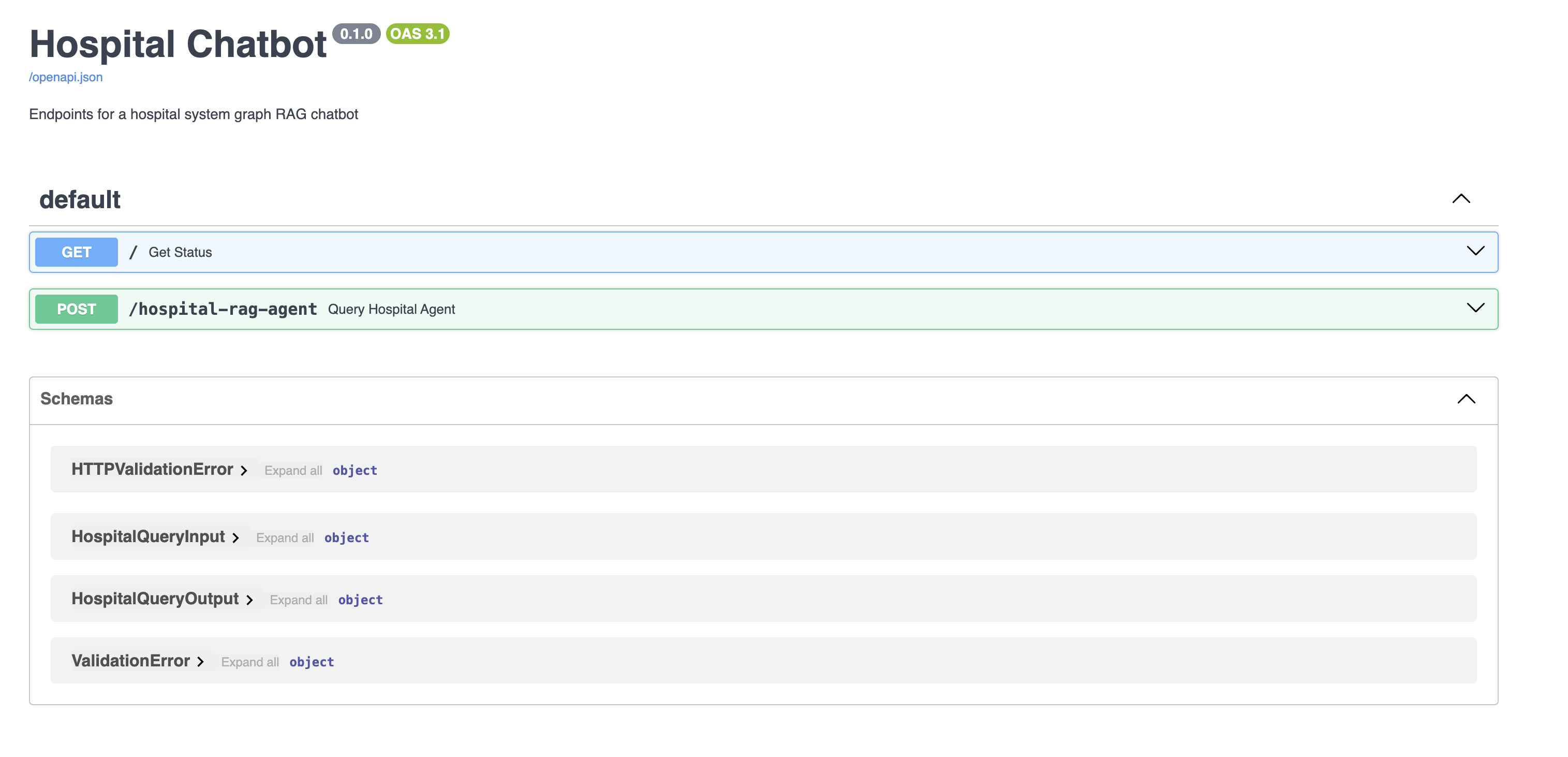

- Deploy your chatbot with FastAPI and Streamlit

Click the link below to download the complete source code and data for this project:

Get Your Code: Click here to download the free source code for your LangChain chatbot.

Take the Quiz: Test your knowledge with our interactive “Build an LLM RAG Chatbot With LangChain” quiz. You’ll receive a score upon completion to help you track your learning progress:

Interactive Quiz

Build an LLM RAG Chatbot With LangChainIn this quiz, you'll test your understanding of building a retrieval-augmented generation (RAG) chatbot using LangChain and Neo4j. This knowledge will allow you to create custom chatbots that can retrieve and generate contextually relevant responses based on both structured and unstructured data.

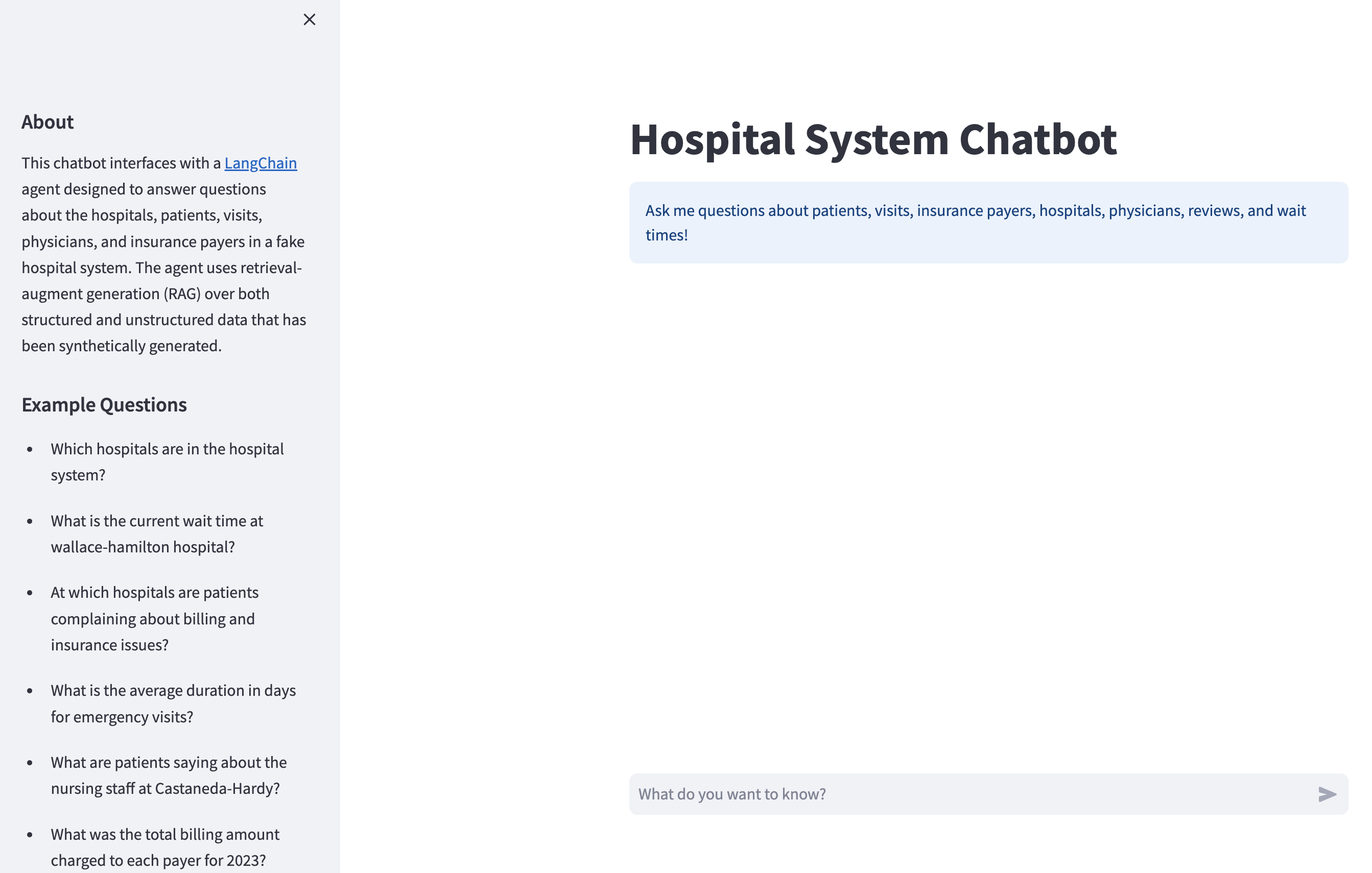

Demo: An LLM RAG Chatbot With LangChain and Neo4j

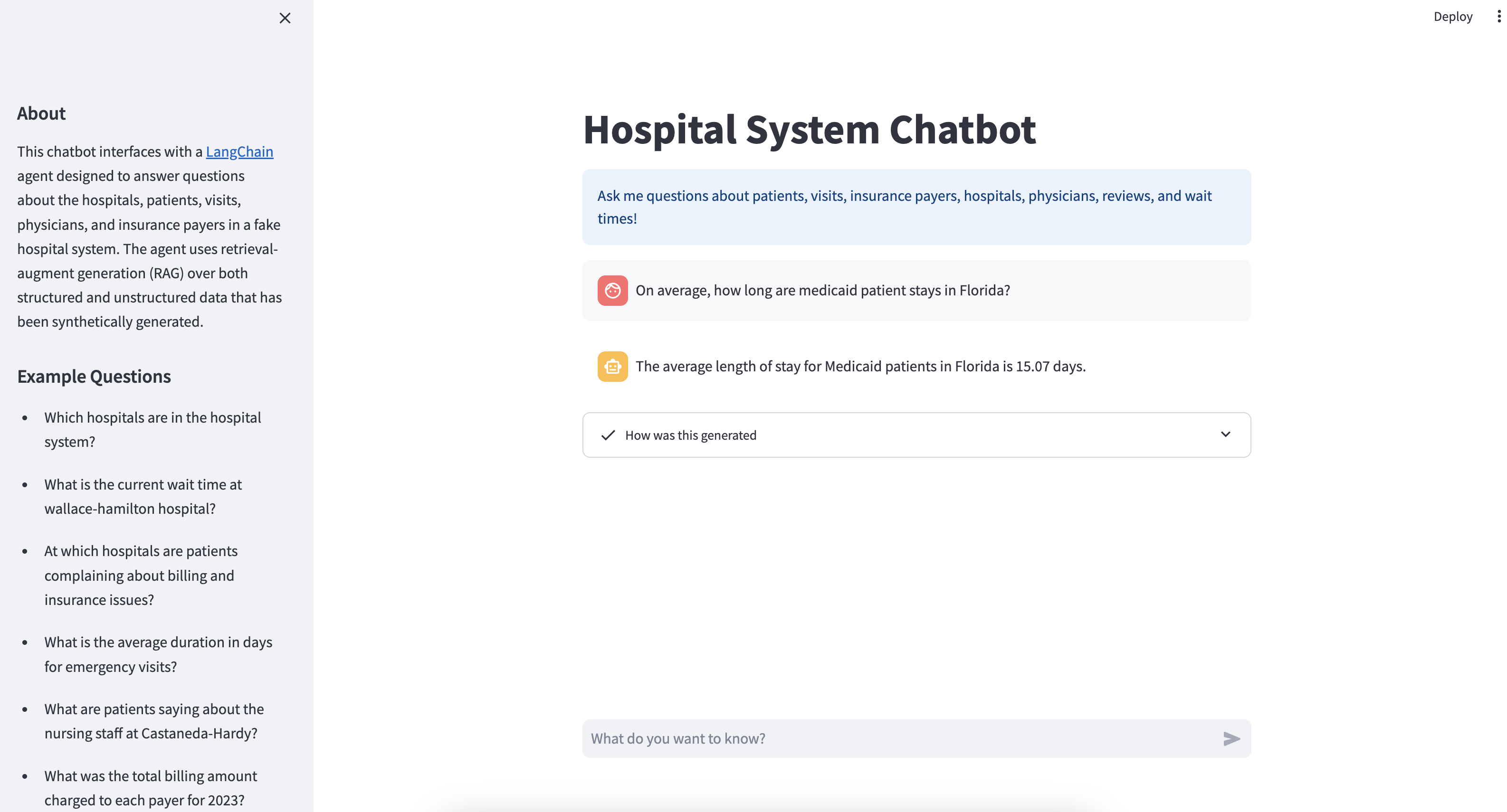

By the end of this tutorial, you’ll have a REST API that serves your LangChain chatbot. You’ll also have a Streamlit app that provides a nice chat interface to interact with your API:

Under the hood, the Streamlit app sends your messages to the chatbot API, and the chatbot generates and sends a response back to the Streamlit app, which displays it to the user.

You’ll get an in-depth overview of the data that your chatbot has access to later, but if you’re anxious to test it out, you can ask questions similar to the examples given in the sidebar:

You’ll learn how to tackle each step, from understanding the business requirements and data to building the Streamlit app. There’s a lot to unpack in this tutorial, but don’t feel overwhelmed. You’ll get some background on each concept introduced, along with links to external sources that will deepen your understanding. Now, it’s time to dive in!

Prerequisites

This tutorial is best suited for intermediate Python developers who want to get hands-on experience creating custom chatbots. Aside from intermediate Python knowledge, you’ll benefit from having a high-level understanding of the following concepts and technologies:

- Large language models (LLMs) and prompt engineering

- Text embeddings and vector databases

- Graph databases and Neo4j

- The OpenAI developer ecosystem

- REST APIs and FastAPI

- Asynchronous programming

- Docker and Docker Compose

Nothing listed above is a hard prerequisite, so don’t worry if you don’t feel knowledgeable in any of them. You’ll be introduced to each concept and technology along the way. Besides, there’s no better way to learn these prerequisites than to implement them yourself in this tutorial.

Next up, you’ll get a brief project overview and begin learning about LangChain.

Project Overview

Throughout this tutorial, you’ll create a few directories that make up your final chatbot. Here’s a breakdown of each directory:

-

langchain_intro/will help you get familiar with LangChain and equip you with the tools that you need to build the chatbot you saw in the demo, and it won’t be included in your final chatbot. You’ll cover this in Step 1. -

data/has the raw hospital system data stored as CSV files. You’ll explore this data in Step 2. In Step 3, you’ll move this data into a Neo4j database that your chatbot will query to answer questions. -

hospital_neo4j_etl/contains a script that loads the raw data fromdata/into your Neo4j database. You have to run this before building your chatbot, and you’ll learn everything you need to know about setting up a Neo4j instance in Step 3. -

chatbot_api/is your FastAPI app that serves your chatbot as a REST endpoint, and it’s the core deliverable of this project. Thechatbot_api/src/agents/andchatbot_api/src/chains/subdirectories contain the LangChain objects that comprise your chatbot. You’ll learn what agents and chains are later, but for now, just know that your chatbot is actually a LangChain agent composed of chains and functions. -

tests/includes two scripts that test how fast your chatbot can answer a series of questions. This will give you a feel for how much time you save by making asynchronous requests to LLM providers like OpenAI. -

chatbot_frontend/is your Streamlit app that interacts with the chatbot endpoint inchatbot_api/. This is the UI that you saw in the demo, and you’ll build this in Step 5.

All the environment variables needed to build and run your chatbot will be stored in a .env file. You’ll deploy the code in hospital_neo4j_etl/, chatbot_api, and chatbot_frontend as Docker containers that’ll be orchestrated with Docker Compose. If you want to experiment with the chatbot before going through the rest of this tutorial, then you can download the materials and follow the instructions in the README file to get things running:

Get Your Code: Click here to download the free source code for your LangChain chatbot.

With the project overview and prerequisites behind you, you’re ready to get started with the first step—getting familiar with LangChain.

Step 1: Get Familiar With LangChain

Before you design and develop your chatbot, you need to know how to use LangChain. In this section, you’ll get to know LangChain’s main components and features by building a preliminary version of your hospital system chatbot. This will give you all the necessary tools to build your full chatbot.

Use your favorite code editor to create a new Python project, and be sure to create a virtual environment for its dependencies. Make sure you have Python 3.10 or later installed. Activate your virtual environment and install the following libraries:

(venv) $ python -m pip install langchain==0.1.0 openai==1.7.2 langchain-openai==0.0.2 langchain-community==0.0.12 langchainhub==0.1.14

You’ll also want to install python-dotenv to help you manage environment variables:

(venv) $ python -m pip install python-dotenv

Python-dotenv loads environment variables from .env files into your Python environment, and you’ll find this handy as you develop your chatbot. However, you’ll eventually deploy your chatbot with Docker, which can handle environment variables for you, and you won’t need Python-dotenv anymore.

If you haven’t already, you’ll need to download reviews.csv from the materials or GitHub repo for this tutorial:

Get Your Code: Click here to download the free source code for your LangChain chatbot.

Next, open the project directory and add the following folders and files:

./

│

├── data/

│ └── reviews.csv

│

├── langchain_intro/

│ ├── chatbot.py

│ ├── create_retriever.py

│ └── tools.py

│

└── .env

The reviews.csv file in data/ is the one you just downloaded, and the remaining files you see should be empty.

You’re now ready to get started building your first chatbot with LangChain!

Chat Models

You might’ve guessed that the core component of LangChain is the LLM. LangChain provides a modular interface for working with LLM providers such as OpenAI, Cohere, HuggingFace, Anthropic, Together AI, and others. In most cases, all you need is an API key from the LLM provider to get started using the LLM with LangChain. LangChain also supports LLMs or other language models hosted on your own machine.

You’ll use OpenAI for this tutorial, but keep in mind there are many great open- and closed-source providers out there. You can always test out different providers and optimize depending on your application’s needs and cost constraints. Before moving forward, make sure you’re signed up for an OpenAI account and you have a valid API key.

Once you have your OpenAI API key, add it to your .env file:

OPENAI_API_KEY=<YOUR-OPENAI-API-KEY>

While you can interact directly with LLM objects in LangChain, a more common abstraction is the chat model. Chat models use LLMs under the hood, but they’re designed for conversations, and they interface with chat messages rather than raw text.

Using chat messages, you provide an LLM with additional detail about the kind of message you’re sending. All messages have role and content properties. The role tells the LLM who is sending the message, and the content is the message itself. Here are the most commonly used messages:

HumanMessage: A message from the user interacting with the language model.AIMessage: A message from the language model.SystemMessage: A message that tells the language model how to behave. Not all providers support theSystemMessage.

There are other messages types, like FunctionMessage and ToolMessage, but you’ll learn more about those when you build an agent.

Getting started with chat models in LangChain is straightforward. To instantiate an OpenAI chat model, navigate to langchain_intro and add the following code to chatbot.py:

langchain_intro/chatbot.py

import dotenv

from langchain_openai import ChatOpenAI

dotenv.load_dotenv()

chat_model = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

You first import dotenv and ChatOpenAI. Then you call dotenv.load_dotenv() which reads and stores environment variables from .env. By default, dotenv.load_dotenv() assumes .env is located in the current working directory, but you can pass the path to other directories if .env is located elsewhere.

You then instantiate a ChatOpenAI model using GPT 3.5 Turbo as the base LLM, and you set temperature to 0. OpenAI offers a diversity of models with varying price points, capabilities, and performances. GPT 3.5 turbo is a great model to start with because it performs well in many use cases and is cheaper than more recent models like GPT 4 and beyond.

Note: It’s a common misconception that setting temperature=0 guarantees deterministic responses from GPT models. While responses are closer to deterministic when temperature=0, there’s no guarantee that you’ll get the same response for identical requests. Because of this, GPT models might output slightly different results than what you see in the examples throughout this tutorial.

To use chat_model, open the project directory, start a Python interpreter, and run the following code:

>>> from langchain.schema.messages import HumanMessage, SystemMessage

>>> from langchain_intro.chatbot import chat_model

>>> messages = [

... SystemMessage(

... content="""You're an assistant knowledgeable about

... healthcare. Only answer healthcare-related questions."""

... ),

... HumanMessage(content="What is Medicaid managed care?"),

... ]

>>> chat_model.invoke(messages)

AIMessage(content='Medicaid managed care is a healthcare delivery system

in which states contract with managed care organizations (MCOs) to provide

healthcare services to Medicaid beneficiaries. Under this system, MCOs are

responsible for coordinating and delivering healthcare services to enrollees,

including primary care, specialty care, hospital services, and prescription

drugs. Medicaid managed care aims to improve care coordination, control costs,

and enhance the quality of care for Medicaid beneficiaries.')

In this block, you import HumanMessage and SystemMessage, as well as your chat model. You then define a list with a SystemMessage and a HumanMessage and run them through chat_model with chat_model.invoke(). Under the hood, chat_model makes a request to an OpenAI endpoint serving gpt-3.5-turbo-0125, and the results are returned as an AIMessage.

Note: You may find copying and pasting multiline code from this tutorial into your standard Python REPL a bit cumbersome. For a better experience, you could install an alternative Python REPL, such as IPython, bpython or ptpython, into your virtual environment and run the REPL interactions with those.

As you can see, the chat model answered What is Medicaid managed care? provided in the HumanMessage. You might be wondering what the chat model did with the SystemMessage in this context. Notice what happens when you ask the following question:

>>> messages = [

... SystemMessage(

... content="""You're an assistant knowledgeable about

... healthcare. Only answer healthcare-related questions."""

... ),

... HumanMessage(content="How do I change a tire?"),

... ]

>>> chat_model.invoke(messages)

AIMessage(content='I apologize, but I can only provide assistance

and answer questions related to healthcare.')

As described earlier, the SystemMessage tells the model how to behave. In this case, you told the model to only answer healthcare-related questions. This is why it refuses to tell you how to change your tire. The ability to control how an LLM relates to the user through text instructions is powerful, and this is the foundation for creating customized chatbots through prompt engineering.

While chat messages are a nice abstraction and are good for ensuring that you’re giving the LLM the right kind of message, you can also pass raw strings into chat models:

>>> chat_model.invoke("What is blood pressure?")

AIMessage(content='Blood pressure is the force exerted by

the blood against the walls of the blood vessels, particularly

the arteries, as it is pumped by the heart. It is measured in

millimeters of mercury (mmHg) and is typically expressed as two

numbers: systolic pressure over diastolic pressure. The systolic

pressure represents the force when the heart contracts and pumps

blood into the arteries, while the diastolic pressure represents

the force when the heart is at rest between beats. Blood pressure

is an important indicator of cardiovascular health and can be influenced

by various factors such as age, genetics, lifestyle, and underlying medical

conditions.')

In this code block, you pass the string What is blood pressure? directly to chat_model.invoke(). If you want to control the LLM’s behavior without a SystemMessage here, you can include instructions in the string input.

Note: In these examples, you used .invoke(), but LangChain has other methods that interact with LLMs. For instance, .stream() returns the response one token at time, and .batch() accepts a list of messages that the LLM responds to in one call.

Each method also has an analogous asynchronous method. For instance, you can run .invoke() asynchronously with ainvoke().

Next up, you’ll learn a modular way to guide your model’s response, as you did with the SystemMessage, making it easier to customize your chatbot.

Prompt Templates

LangChain allows you to design modular prompts for your chatbot with prompt templates. Quoting LangChain’s documentation, you can think of prompt templates as predefined recipes for generating prompts for language models.

Suppose you want to build a chatbot that answers questions about patient experiences from their reviews. Here’s what a prompt template might look like for this:

>>> from langchain.prompts import ChatPromptTemplate

>>> review_template_str = """Your job is to use patient

... reviews to answer questions about their experience at a hospital.

... Use the following context to answer questions. Be as detailed

... as possible, but don't make up any information that's not

... from the context. If you don't know an answer, say you don't know.

...

... {context}

...

... {question}

... """

>>> review_template = ChatPromptTemplate.from_template(review_template_str)

>>> context = "I had a great stay!"

>>> question = "Did anyone have a positive experience?"

>>> review_template.format(context=context, question=question)

"Human: Your job is to use patient\nreviews to answer questions about

their experience at a hospital.\nUse the following context to

answer questions. Be as detailed\nas possible, but don't make

up any information that's not\nfrom the context. If you don't

know an answer, say you don't know.\n\nI had a great

stay!\n\nDid anyone have a positive experience?\n"

You first import ChatPromptTemplate and define review_template_str, which contains the instructions that you’ll pass to the model, along with the variables context and question in replacement fields that LangChain delimits with curly braces ({}). You then create a ChatPromptTemplate object from review_template_str using the class method .from_template().

With review_template instantiated, you can pass context and question into the string template with review_template.format(). The results may look like you’ve done nothing more than standard Python string interpolation, but prompt templates have a lot of useful features that allow them to integrate with chat models.

Notice how your previous call to review_template.format() generated a string with Human at the beginning. This is because ChatPromptTemplate.from_template() assumes the string template is a human message by default. To change this, you can create more detailed prompt templates for each chat message that you want the model to process:

>>> from langchain.prompts import (

... PromptTemplate,

... SystemMessagePromptTemplate,

... HumanMessagePromptTemplate,

... ChatPromptTemplate,

... )

>>> review_system_template_str = """Your job is to use patient

... reviews to answer questions about their experience at a

... hospital. Use the following context to answer questions.

... Be as detailed as possible, but don't make up any information

... that's not from the context. If you don't know an answer, say

... you don't know.

...

... {context}

... """

>>> review_system_prompt = SystemMessagePromptTemplate(

... prompt=PromptTemplate(

... input_variables=["context"], template=review_system_template_str

... )

... )

>>> review_human_prompt = HumanMessagePromptTemplate(

... prompt=PromptTemplate(

... input_variables=["question"], template="{question}"

... )

... )

>>> messages = [review_system_prompt, review_human_prompt]

>>> review_prompt_template = ChatPromptTemplate(

... input_variables=["context", "question"],

... messages=messages,

... )

>>> context = "I had a great stay!"

>>> question = "Did anyone have a positive experience?"

>>> review_prompt_template.format_messages(context=context, question=question)

[SystemMessage(content="Your job is to use patient\nreviews to answer

questions about their experience at a\nhospital. Use the following context

to answer questions.\nBe as detailed as possible, but don't make up any

information\nthat's not from the context. If you don't know an answer, say

\nyou don't know.\n\nI had a great stay!\n"), HumanMessage(content='Did anyone

have a positive experience?')]

In this block, you import separate prompt templates for HumanMessage and SystemMessage. You then define a string, review_system_template_str, which serves as the template for a SystemMessage. Notice how you only declare a context variable in review_system_template_str.

From this, you create review_system_prompt which is a prompt template specifically for SystemMessage. Next you create a review_human_prompt for the HumanMessage. Notice how the template parameter is just a string with the question variable.

You then add review_system_prompt and review_human_prompt to a list called messages and create review_prompt_template, which is the final object that encompasses the prompt templates for both the SystemMessage and HumanMessage. Calling review_prompt_template.format_messages(context=context, question=question) generates a list with a SystemMessage and HumanMessage, which can be passed to a chat model.

To see how to combine chat models and prompt templates, you’ll build a chain with the LangChain Expression Language (LCEL). This helps you unlock LangChain’s core functionality of building modular customized interfaces over chat models.

Chains and LangChain Expression Language (LCEL)

The glue that connects chat models, prompts, and other objects in LangChain is the chain. A chain is nothing more than a sequence of calls between objects in LangChain. The recommended way to build chains is to use the LangChain Expression Language (LCEL).

To see how this works, take a look at how you’d create a chain with a chat model and prompt template:

langchain_intro/chatbot.py

1import dotenv

2from langchain_openai import ChatOpenAI

3from langchain.prompts import (

4 PromptTemplate,

5 SystemMessagePromptTemplate,

6 HumanMessagePromptTemplate,

7 ChatPromptTemplate,

8)

9

10dotenv.load_dotenv()

11

12review_template_str = """Your job is to use patient

13reviews to answer questions about their experience at

14a hospital. Use the following context to answer questions.

15Be as detailed as possible, but don't make up any information

16that's not from the context. If you don't know an answer, say

17you don't know.

18

19{context}

20"""

21

22review_system_prompt = SystemMessagePromptTemplate(

23 prompt=PromptTemplate(

24 input_variables=["context"],

25 template=review_template_str,

26 )

27)

28

29review_human_prompt = HumanMessagePromptTemplate(

30 prompt=PromptTemplate(

31 input_variables=["question"],

32 template="{question}",

33 )

34)

35messages = [review_system_prompt, review_human_prompt]

36

37review_prompt_template = ChatPromptTemplate(

38 input_variables=["context", "question"],

39 messages=messages,

40)

41

42chat_model = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

43

44review_chain = review_prompt_template | chat_model

Lines 1 to 42 are what you’ve already done. Namely, you define review_prompt_template which is a prompt template for answering questions about patient reviews, and you instantiate a gpt-3.5-turbo-0125 chat model. In line 44, you define review_chain with the | symbol, which is used to chain review_prompt_template and chat_model together.

This creates an object, review_chain, that can pass questions through review_prompt_template and chat_model in a single function call. In essence, this abstracts away all of the internal details of review_chain, allowing you to interact with the chain as if it were a chat model.

After saving the updated chatbot.py, start a new REPL session in your base project folder. Here’s how you can use review_chain:

>>> from langchain_intro.chatbot import review_chain

>>> context = "I had a great stay!"

>>> question = "Did anyone have a positive experience?"

>>> review_chain.invoke({"context": context, "question": question})

AIMessage(content='Yes, the patient had a great stay and had a

positive experience at the hospital.')

In this block, you import review_chain and define context and question as before. You then pass a dictionary with the keys context and question into review_chan.invoke(). This passes context and question through the prompt template and chat model to generate an answer.

Note: When calling chains, you can use all of the same methods that a chat model supports.

In general, the LCEL allows you to create arbitrary-length chains with the pipe symbol (|). For instance, if you wanted to format the model’s response, then you could add an output parser to the chain:

langchain_intro/chatbot.py

import dotenv

from langchain_openai import ChatOpenAI

from langchain.prompts import (

PromptTemplate,

SystemMessagePromptTemplate,

HumanMessagePromptTemplate,

ChatPromptTemplate,

)

from langchain_core.output_parsers import StrOutputParser

# ...

output_parser = StrOutputParser()

review_chain = review_prompt_template | chat_model | output_parser

Here, you add a StrOutputParser() instance to review_chain, which will make the model’s response more readable. Start a new REPL session and give it a try:

>>> from langchain_intro.chatbot import review_chain

>>> context = "I had a great stay!"

>>> question = "Did anyone have a positive experience?"

>>> review_chain.invoke({"context": context, "question": question})

'Yes, the patient had a great stay and had a

positive experience at the hospital.'

This block is the same as before, except now you can see that review_chain returns a nicely-formatted string rather than an AIMessage.

The power of chains is in the creativity and flexibility they afford you. You can chain together complex pipelines to create your chatbot, and you end up with an object that executes your pipeline in a single method call. Next up, you’ll layer another object into review_chain to retrieve documents from a vector database.

Retrieval Objects

The goal of review_chain is to answer questions about patient experiences in the hospital from their reviews. So far, you’ve manually passed reviews in as context for the question. While this can work for a small number of reviews, it doesn’t scale well. Moreover, even if you can fit all reviews into the model’s context window, there’s no guarantee it will use the correct reviews when answering a question.

To overcome this, you need a retriever. The process of retrieving relevant documents and passing them to a language model to answer questions is known as retrieval-augmented generation (RAG).

For this example, you’ll store all the reviews in a vector database called ChromaDB. If you’re unfamiliar with this database tool and topics, then check out Embeddings and Vector Databases with ChromaDB before continuing.

You can install ChromaDB with the following command:

(venv) $ python -m pip install chromadb==0.4.22

With this installed, you can use the following code to create a ChromaDB vector database with patient reviews:

langchain_intro/create_retriever.py

1import dotenv

2from langchain.document_loaders.csv_loader import CSVLoader

3from langchain_community.vectorstores import Chroma

4from langchain_openai import OpenAIEmbeddings

5

6REVIEWS_CSV_PATH = "data/reviews.csv"

7REVIEWS_CHROMA_PATH = "chroma_data"

8

9dotenv.load_dotenv()

10

11loader = CSVLoader(file_path=REVIEWS_CSV_PATH, source_column="review")

12reviews = loader.load()

13

14reviews_vector_db = Chroma.from_documents(

15 reviews, OpenAIEmbeddings(), persist_directory=REVIEWS_CHROMA_PATH

16)

In lines 2 to 4, you import the dependencies needed to create the vector database. You then define REVIEWS_CSV_PATH and REVIEWS_CHROMA_PATH, which are paths where the raw reviews data is stored and where the vector database will store data, respectively.

You’ll get an overview of the hospital system data later, but all you need to know for now is that reviews.csv stores patient reviews. The review column in reviews.csv is a string with the patient’s review.

In lines 11 and 12, you load the reviews using LangChain’s CSVLoader. In lines 14 to 16, you create a ChromaDB instance from reviews using the default OpenAI embedding model, and you store the review embeddings at REVIEWS_CHROMA_PATH.

Note: In practice, if you’re embedding a large document, you should use a text splitter. Text splitters break the document into smaller chunks before running them through an embedding model. This is important because embedding models have a fixed-size context window, and as the size of the text grows, an embedding’s ability to accurately represent the text decreases.

For this example, you can embed each review individually because they’re relatively small.

Next, open a terminal and run the following command from the project directory:

(venv) $ python langchain_intro/create_retriever.py

It should only take a minute or so to run, and afterwards you can start performing semantic search over the review embeddings:

>>> import dotenv

>>> from langchain_community.vectorstores import Chroma

>>> from langchain_openai import OpenAIEmbeddings

>>> REVIEWS_CHROMA_PATH = "chroma_data/"

>>> dotenv.load_dotenv()

True

>>> reviews_vector_db = Chroma(

... persist_directory=REVIEWS_CHROMA_PATH,

... embedding_function=OpenAIEmbeddings(),

... )

>>> question = """Has anyone complained about

... communication with the hospital staff?"""

>>> relevant_docs = reviews_vector_db.similarity_search(question, k=3)

>>> relevant_docs[0].page_content

'review_id: 73\nvisit_id: 7696\nreview: I had a frustrating experience

at the hospital. The communication between the medical staff and me was

unclear, leading to misunderstandings about my treatment plan. Improvement

is needed in this area.\nphysician_name: Maria Thompson\nhospital_name:

Little-Spencer\npatient_name: Terri Smith'

>>> relevant_docs[1].page_content

'review_id: 521\nvisit_id: 631\nreview: I had a challenging time at the

hospital. The medical care was adequate, but the lack of communication

between the staff and me left me feeling frustrated and confused about my

treatment plan.\nphysician_name: Samantha Mendez\nhospital_name:

Richardson-Powell\npatient_name: Kurt Gordon'

>>> relevant_docs[2].page_content

'review_id: 785\nvisit_id: 2593\nreview: My stay at the hospital was challenging.

The medical care was adequate, but the lack of communication from the staff

created some frustration.\nphysician_name: Brittany Harris\nhospital_name:

Jones, Taylor and Garcia\npatient_name: Ryan Jacobs'

You import the dependencies needed to call ChromaDB and specify the path to the stored ChromaDB data in REVIEWS_CHROMA_PATH. You then load environment variables using dotenv.load_dotenv() and create a new Chroma instance pointing to your vector database. Notice how you have to specify an embedding function again when connecting to your vector database. Be sure this is the same embedding function that you used to create the embeddings.

Next, you define a question and call .similarity_search() on reviews_vector_db, passing in question and k=3. This creates an embedding for the question and searches the vector database for the three most similar review embeddings to question embedding. In this case, you see three reviews where patients complained about communication, which is exactly what you asked for!

The last thing to do is add your reviews retriever to review_chain so that relevant reviews are passed to the prompt as context. Here’s how you do that:

langchain_intro/chatbot.py

import dotenv

from langchain_openai import ChatOpenAI

from langchain.prompts import (

PromptTemplate,

SystemMessagePromptTemplate,

HumanMessagePromptTemplate,

ChatPromptTemplate,

)

from langchain_core.output_parsers import StrOutputParser

from langchain_community.vectorstores import Chroma

from langchain_openai import OpenAIEmbeddings

from langchain.schema.runnable import RunnablePassthrough

REVIEWS_CHROMA_PATH = "chroma_data/"

# ...

reviews_vector_db = Chroma(

persist_directory=REVIEWS_CHROMA_PATH,

embedding_function=OpenAIEmbeddings()

)

reviews_retriever = reviews_vector_db.as_retriever(k=10)

review_chain = (

{"context": reviews_retriever, "question": RunnablePassthrough()}

| review_prompt_template

| chat_model

| StrOutputParser()

)

As before, you import ChromaDB’s dependencies, specify the path to your ChromaDB data, and instantiate a new Chroma object. You then create reviews_retriever by calling .as_retriever() on reviews_vector_db to create a retriever object that you’ll add to review_chain. Because you specified k=10, the retriever will fetch the ten reviews most similar to the user’s question.

You then add a dictionary with context and question keys to the front of review_chain. Instead of passing context in manually, review_chain will pass your question to the retriever to pull relevant reviews. Assigning question to a RunnablePassthrough object ensures the question gets passed unchanged to the next step in the chain.

You now have a fully functioning chain that can answer questions about patient experiences from their reviews. Start a new REPL session and try it out:

>>> from langchain_intro.chatbot import review_chain

>>> question = """Has anyone complained about

... communication with the hospital staff?"""

>>> review_chain.invoke(question)

'Yes, several patients have complained about communication

with the hospital staff. Terri Smith mentioned that the

communication between the medical staff and her was unclear,

leading to misunderstandings about her treatment plan.

Kurt Gordon also mentioned that the lack of communication

between the staff and him left him feeling frustrated and

confused about his treatment plan. Ryan Jacobs also experienced

frustration due to the lack of communication from the staff.

Shannon Williams also mentioned that the lack of communication

between the staff and her made her stay at the hospital less enjoyable.'

As you can see, you only call review_chain.invoke(question) to get retrieval-augmented answers about patient experiences from their reviews. You’ll improve upon this chain later by storing review embeddings, along with other metadata, in Neo4j.

Now that you understand chat models, prompts, chains, and retrieval, you’re ready to dive into the last LangChain concept—agents.

Agents

So far, you’ve created a chain to answer questions using patient reviews. What if you want your chatbot to also answer questions about other hospital data, such as hospital wait times? Ideally, your chatbot can seamlessly switch between answering patient review and wait time questions depending on the user’s query. To accomplish this, you’ll need the following components:

- The patient review chain you already created

- A function that can look up wait times at a hospital

- A way for an LLM to know when it should answer questions about patient experiences or look up wait times

To accomplish the third capability, you need an agent.

An agent is a language model that decides on a sequence of actions to execute. Unlike chains where the sequence of actions is hard-coded, agents use a language model to determine which actions to take and in which order.

Before building the agent, create the following function to generate fake wait times for a hospital:

langchain_intro/tools.py

import random

import time

def get_current_wait_time(hospital: str) -> int | str:

"""Dummy function to generate fake wait times"""

if hospital not in ["A", "B", "C", "D"]:

return f"Hospital {hospital} does not exist"

# Simulate API call delay

time.sleep(1)

return random.randint(0, 10000)

In get_current_wait_time(), you pass in a hospital name, check if it’s valid, and then generate a random number to simulate a wait time. In reality, this would be some sort of database query or API call, but this will serve the same purpose for this demonstration.

You can now create an agent that decides between get_current_wait_time() and review_chain.invoke() depending on the question:

langchain_intro/chatbot.py

import dotenv

from langchain_openai import ChatOpenAI

from langchain.prompts import (

PromptTemplate,

SystemMessagePromptTemplate,

HumanMessagePromptTemplate,

ChatPromptTemplate,

)

from langchain_core.output_parsers import StrOutputParser

from langchain_community.vectorstores import Chroma

from langchain_openai import OpenAIEmbeddings

from langchain.schema.runnable import RunnablePassthrough

from langchain.agents import (

create_openai_functions_agent,

Tool,

AgentExecutor,

)

from langchain import hub

from langchain_intro.tools import get_current_wait_time

# ...

tools = [

Tool(

name="Reviews",

func=review_chain.invoke,

description="""Useful when you need to answer questions

about patient reviews or experiences at the hospital.

Not useful for answering questions about specific visit

details such as payer, billing, treatment, diagnosis,

chief complaint, hospital, or physician information.

Pass the entire question as input to the tool. For instance,

if the question is "What do patients think about the triage system?",

the input should be "What do patients think about the triage system?"

""",

),

Tool(

name="Waits",

func=get_current_wait_time,

description="""Use when asked about current wait times

at a specific hospital. This tool can only get the current

wait time at a hospital and does not have any information about

aggregate or historical wait times. This tool returns wait times in

minutes. Do not pass the word "hospital" as input,

only the hospital name itself. For instance, if the question is

"What is the wait time at hospital A?", the input should be "A".

""",

),

]

hospital_agent_prompt = hub.pull("hwchase17/openai-functions-agent")

agent_chat_model = ChatOpenAI(

model="gpt-3.5-turbo-1106",

temperature=0,

)

hospital_agent = create_openai_functions_agent(

llm=agent_chat_model,

prompt=hospital_agent_prompt,

tools=tools,

)

hospital_agent_executor = AgentExecutor(

agent=hospital_agent,

tools=tools,

return_intermediate_steps=True,

verbose=True,

)

In this block, you import a few additional dependencies that you’ll need to create the agent. You then define a list of Tool objects. A Tool is an interface that an agent uses to interact with a function. For instance, the first tool is named Reviews and it calls review_chain.invoke() if the question meets the criteria of description.

Notice how description gives the agent instructions as to when it should call the tool. This is where good prompt engineering skills are paramount to ensuring the LLM calls the correct tool with the correct inputs.

The second Tool in tools is named Waits, and it calls get_current_wait_time(). Again, the agent has to know when to use the Waits tool and what inputs to pass into it depending on the description.

Next, you initialize a ChatOpenAI object using gpt-3.5-turbo-1106 as your language model. You then create an OpenAI functions agent with create_openai_functions_agent(). This creates an agent designed to pass inputs to functions. It does this by returning valid JSON objects that store function inputs and their corresponding value.

To create the agent run time, you pass the agent and tools into AgentExecutor. Setting return_intermediate_steps and verbose to True will allow you to see the agent’s thought process and the tools it calls.

Start a new REPL session to give your new agent a spin:

>>> from langchain_intro.chatbot import hospital_agent_executor

>>> hospital_agent_executor.invoke(

... {"input": "What is the current wait time at hospital C?"}

... )

> Entering new AgentExecutor chain...

Invoking: `Waits` with `C`

1374The current wait time at Hospital C is 1374 minutes.

> Finished chain.

{'input': 'What is the current wait time at hospital C?',

'output': 'The current wait time at Hospital C is 1374 minutes.',

'intermediate_steps': [(AgentActionMessageLog(tool='Waits',

tool_input='C', log='\nInvoking: `Waits` with `C`\n\n\n',

message_log=[AIMessage(content='', additional_kwargs={'function_call':

{'arguments': '{"__arg1":"C"}', 'name': 'Waits'}})]), 1374)]}

>>> hospital_agent_executor.invoke(

... {"input": "What have patients said about their comfort at the hospital?"}

... )

> Entering new AgentExecutor chain...

Invoking: `Reviews` with `What have patients said about their comfort at the

hospital?`

Patients have mentioned both positive and negative aspects of their comfort at

the hospital. One patient mentioned that the hospital's dedication to patient

comfort was evident in the well-designed private rooms and comfortable furnishings,

which made their recovery more bearable and contributed to an overall positive

experience. However, other patients mentioned that the uncomfortable beds made

it difficult for them to get a good night's sleep during their stay, affecting

their overall comfort. Another patient mentioned that the outdated and

uncomfortable beds affected their overall comfort, despite the doctors being

knowledgeable and the hospital having a clean environment. Patients have shared

mixed feedback about their comfort at the hospital. Some have praised the well-designed

private rooms and comfortable furnishings, which contributed to a positive experience.

However, others have mentioned discomfort due to the outdated and uncomfortable beds,

affecting their overall comfort despite the hospital's clean environment and knowledgeable

doctors.

> Finished chain.

{'input': 'What have patients said about their comfort at the hospital?', 'output':

"Patients have shared mixed feedback about their comfort at the hospital. Some have

praised the well-designed private rooms and comfortable furnishings, which contributed

to a positive experience. However, others have mentioned discomfort due to the outdated

and uncomfortable beds, affecting their overall comfort despite the hospital's clean

environment and knowledgeable doctors.", 'intermediate_steps':

[(AgentActionMessageLog(tool='Reviews', tool_input='What have patients said about their

comfort at the hospital?', log='\nInvoking: `Reviews` with `What have patients said about

their comfort at the hospital?`\n\n\n', message_log=[AIMessage(content='',

additional_kwargs={'function_call': {'arguments': '{"__arg1":"What have patients said about

their comfort at the hospital?"}', 'name': 'Reviews'}})]), "Patients have mentioned both

positive and negative aspects of their comfort at the hospital. One patient mentioned that

the hospital's dedication to patient comfort was evident in the well-designed private rooms

and comfortable furnishings, which made their recovery more bearable and contributed to an

overall positive experience. However, other patients mentioned that the uncomfortable beds

made it difficult for them to get a good night's sleep during their stay, affecting their

overall comfort. Another patient mentioned that the outdated and uncomfortable beds affected

their overall comfort, despite the doctors being knowledgeable and the hospital having a clean

environment.")]}

You first import the agent and then call hospital_agent_executor.invoke() with a question about a wait time. As indicated in the output, the agent knows that you’re asking about a wait time, and it passes C as input to the Waits tool. The Waits tool then calls get_current_wait_time(hospital="C") and returns the corresponding wait time to the agent. The agent then uses this wait time to generate its final output.

A similar process happens when you ask the agent about patient experience reviews, except this time the agent knows to call the Reviews tool with What have patients said about their comfort at the

hospital? as input. The Reviews tool runs review_chain.invoke() using your full question as input, and the agent uses the response to generate its output.

This is a profound capability. Agents give language models the ability to perform just about any task that you can write code for. Imagine all of the amazing, and potentially dangerous, chatbots you could build with agents.

You now have all of the prerequisite LangChain knowledge needed to build a custom chatbot. Next up, you’ll put on your AI engineer hat and learn about the business requirements and data needed to build your hospital system chatbot.

All of the code you’ve written so far was intended to teach you the fundamentals of LangChain, and it won’t be included in your final chatbot. Feel free to start with an empty directory in Step 2, where you’ll begin building your chatbot.

Step 2: Understand the Business Requirements and Data

Before you start working on any AI project, you need to understand the problem that you want to solve and make a plan for how you’re going to solve it. This involves clearly defining the problem, gathering requirements, understanding the data and technology available to you, and setting clear expectations with stakeholders. For this project, you’ll start by defining the problem and gathering business requirements for your chatbot.

Understand the Problem and Requirements

Imagine you’re an AI engineer working for a large hospital system in the US. Your stakeholders would like more visibility into the ever-changing data they collect. They want answers to ad-hoc questions about patients, visits, physicians, hospitals, and insurance payers without having to understand a query language like SQL, request a report from an analyst, or wait for someone to build a dashboard.

To accomplish this, your stakeholders want an internal chatbot tool, similar to ChatGPT, that can answer questions about your company’s data. After meeting to gather requirements, you’re provided with a list of the kinds of questions your chatbot should answer:

- What is the current wait time at XYZ hospital?

- Which hospital currently has the shortest wait time?

- At which hospitals are patients complaining about billing and insurance issues?

- Have any patients complained about the hospital being unclean?

- What have patients said about how doctors and nurses communicate with them?

- What are patients saying about the nursing staff at XYZ hospital?

- What was the total billing amount charged to Cigna payers in 2023?

- How many patients has Dr. John Doe treated?

- How many visits are open and what is their average duration in days?

- Which physician has the lowest average visit duration in days?

- How much was billed for patient 789’s stay?

- Which hospital worked with the most Cigna patients in 2023?

- What’s the average billing amount for emergency visits by hospital?

- Which state had the largest percent increase inedicaid visits from 2022 to 2023?

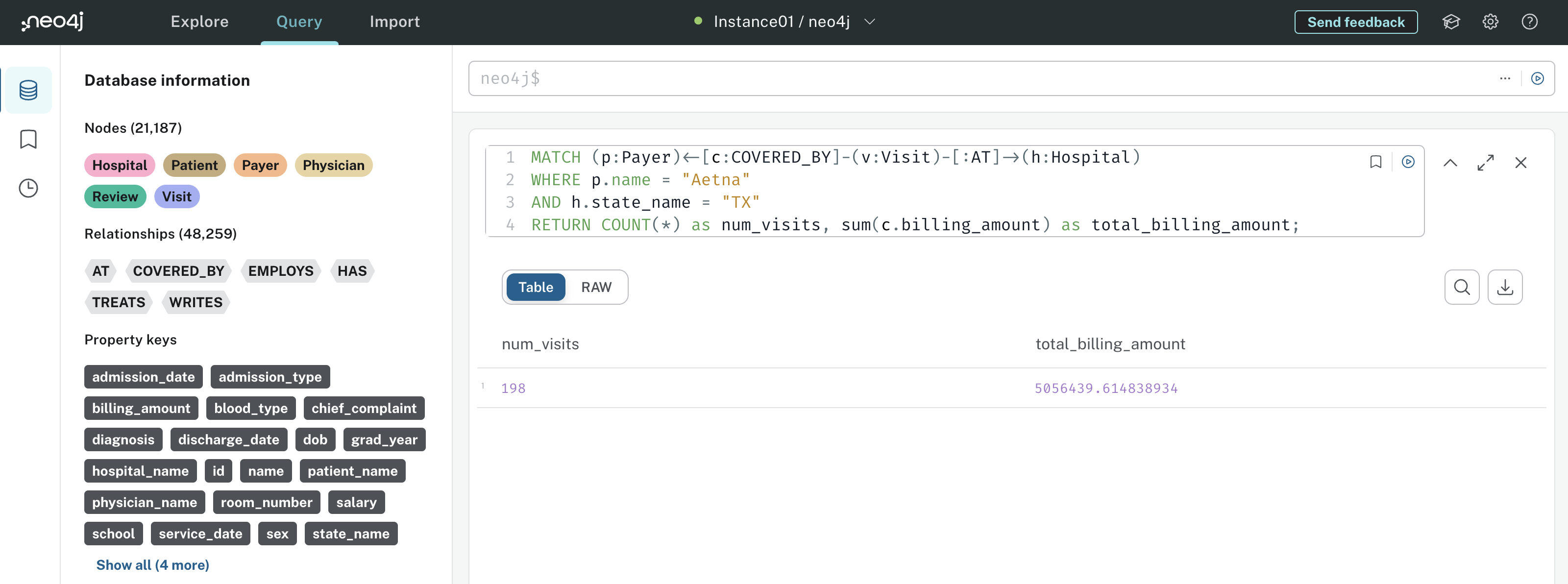

You can answer questions like What was the total billing amount charged to Cigna payers in 2023? with aggregate statistics using a query language like SQL. Crucially, these questions have a single objective answer. You could run pre-defined queries to answer these, but any time a stakeholder has a new or slightly nuanced question, you have to write a new query. To avoid this, your chatbot should dynamically generate accurate queries.

Questions like Have any patients complained about the hospital being unclean? or What have patients said about how doctors and nurses communicate with them? are more subjective and might have many acceptable answers. Your chatbot will need to read through documents, such as patient reviews, to answer these kinds of questions.

Ultimately, your stakeholders want a single chat interface that can seamlessly answer both subjective and objective questions. This means, when presented with a question, your chatbot needs to know what type of question is being asked and which data source to pull from.

For instance, if asked How much was billed for patient 789’s stay?, your chatbot should know it needs to query a database to find the answer. If asked What have patients said about how doctors and nurses communicate with them?, your chatbot should know it needs to read and summarize patient reviews.

Next up, you’ll explore the data your hospital system records, which is arguably the most important prerequisite to building your chatbot.

Explore the Available Data

Before building your chatbot, you need a thorough understanding of the data it will use to respond to user queries. This will help you determine what’s feasible and how you want to structure the data so that your chatbot can easily access it. All of the data you’ll use in this article was synthetically generated, and much of it was derived from a popular health care dataset on Kaggle.

In practice, the following datasets would likely be stored as tables in a SQL database, but you’ll work with CSV files to keep the focus on building the chatbot. This section will give you a detailed description of each CSV file.

You’ll need to place all CSV files that are part of this project in your data/ folder before continuing with the tutorial. Make sure that you downloaded them from the materials and placed them in your data/ folder:

Get Your Code: Click here to download the free source code for your LangChain chatbot.

hospitals.csv

The hospitals.csv file records information on each hospital that your company manages. There 30 hospitals and three fields in this file:

hospital_id: An integer that uniquely identifies a hospital.hospital_name: The hospital’s name.hospital_state: The state the hospital is located in.

If you’re familiar with traditional SQL databases and the star schema, you can think of hospitals.csv as a dimension table. Dimension tables are relatively short and contain descriptive information or attributes that provide context to the data in fact tables. Fact tables record events about the entities stored in dimension tables, and they tend to be longer tables.

In this case, hospitals.csv records information specific to hospitals, but you can join it to fact tables to answer questions about which patients, physicians, and payers are related to the hospital. This will be more clear when you explore visits.csv.

If you’re curious, you can inspect the first few rows of hospitals.csv using a dataframe library like Polars. Make sure Polars is installed in your virtual environment, and run the following code:

>>> import polars as pl

>>> HOSPITAL_DATA_PATH = "data/hospitals.csv"

>>> data_hospitals = pl.read_csv(HOSPITAL_DATA_PATH)

>>> data_hospitals.shape

(30, 3)

>>> data_hospitals.head()

shape: (5, 3)

┌─────────────┬───────────────────────────┬────────────────┐

│ hospital_id ┆ hospital_name ┆ hospital_state │

│ --- ┆ --- ┆ --- │

│ i64 ┆ str ┆ str │

╞═════════════╪═══════════════════════════╪════════════════╡

│ 0 ┆ Wallace-Hamilton ┆ CO │

│ 1 ┆ Burke, Griffin and Cooper ┆ NC │

│ 2 ┆ Walton LLC ┆ FL │

│ 3 ┆ Garcia Ltd ┆ NC │

│ 4 ┆ Jones, Brown and Murray ┆ NC │

└─────────────┴───────────────────────────┴────────────────┘

In this code block, you import Polars, define the path to hospitals.csv, read the data into a Polars DataFrame, display the shape of the data, and display the first 5 rows. This shows you, for example, that Walton, LLC hospital has an ID of 2 and is located in the state of Florida, FL.

physicians.csv

The physicians.csv file contains data about the physicians that work for your hospital system. This dataset has the following fields:

physician_id: An integer that uniquely identifies each physician.physician_name: The physician’s name.physician_dob: The physician’s date of birth.physician_grad_year: The year the physician graduated medical school.medical_school: Where the physician attended medical school.salary: The physician’s salary.

This data can again be thought of as a dimension table, and you can inspect the first few rows using Polars:

>>> PHYSICIAN_DATA_PATH = "data/physicians.csv"

>>> data_physician = pl.read_csv(PHYSICIAN_DATA_PATH)

>>> data_physician.shape

(500, 6)

>>> data_physician.head()

shape: (5, 6)

┌──────────────────┬──────────────┬───────────────┬─────────────────────┬───────────────────────────────────┬───────────────┐

│ physician_name ┆ physician_id ┆ physician_dob ┆ physician_grad_year ┆ medical_school ┆ salary │

│ --- ┆ --- ┆ --- ┆ --- ┆ --- ┆ --- │

│ str ┆ i64 ┆ str ┆ str ┆ str ┆ f64 │

╞══════════════════╪══════════════╪═══════════════╪═════════════════════╪═══════════════════════════════════╪═══════════════╡

│ Joseph Johnson ┆ 0 ┆ 1970-02-22 ┆ 2000-02-22 ┆ Johns Hopkins University School … ┆ 309534.155076 │

│ Jason Williams ┆ 1 ┆ 1982-12-22 ┆ 2012-12-22 ┆ Mayo Clinic Alix School of Medic… ┆ 281114.503559 │

│ Jesse Gordon ┆ 2 ┆ 1959-06-03 ┆ 1989-06-03 ┆ David Geffen School of Medicine … ┆ 305845.584636 │

│ Heather Smith ┆ 3 ┆ 1965-06-15 ┆ 1995-06-15 ┆ NYU Grossman Medical School ┆ 295239.766689 │

│ Kayla Hunter DDS ┆ 4 ┆ 1978-10-19 ┆ 2008-10-19 ┆ David Geffen School of Medicine … ┆ 298751.355201 │

└──────────────────┴──────────────┴───────────────┴─────────────────────┴───────────────────────────────────┴───────────────┘

As you can see from the code block, there are 500 physicians in physicians.csv. The first few rows from physicians.csv give you a feel for what the data looks like. For instance, Heather Smith has a physician ID of 3, was born on June 15, 1965, graduated medical school on June 15, 1995, attended NYU Grossman Medical School, and her salary is about $295,239.

payers.csv

The next file, payers.csv, records information about the insurance companies that your hospitals bills for patient visits. Similar to hospitals.csv, it’s a small file with a couple fields:

payer_id: An integer that uniquely identifies each payer.payer_name: The payer’s company name.

The only five payers in the data are Medicaid, UnitedHealthcare, Aetna, Cigna, and Blue Cross. Your stakeholders are very interested in payer activity, so payers.csv will be helpful once it’s connected to patients, hospitals, and physicians.

reviews.csv

The reviews.csv file contains patient reviews about their experience at the hospital. It has these fields:

review_id: An integer that uniquely identifies a review.visit_id: An integer that identifies the patient’s visit that the review was about.review: This is the free form text review left by the patient.physician_name: The name of the physician who treated the patient.hospital_name: The hospital where the patient stayed.patient_name: The patient’s name.

This dataset is the first one you’ve seen that contains the free text review field, and your chatbot should use this to answer questions about review details and patient experiences.

Here’s what reviews.csv looks like:

>>> REVIEWS_DATA_PATH = "data/reviews.csv"

>>> data_reviews = pl.read_csv(REVIEWS_DATA_PATH)

>>> data_reviews.shape

(1005, 6)

>>> data_reviews.head()

shape: (5, 6)

┌───────────┬──────────┬───────────────────────────────────┬─────────────────────┬──────────────────┬──────────────────┐

│ review_id ┆ visit_id ┆ review ┆ physician_name ┆ hospital_name ┆ patient_name │

│ --- ┆ --- ┆ --- ┆ --- ┆ --- ┆ --- │

│ i64 ┆ i64 ┆ str ┆ str ┆ str ┆ str │

╞═══════════╪══════════╪═══════════════════════════════════╪═════════════════════╪══════════════════╪══════════════════╡

│ 0 ┆ 6997 ┆ The medical staff at the hospita… ┆ Laura Brown ┆ Wallace-Hamilton ┆ Christy Johnson │

│ 9 ┆ 8138 ┆ The hospital's commitment to pat… ┆ Steven Watson ┆ Wallace-Hamilton ┆ Anna Frazier │

│ 11 ┆ 680 ┆ The hospital's commitment to pat… ┆ Chase Mcpherson Jr. ┆ Wallace-Hamilton ┆ Abigail Mitchell │

│ 892 ┆ 9846 ┆ I had a positive experience over… ┆ Jason Martinez ┆ Wallace-Hamilton ┆ Kimberly Rivas │

│ 822 ┆ 7397 ┆ The medical team at the hospital… ┆ Chelsey Davis ┆ Wallace-Hamilton ┆ Catherine Yang │

└───────────┴──────────┴───────────────────────────────────┴─────────────────────┴──────────────────┴──────────────────┘

There are 1005 reviews in this dataset, and you can see how each review relates to a visit. For instance, the review with ID 9 corresponds to visit ID 8138, and the first few words are “The hospital’s commitment to pat…”. You might be wondering how you can connect a review to a patient, or more generally, how you can connect all of the datasets described so far to each other. This is where visits.csv comes in.

visits.csv

The last file, visits.csv, records details about every hospital visit your company has serviced. Continuing with the star schema analogy, you can think of visits.csv as a fact table that connects hospitals, physicians, patients, and payers. Here are the fields:

visit_id: The unique identifier of a hospital visit.patient_id: The ID of the patient associated with the visit.date_of_admission: The date the patient was admitted to the hospital.room_number: The patient’s room number.admission_type: One of ‘Elective’, ‘Emergency’, or ‘Urgent’.chief_complaint: A string describing the patient’s primary reason for being at the hospital.primary_diagnosis: A string describing the primary diagnosis made by the physician.treatment_description: A text summary of the treatment given by the physician.test_results: One of ‘Inconclusive’, ‘Normal’, or ‘Abnormal’.discharge_date: The date the patient was discharged from the hospitalphysician_id: The ID of the physician that treated the patient.hospital_id: The ID of the hospital the patient stayed at.payer_id: The ID of the insurance payer used by the patient.billing_amount: The amount of money billed to the payer for the visit.visit_status: One of ‘OPEN’ or ‘DISCHARGED’.

This dataset gives you everything you need to answer questions about the relationship between each hospital entity. For example, if you know a physician ID, you can use visits.csv to figure out which patients, payers, and hospitals the physician is associated with. Take a look at what visits.csv looks like in Polars:

>>> VISITS_DATA_PATH = "data/visits.csv"

>>> data_visits = pl.read_csv(VISITS_DATA_PATH)

>>> data_visits.shape

(9998, 15)

>>> data_visits.head()

shape: (5, 15)

┌─────────┬─────────┬─────────┬─────────┬─────────┬─────────┬────────┬────────┬────────┬────────┬────────┬────────┬────────┬────────┬────────┐

│ patient ┆ date_of ┆ billing ┆ room_nu ┆ admissi ┆ dischar ┆ test_r ┆ visit_ ┆ physic ┆ payer_ ┆ hospit ┆ chief_ ┆ treatm ┆ primar ┆ visit_ │

│ _id ┆ _admiss ┆ _amount ┆ mber ┆ on_type ┆ ge_date ┆ esults ┆ id ┆ ian_id ┆ id ┆ al_id ┆ compla ┆ ent_de ┆ y_diag ┆ status │

│ --- ┆ ion ┆ --- ┆ --- ┆ --- ┆ --- ┆ --- ┆ --- ┆ --- ┆ --- ┆ --- ┆ int ┆ script ┆ nosis ┆ --- │

│ i64 ┆ --- ┆ f64 ┆ i64 ┆ str ┆ str ┆ str ┆ i64 ┆ i64 ┆ i64 ┆ i64 ┆ --- ┆ ion ┆ --- ┆ str │

│ ┆ str ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ str ┆ --- ┆ str ┆ │

│ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ str ┆ ┆ │

╞═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪════════╪════════╪════════╪════════╪════════╪════════╪════════╪════════╪════════╡

│ 0 ┆ 2022-11 ┆ 37490.9 ┆ 146 ┆ Electiv ┆ 2022-12 ┆ Inconc ┆ 0 ┆ 102 ┆ 1 ┆ 0 ┆ null ┆ null ┆ null ┆ DISCHA │

│ ┆ -17 ┆ 83364 ┆ ┆ e ┆ -01 ┆ lusive ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ RGED │

│ 1 ┆ 2023-06 ┆ 47304.0 ┆ 404 ┆ Emergen ┆ null ┆ Normal ┆ 1 ┆ 435 ┆ 4 ┆ 5 ┆ null ┆ null ┆ null ┆ OPEN │

│ ┆ -01 ┆ 64845 ┆ ┆ cy ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ │

│ 2 ┆ 2019-01 ┆ 36874.8 ┆ 292 ┆ Emergen ┆ 2019-02 ┆ Normal ┆ 2 ┆ 348 ┆ 2 ┆ 6 ┆ null ┆ null ┆ null ┆ DISCHA │

│ ┆ -09 ┆ 96997 ┆ ┆ cy ┆ -08 ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ RGED │

│ 3 ┆ 2020-05 ┆ 23303.3 ┆ 480 ┆ Urgent ┆ 2020-05 ┆ Abnorm ┆ 3 ┆ 270 ┆ 4 ┆ 15 ┆ null ┆ null ┆ null ┆ DISCHA │

│ ┆ -02 ┆ 22092 ┆ ┆ ┆ -03 ┆ al ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ RGED │

│ 4 ┆ 2021-07 ┆ 18086.3 ┆ 477 ┆ Urgent ┆ 2021-08 ┆ Normal ┆ 4 ┆ 106 ┆ 2 ┆ 29 ┆ Persis ┆ Prescr ┆ J45.90 ┆ DISCHA │

│ ┆ -09 ┆ 44184 ┆ ┆ ┆ -02 ┆ ┆ ┆ ┆ ┆ ┆ tent ┆ ibed a ┆ 9 - ┆ RGED │

│ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ cough ┆ combin ┆ Unspec ┆ │

│ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ and ┆ ation ┆ ified ┆ │

│ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ shortn ┆ of ┆ asthma ┆ │

│ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ┆ ess o… ┆ inha… ┆ , un… ┆ │

└─────────┴─────────┴─────────┴─────────┴─────────┴─────────┴────────┴────────┴────────┴────────┴────────┴────────┴────────┴────────┴────────┘

You can see there are 9998 visits recorded along with the 15 fields described above. Notice that chief_complaint, treatment_description, and primary_diagnosis might be missing for a visit. You’ll have to keep this in mind as your stakeholders might not be aware that many visits are missing critical data—this may be a valuable insight in itself! Lastly, notice that when a visit is still open, the discharged_date will be missing.

You now have an understanding of the data you’ll use to build the chatbot your stakeholders want. To recap, the files are broken out to simulate what a traditional SQL database might look like. Every hospital, patient, physician, review, and payer are connected through visits.csv.

Wait Times

You might have noticed there’s no data to answer questions like What is the current wait time at XYZ hospital? or Which hospital currently has the shortest wait time?. Unfortunately, the hospital system doesn’t record historical wait times. Your chatbot will have to call an API to get current wait time information. You’ll see how this works later.

With an understanding of the business requirements, available data, and LangChain functionalities, you can create a design for your chatbot.

Design the Chatbot

Now that you know the business requirements, data, and LangChain prerequisites, you’re ready to design your chatbot. A good design gives you and others a conceptual understanding of the components needed to build your chatbot. Your design should clearly illustrate how data flows through your chatbot, and it should serve as a helpful reference during development.

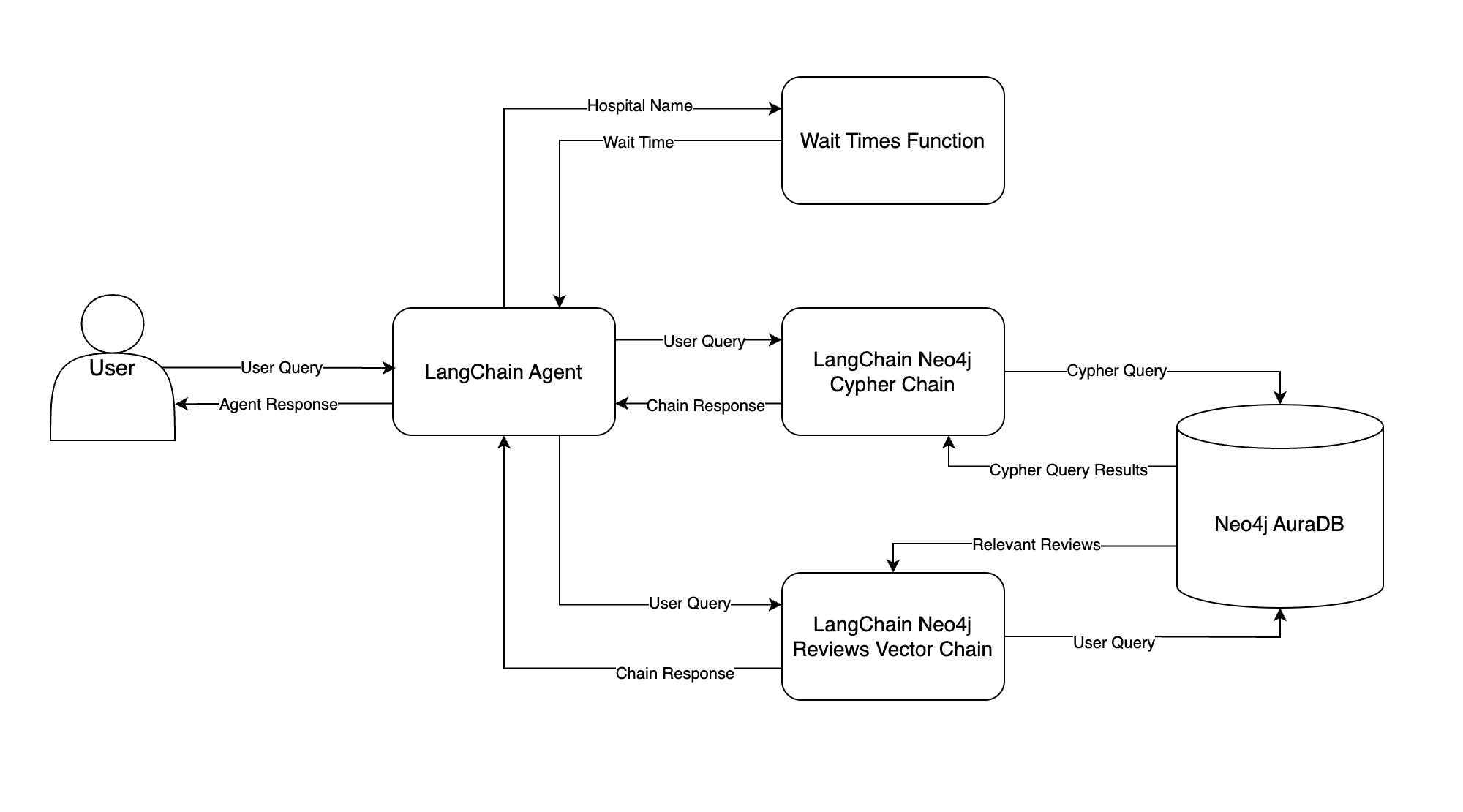

Your chatbot will use multiple tools to answer diverse questions about your hospital system. Here’s a flowchart illustrating how you’ll accomplish this:

This flowchart illustrates how data moves through your chatbot, starting from the user’s input query all the way to the final response. Here’s a summary of each component:

- LangChain Agent: The LangChain agent is the brain of your chatbot. Given a user query, the agent decides which tool to call and what to give the tool as input. The agent then observes the tool’s output and decides what to return to the user—this is the agent’s response.

- Neo4j AuraDB: You’ll store both structured hospital system data and patient reviews in a Neo4j AuraDB graph database. You’ll learn all about this in the next section.

- LangChain Neo4j Cypher Chain: This chain tries to convert the user query into Cypher, Neo4j’s query language, and execute the Cypher query in Neo4j. The chain then answers the user query using the Cypher query results. The chain’s response is fed back to the LangChain agent and sent to the user.

- LangChain Neo4j Reviews Vector Chain: This is very similar to the chain you built in Step 1, except now patient review embeddings are stored in Neo4j. The chain searches for relevant reviews based on those semantically similar to the user query, and the reviews are used to answer the user query.

- Wait Times Function: Similar to the logic in Step 1, the LangChain agent tries to extract a hospital name from the user query. The hospital name is passed as input to a Python function that gets wait times, and the wait time is returned to the agent.

To walk through an example, suppose a user asks How many emergency visits were there in 2023? The LangChain agent will receive this question and decide which tool, if any, to pass the question to. In this case, the agent should pass the question to the LangChain Neo4j Cypher Chain. The chain will try to convert the question to a Cypher query, run the Cypher query in Neo4j, and use the query results to answer the question.

Once the LangChain Neo4j Cypher Chain answers the question, it will return the answer to the agent, and the agent will relay the answer to the user.

With this design in mind, you can start building your chatbot. Your first task is to set up a Neo4j AuraDB instance for your chatbot to access.

Step 3: Set Up a Neo4j Graph Database

As you saw in step 2, your hospital system data is currently stored in CSV files. Before building your chatbot, you need to store this data in a database that your chatbot can query. You’ll use Neo4j AuraDB for this.

Before learning how to set up a Neo4j AuraDB instance, you’ll get an overview of graph databases, and you’ll see why using a graph database may be a better choice than a relational database for this project.

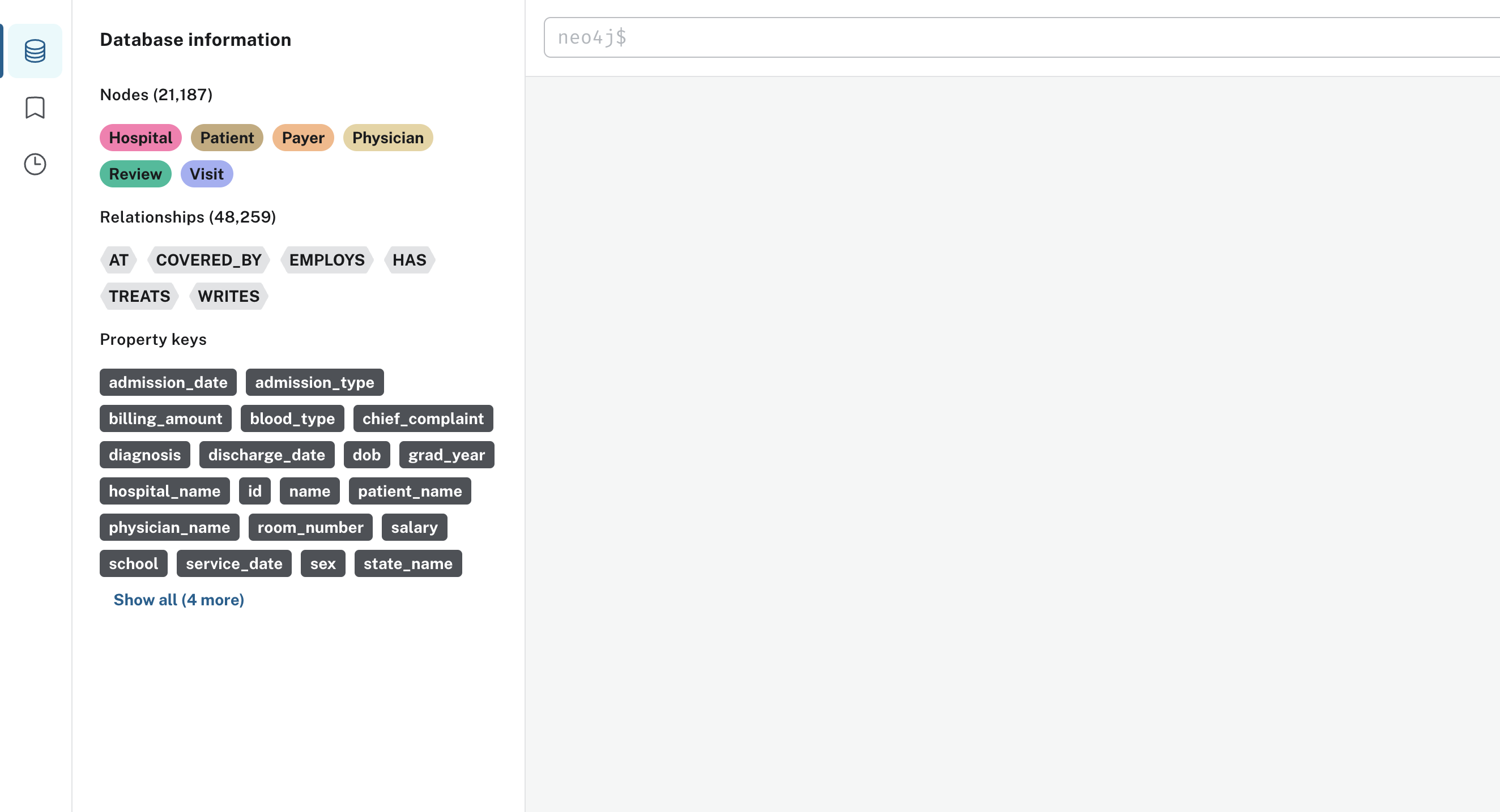

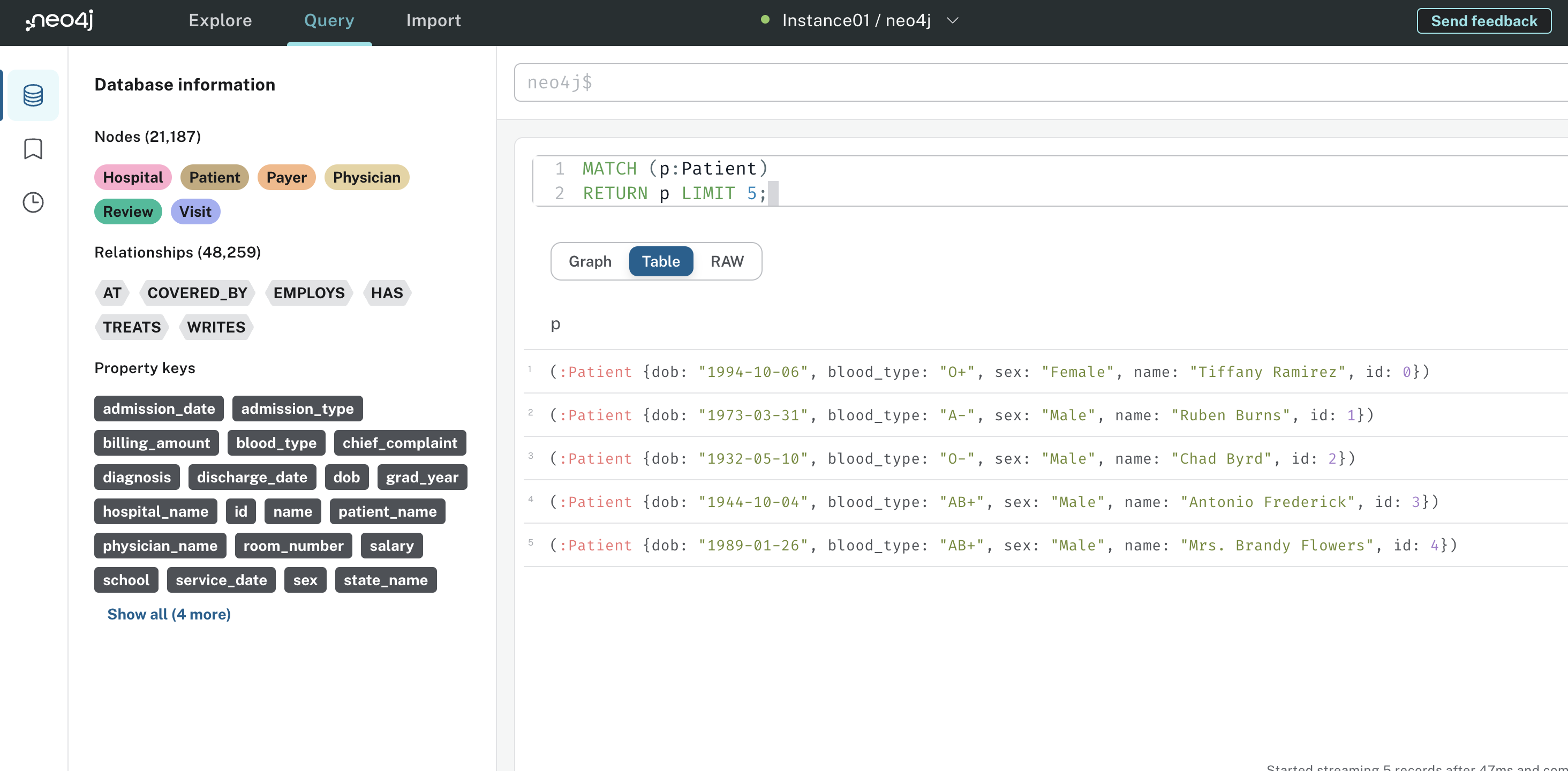

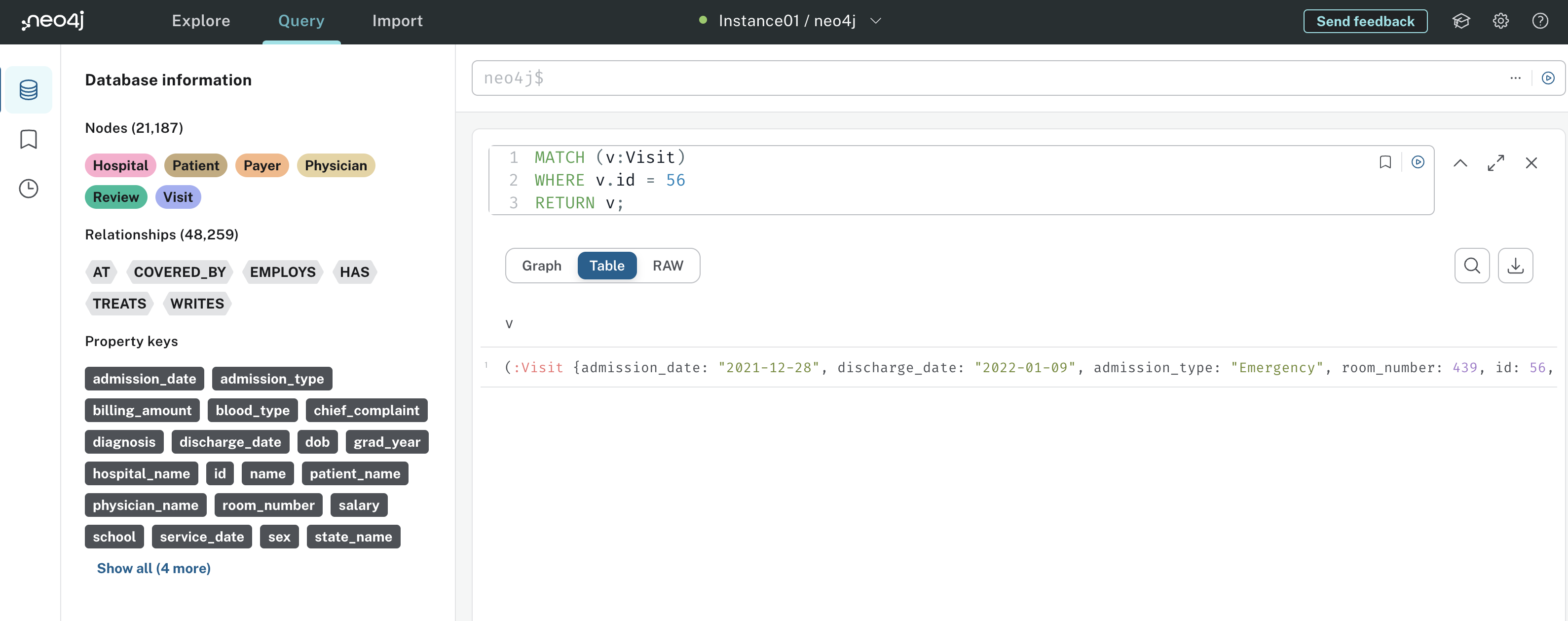

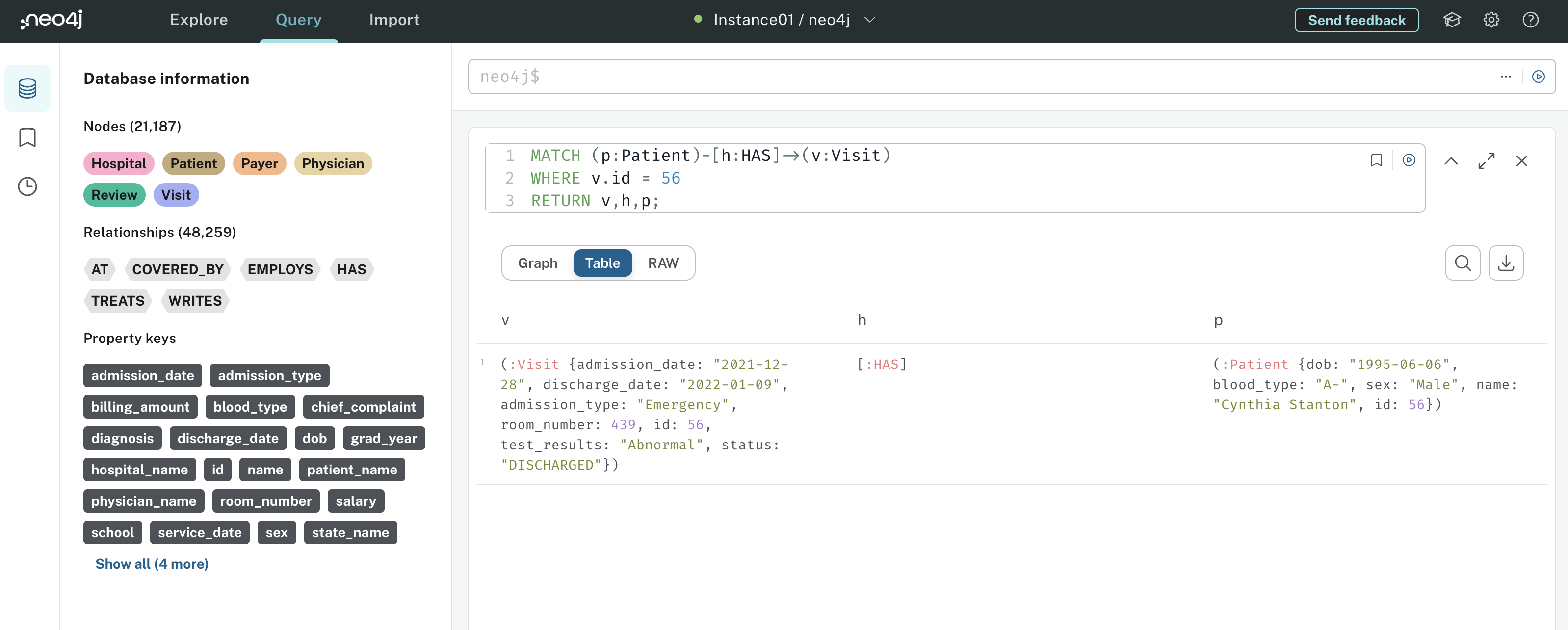

A Brief Overview of Graph Databases

Graph databases, such as Neo4j, are databases designed to represent and process data stored as a graph. Graph data consists of nodes, edges or relationships, and properties. Nodes represent entities, relationships connect entities, and properties provide additional metadata about nodes and relationships.

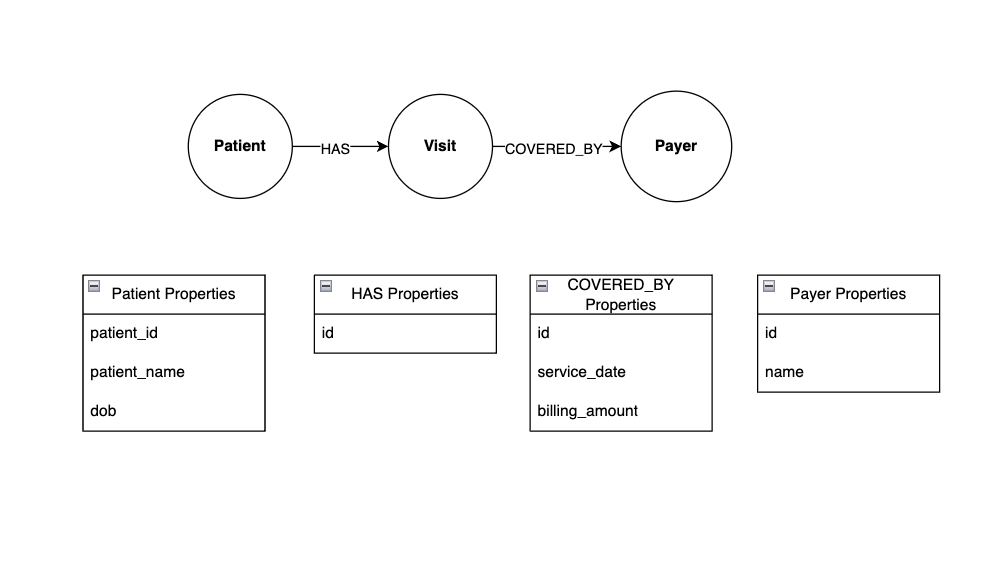

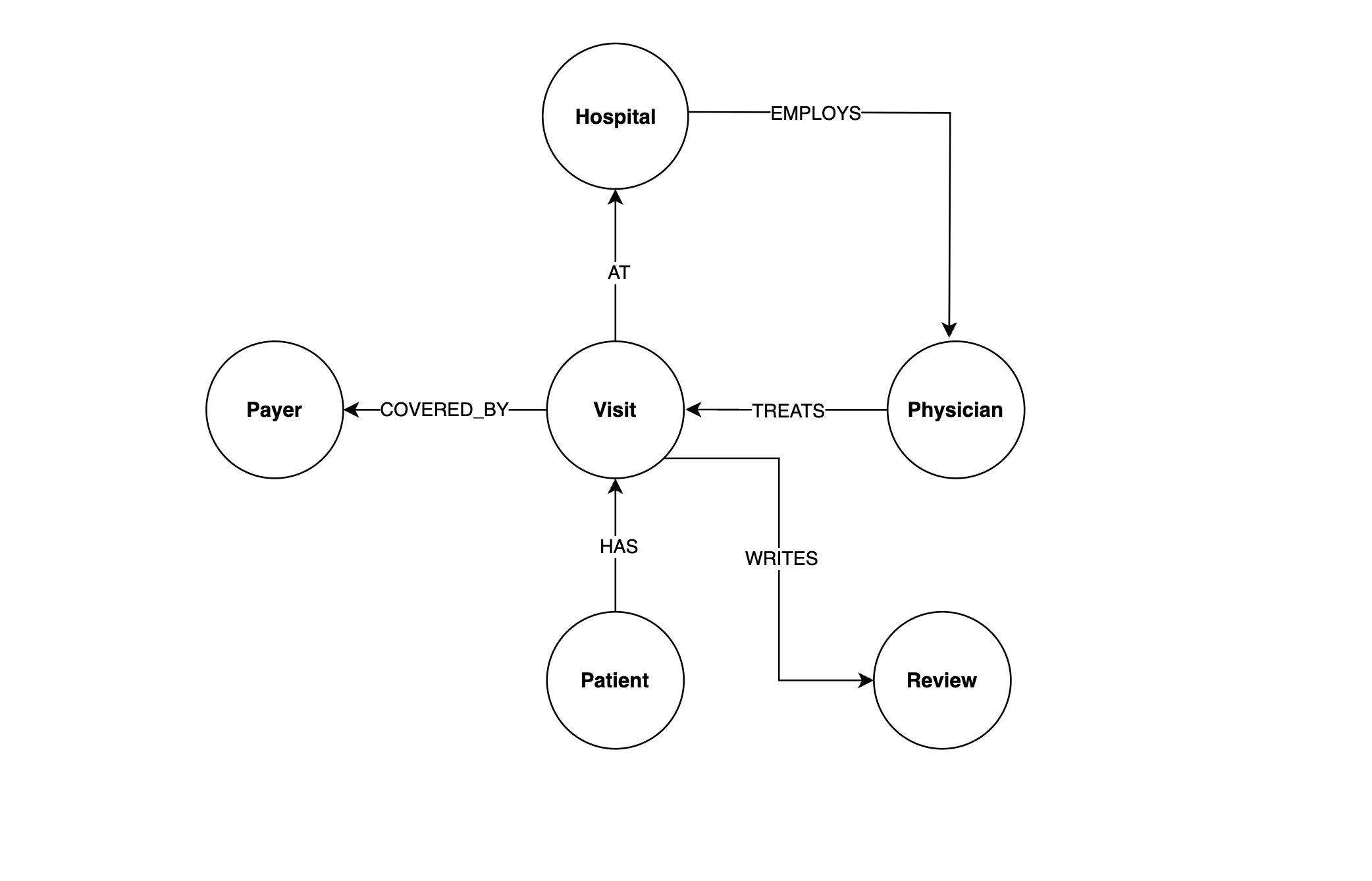

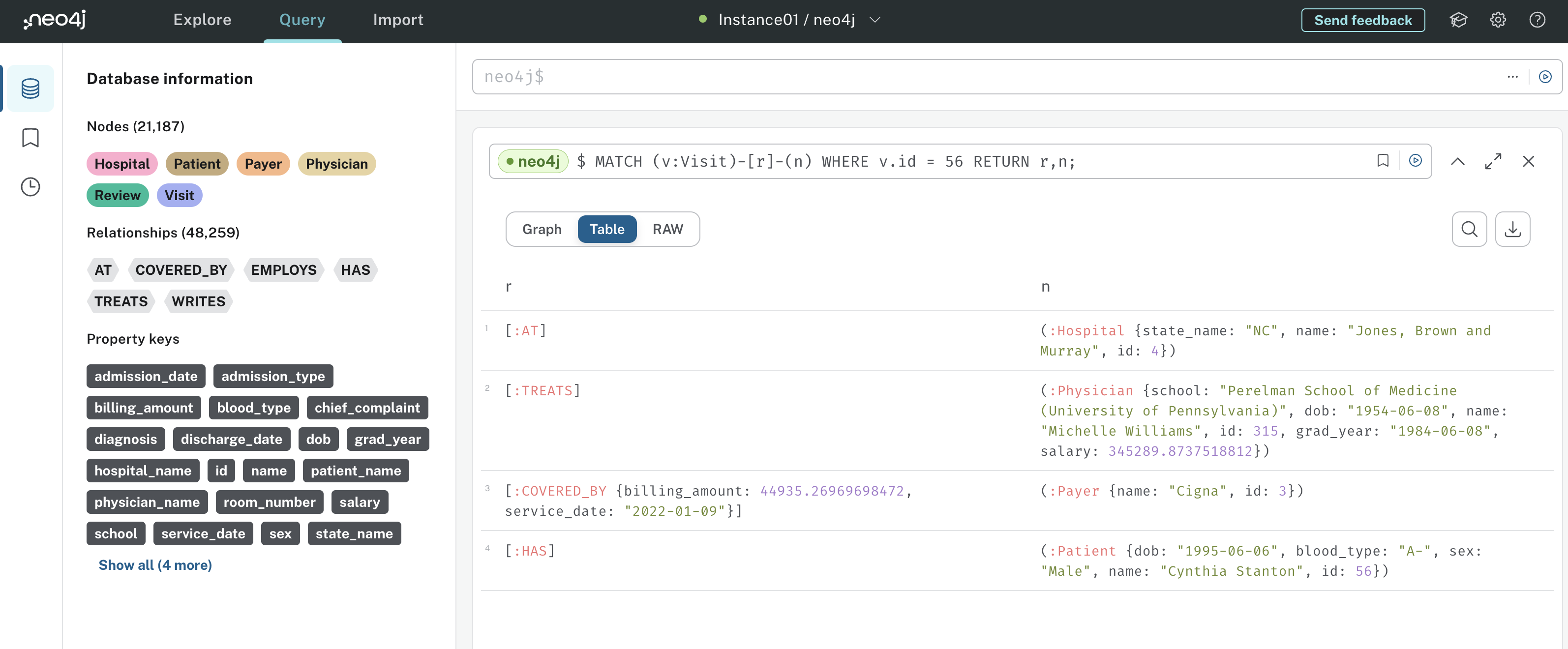

For example, here’s how you might represent hospital system nodes and relationships in a graph:

This graph has three nodes - Patient, Visit, and Payer. Patient and Visit are connected by the HAS relationship, indicating that a hospital patient has a visit. Similarly, Visit and Payer are connected by the COVERED_BY relationship, indicating that an insurance payer covers a hospital visit.

Notice how the relationships are represented by an arrow indicating their direction. For example, the direction of the HAS relationship tells you that a patient can have a visit, but a visit cannot have a patient.

Both nodes and relationships can have properties. In this example, Patient nodes have id, name, and date of birth properties, and the COVERED_BY relationship has service date and billing amount properties. Storing data in a graph like this has several advantages:

-

Simplicity: Modeling real-world relationships between entities is natural in graph databases, reducing the need for complex schemas that require multiple join operations to answer queries.

-

Relationships: Graph databases excel at handling complex relationships. Traversing relationships is efficient, making it easy to query and analyze connected data.

-

Flexibility: Graph databases are schema-less, allowing for easy adaptation to changing data structures. This flexibility is beneficial for evolving data models.

-

Performance: Retrieving connected data is faster in graph databases than in relational databases, especially for scenarios involving complex queries with multiple relationships.

-

Pattern Matching: Graph databases support powerful pattern-matching queries, making it easier to express and find specific structures within the data.

When you have data with many complex relationships, the simplicity and flexibility of graph databases makes them easier to design and query compared to relational databases. As you’ll see later, specifying relationships in graph database queries is concise and doesn’t involve complicated joins. If you’re interested, Neo4j illustrates this well with a realistic example database in their documentation.

Because of this concise data representation, there’s less room for error when an LLM generates graph database queries. This is because you only need to tell the LLM about the nodes, relationships, and properties in your graph database. Contrast this with relational databases where the LLM must navigate and retain knowledge of the table schemas and foreign key relationships throughout your database, leaving more room for error in SQL generation.

Next, you’ll begin working with graph databases by setting up a Neo4j AuraDB instance. After that, you’ll move the hospital system into your Neo4j instance and learn how to query it.

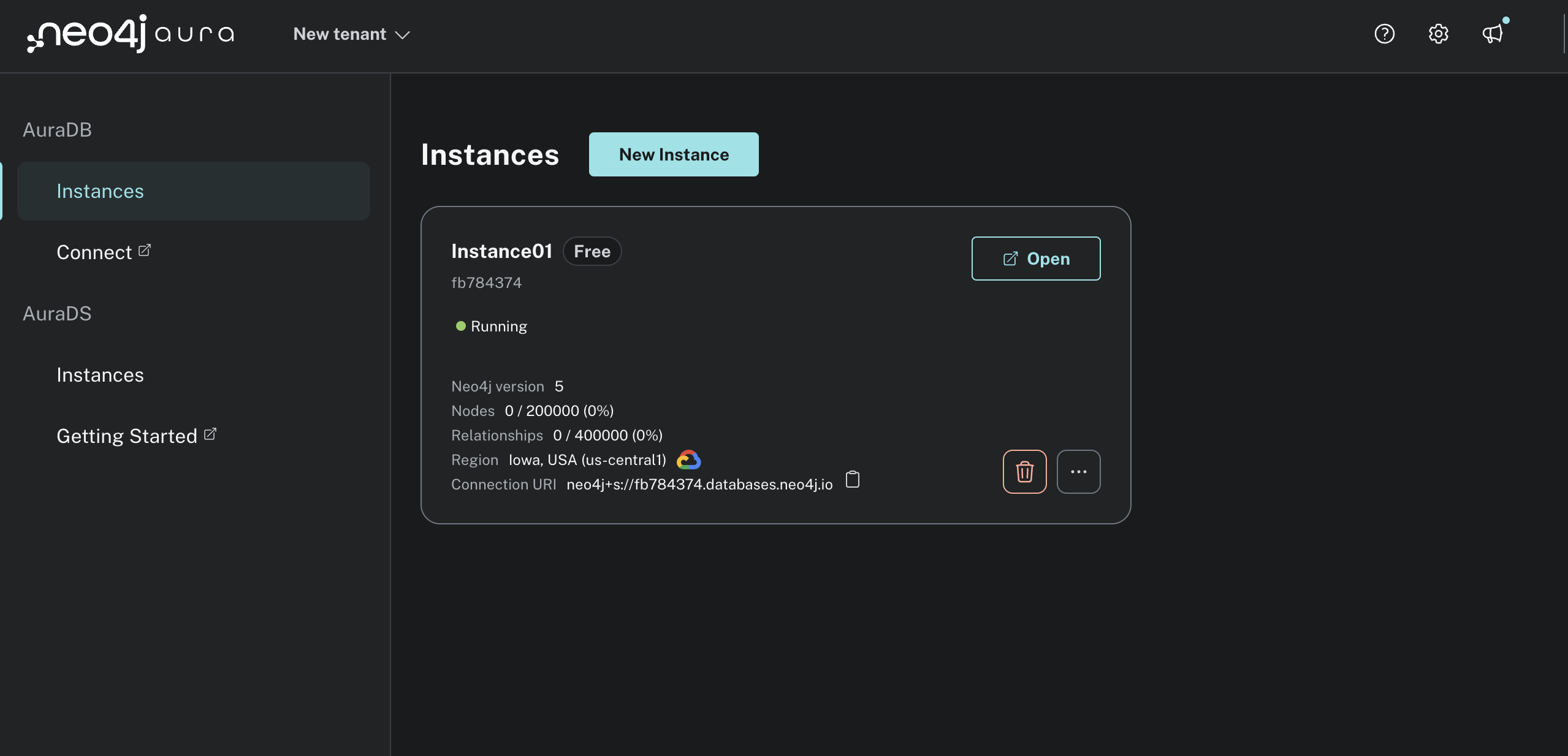

Create a Neo4j Account and AuraDB Instance

To get started using Neo4j, you can create a free Neo4j AuraDB account. The landing page should look something like this:

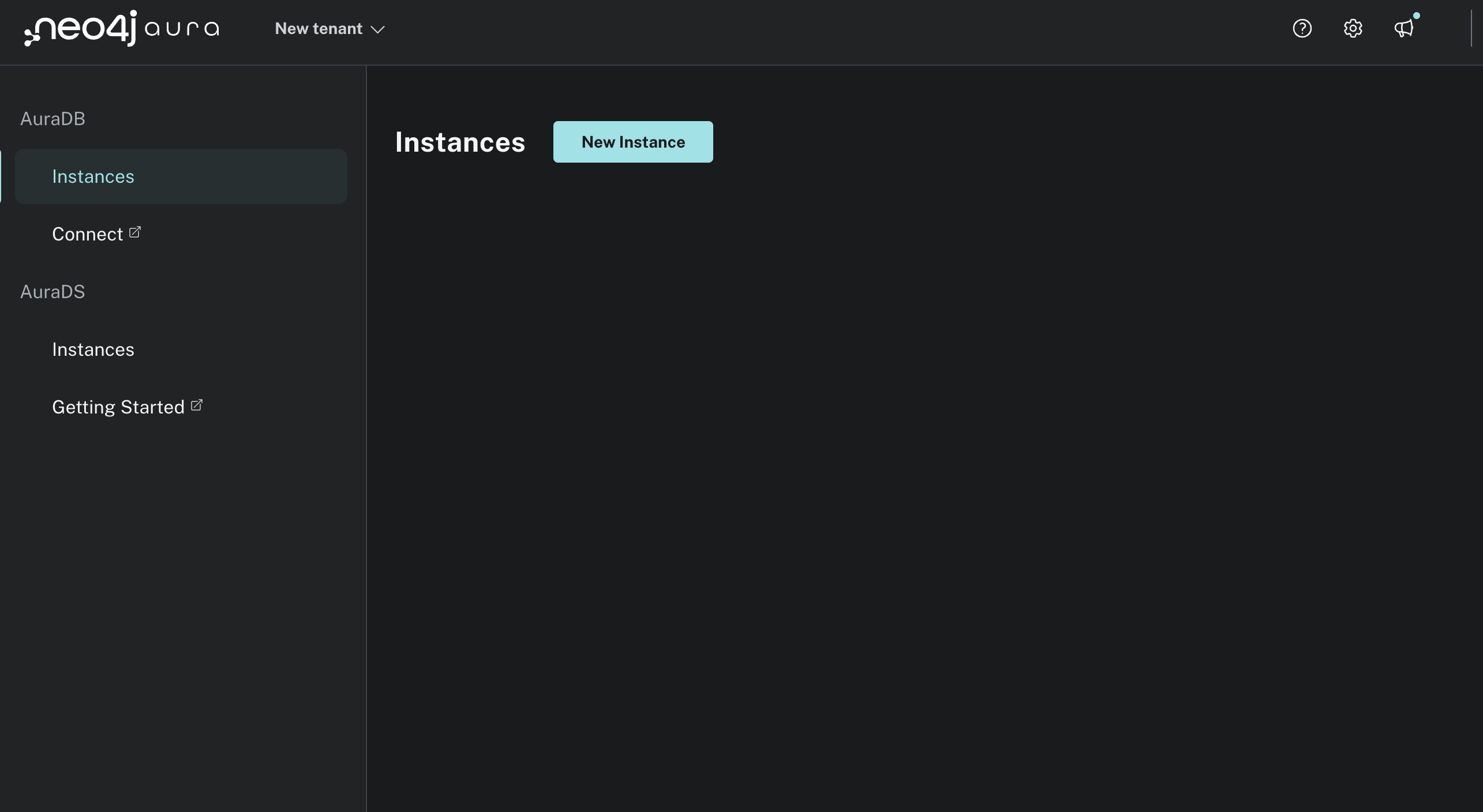

Click the Start Free button and create an account. Once you’re signed in, you should see the Neo4j Aura console:

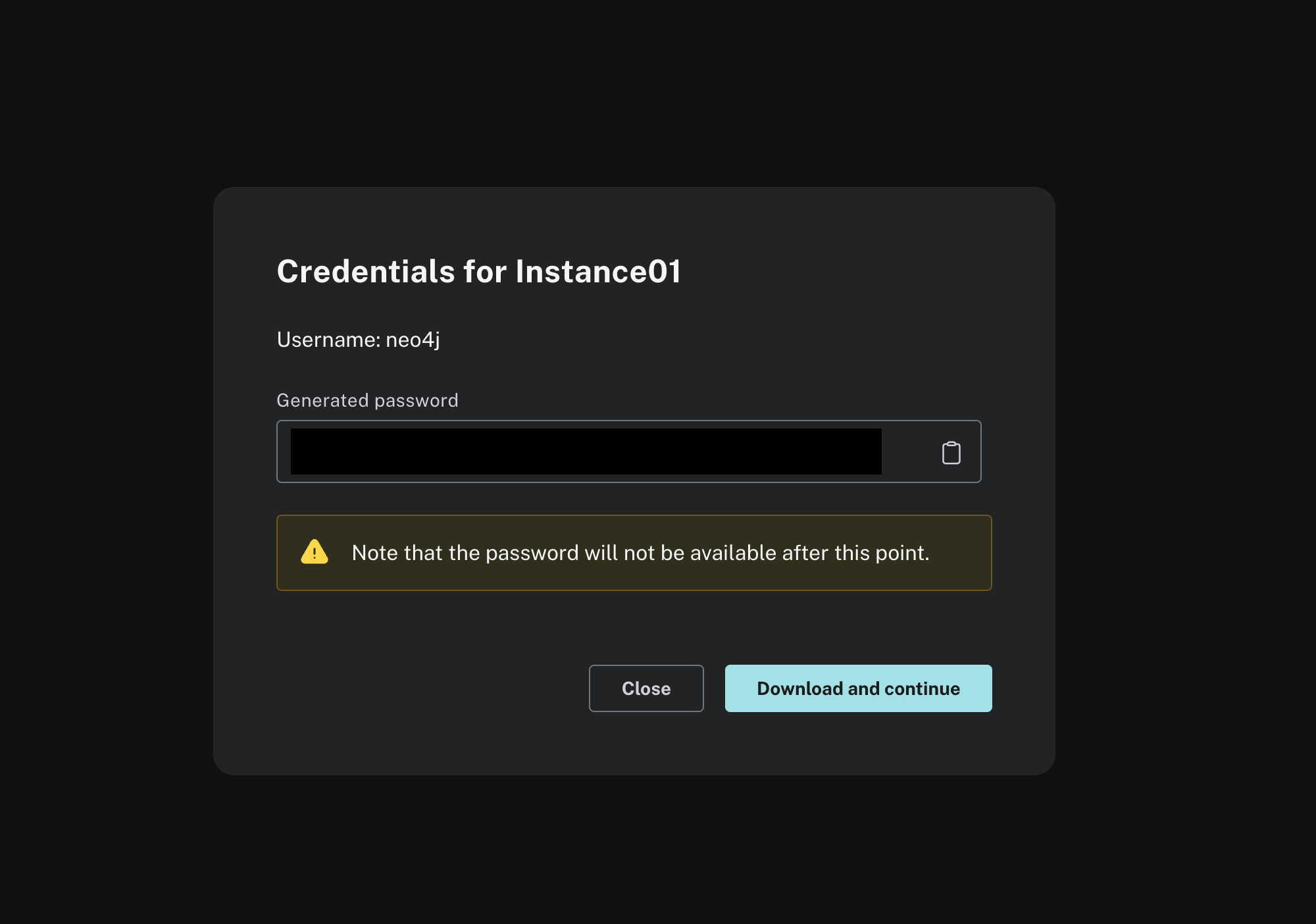

Click New Instance and create a free instance. A modal should pop up similar to this:

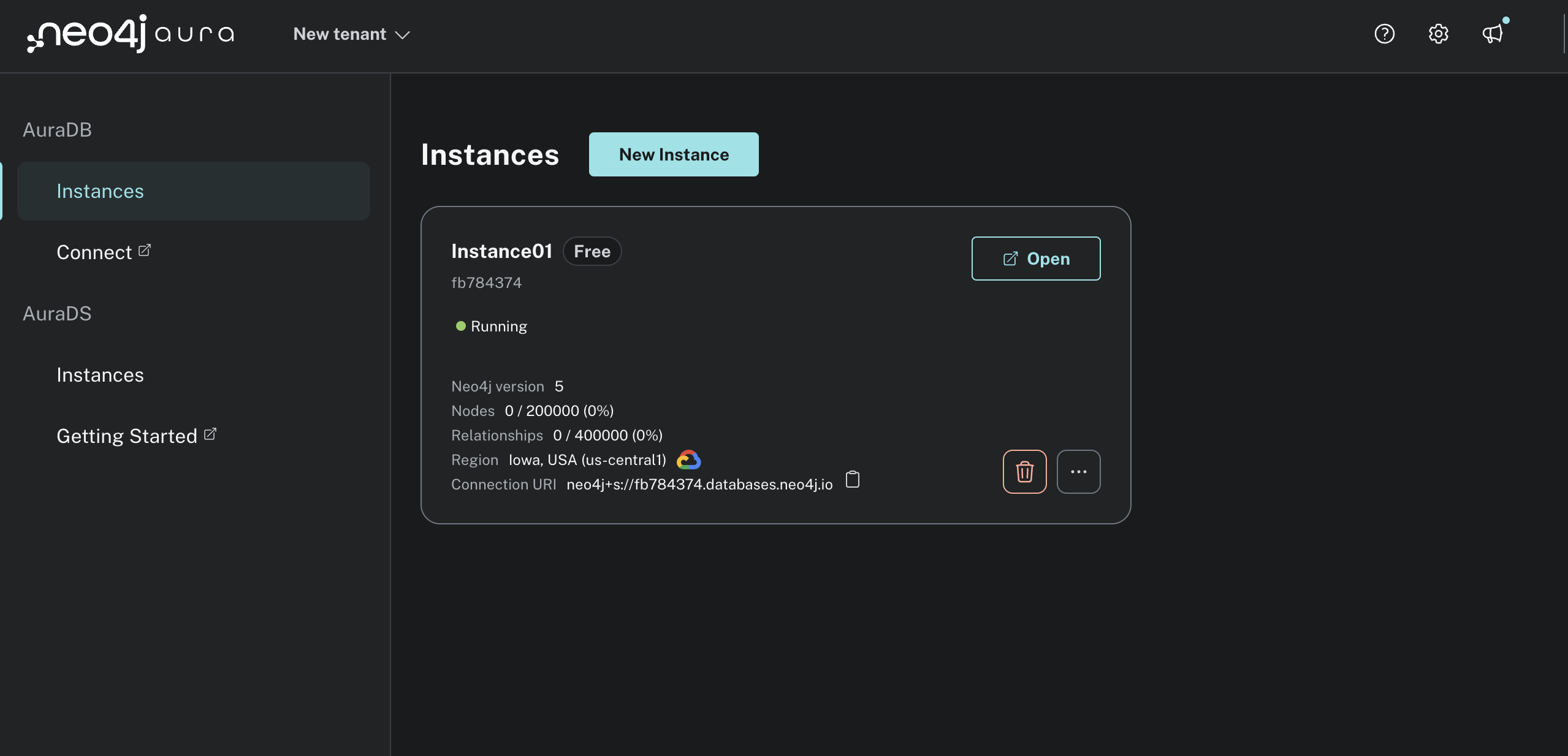

After you click Download and Continue, your instance should be created and a text file containing the Neo4j database credentials should download. Once the instance is created, you’ll see its status is Running. There should be no nodes or relationships yet:

Next, open the text file you downloaded with your Neo4j credentials and copy the NEO4J_URI, NEO4J_USERNAME, and NEO4J_PASSWORD into your .env file:

OPENAI_API_KEY=<YOUR_OPENAI_API_KEY>

NEO4J_URI=<YOUR_NEO4J_URI>

NEO4J_USERNAME=<YOUR_NEO4J_URI>

NEO4J_PASSWORD=<YOUR_NEO4J_PASSWORD>

You’ll use these environment variables to connect to your Neo4j instance in Python so that your chatbot can execute queries.

Note: By default, your NEO4J_URI should be similar to neo4j+s://

You now have everything in place to interact with your Neo4j instance. Next up, you’ll design the hospital system graph database. This will tell you how the hospital entities are related, and it will inform the kinds of queries you can run.

Design the Hospital System Graph Database

Now that you have a running Neo4j AuraDB instance, you need to decide which nodes, relationships, and properties you want to store. One of the most popular ways to represent this is with a flowchart. Based on your understanding of the hospital system data, you come up with the following design:

This diagram shows you all of the nodes and relationships in the hospital system data. One useful way to think about this flowchart is to start with the Patient node and follow the relationships. A Patient has a visit at a hospital, and the hospital employs a physician to treat the visit which is covered by an insurance payer.

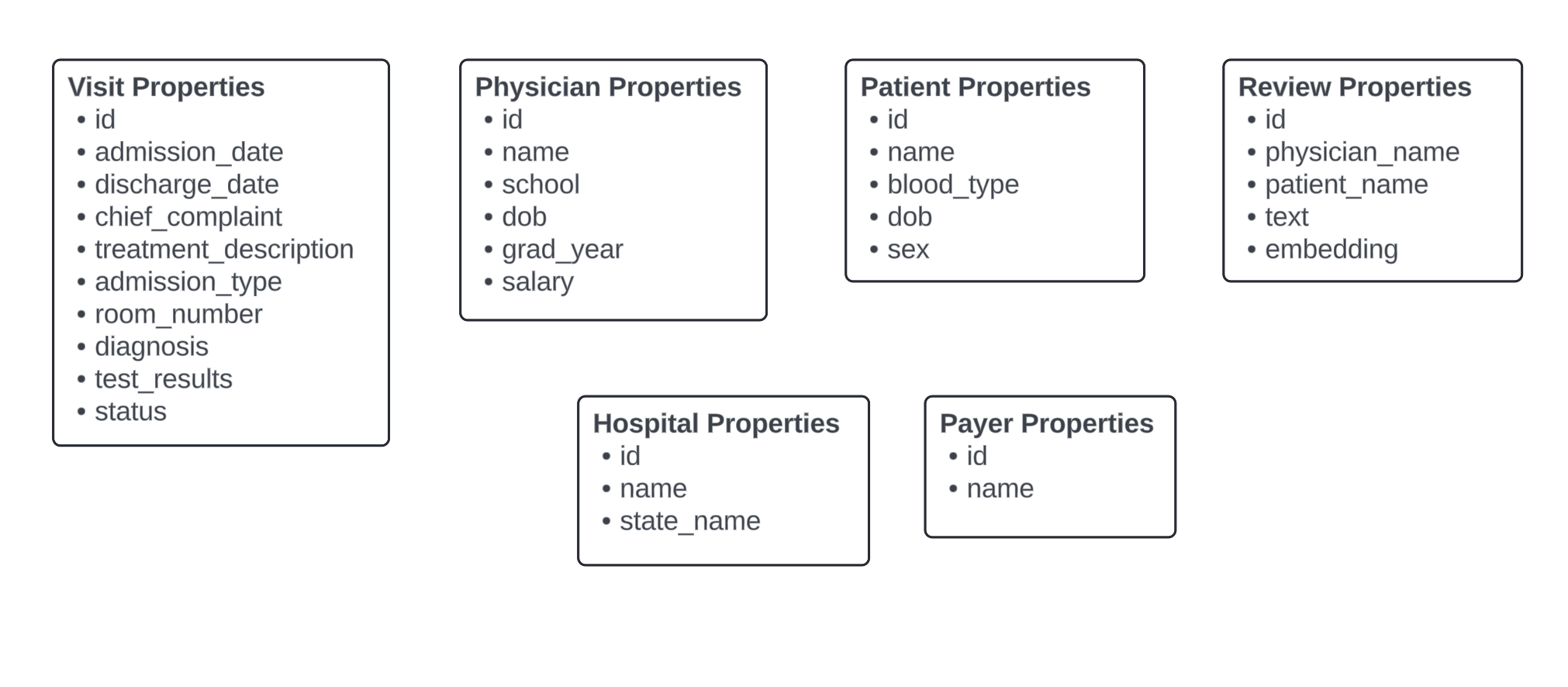

Here are the properties stored in each node:

The majority of these properties come directly from the fields you explored in step 2. One notable difference is that Review nodes have an embedding property, which is a vector representation of the patient_name, physician_name, and text properties. This allows you to do vector searches over review nodes like you did with ChromaDB.

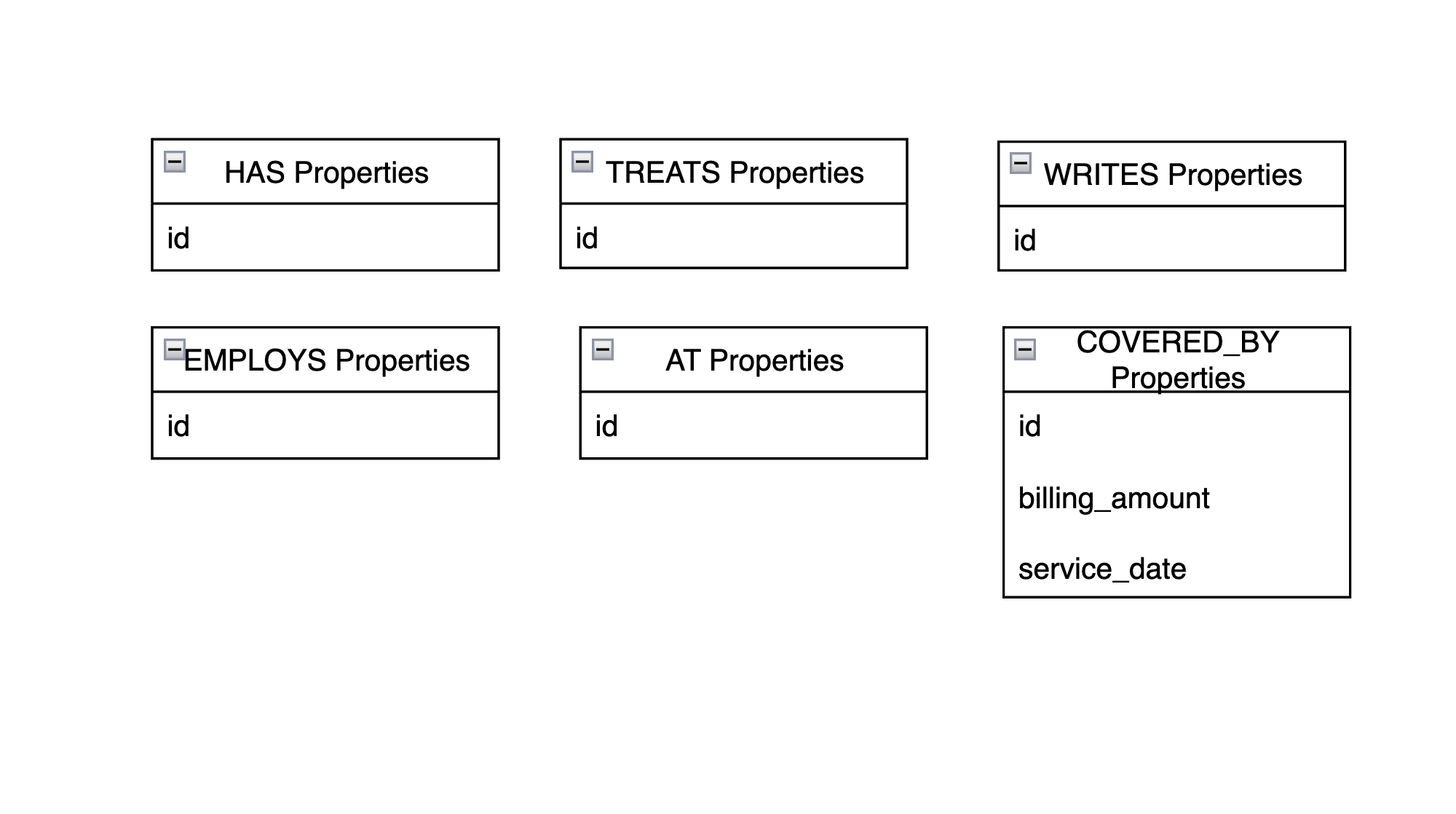

Here are the relationship properties:

As you can see, COVERED_BY is the only relationship with more than an id property. The service_date is the date the patient was discharged from a visit, and billing_amount is the amount charged to the payer for the visit.

Note: This fake hospital system data has a relatively small number of nodes and relationships than what you’d typically see in an enterprise setting. However, you can easily imagine how many more nodes and relationships you could add for a real hospital system. For instance, nurses, pharmacists, pharmacies, prescription drugs, surgeries, patient relatives, and many more hospital entities could be represented as nodes.

You could also redesign this so that diagnoses and symptoms are represented as nodes instead of properties, or you could add more relationship properties. You could do all of this without changing the design you already have. This is the beauty of graphs—you simply add more nodes and relationships as your data evolves.

Now that you have an overview of the hospital system design you’ll use, it’s time to move your data into Neo4j!

Upload Data to Neo4j

With a running Neo4j instance and an understanding of the nodes, properties, and relationships you want to store, you can move the hospital system data into Neo4j. For this, you’ll create a folder called hospital_neo4j_etl with a few empty files. You’ll also want to create a docker-compose.yml file in your project’s root directory:

./

│

├── hospital_neo4j_etl/

│ │

│ ├── src/

│ │ ├── entrypoint.sh

│ │ └── hospital_bulk_csv_write.py

│ │

│ ├── Dockerfile

│ └── pyproject.toml

│

├── .env

└── docker-compose.yml

Your .env file should have the following environment variables:

OPENAI_API_KEY=<YOUR_OPENAI_API_KEY>

NEO4J_URI=<YOUR_NEO4J_URI>

NEO4J_USERNAME=<YOUR_NEO4J_URI>

NEO4J_PASSWORD=<YOUR_NEO4J_PASSWORD>

HOSPITALS_CSV_PATH=https://raw.githubusercontent.com/hfhoffman1144/langchain_neo4j_rag_app/main/data/hospitals.csv

PAYERS_CSV_PATH=https://raw.githubusercontent.com/hfhoffman1144/langchain_neo4j_rag_app/main/data/payers.csv

PHYSICIANS_CSV_PATH=https://raw.githubusercontent.com/hfhoffman1144/langchain_neo4j_rag_app/main/data/physicians.csv

PATIENTS_CSV_PATH=https://raw.githubusercontent.com/hfhoffman1144/langchain_neo4j_rag_app/main/data/patients.csv

VISITS_CSV_PATH=https://raw.githubusercontent.com/hfhoffman1144/langchain_neo4j_rag_app/main/data/visits.csv

REVIEWS_CSV_PATH=https://raw.githubusercontent.com/hfhoffman1144/langchain_neo4j_rag_app/main/data/reviews.csv

Notice that you’ve stored all of the CSV files in a public location on GitHub. Because your Neo4j AuraDB instance is running in the cloud, it can’t access files on your local machine, and you have to use HTTP or upload the files directly to your instance. For this example, you can either use the link above, or upload the data to another location.

Note: If you’re uploading proprietary data to Neo4j, always ensure that it’s stored in a secure location and transferred appropriately. The data used for this project is all synthetic and not proprietary, so there’s no problem with uploading it over a public HTTP connection. However, this would not be a good idea in practice. You can read more about secure ways to import data into Neo4j in their documentation.

Once you have your .env file populated, open pyproject.toml, which provides configuration, metadata, and dependencies defined in the TOML format:

hospital_neo4j_etl/pyproject.toml

[project]

name = "hospital_neo4j_etl"

version = "0.1"

dependencies = [

"neo4j==5.14.1",

"retry==0.9.2"

]

[project.optional-dependencies]

dev = ["black", "flake8"]

This project is a bare bones extract, transform, load (ETL) process that moves data into Neo4j, so it’s only dependencies are neo4j and retry. The main script for the ETL is hospital_neo4j_etl/src/hospital_bulk_csv_write.py. It’s too long to include the full script here, but you’ll get a feel for the main steps hospital_neo4j_etl/src/hospital_bulk_csv_write.py executes. You can copy the full script from the materials:

Get Your Code: Click here to download the free source code for your LangChain chatbot.

First, you import dependencies, load environment variables, and configure logging:

hospital_neo4j_etl/src/hospital_bulk_csv_write.py

import os

import logging

from retry import retry

from neo4j import GraphDatabase

HOSPITALS_CSV_PATH = os.getenv("HOSPITALS_CSV_PATH")

PAYERS_CSV_PATH = os.getenv("PAYERS_CSV_PATH")

PHYSICIANS_CSV_PATH = os.getenv("PHYSICIANS_CSV_PATH")

PATIENTS_CSV_PATH = os.getenv("PATIENTS_CSV_PATH")

VISITS_CSV_PATH = os.getenv("VISITS_CSV_PATH")

REVIEWS_CSV_PATH = os.getenv("REVIEWS_CSV_PATH")

NEO4J_URI = os.getenv("NEO4J_URI")

NEO4J_USERNAME = os.getenv("NEO4J_USERNAME")

NEO4J_PASSWORD = os.getenv("NEO4J_PASSWORD")

logging.basicConfig(

level=logging.INFO,

format="%(asctime)s [%(levelname)s]: %(message)s",

datefmt="%Y-%m-%d %H:%M:%S",

)

LOGGER = logging.getLogger(__name__)

# ...

You import the GraphDatabase class from neo4j to connect to your running instance. Notice here that you’re no longer using Python-dotenv to load environment variables. Instead, you’ll pass environment variables into the Docker container that runs your script. Next, you’ll define functions to move hospital data into Neo4j following your design:

hospital_neo4j_etl/src/hospital_bulk_csv_write.py

# ...

NODES = ["Hospital", "Payer", "Physician", "Patient", "Visit", "Review"]

def _set_uniqueness_constraints(tx, node):

query = f"""CREATE CONSTRAINT IF NOT EXISTS FOR (n:{node})

REQUIRE n.id IS UNIQUE;"""

_ = tx.run(query, {})

@retry(tries=100, delay=10)

def load_hospital_graph_from_csv() -> None:

"""Load structured hospital CSV data following

a specific ontology into Neo4j"""

driver = GraphDatabase.driver(

NEO4J_URI, auth=(NEO4J_USERNAME, NEO4J_PASSWORD)

)

LOGGER.info("Setting uniqueness constraints on nodes")

with driver.session(database="neo4j") as session:

for node in NODES:

session.execute_write(_set_uniqueness_constraints, node)

# ...

# ...

First, you define a helper function, _set_uniqueness_constraints(), that creates and runs queries enforcing each node to have a unique ID.

In load_hospital_graph_from_csv(), you instantiate a driver that connects to your Neo4j instance and set uniqueness constraints for each hospital system node.