Continuous integration (CI) has become essential to software development, allowing teams to merge code changes frequently and catch errors early. Docker containers help facilitate the continuous integration process by providing a consistent environment where you can test and ship code on each commit.

In this tutorial, you’ll learn how to use Docker to create a robust continuous integration pipeline for a Flask web application. You’ll go through the steps of developing and testing the application locally, containerizing it, orchestrating containers using Docker Compose, and defining a CI pipeline using GitHub Actions. By the end of this tutorial, you’ll be able to create a fully automated CI pipeline for your web applications.

In this tutorial, you’ll:

- Run a Redis server locally in a Docker container

- Dockerize a Python web application written in Flask

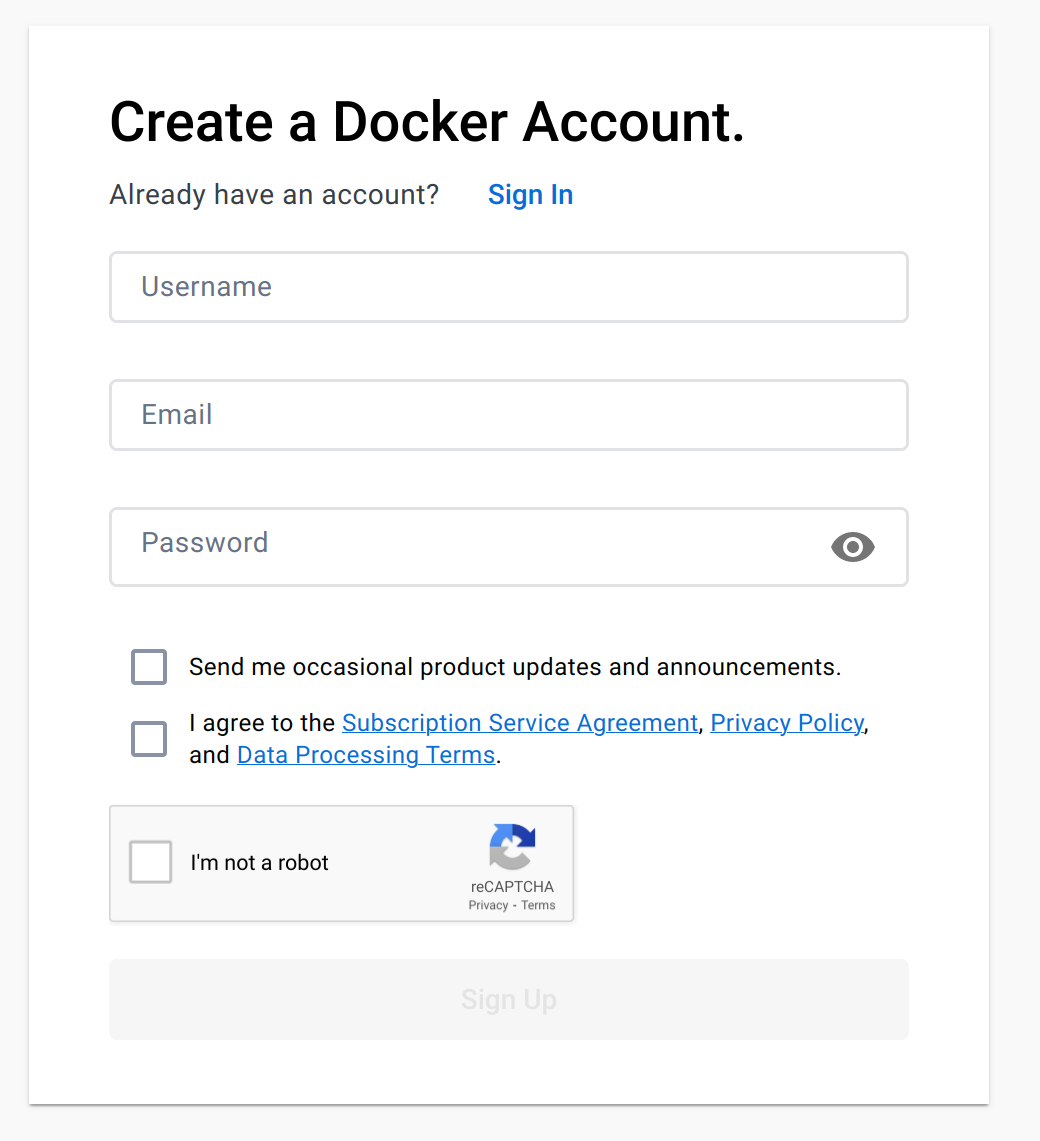

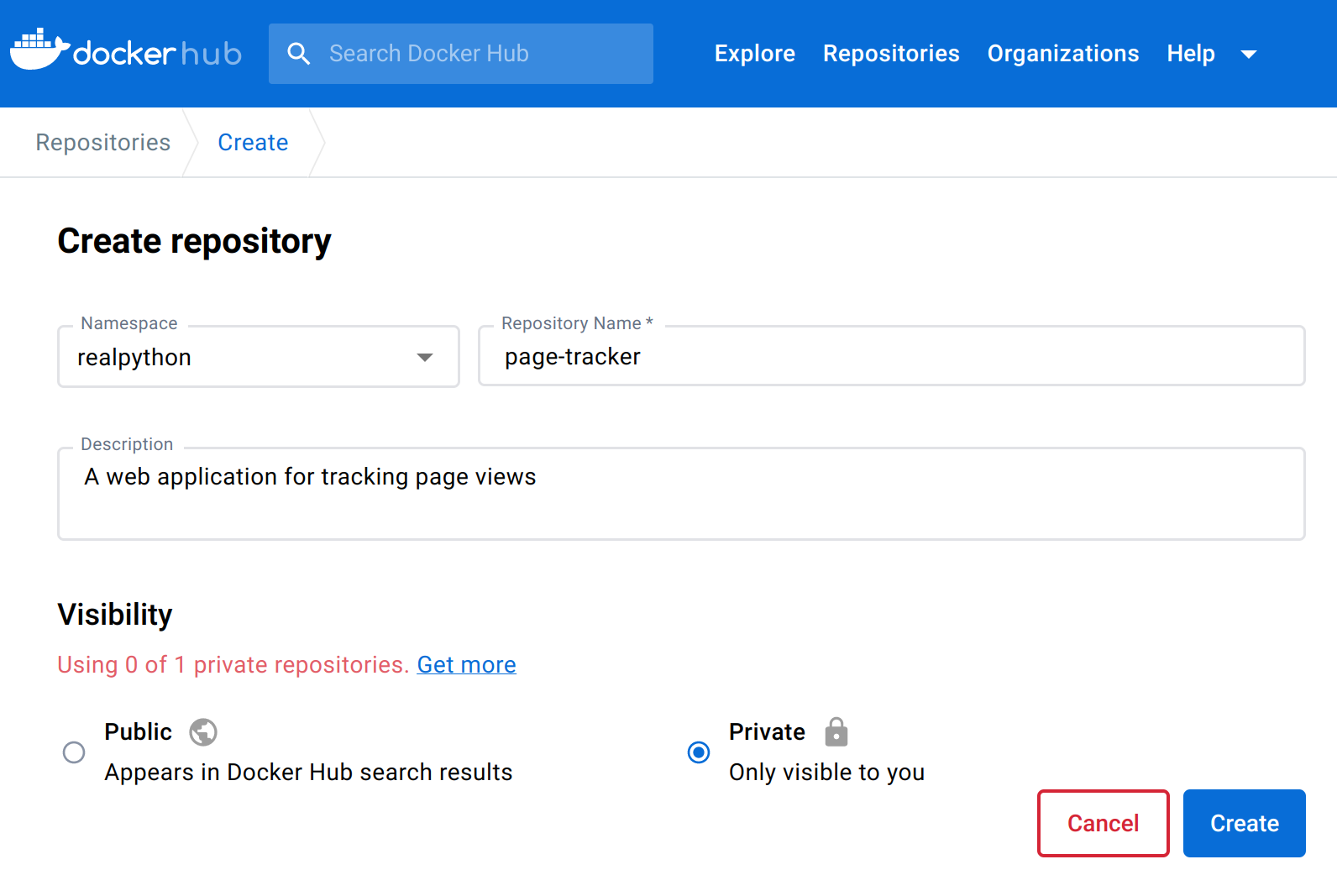

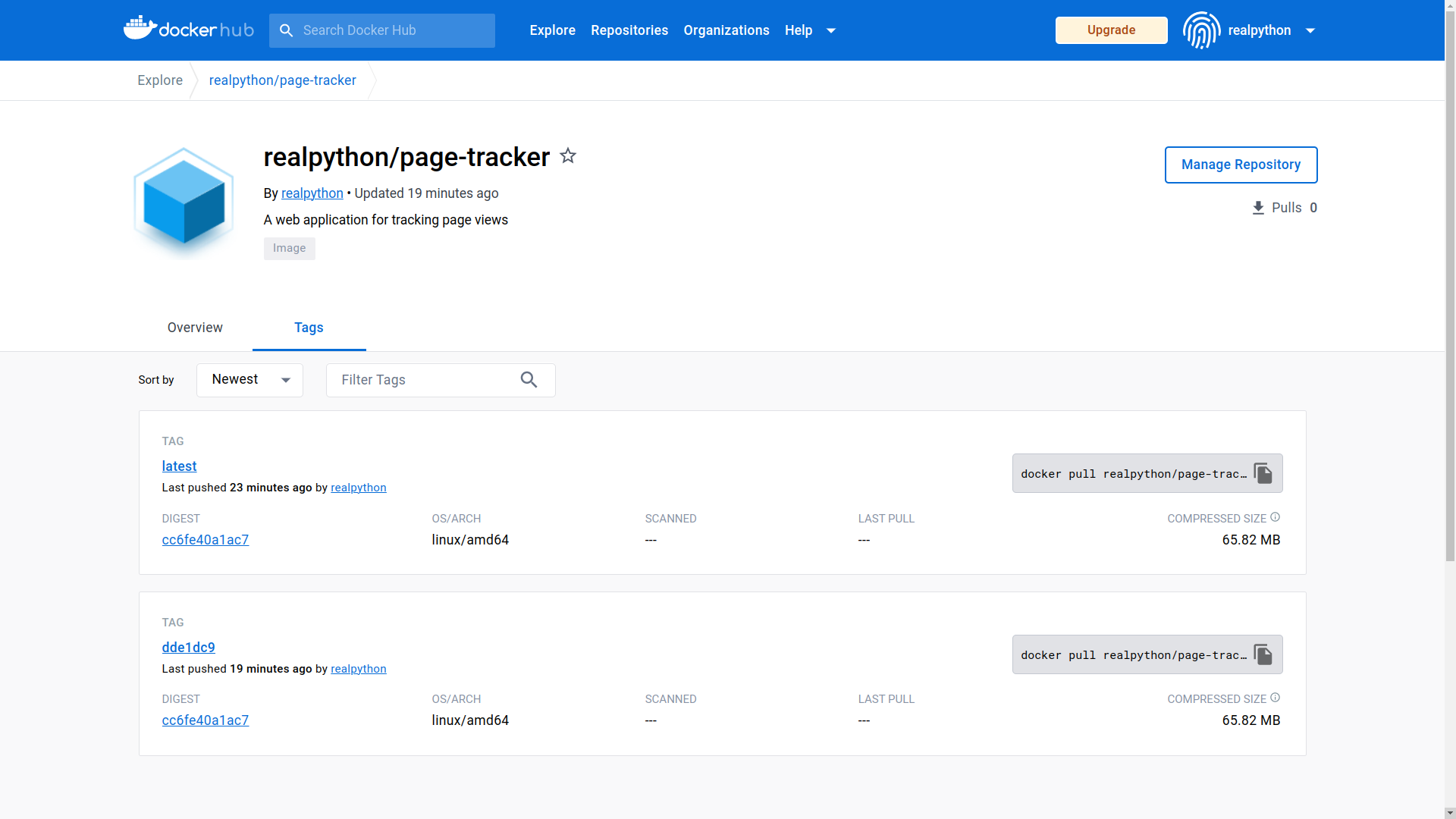

- Build Docker images and push them to the Docker Hub registry

- Orchestrate multi-container applications with Docker Compose

- Replicate a production-like infrastructure anywhere

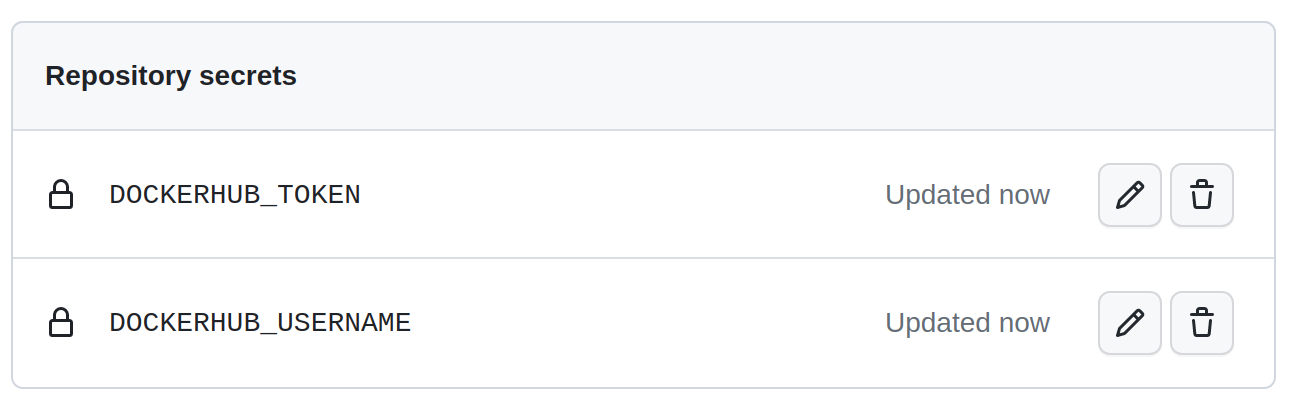

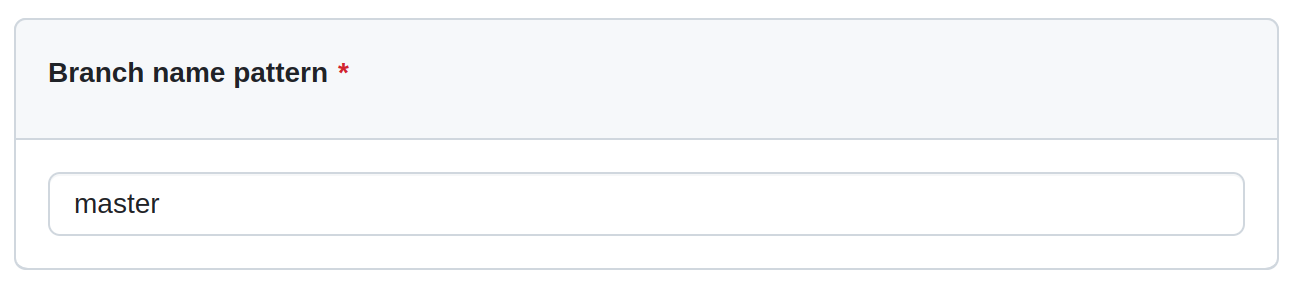

- Define a continuous integration workflow using GitHub Actions

Ideally, you should have some experience with web development in Python, test automation, the use of Redis with Python, and source code version control with Git and GitHub. Previous exposure to Docker would be a plus but isn’t necessary. You should also have a Git client and a GitHub account to follow along and replicate the steps of this tutorial.

Note: This tutorial is loosely based on an older tutorial entitled Docker in Action - Fitter, Happier, More Productive, which was written by Michael Herman, who presented his CI workflow at PyTennessee on February 8, 2015. You can view the corresponding slides presented at the conference if you’re interested.

Unfortunately, many of the tools described in the original tutorial are no longer supported or available for free. In this updated tutorial, you’ll use the latest tools and technologies, such as GitHub Actions.

If you’d like to skip the initial steps of setting up Docker on your computer and building a sample web application, then jump straight to defining a continuous integration pipeline. Either way, you’ll want to download the supporting materials, which come with a finished Flask web application and the related resources that will help you follow along with this tutorial:

Free Download: Click here to download your Flask application and related resources so you can define a continuous integration pipeline with Docker.

Get an Overview of the Project Architecture

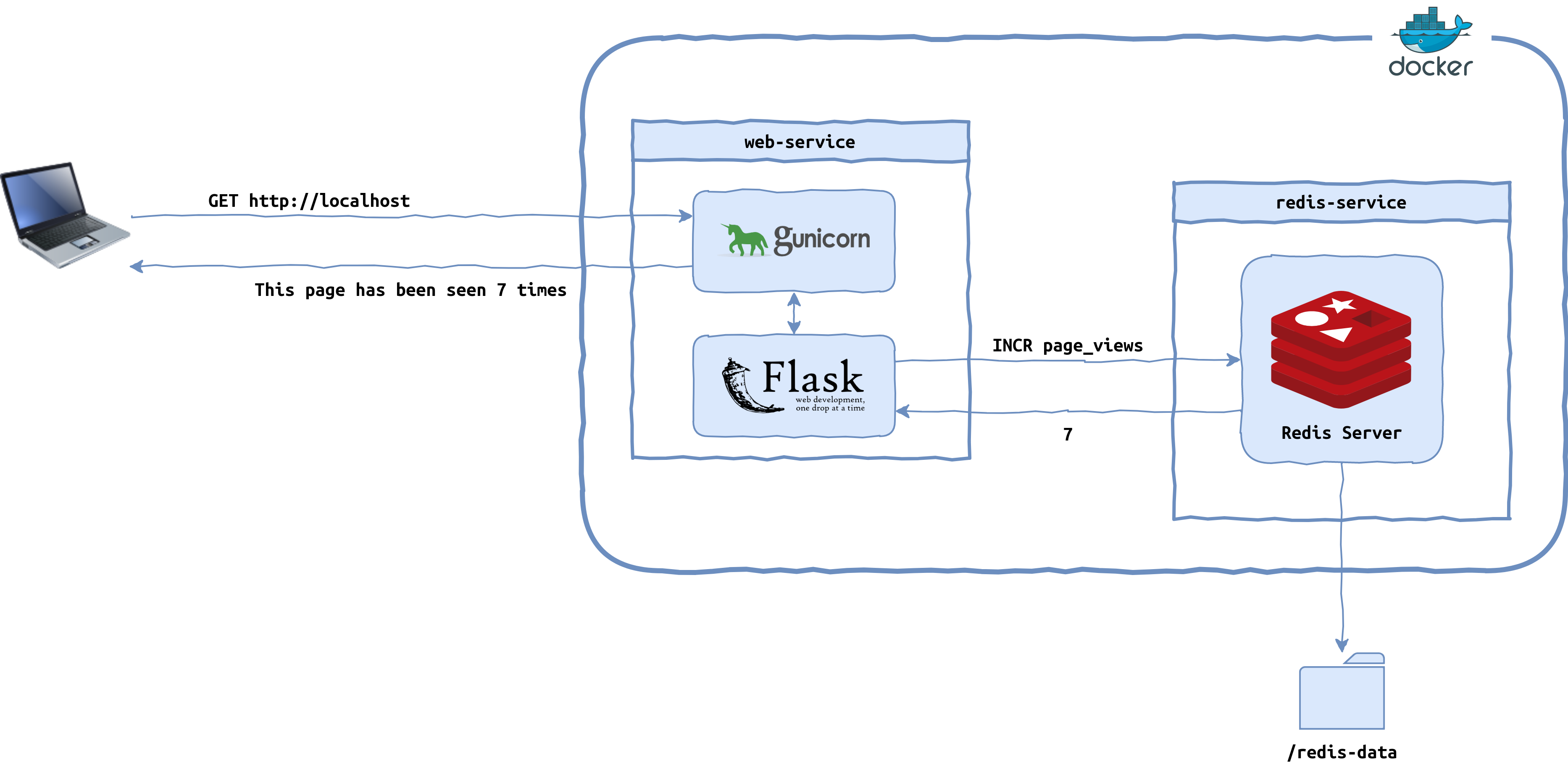

By the end of this tutorial, you’ll have a Flask web application for tracking page views stored persistently in a Redis data store. It’ll be a multi-container application orchestrated by Docker Compose that you’ll be able to build and test locally as well as in the cloud, paving the way for continuous integration:

The application consists of two Docker containers. The first container will run a Flask application on top of Gunicorn, responding to HTTP requests and updating the number of page views. The second container will run a Redis instance for storing page view data persistently in a local volume on the host machine.

Docker is all that’s required to run this application, and you’ll set it up now.

Set Up Docker on Your Computer

Docker is an umbrella term that can have a few different meanings for different people depending on the context. For example, when someone refers to docker, they can mean one of the following:

- Docker, Inc.: The company behind the platform and the related tools

- Docker: The open-source container platform

- Docker CLI: The docker client command-line program

- dockerd: The Docker daemon that manages the containers

There are also several tools and projects associated with the Docker platform, such as:

- Docker Compose

- Docker Desktop

- Docker Engine

- Docker Hub

- Docker Swarm Mode

In this tutorial, you’ll use all but the last one from the list above. By the way, don’t confuse the legacy Docker Classic Swarm, which was an external tool, with the Docker Swarm Mode built into the Docker Engine since version 1.12.

Note: You may have heard of Docker Machine and Docker Toolbox. These are old tools that are no longer maintained.

The main problem that Docker solves is the ability to run applications anywhere in consistent and reproducible environments with little or no configuration. It can package your application code, binaries, and dependencies, such as language runtimes and libraries, into a single artifact. You’ll use Docker to simulate a hypothetical production environment on your local machine during development and on a continuous integration server.

You have two choices for installing Docker:

- Docker Engine

- Docker Desktop

If you’re comfortable around the terminal and appreciate an extra level of control, then look no further than the open-source Docker Engine, which provides the core runtime and the command-line interface for managing your containers. On the other hand, if you prefer a one-stop-shop solution with an intuitive graphical user interface, then you should consider Docker Desktop instead.

Note: Out of the box, the desktop application comes with Docker Compose, which you’ll need later on, when you’re orchestrating containers for continuous integration.

When you browse the official Docker documentation, you may get the impression that Docker Desktop takes the spotlight. It’s a truly fantastic tool, but you must remember that Docker Desktop is free of charge only for personal use, education, non-commercial open-source projects, and small businesses. Since the licensing change announced in August 2021, larger companies have needed a paid subscription to use it commercially.

While it’s technically possible to have both tools installed side by side, you should generally avoid using them at the same time to minimize the risk of any potential interference between their virtual networks or port bindings. You can do so by stopping one of them and switching the context between Docker Engine and Docker Desktop.

Note: Docker Desktop used to be available only on Windows and macOS, but that’s changed, and you can now install it on certain Linux distributions, too, including Ubuntu, Debian, and Fedora. However, the Linux version of Docker Desktop runs on top of a virtual machine to mimic the user experience of working with it on other operating systems.

To verify that you’ve successfully installed Docker on your system, either as Docker Engine or the Docker Desktop wrapper application, open the terminal and type the following command:

$ docker --version

Docker version 23.0.4, build f480fb1

You should see your Docker version along with the build number. If you’re on Linux, then you might want to follow the post-installation steps to use the docker command without prefacing it with sudo for administrative privileges.

Before you can start using Docker to help with continuous integration, you’ll need to create a rudimentary web application.

Develop a Page View Tracker in Flask

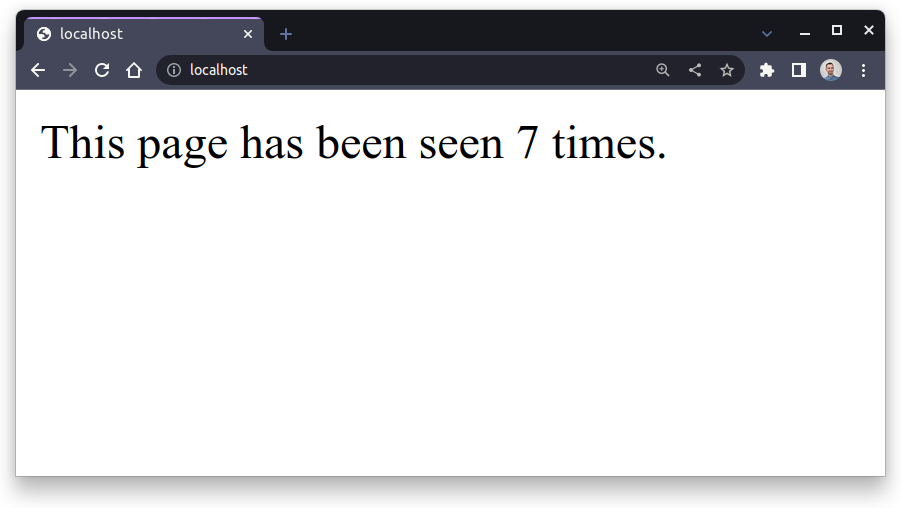

Over the next few sections, you’ll be implementing a bare-bones web application using the Flask framework. Your application will keep track of the total number of page views and display that number to the user with each request:

The current state of the application will be saved in a Redis data store, which is commonly used for caching and other types of data persistence. This way, stopping your web server won’t reset the view count. You can think of Redis as a kind of database.

If you’re not interested in building this application from scratch, then feel free to download its complete source code from the supporting materials linked earlier in this tutorial, and jump ahead to dockerizing your Flask web application.

Even if you intend to write the code yourself, it’s still a good idea to download the finished project and use it as a reference to compare with your implementation in case you get stuck.

Before you can start developing the application, you’ll need to set up your working environment.

Prepare the Environment

As with every Python project, you should follow roughly the same steps when you start, which include making a new directory and then creating and activating an isolated virtual environment for your project. You can do so directly from your favorite code editor like Visual Studio Code or a full-fledged IDE such as PyCharm, or you can type a few commands in the terminal.

First, make a new directory named page-tracker/ and then create a Python virtual environment called venv/ right inside of it. Give the virtual environment a descriptive prompt to make it easily recognizable. Finally, after activating the newly created virtual environment, upgrade pip to the latest version in order to avoid potential issues when installing Python packages in the future.
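If you’d rather script these steps than type them, the standard library’s venv module can create the environment for you. The sketch below is only a rough Python equivalent of the workflow described above. The create_environment() helper is a hypothetical name, and the default assumes that you run it from the folder where page-tracker/ should live. Activating the environment still has to happen in your shell afterward.

```python
import venv
from pathlib import Path


def create_environment(project_dir="page-tracker", with_pip=True):
    """Create <project_dir>/venv/ with a descriptive prompt (hypothetical helper)."""
    env_dir = Path(project_dir) / "venv"
    env_dir.parent.mkdir(parents=True, exist_ok=True)
    venv.create(
        env_dir,
        with_pip=with_pip,       # Bootstrap pip into the new environment
        prompt="page-tracker",   # Shown in your shell prompt once activated
        upgrade_deps=with_pip,   # Python 3.9+: upgrade pip right after creation
    )
    return env_dir
```

Calling create_environment() with the defaults needs network access for the pip upgrade, so treat that part as optional.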

Note: On Windows, you may need to run Windows Terminal as administrator first and loosen your script execution policy before creating the virtual environment.

In this tutorial, you’ll use the modern way of specifying your project’s dependencies and metadata through a pyproject.toml configuration file and setuptools as the build back end. Additionally, you’ll follow the src layout by placing your application’s source code in a separate src/ subdirectory to better organize the files in your project. This will make it straightforward to package your code without the automated tests that you’ll add later.

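Go ahead and scaffold your Python project placeholder by creating the src/page_tracker/ package folder with empty __init__.py and app.py modules in it, as well as empty constraints.txt and pyproject.toml files in the project root folder.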

When you’re done, you should have the following directory structure in place:

page-tracker/
│
├── src/
│   └── page_tracker/
│       ├── __init__.py
│       └── app.py
│
├── venv/
│
├── constraints.txt
└── pyproject.toml

As you can see, you’ll have only one Python module, app, defined in a package called page_tracker, sitting inside the src/ directory. The constraints.txt file will specify pinned versions of your project’s dependencies in order to achieve repeatable installs.

This project will depend on two external libraries, Flask and Redis, which you can declare in your pyproject.toml file:

# pyproject.toml

[build-system]
requires = ["setuptools>=67.0.0", "wheel"]
build-backend = "setuptools.build_meta"

[project]
name = "page-tracker"
version = "1.0.0"
dependencies = [
    "Flask",
    "redis",
]

Note that you don’t typically specify dependency versions here. Instead, you can freeze them along with any transitive dependencies in a requirements or constraints file. The former tells pip what packages to install, while the latter only enforces specific versions of the packages that end up being installed, including transitive dependencies, resembling a Pipenv or Poetry lock file.

To generate a constraints file, you must first install your page-tracker project into the active virtual environment, which will bring the required external libraries from the Python Package Index (PyPI). Make sure that you’ve created the desired folder structure, and then issue the following commands:

(page-tracker) $ python -m pip install --editable .

(page-tracker) $ python -m pip freeze --exclude-editable > constraints.txt

Even though you haven’t typed a single line of code yet, Python will recognize and install your package placeholder. Because your package follows the src layout, it’s convenient to install it in editable mode during development. This will allow you to make changes to your source code and have them reflected in the virtual environment immediately without a reinstall. However, you want to exclude the editable package from the constraints file.

Note: The generated constraints file is super valuable if you’re planning to share your project. It means someone else can run the following command in their terminal to reproduce the same environment as yours:

(page-tracker) $ python -m pip install -c constraints.txt .

Because the command provides a constraints file through the -c option, pip installs the pinned dependencies instead of the latest ones available. That means you have repeatable installs. You’ll use a similar command later to build a Docker image.

Okay. You’re almost ready to start coding your Flask web application. Before doing so, you’ll switch gears for a moment and prepare a local Redis server to connect to over a network.

Run a Redis Server Through Docker

The name Redis is a portmanteau of the words remote dictionary server, which pretty accurately conveys its purpose as a remote, in-memory data structure store. Being a key-value store, Redis is like a remote Python dictionary that you can connect to from anywhere. It’s also considered one of the most popular NoSQL databases used in many different contexts. Frequently, it serves the purpose of a cache on top of a relational database.

Note: While Redis keeps all of its data in volatile memory, which makes it extremely fast, the server comes with a variety of persistence options. They can ensure different levels of data durability in case of a power outage or reboot. However, configuring Redis correctly often proves difficult, which is why many teams decide to use a managed service outsourced to cloud providers.

Installing Redis on your computer is quite straightforward, but running it through Docker is even simpler and more elegant, assuming that you’ve installed and configured Docker before. When you run a service, such as Redis, in a Docker container, it remains isolated from the rest of your system without causing clutter or hogging system resources like network port numbers, which are limited.

To run Redis without installing it on your host machine, you can run a new Docker container from the official Redis image by invoking the following command:

$ docker run -d --name redis-server redis
Unable to find image 'redis:latest' locally
latest: Pulling from library/redis
26c5c85e47da: Pull complete
39f79586dcf2: Pull complete
79c71d0520e5: Pull complete
60e988668ca1: Pull complete
873c3fc9fdc6: Pull complete
50ce7f9bf183: Pull complete
Digest: sha256:f50031a49f41e493087fb95f96fdb3523bb25dcf6a3f0b07c588ad3cdb...
Status: Downloaded newer image for redis:latest
09b9842463c78a2e9135add810aba6c4573fb9e2155652a15310009632c40ea8

This creates a new Docker container based on the latest version of the redis image, with the custom name redis-server, which you’ll refer to later. The container is running in the background in detached mode (-d). When you run this command for the first time, Docker will pull the corresponding Docker image from Docker Hub, which is the official repository of Docker images, akin to PyPI.

As long as everything goes according to plan, your Redis server should be up and running. Because you started the container in detached mode (-d), it’ll remain active in the background. To verify that, you can list your Docker containers using the docker container ls command or the equivalent docker ps alias:

$ docker ps
CONTAINER ID   IMAGE   ...   STATUS              PORTS      NAMES
09b9842463c7   redis   ...   Up About a minute   6379/tcp   redis-server

Here, you can see that a container with an ID prefix matching the one you got when running the docker run command has been up since about a minute ago. The container is based on the redis image, has been named redis-server, and uses TCP port number 6379, which is the default port for Redis.

Next, you’ll try connecting to that Redis server in various ways.

Test the Connection to Redis

On the overview page of the official Redis image on Docker Hub, you’ll find instructions on how to connect to a Redis server running in a Docker container. Specifically, this page talks about using the dedicated interactive command-line interface, Redis CLI, that comes with your Docker image.

You can start another Docker container from the same redis image, but this time, set the container’s entry point to the redis-cli command instead of the default Redis server binary. When you set up multiple containers to work together, you should use Docker networks, which require a few extra steps to configure.

First, create a new user-defined bridge network named after your project, for example:

$ docker network create page-tracker-network

c942131265bf097da294edbd2ac375cd5410d6f0d87e250041827c68a3197684

By defining a virtual network such as this, you can hook up as many Docker containers as you like and let them discover each other through descriptive names. You can list the networks that you’ve created by running the following command:

$ docker network ls
NETWORK ID     NAME                   DRIVER    SCOPE
1bf8d998500e   bridge                 bridge    local
d5cffd6ea76f   host                   host      local
a85d88fc3abe   none                   null      local
c942131265bf   page-tracker-network   bridge    local

Next, connect your existing redis-server container to this new virtual network, and specify the same network for the Redis CLI when you start its corresponding container:

$ docker network connect page-tracker-network redis-server
$ docker run --rm -it \
             --name redis-client \
             --network page-tracker-network \
             redis redis-cli -h redis-server

The --rm flag tells Docker to remove the created container as soon as you terminate it since this is a temporary or ephemeral container that you don’t need to start ever again. The -i and -t flags, abbreviated to -it, run the container interactively, letting you type commands by hooking up to your terminal’s standard streams. With the --name option, you give your new container a descriptive name.

The --network option connects your new redis-client container to the previously created virtual network, allowing it to communicate with the redis-server container. This way, both containers will receive hostnames corresponding to their names given by the --name option. Notice that, by using the -h parameter, you tell Redis CLI to connect to a Redis server identified by its container name.

Note: There’s an even quicker way to connect your two containers through a virtual network without explicitly creating one. You can specify the --link option when running a new container:

$ docker run --rm -it \
             --name redis-client \
             --link redis-server:redis-client \
             redis redis-cli -h redis-server

However, this option is deprecated and may be removed from Docker at some point.

When your new Docker container starts, you’ll drop into an interactive Redis CLI, which resembles a Python REPL with the following prompt:

redis-server:6379> SET pi 3.14
OK
redis-server:6379> GET pi
"3.14"
redis-server:6379> DEL pi
(integer) 1
redis-server:6379> KEYS *
(empty array)

Once there, you can test a few Redis commands by, for example, setting a key-value pair, getting the value of the corresponding key, deleting that key-value pair, or retrieving the list of all keys currently stored in the server. To exit the interactive Redis CLI, press Ctrl+C on your keyboard.

If you installed Docker Desktop, then, in most cases, it won’t route traffic from your host machine to the containers. There’ll be no connection between your local network and the default Docker network:

Docker Desktop for Mac can’t route traffic to containers. (Source)

Docker Desktop for Windows can’t route traffic to Linux containers. However, you can ping the Windows containers. (Source)

The same is true for Docker Desktop on Linux. On the other hand, if you’re using Docker Engine or running Windows containers on a Windows host machine, then you’ll be able to access such containers by their IP addresses.

Therefore, it may sometimes be possible for you to communicate with the Redis server directly from your host machine. First, find out the IP address of the corresponding Docker container:

$ docker inspect redis-server \
  -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{println}}{{end}}'
172.17.0.2
172.18.0.2

If you see more than one IP address, then it means that your container is connected to multiple networks. Containers get automatically connected to the default Docker network when you start them.

Take note of one of these addresses, which may be different for you. Now, you can use this IP address for the -h parameter’s value instead of the linked container name in redis-cli. You can also use this IP address to connect to Redis with netcat or a Telnet client, like PuTTY or the telnet command:

$ telnet 172.17.0.2 6379
Trying 172.17.0.2...
Connected to 172.17.0.2.
Escape character is '^]'.
SET pi 3.14
+OK
GET pi
$4
3.14
DEL pi
:1
KEYS *
*0
^]
telnet> Connection closed.

Remember to provide the port number, which defaults to 6379, on which Redis listens for incoming connections. You can type Redis commands in plaintext here because the server uses an unencrypted protocol unless you explicitly enable TLS support in the configuration.
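The prefixes in the replies above (+, $, :, and *) come from the Redis serialization protocol (RESP). In telnet, you typed space-separated inline commands, whereas client libraries typically send each command as an array of bulk strings. The following is a minimal sketch of that encoding, where encode_command() and describe_reply() are hypothetical helper names rather than part of any library:

```python
def encode_command(*parts: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    chunks = [f"*{len(parts)}\r\n".encode()]  # Array header with element count
    for part in parts:
        data = part.encode()
        # Each bulk string is its byte length, CRLF, the payload, CRLF
        chunks.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(chunks)


def describe_reply(first_line: bytes) -> str:
    """Name the reply type based on the first byte of a server reply."""
    kinds = {
        b"+": "simple string",
        b"-": "error",
        b":": "integer",
        b"$": "bulk string",
        b"*": "array",
    }
    return kinds.get(first_line[:1], "unknown")


print(encode_command("SET", "pi", "3.14"))
print(describe_reply(b"+OK"), describe_reply(b":1"), describe_reply(b"*0"))
```

Seeing the raw bytes makes it clear that nothing is encrypted on the wire by default, which is why a plain Telnet client is enough to talk to the server.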

Finally, you can take advantage of port mapping to make Redis available outside of the Docker container. During development, you’ll want to connect to Redis directly rather than through a virtual network from another container, so you don’t have to connect it to any network just yet.

To use port mapping, stop and remove your existing redis-server, and then run a new container with the -p option defined as below:

$ docker stop redis-server
$ docker rm redis-server
$ docker run -d --name redis-server -p 6379:6379 redis

The number on the left of the colon (:) represents the port number on the host machine or your computer, while the number on the right represents the mapped port inside the Docker container that’s about to run. Using the same port number on both sides effectively forwards it so that you can connect to Redis as if it were running locally on your computer:

$ telnet localhost 6379
Trying 127.0.0.1...
Connected to localhost.
Escape character is '^]'.
INCR page_views
:1
INCR page_views
:2
INCR page_views
:3
^]
telnet> Connection closed.

After connecting to Redis, which is now visible on localhost and the default port, you use the INCR command to increment the number of page views. If the underlying key doesn’t exist yet, then Redis will initialize it with a value of 1.
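To make the behavior of INCR on a missing key concrete, you can model it with a plain Python dictionary. This is only an in-memory analogy under the assumption of a single process. The InMemoryCounterStore class is a hypothetical stand-in and doesn’t reflect how Redis is implemented:

```python
class InMemoryCounterStore:
    """A dict-backed stand-in that mimics INCR within a single process."""

    def __init__(self):
        self._data = {}

    def incr(self, key, amount=1):
        # A missing key is treated as 0 before the increment is applied
        self._data[key] = int(self._data.get(key, 0)) + amount
        return self._data[key]


store = InMemoryCounterStore()
print(store.incr("page_views"))  # 1
print(store.incr("page_views"))  # 2
```

Unlike this toy class, the real server keeps the counter outside of your Python process, which is why the value survives restarts of your program.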

Note: If you have Redis installed locally, or some system process is also using port 6379 on your host machine, then you’ll need to map your port numbers differently using an unoccupied port. For example, you could do the following:

$ docker run -d --name redis-server -p 9736:6379 redis

This will allow you to connect to localhost on port 9736 if that’s not already taken by another service. It doesn’t matter what port you use as long as it’s available.
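If you’re unsure whether a port on your host machine is already taken, then you can probe it before choosing the mapping. Below is a small sketch based on the standard library’s socket module. The is_port_in_use() helper is a hypothetical name, and it only detects services that accept TCP connections on the given address:

```python
import socket


def is_port_in_use(port, host="127.0.0.1"):
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1.0)
        # connect_ex() returns 0 on success instead of raising an exception
        return sock.connect_ex((host, port)) == 0


if is_port_in_use(6379):
    print("Port 6379 is taken, so map Redis to another host port")
else:
    print("Port 6379 looks free on this machine")
```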

Now that you know how to connect to Redis from the command line, you can move on and see how to do the same from a Python program.

Connect to Redis From Python

At this point, you have a Redis server running in a Docker container, which you can access on localhost using the default port number for Redis. If you’d like to learn more about your container, or any other Docker resource, then you can always retrieve valuable information by inspecting the object at hand:

$ docker inspect redis-server
[
    {
        "Id": "09b9842463c78a2e9135add810aba6...2a15310009632c40ea8",
        ⋮
        "NetworkSettings": {
            ⋮
            "Ports": {
                "6379/tcp": null
            },
            ⋮
            "IPAddress": "172.17.0.2",
            ⋮
        }
    }
]

In this case, you’re asking for information about the redis-server container, which includes a plethora of details, such as the container’s network configuration. The docker inspect command returns data in the JSON format by default, which you can filter down further using Go templates.
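Because the output is JSON, you can also process it in Python instead of using Go templates. The sketch below parses a hand-trimmed, hypothetical sample shaped like the output of docker inspect and extracts the IP address on each network. The container_ip_addresses() helper and the SAMPLE_OUTPUT constant are illustrative names only:

```python
import json

# Hypothetical, heavily trimmed sample shaped like `docker inspect` output
SAMPLE_OUTPUT = """
[
  {
    "NetworkSettings": {
      "Networks": {
        "bridge": {"IPAddress": "172.17.0.2"},
        "page-tracker-network": {"IPAddress": "172.18.0.2"}
      }
    }
  }
]
"""


def container_ip_addresses(inspect_output):
    """Map each network name to the container's IP address on it."""
    (container,) = json.loads(inspect_output)  # Expect exactly one object
    networks = container["NetworkSettings"]["Networks"]
    return {name: details["IPAddress"] for name, details in networks.items()}


print(container_ip_addresses(SAMPLE_OUTPUT))
```

In practice, you could feed the function with text captured from the docker inspect command, for example through subprocess.run().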

Next, open the terminal, activate your project’s virtual environment, and start a new Python REPL.

Assuming you previously installed the redis package in this virtual environment, you should be able to import the Redis client for Python and call one of its methods:

>>> from redis import Redis

>>> redis = Redis()

>>> redis.incr("page_views")

4

>>> redis.incr("page_views")

5

When you create a new Redis instance without specifying any arguments, it’ll try to connect to a Redis server running on localhost and the default port, 6379. In this case, calling .incr() confirms that you’ve successfully established a connection with Redis sitting in your Docker container because it remembered the last value of the page_views key.

If you need to connect to Redis located on a remote machine, then supply a custom host and a port number as parameters:

>>> from redis import Redis

>>> redis = Redis(host="127.0.0.1", port=6379)

>>> redis.incr("page_views")

6

Note that you should pass the port number as an integer, though the library won’t complain if you pass a string instead.

Another way to connect to Redis is by using a specially formatted string, which represents a URL:

>>> from redis import Redis

>>> redis = Redis.from_url("redis://localhost:6379/")

>>> redis.incr("page_views")

7

This can be especially convenient if you want to store your Redis configuration in a file or environment variable.
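As a rough illustration of that idea, the sketch below reads the URL from an environment variable and falls back to a local default. The REDIS_URL variable name and both helper functions are assumptions made for this example, not something that the redis library defines:

```python
import os

DEFAULT_REDIS_URL = "redis://localhost:6379/"


def redis_url(environ=os.environ):
    """Return the Redis URL from the environment, or a local default."""
    return environ.get("REDIS_URL", DEFAULT_REDIS_URL)


def make_client():
    """Build a client from the configured URL (requires the redis package)."""
    from redis import Redis  # Imported lazily so redis_url() works without it

    return Redis.from_url(redis_url())
```

With this in place, you could point the same code at a different server by exporting REDIS_URL before starting Python, without touching the source code.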

Great! You can grab one of these code snippets and integrate it with your Flask web application. In the next section, you’ll see how to do just that.

Implement and Run the Flask Application Locally

Go back to your code editor, open the app module in your page-tracker project, and write the following few lines of Python code:

# src/page_tracker/app.py

from flask import Flask
from redis import Redis

app = Flask(__name__)
redis = Redis()

@app.get("/")
def index():
    page_views = redis.incr("page_views")
    return f"This page has been seen {page_views} times."

You start by importing Flask and Redis from the respective third-party libraries that you listed as your project’s dependencies. Next, you instantiate a Flask application and a Redis client using default arguments, which means that the client will try connecting to a local Redis server. Finally, you define a controller function to handle HTTP GET requests arriving at the web server’s root address (/).

Your endpoint increments the number of page views in Redis and displays a suitable message in the client’s web browser. That’s it! You have a complete web application that can handle HTTP traffic and persist state in a remote data store using fewer than ten lines of code.

To verify if your Flask application is working as expected, issue the following command in the terminal:

(page-tracker) $ flask --app page_tracker.app run
 * Serving Flask app 'page_tracker.app'
 * Debug mode: off
WARNING: This is a development server. Do not use it in a production
⮑ deployment. Use a production WSGI server instead.
 * Running on http://127.0.0.1:5000
Press CTRL+C to quit

You can run this command anywhere in your file system as long as you’ve activated the correct virtual environment with your page-tracker package installed. This should run the Flask development server on localhost and port 5000 with debug mode disabled.

If you’d like to access your server from another computer on the same network, then you must bind it to all network interfaces by using the special address 0.0.0.0 instead of the default localhost, which represents the loopback interface:

(page-tracker) $ flask --app page_tracker.app run --host=0.0.0.0 \
                                                  --port=8080 \
                                                  --debug
 * Serving Flask app 'page_tracker.app'
 * Debug mode: on
WARNING: This is a development server. Do not use it in a production
⮑ deployment. Use a production WSGI server instead.
 * Running on all addresses (0.0.0.0)
 * Running on http://127.0.0.1:8080
 * Running on http://192.168.0.115:8080
Press CTRL+C to quit
 * Restarting with stat
 * Debugger is active!
 * Debugger PIN: 123-167-546

You can also change the port number and enable debug mode with an appropriate command-line option or flag if you want to.

Once you’ve started the server, you can follow the link displayed in the terminal and see the page with the number of views in your web browser. Every time you refresh this page, the counter should increase by one.

Nicely done! You’ve managed to create a bare-bones Flask application that tracks the number of page views using Redis. Next up, you’ll learn how to test and secure your web application.

Test and Secure Your Web Application

Before packaging and deploying any project to production, you should thoroughly test, examine, and secure the underlying source code. In this part of the tutorial, you’ll exercise unit, integration, and end-to-end tests. You’ll also perform static code analysis and security scanning to identify potential issues and vulnerabilities when it’s still cheap to fix them.

Cover the Source Code With Unit Tests

Unit testing involves testing a program’s individual units or components to ensure that they work as expected. It has become a necessary part of software development these days. Many engineers even take it a step further, rigorously following the test-driven development methodology by writing their unit tests first to drive the code design.

When it comes to writing unit tests, it’s quite common for those in the Python community to choose pytest over the standard library’s unittest module. Thanks to the relative simplicity of pytest, this testing framework is quick to start with. Go ahead and add pytest as an optional dependency to your project:

# pyproject.toml

[build-system]
requires = ["setuptools>=67.0.0", "wheel"]
build-backend = "setuptools.build_meta"

[project]
name = "page-tracker"
version = "1.0.0"
dependencies = [
    "Flask",
    "redis",
]

[project.optional-dependencies]
dev = [
    "pytest",
]

You can group optional dependencies that are somehow related under a common name. Here, for example, you created a group called dev to collect tools and libraries that you’ll use during development. By keeping pytest separate from the main dependencies, you’ll be able to install it on demand only when needed. After all, there’s no point in bundling your tests or the associated testing framework with the built distribution package.

Don’t forget to reinstall your Python package with the optional dependencies to get pytest into your project’s virtual environment:

(page-tracker) $ python -m pip install --editable ".[dev]"

You can use square brackets to list the names of optional dependency groups defined in your pyproject.toml file. In this case, you ask to install the dependencies for development purposes, including a testing framework. Note that using quotes ("") around the square brackets is recommended to prevent a potential filename expansion in the shell.

Because you followed the src layout in your project, you don’t have to keep the test modules either in the same folder or the same namespace package as your code under test. You can create a separate directory branch for your tests, as follows:

page-tracker/
│
├── src/
│   └── page_tracker/
│       ├── __init__.py
│       └── app.py
│
├── test/
│   └── unit/
│       └── test_app.py
│
├── venv/
│
├── constraints.txt
└── pyproject.toml

You’ve placed your test module in a test/unit/ folder to keep things organized. By default, the pytest framework discovers tests in modules named test_*.py or *_test.py and collects the functions whose names start with test. Although you can change that, it’s customary to keep the default convention while mirroring each Python module with the corresponding test module. For example, you’ll cover the app module with test_app in your test/unit/ folder.

You’ll start by testing the happy path of your web application, which would typically mean sending a request to the server. Each Flask application comes with a convenient test client that you can use to make simulated HTTP requests. Because the test client doesn’t require a live server to be running, your unit tests will execute much faster and will become more isolated.

You can get the test client and conveniently wrap it in a test fixture to make it available to your test functions:

# test/unit/test_app.py

import pytest

from page_tracker.app import app

@pytest.fixture
def http_client():
    return app.test_client()

First, you import the pytest package so that you can apply its @pytest.fixture decorator to your custom function. Choose your function’s name carefully because it’ll also become the name of the fixture that you can pass around as an argument to the individual test functions. You also import the Flask application from your page_tracker package to get the corresponding test client instance.

When you intend to write a unit test, you must always isolate it by eliminating any dependencies that your unit of code may have. This means that you should mock or stub out any external services, databases, or libraries that your code relies on. In your case, the Redis server is such a dependency.

Unfortunately, your code currently uses a hard-coded Redis client, which prevents mocking. This is a good argument for following test-driven development from the start, but it doesn’t mean you have to go back and start over. Instead, you’re going to refactor your code by implementing the dependency injection design pattern:

# src/page_tracker/app.py

+from functools import cache

 from flask import Flask
 from redis import Redis

 app = Flask(__name__)
-redis = Redis()

 @app.get("/")
 def index():
-    page_views = redis.incr("page_views")
+    page_views = redis().incr("page_views")
     return f"This page has been seen {page_views} times."

+@cache
+def redis():
+    return Redis()

Essentially, you move the Redis client creation code from the global scope to a new redis() function, which your controller function calls at runtime on each incoming request. This will allow your test case to substitute the returned Redis instance with a mock counterpart at the right time. But, to ensure that there’s only one instance of the client in memory, effectively making it a singleton, you also cache the result of your new function.
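If you’d like to convince yourself that caching a factory function really yields a single shared instance, here’s a minimal sketch. FakeClient is a hypothetical stand-in for an expensive object like the Redis client, so the snippet runs without any server:

```python
from functools import cache

class FakeClient:
    """Hypothetical stand-in for an expensive client such as Redis."""

    instances_created = 0

    def __init__(self):
        FakeClient.instances_created += 1

@cache
def client():
    # The body runs only on the first call. Later calls return the
    # cached object, so every caller shares the same instance.
    return FakeClient()

first = client()
second = client()
print(first is second)               # True
print(FakeClient.instances_created)  # 1
```

Because the object is created lazily on the first call rather than at import time, a test gets the chance to replace the function before anything connects to a real server.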

Go back to your test module now and implement the following unit test:

# test/unit/test_app.py

import unittest.mock

import pytest

from page_tracker.app import app

@pytest.fixture
def http_client():
    return app.test_client()

@unittest.mock.patch("page_tracker.app.redis")
def test_should_call_redis_incr(mock_redis, http_client):
    # Given
    mock_redis.return_value.incr.return_value = 5

    # When
    response = http_client.get("/")

    # Then
    assert response.status_code == 200
    assert response.text == "This page has been seen 5 times."
    mock_redis.return_value.incr.assert_called_once_with("page_views")

You wrap your test function with Python’s @patch decorator to inject a mocked Redis client into it as an argument. You also tell pytest to inject your HTTP test client fixture as another argument. The test function has a descriptive name that starts with the verb should and follows the Given-When-Then pattern. Both of these conventions, commonly used in behavior-driven development, make your tests read like behavioral specifications.

In your test case, you first set up the mock Redis client to always return 5 whenever its .incr() method gets called. Then, you make a forged HTTP request to the root endpoint (/) and check the server’s response status and body. Because mocking helps you test the behavior of your unit, you only verify that the server calls the correct method with the expected argument, trusting that the Redis client library works correctly.
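The chained return_value attributes can look puzzling at first, so here’s a small stand-alone sketch of the same mechanics. It builds the mock by hand with MagicMock instead of patching the real application, which is an assumption made to keep the snippet self-contained:

```python
from unittest.mock import MagicMock

# Stand-in for the patched redis() factory function.
mock_redis = MagicMock()

# Calling the mock returns mock_redis.return_value, so this line
# configures what redis().incr(...) will give back.
mock_redis.return_value.incr.return_value = 5

page_views = mock_redis().incr("page_views")
print(page_views)  # 5

# The mock recorded the call, so you can verify how it was used.
mock_redis.return_value.incr.assert_called_once_with("page_views")
```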

To execute your unit tests, you can either use the test runner integrated in your code editor, or you can type the following command in the terminal:

(page-tracker) $ python -m pytest -v test/unit/

You run pytest as a Python module from your virtual environment, instructing it to scan the test/unit/ directory in order to look for test modules there. The -v switch increases the test report’s verbosity so that you can see more details about the individual test cases.

Staring at a green report after all your unit tests have passed can feel satisfying. It gives you some level of confidence in your code, but it’s hardly enough to make any sort of guarantees. Plenty of memes illustrate the importance of running integration tests even after unit tests have passed.

For example, one of the classic memes shows two drawers, but only one can open at a time. While each individual drawer or unit has been tested and works on its own, it’s when you try integrating them into a piece of furniture that problems arise. Next up, you’ll add a rudimentary integration test to your project.

Check Component Interactions Through Integration Tests

Integration testing should be the next phase after running your unit tests. The goal of integration testing is to check how your components interact with each other as parts of a larger system. For example, your page tracker web application might have integration tests that check the communication with a genuine Redis server instead of a mocked one.

You can reuse pytest to implement and run the integration tests. However, you’ll install an additional pytest-timeout plugin to allow you to force the failure of test cases that take too long to run:

# pyproject.toml

[build-system]

requires = ["setuptools>=67.0.0", "wheel"]

build-backend = "setuptools.build_meta"

[project]

name = "page-tracker"

version = "1.0.0"

dependencies = [

"Flask",

"redis",

]

[project.optional-dependencies]

dev = [

"pytest",

"pytest-timeout",

]

Ideally, you don’t need to worry about unit tests timing out because they should be optimized for speed. On the other hand, integration tests will take longer to run and could hang indefinitely on a stalled network connection, preventing your test suite from finishing. So, it’s important to have a way to abort them in cases like this.

Remember to reinstall your package with optional dependencies once again to make the pytest-timeout plugin available:

(page-tracker) $ python -m pip install --editable ".[dev]"

Before moving on, add another subfolder for your integration tests and define a conftest.py file in your test/ folder:

page-tracker/
│
├── src/
│   └── page_tracker/
│       ├── __init__.py
│       └── app.py
│
├── test/
│   ├── integration/
│   │   └── test_app_redis.py
│   │
│   ├── unit/
│   │   └── test_app.py
│   │
│   └── conftest.py
│
├── venv/
│
├── constraints.txt
└── pyproject.toml

You’ll place common fixtures in conftest.py, which different types of tests will share.

While your web application has just one component, you can think of Redis as another component that Flask needs to work with. Therefore, an integration test might look similar to your unit test, except that the Redis client won’t be mocked anymore:

# test/integration/test_app_redis.py

import pytest

@pytest.mark.timeout(1.5)
def test_should_update_redis(redis_client, http_client):
    # Given
    redis_client.set("page_views", 4)

    # When
    response = http_client.get("/")

    # Then
    assert response.status_code == 200
    assert response.text == "This page has been seen 5 times."
    assert redis_client.get("page_views") == b"5"

Conceptually, your new test case consists of the same steps as before, but it interacts with the real Redis server. That’s why you give the test at most 1.5 seconds to finish using the @pytest.mark.timeout decorator. The test function takes two fixtures as parameters:

- A Redis client connected to a local data store

- Flask’s test client hooked to your web application

To make the second one available in your integration test as well, you must move the http_client() fixture from the test_app module to the conftest.py file:

# test/conftest.py

import pytest
import redis

from page_tracker.app import app

@pytest.fixture
def http_client():
    return app.test_client()

@pytest.fixture(scope="module")
def redis_client():
    return redis.Redis()

Because this file is located one level up in the folder hierarchy, pytest will pick up all the fixtures defined in it and make them visible throughout your nested folders. Apart from the familiar http_client() fixture, which you moved from another Python module, you define a new fixture that returns a default Redis client. Notice that you give it the scope module to reuse the same Redis client instance for all functions within a test module.

To perform your integration test, you’ll have to double-check that a Redis server is running locally on the default port, 6379, and then start pytest as before, but point it to the folder with your integration tests:

(page-tracker) $ python -m pytest -v test/integration/

Because your integration test connects to an actual Redis server, it’ll overwrite the value that you might have previously stored under the page_views key. However, if the Redis server isn’t running while your integration tests are executing, or if Redis is running elsewhere, then your test will fail. This failure may be for the wrong reasons, making the outcome a false negative error, as your code might actually be working as expected.

To observe this problem, stop Redis now and rerun your integration test:

(page-tracker) $ docker stop redis-server

redis-server

(page-tracker) $ python -m pytest -v test/integration/

⋮

========================= short test summary info ==========================

FAILED test/integration/test_app_redis.py::test_should_update_redis -

⮑redis.exceptions.ConnectionError: Error 111 connecting to localhost:6379.

⮑Connection refused

============================ 1 failed in 0.19s =============================

This uncovers an issue in your code, which doesn’t gracefully handle Redis connection errors at the moment. In the spirit of test-driven development, you may first codify a test case that reproduces that problem and then fix it. Switch gears for a moment and add the following unit test in your test_app module with a mocked Redis client:

# test/unit/test_app.py

import unittest.mock

from redis import ConnectionError

# ...

@unittest.mock.patch("page_tracker.app.redis")
def test_should_handle_redis_connection_error(mock_redis, http_client):
    # Given
    mock_redis.return_value.incr.side_effect = ConnectionError

    # When
    response = http_client.get("/")

    # Then
    assert response.status_code == 500
    assert response.text == "Sorry, something went wrong \N{pensive face}"

You set the mocked .incr() method’s side effect so that calling that method will raise the redis.ConnectionError exception, which you observed when the integration test failed. Your new unit test, which is an example of a negative test, expects Flask to respond with an HTTP status code 500 and a descriptive message. Here’s how you can satisfy that unit test:
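Before looking at the fix, it may help to see the side_effect mechanism in isolation. The sketch below doesn’t import redis or Flask. Instead, it relies on a hypothetical FakeConnectionError class and a hypothetical count_page_view() helper that only imitate the shape of the real code:

```python
from unittest.mock import MagicMock

class FakeConnectionError(Exception):
    """Hypothetical stand-in for redis.ConnectionError."""

mock_client = MagicMock()
# Setting side_effect to an exception class makes the call raise it.
mock_client.incr.side_effect = FakeConnectionError

def count_page_view(client):
    """Hypothetical helper returning a (body, status) pair."""
    try:
        return str(client.incr("page_views")), 200
    except FakeConnectionError:
        return "Sorry, something went wrong", 500

body, status = count_page_view(mock_client)
print(status)  # 500
```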

# src/page_tracker/app.py

from functools import cache

from flask import Flask
from redis import Redis, RedisError

app = Flask(__name__)

@app.get("/")
def index():
    try:
        page_views = redis().incr("page_views")
    except RedisError:
        app.logger.exception("Redis error")
        return "Sorry, something went wrong \N{pensive face}", 500
    else:
        return f"This page has been seen {page_views} times."

@cache
def redis():
    return Redis()

You intercept the top-level exception class, redis.RedisError, which is the ancestor of all exception types raised by the Redis client. If anything goes wrong, then you return the expected HTTP status code and a message. For convenience, you also log the exception using the logger built into Flask.

Note: While a parent is the immediate base class that a child class is directly extending, an ancestor can be anywhere further up the inheritance hierarchy.
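To make the distinction between a parent and an ancestor concrete, here’s a short sketch with a made-up exception hierarchy. The class names are hypothetical and don’t mirror the actual hierarchy in the Redis client library:

```python
class StoreError(Exception):
    """Hypothetical top-level error, playing the role of RedisError."""

class StoreConnectionError(StoreError):
    """Direct child of StoreError."""

class StoreTimeoutError(StoreConnectionError):
    """Grandchild: StoreError is its ancestor but not its parent."""

def classify(exc_type):
    try:
        raise exc_type()
    except StoreError:
        # Catching the ancestor also catches every descendant.
        return "handled"

print(StoreTimeoutError.__bases__)  # The immediate parent only
print(classify(StoreTimeoutError))  # handled
```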

Great! You amended your unit tests, implemented an integration test, and fixed a defect in your code after finding out about it, thanks to testing. Nonetheless, when you deploy your application to a remote environment, how will you know that all the pieces fit together and everything works as expected?

In the next section, you’ll simulate a real-world scenario by performing an end-to-end test against your actual Flask server rather than the test client.

Test a Real-World Scenario End to End (E2E)

End-to-end testing, also known as broad stack testing, encompasses many kinds of tests that can help you verify the system as a whole. They put the complete software stack to the test by simulating an actual user’s flow through the application. Therefore, end-to-end testing requires a deployment environment that mimics the production environment as closely as possible. A dedicated team of test engineers is usually needed, too.

Note: Because end-to-end tests have a high maintenance cost and tend to take a lot of time to set up and run, they sit atop Google’s testing pyramid. In other words, you should aim for more integration tests, and even more unit tests, in your projects.

As you’ll eventually want to build a full-fledged continuous integration pipeline for your Docker application, having some end-to-end tests in place will become essential. Start by adding another subfolder for your E2E tests:

page-tracker/
│
├── src/
│   └── page_tracker/
│       ├── __init__.py
│       └── app.py
│
├── test/
│   ├── e2e/
│   │   └── test_app_redis_http.py
│   │
│   ├── integration/
│   │   └── test_app_redis.py
│   │
│   ├── unit/
│   │   └── test_app.py
│   │
│   └── conftest.py
│
├── venv/
│
├── constraints.txt
└── pyproject.toml

The test scenario that you’re about to implement will look similar to your integration test. The main difference, though, is that you’ll be sending an actual HTTP request through the network to a live web server instead of relying on Flask’s test client. To do so, you’ll use the third-party requests library, which you must first specify in your pyproject.toml file as another optional dependency:

# pyproject.toml

[build-system]

requires = ["setuptools>=67.0.0", "wheel"]

build-backend = "setuptools.build_meta"

[project]

name = "page-tracker"

version = "1.0.0"

dependencies = [

"Flask",

"redis",

]

[project.optional-dependencies]

dev = [

"pytest",

"pytest-timeout",

"requests",

]

You won’t be using requests to run your server in production, so there’s no need to require it as a regular dependency. Again, reinstall your Python package with optional dependencies using the editable mode:

(page-tracker) $ python -m pip install --editable ".[dev]"

You can now use the installed requests library in your end-to-end test:

 1 # test/e2e/test_app_redis_http.py
 2
 3 import pytest
 4 import requests
 5
 6 @pytest.mark.timeout(1.5)
 7 def test_should_update_redis(redis_client, flask_url):
 8     # Given
 9     redis_client.set("page_views", 4)
10
11     # When
12     response = requests.get(flask_url)
13
14     # Then
15     assert response.status_code == 200
16     assert response.text == "This page has been seen 5 times."
17     assert redis_client.get("page_views") == b"5"

This code is nearly identical to your integration test except for line 12, which is responsible for sending an HTTP GET request. Previously, you sent that request to the test client’s root address, denoted with a slash character (/). Now, you don’t know the exact domain or IP address of the Flask server, which may be running on a remote host. Therefore, your function receives a Flask URL as an argument, which pytest injects as a fixture.

You may provide the specific web server’s address through the command line. Similarly, your Redis server may be running on a different host, so you’ll want to provide its address as a command-line argument as well. But wait! Your Flask application currently expects Redis to always run on the localhost. Go ahead and update your code to make this configurable:

# src/page_tracker/app.py

import os
from functools import cache

from flask import Flask
from redis import Redis, RedisError

app = Flask(__name__)

@app.get("/")
def index():
    try:
        page_views = redis().incr("page_views")
    except RedisError:
        app.logger.exception("Redis error")
        return "Sorry, something went wrong \N{pensive face}", 500
    else:
        return f"This page has been seen {page_views} times."

@cache
def redis():
    return Redis.from_url(os.getenv("REDIS_URL", "redis://localhost:6379"))

It’s common to supply deployment-specific and sensitive data, such as a database URL, through environment variables because doing so keeps those values out of your source code and lets you change them without modifying it. In this case, your program looks for a custom REDIS_URL variable. If that variable isn’t specified in the given environment, then you fall back to the default host and port.
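The fallback behavior is easy to try out on its own. In this minimal sketch, the redis_url() helper and the example host name are hypothetical, and the function accepts a plain dictionary so that you can experiment without touching your real environment:

```python
import os

DEFAULT_REDIS_URL = "redis://localhost:6379"

def redis_url(environ=os.environ):
    """Return REDIS_URL from the environment or the local default."""
    return environ.get("REDIS_URL", DEFAULT_REDIS_URL)

# No variable set, so the default applies.
print(redis_url({}))  # redis://localhost:6379

# A hypothetical remote host overrides the default.
print(redis_url({"REDIS_URL": "redis://cache.example:6379"}))
```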

To extend pytest with custom command-line arguments, you must edit conftest.py and hook into the framework’s argument parser in the following way:

# test/conftest.py

import pytest
import redis

from page_tracker.app import app

def pytest_addoption(parser):
    parser.addoption("--flask-url")
    parser.addoption("--redis-url")

@pytest.fixture(scope="session")
def flask_url(request):
    return request.config.getoption("--flask-url")

@pytest.fixture(scope="session")
def redis_url(request):
    return request.config.getoption("--redis-url")

@pytest.fixture
def http_client():
    return app.test_client()

@pytest.fixture(scope="module")
def redis_client(redis_url):
    if redis_url:
        return redis.Redis.from_url(redis_url)
    return redis.Redis()

You define two optional arguments, --flask-url and --redis-url, using syntax similar to Python’s argparse module. Then, you wrap these arguments in session-scoped fixtures, which you’ll be able to inject into your test functions and other fixtures. Specifically, your existing redis_client() fixture now takes advantage of the optional Redis URL.

Note: Because your end-to-end and integration tests rely on the same redis_client() fixture, you’ll be able to connect to a remote Redis server by specifying the --redis-url option in both types of tests.
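For comparison, here’s roughly what the same two options would look like with argparse from the standard library. This is only an analogy to show the similar syntax, not what pytest does internally:

```python
import argparse

parser = argparse.ArgumentParser()
# Same option names as in conftest.py. Both are optional.
parser.add_argument("--flask-url")
parser.add_argument("--redis-url")

# Simulate a command line that only specifies the Flask URL.
args = parser.parse_args(["--flask-url", "http://127.0.0.1:5000"])
print(args.flask_url)  # http://127.0.0.1:5000
print(args.redis_url)  # None
```

An omitted option defaults to None, which is the case that the if redis_url: check in your redis_client() fixture guards against before falling back to the default Redis client.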

This is how you can run your end-to-end test with pytest by specifying the URL of the Flask web server and the corresponding Redis server:

(page-tracker) $ python -m pytest -v test/e2e/ \
  --flask-url http://127.0.0.1:5000 \
  --redis-url redis://127.0.0.1:6379

In this case, you can access both Flask and Redis through localhost (127.0.0.1), but your application could be deployed to a geographically distributed environment consisting of multiple remote machines. When you execute this command locally, make sure that Redis is running and start your Flask server separately first:

(page-tracker) $ docker start redis-server

(page-tracker) $ flask --app page_tracker.app run

To improve the code quality, you can keep adding more types of tests to your application if you have the capacity. Still, that usually takes a team of full-time software quality assurance engineers. On the other hand, performing a code review or another type of static code analysis is fairly low-hanging fruit that can uncover surprisingly many problems. You’ll take a look at this process now.

Perform Static Code Analysis and Security Scanning

Now that your application works as expected, it’s time to perform static code analysis without executing the underlying code. It’s a common practice in the software development industry that helps developers identify potential software defects and security risks in their code. While some steps of static analysis can be automated, others are usually done manually through, for example, a peer review.

You’ll use the following automated tools, so please add them to your pyproject.toml file as optional dependencies:

# pyproject.toml

[build-system]

requires = ["setuptools>=67.0.0", "wheel"]

build-backend = "setuptools.build_meta"

[project]

name = "page-tracker"

version = "1.0.0"

dependencies = [

"Flask",

"redis",

]

[project.optional-dependencies]

dev = [

"bandit",

"black",

"flake8",

"isort",

"pylint",

"pytest",

"pytest-timeout",

"requests",

]

Don’t forget to reinstall and pin your dependencies afterward:

(page-tracker) $ python -m pip install --editable ".[dev]"

(page-tracker) $ python -m pip freeze --exclude-editable > constraints.txt

This will bring a few command-line utility tools into your virtual environment. First of all, you should clean up your code by formatting it consistently, sorting the import statements, and checking for PEP 8 compliance:

(page-tracker) $ python -m black src/ --check

would reformat /home/realpython/page-tracker/src/page_tracker/app.py

Oh no! 💥 💔 💥

1 file would be reformatted, 1 file would be left unchanged.

(page-tracker) $ python -m isort src/ --check

ERROR: /home/.../app.py Imports are incorrectly sorted and/or formatted.

(page-tracker) $ python -m flake8 src/

src/page_tracker/app.py:23:1: E302 expected 2 blank lines, found 1

You use black to flag any formatting inconsistencies in your code, isort to ensure that your import statements stay organized according to the official recommendation, and flake8 to check for any other PEP 8 style violations.

If you don’t see any output after running these tools, then it means there’s nothing to fix. On the other hand, if warnings or errors appear, then you can correct any reported problems by hand or let those tools do it automatically when you drop the --check flag:

(page-tracker) $ python -m black src/

reformatted /home/realpython/page-tracker/src/page_tracker/app.py

All done! ✨ 🍰 ✨

1 file reformatted, 1 file left unchanged.

(page-tracker) $ python -m isort src/

Fixing /home/realpython/page-tracker/src/page_tracker/app.py

(page-tracker) $ python -m flake8 src/

Without the --check flag, both black and isort go ahead and reformat the affected files in place without asking. Running these two commands also addresses PEP 8 compliance, as flake8 no longer returns any style violations.

Note: It’s useful to keep the code tidy by following common code style conventions across your team. This way, when one person updates a source file, team members won’t have to sort through changes to irrelevant parts of code, such as whitespace.

Once everything’s clean, you can lint your code to find potential code smells or ways to improve it:

(page-tracker) $ python -m pylint src/

When you run pylint against your web application’s source code, it may start complaining about more or less useful things. It generally emits messages belonging to a few categories, including:

- E: Errors

- W: Warnings

- C: Convention violations

- R: Refactoring suggestions

Each remark has a unique identifier, such as C0116, which you can suppress if you don’t find it helpful. You may include the suppressed identifiers in a global configuration file for a permanent effect or use a command-line switch to ignore certain errors on a given run. You can also add a specially formatted Python comment on a given line to account for special cases:

# src/page_tracker/app.py

import os
from functools import cache

from flask import Flask
from redis import Redis, RedisError

app = Flask(__name__)

@app.get("/")
def index():
    try:
        page_views = redis().incr("page_views")
    except RedisError:
        app.logger.exception("Redis error")  # pylint: disable=E1101
        return "Sorry, something went wrong \N{pensive face}", 500
    else:
        return f"This page has been seen {page_views} times."

@cache
def redis():
    return Redis.from_url(os.getenv("REDIS_URL", "redis://localhost:6379"))

In this case, you tell pylint to ignore a particular instance of the error E1101 without suppressing it completely. It’s a false positive because .logger is a dynamic attribute generated at runtime by Flask, which isn’t available during a static analysis pass.

Note: If you intend to use pylint as part of your automated continuous integration pipeline, then you may want to specify when it should exit with an error code, which would typically stop the subsequent steps of the pipeline. For example, you can configure it to always return a neutral exit code zero:

(page-tracker) $ python -m pylint src/ --exit-zero

This will never stop the pipeline from running, even when pylint finds some problems in the code. Alternatively, with --fail-under, you can specify an arbitrary score threshold at which pylint will exit with an error code.
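When pylint does exit with a non-zero status, that number encodes which message categories were emitted as a combination of bit flags. The sketch below decodes such a status, assuming the flag values listed in pylint’s documentation. The decode_exit_status() helper is hypothetical, and you should double-check the values against the version that you have installed:

```python
# Bit flags assumed from pylint's documented exit statuses.
PYLINT_FLAGS = {
    1: "fatal",
    2: "error",
    4: "warning",
    8: "refactor",
    16: "convention",
    32: "usage error",
}

def decode_exit_status(status: int) -> list[str]:
    """List the categories encoded in a pylint exit status."""
    return [name for bit, name in PYLINT_FLAGS.items() if status & bit]

# 20 is 4 + 16, meaning warnings and convention messages were issued.
print(decode_exit_status(20))  # ['warning', 'convention']
```

A pipeline step could use this kind of decoding to fail only on errors while tolerating convention messages.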

You’ll notice that pylint gives a score to your code and keeps track of it. When you fix a problem one way or another and run the tool again, then it’ll report a new score and tell you how much it has improved or worsened. Use your best judgment to decide whether issues that pylint reports are worth fixing.

Finally, it’s all too common to inadvertently leak sensitive data through your source code or expose other security vulnerabilities. It happens even to the best software engineers. In March 2023, GitHub disclosed that its RSA SSH private key had been briefly exposed in a public repository, which could’ve allowed attackers to impersonate the giant. To reduce the risk of such incidents, you should perform security or vulnerability scanning of your source code before deploying it anywhere.

To scan your code, you can use bandit, which you installed as an optional dependency earlier:

(page-tracker) $ python -m bandit -r src/

When you specify a path to a folder rather than to a file, then you must also include the -r flag to scan it recursively. At this point, bandit shouldn’t find any issues in your code. But, if you run it again after adding the following two lines at the bottom of your Flask application, then the tool will report issues with different severity levels:

# src/page_tracker/app.py

# ...

if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5000, debug=True)

This name-main idiom is a common pattern found in many Flask applications because it makes development more convenient, letting you run the Python module directly. On the other hand, it exposes Flask’s debugger, allowing the execution of arbitrary code, and binding to all network interfaces through the address 0.0.0.0 opens up your service to public traffic.

Therefore, to make sure that your Flask application is secure, you should always run bandit or a similar tool before deploying the code to production.
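If the difference between 0.0.0.0 and the loopback address feels abstract, then the standard library’s ipaddress module can describe both for you. This is just a small illustration and isn’t related to how bandit detects the problem:

```python
import ipaddress

all_interfaces = ipaddress.ip_address("0.0.0.0")
loopback = ipaddress.ip_address("127.0.0.1")

# 0.0.0.0 is the unspecified address. A server bound to it listens
# on every network interface of the machine.
print(all_interfaces.is_unspecified)  # True

# 127.0.0.1 is only reachable from the same machine.
print(loopback.is_loopback)           # True
```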

Okay. Your web application is covered with unit, integration, and end-to-end tests. On top of that, a number of automated tools have statically analyzed and modified its source code. Next, you’ll continue on the path to continuous integration by wrapping the application in a Docker container so that you can deploy the whole project to a remote environment or faithfully replicate it on a local computer.

Dockerize Your Flask Web Application

In this section, you’ll run your page tracker web application as a Docker container that can speak to Redis running in another container. Such a setup is useful for development and testing as well as for deploying your application to a remote environment. Even if you haven’t installed Python or Redis on your computer, you’ll still be able to run your project through Docker.

Understand the Docker Terminology

Dockerizing an application involves creating a Dockerfile, which is a declarative description of the desired state of your environment. It provides a template for the runtime environment, configuration, and all the dependencies and libraries required to run your application.

To breathe life into your application, you must build a Docker image based on that description. You can think of a Docker image as a snapshot of your operating system at a given time. When you share your Docker image with the world or within your company, others can reproduce precisely the same environment and run the same application as you do. This sidesteps the classic “but it works on my machine” issue.

A Docker image is the blueprint for Docker containers. Each Docker container is an instance of your Docker image. A container has an independent state and resources, including its own file system, environment variables, and network interfaces. A single Docker container usually runs a single process, making it ideal for hosting a particular microservice.

By adding more containers, you can temporarily scale up one or more microservices to handle a peak in traffic, which could be typical during the holiday season, for example. However, your microservices must be well-architected and remain stateless for this to be effective.

Note: Docker containers resemble virtual machines, such as the ones that you might run in VirtualBox or manage with Vagrant, but are much more lightweight and faster to spin up. As a result, you can run many more containers than virtual machines on your host machine simultaneously.

The reason for this is that containers have less overhead because they share your operating system’s kernel, while virtual machines run on a hypervisor that emulates the complete hardware stack. On the other hand, containers aren’t as secure, nor do they provide the same level of isolation as virtual machines.

Typical applications consist of multiple services running within isolated Docker containers that can communicate with each other. Your page tracker application has these two:

- Web service

- Redis service

You already know how to run Redis through Docker. Now, it’s time to sandbox your Flask web application within a Docker container to streamline the development and deployment process of both services.

Learn the Anatomy of a Dockerfile

To get started, you’ll define a relatively short Dockerfile that’s applicable in the development phase. Create a file named Dockerfile in your project root folder on the same level as the src/ subfolder and the pyproject.toml configuration file in the file hierarchy:

page-tracker/
│
├── src/
│   └── page_tracker/
│       ├── __init__.py
│       └── app.py
│
├── test/
│
├── venv/
│
├── constraints.txt
├── Dockerfile
└── pyproject.toml

You can name this file however you like, but sticking to the default naming convention will spare you from having to specify the filename each time you want to build an image. The default filename that Docker expects is Dockerfile with no file extension. Note that it starts with the capital letter D.

A Dockerfile is a plain text document that lists the steps necessary to assemble an image. It adheres to a specific format, which defines a fixed set of instructions for you to use.

Note: You must place each instruction in your Dockerfile on a separate line, but it’s not uncommon to see really long lines broken up several times with the line continuation character (\). In fact, cramming more than one operation into a single instruction is often desirable to take advantage of the caching mechanism that you’ll learn about now.

When you build an image from a Dockerfile, you’re relying on a sequence of layers. Each instruction that changes the file system, such as RUN, COPY, or ADD, creates a read-only layer on top of the previous layer, encapsulating some modification to the image’s underlying file system. Layers have globally unique identifiers, which allow Docker to store the layers in a cache. This has two main advantages:

- Speed: Docker can skip layers that haven’t changed since the last build and load them from the cache instead, which leads to significantly faster image builds.

- Size: Multiple images can share common layers, which reduces the disk space that they take up together. Other than that, having fewer layers contributes to a smaller image size.
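To build some intuition for why a change early in a Dockerfile invalidates everything after it, consider the toy model below. It’s a deliberately simplified sketch rather than Docker’s actual algorithm, which also takes things like the checksums of copied files into account. The layer_ids() function and the COPY instruction are hypothetical additions for illustration:

```python
import hashlib

def layer_ids(instructions):
    """Derive a chained ID for each instruction in a toy layer cache."""
    ids = []
    parent = ""
    for instruction in instructions:
        # Each ID depends on the instruction and on its parent's ID.
        digest = hashlib.sha256((parent + instruction).encode()).hexdigest()
        ids.append(digest[:12])
        parent = digest
    return ids

before = layer_ids([
    "FROM python:3.11.2-slim-bullseye",
    "RUN apt-get update && apt-get upgrade --yes",
    "COPY src/ src/",  # Hypothetical instruction for illustration
])
after = layer_ids([
    "FROM python:3.11.2-slim-bullseye",
    "RUN apt-get update",  # Changed instruction
    "COPY src/ src/",
])

# Only the layers before the change can be reused from the cache.
reused = [old == new for old, new in zip(before, after)]
print(reused)  # [True, False, False]
```

Even though the last instruction is identical in both builds, its identifier changes because it depends on the modified layer underneath it.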

Now that you know this layered anatomy of a Dockerfile, you can start adding instructions to it while learning about the best practices for creating efficient Docker images.

Choose the Base Docker Image

Apart from comments, parser directives, and ARG declarations, the first instruction in every Dockerfile must be FROM, which defines the base image to build your new image from. This means that you don’t have to start from scratch but can pick a suitable image that’s already built. For example, you can use an image that ships with the Python interpreter:

# Dockerfile

FROM python:3.11.2-slim-bullseye

Here, you use the official Python image named python, which is hosted on Docker Hub. Official images are built and maintained by the official maintainers of the respective language or technology. They don’t belong to any particular user or team on Docker Hub but are available in the global namespace, implicitly called library/, as opposed to more specialized variants, like circleci/python.

You also specify an optional label or tag name after the colon (:) to narrow down the specific version of the base image. You can browse all the available tags of the given Docker image by clicking on the Tags tab on the corresponding Docker Hub page.

Note: Tags aren’t mandatory, but including them in the FROM instruction is considered best practice. You should be as specific as possible to avoid unwanted surprises. If you omit the tag, then Docker will pull an image tagged latest, which might contain an unsuitable operating system or unexpected changes in the runtime affecting your application.

The tag 3.11.2-slim-bullseye means that your base image will be a slimmed-down variant of Debian Bullseye with only the bare essentials, letting you install any additional packages later as needed. This reduces the image’s size and speeds up its download time. The difference in size between the regular and slim variants of this image is a whopping eight hundred megabytes!

The tag also indicates that your base image will ship with Python 3.11.2 already installed, so you can start using it right away.
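To see how Docker reads such an image reference, here’s a minimal Python sketch. It assumes a simplified format without a registry host, port, or digest, and the parse_image_reference() helper is a hypothetical name made up for this illustration rather than anything that Docker provides:

```python
def parse_image_reference(reference: str) -> dict[str, str]:
    """Split a simplified Docker image reference into its parts.

    Assumption: the reference has no registry host, port, or digest.
    """
    name, _, tag = reference.partition(":")
    if "/" not in name:
        # Official images live in the implicit library/ namespace
        name = f"library/{name}"
    namespace, _, repository = name.partition("/")
    return {
        "namespace": namespace,
        "repository": repository,
        "tag": tag or "latest",  # A missing tag falls back to latest
    }


pinned = parse_image_reference("python:3.11.2-slim-bullseye")
unpinned = parse_image_reference("python")
third_party = parse_image_reference("circleci/python")

print(pinned)
print(unpinned)
print(third_party)
```

The pinned reference keeps its exact tag, while the other two silently fall back to latest, which is the kind of surprise that an explicit tag helps you avoid.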

The next step that you may want to take right after choosing a base image is to patch it with the most recent security updates and bug fixes, which may have been released since the image was published on Docker Hub:

# Dockerfile

FROM python:3.11.2-slim-bullseye

RUN apt-get update && \
    apt-get upgrade --yes

In Debian, you use the apt-get command to fetch the latest package list and upgrade any packages that have updates available. Notice that both commands are executed as part of one RUN instruction to minimize the number of layers in the file system, so you avoid taking up too much disk space.

Note: The order of instructions in your Dockerfile is important because it can affect how long it’ll take to build an image. In particular, you should place instructions whose layers often change toward the bottom of your Dockerfile because they’re most likely to invalidate all the subsequent layers in the cache.

Okay. You’ve chosen your base image and installed the most recent security updates. You’re almost ready to set up your Flask application, but there are still a few steps ahead.

Isolate Your Docker Image

Another good practice when working with Dockerfiles is to create and switch to a regular user without administrative privileges as soon as you don’t need them anymore. By default, Docker runs your commands as the superuser inside the container. Unless you configure user namespace remapping, that superuser has the same user ID as root on your host machine, so an attacker who manages to break out of the container could gain unrestricted access to your host system.

Here’s how you can avoid this potential security risk:

# Dockerfile

FROM python:3.11.2-slim-bullseye

RUN apt-get update && \
    apt-get upgrade --yes

RUN useradd --create-home realpython

USER realpython

WORKDIR /home/realpython

You create a new user named realpython and tell Docker to use that user in the Dockerfile from now on. You also set the current working directory to this user’s home directory so that you don’t have to specify the full file path explicitly in later commands.

Even though your Docker container will run a single Flask application, consider setting up a dedicated virtual environment inside the container itself. While you don’t need to worry about isolating multiple Python projects from each other, and Docker provides a reasonable insulation layer from your host machine, you still risk interfering with the container’s own system tools.

Unfortunately, many Linux distributions rely on the global Python installation to run smoothly. If you start installing packages directly into the global Python environment, then you open the door for potential version conflicts. That could even lead to breaking your system.

Note: If you’re still unconvinced about creating a virtual environment inside a Docker container, then maybe this warning message will change your mind:

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

You may see this after trying to install a Python package using the system’s global pip command on Debian or a derived distribution like Ubuntu.

The most reliable way of creating and activating a virtual environment within your Docker image is to directly modify its PATH environment variable:

# Dockerfile

FROM python:3.11.2-slim-bullseye

RUN apt-get update && \
    apt-get upgrade --yes

RUN useradd --create-home realpython

USER realpython

WORKDIR /home/realpython

ENV VIRTUALENV=/home/realpython/venv

RUN python3 -m venv $VIRTUALENV

ENV PATH="$VIRTUALENV/bin:$PATH"

First, you define a helper variable, VIRTUALENV, with the path to your project’s virtual environment, and then use Python’s venv module to create that environment there. However, rather than activating your new environment with a shell script, you prepend the environment’s bin/ folder to the PATH variable so that its python executable takes precedence over the global one.

Why? Activating your environment in the usual way would only be temporary and wouldn’t affect Docker containers derived from your image. Moreover, if you activated the virtual environment in a RUN instruction, then the activation would only last until the end of that instruction because each RUN instruction starts a new shell session.
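If you’d like to convince yourself that prepending a folder to PATH is enough to change which python gets picked up, then you can try a rough Python sketch like the one below. It assumes a POSIX system and uses a throwaway folder with a fake executable as a stand-in for the virtual environment’s bin/ folder, so none of these paths come from the actual Docker image:

```python
import os
import shutil
import stat
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as temp_dir:
    # Hypothetical stand-in for $VIRTUALENV/bin with a fake executable
    venv_bin = Path(temp_dir) / "venv" / "bin"
    venv_bin.mkdir(parents=True)
    fake_python = venv_bin / "python"
    fake_python.write_text("#!/bin/sh\necho 'virtual environment'\n")
    fake_python.chmod(fake_python.stat().st_mode | stat.S_IXUSR)

    # Rough equivalent of ENV PATH="$VIRTUALENV/bin:$PATH"
    original_path = os.environ.get("PATH", "")
    updated_path = f"{venv_bin}{os.pathsep}{original_path}"

    # The lookup walks the folders left to right and stops at the first match
    resolved = shutil.which("python", path=updated_path)
    points_to_venv = Path(resolved) == fake_python
    print(points_to_venv)
```

Because the lookup stops at the first matching folder, whatever sits at the front of PATH wins. No shell state is involved, which is why this approach carries over to every later instruction and to containers started from the image.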

Once you have the virtual environment for your project, you can install the necessary dependencies.

Cache Your Project Dependencies

Installing dependencies in a Dockerfile looks slightly different compared to working locally on your host machine. Normally, you’d install the dependencies and then your Python package immediately afterward. In contrast, when you build a Docker image, it’s worthwhile to split that process into two steps to leverage layer caching, reducing the total time it takes to build the image.

First, COPY the two files with the project metadata from your host machine into the Docker image:

# Dockerfile

FROM python:3.11.2-slim-bullseye

RUN apt-get update && \
    apt-get upgrade --yes

RUN useradd --create-home realpython

USER realpython

WORKDIR /home/realpython

ENV VIRTUALENV=/home/realpython/venv

RUN python3 -m venv $VIRTUALENV

ENV PATH="$VIRTUALENV/bin:$PATH"

COPY --chown=realpython pyproject.toml constraints.txt ./

You only copy the pyproject.toml and constraints.txt files, which contain information about the project’s dependencies, into the home directory of your realpython user in the Docker image. By default, files are owned by the superuser, so you may want to change their owner with --chown to a regular user that you created before. The --chown option is similar to the chown command, which stands for change owner.

Many Dockerfile examples that you can find online would copy everything in one go, but that’s wasteful! There might be a ton of extra files, such as your local Git repository with the project’s entire history, code editor settings, or other temporary files hanging around in your project root folder. Not only do they bloat the resulting image, but they also increase the likelihood of invalidating Docker’s layer cache prematurely.

Note: You should only copy the individual files that you need at the moment in your Dockerfile. Otherwise, even the slightest change in an unrelated file will cause your remaining layers to rebuild. Alternatively, you could define a .dockerignore file that works similarly to a .gitignore counterpart, but it’s safer to be explicit about what you want to copy.
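The following Python sketch illustrates why copying fewer files protects the cache. It’s only a rough model that assumes Docker decides whether a COPY layer is stale by looking at a checksum of the copied files’ contents. The copy_checksum() helper and the notes.txt file are hypothetical names invented for this example:

```python
import hashlib
import tempfile
from pathlib import Path


def copy_checksum(root: Path, patterns: list[str]) -> str:
    """Hash the names and contents of files under root matching the patterns."""
    digest = hashlib.sha256()
    matched = {path for pattern in patterns for path in root.glob(pattern)}
    for path in sorted(matched):
        if path.is_file():
            digest.update(path.relative_to(root).as_posix().encode())
            digest.update(path.read_bytes())
    return digest.hexdigest()


with tempfile.TemporaryDirectory() as temp_dir:
    project = Path(temp_dir)
    (project / "pyproject.toml").write_text("[project]\nname = 'page-tracker'\n")
    (project / "constraints.txt").write_text("")
    (project / "notes.txt").write_text("draft")

    explicit = ["pyproject.toml", "constraints.txt"]
    everything = ["**/*"]
    explicit_before = copy_checksum(project, explicit)
    everything_before = copy_checksum(project, everything)

    # Edit a file that has nothing to do with your dependencies
    (project / "notes.txt").write_text("draft, edited")

    explicit_unchanged = copy_checksum(project, explicit) == explicit_before
    everything_unchanged = copy_checksum(project, everything) == everything_before
    print(explicit_unchanged, everything_unchanged)
```

In this model, the checksum of the two explicitly listed files stays the same after the edit, whereas the checksum of the whole folder changes, which would force the layer and everything after it to rebuild.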

Another step that’s easy to miss is upgrading pip itself before installing your project dependencies. In rare cases, an old version of pip can actually prevent the latest versions of other packages from installing! In your case, it’s also worth upgrading setuptools, which you use as a build back end, to get the newest security patches.

You can combine the following two commands in one RUN instruction to install your dependencies:

# Dockerfile

FROM python:3.11.2-slim-bullseye

RUN apt-get update && \
    apt-get upgrade --yes

RUN useradd --create-home realpython

USER realpython

WORKDIR /home/realpython

ENV VIRTUALENV=/home/realpython/venv

RUN python3 -m venv $VIRTUALENV

ENV PATH="$VIRTUALENV/bin:$PATH"

COPY --chown=realpython pyproject.toml constraints.txt ./

RUN python -m pip install --upgrade pip setuptools && \
    python -m pip install --no-cache-dir -c constraints.txt ".[dev]"

You upgrade pip and setuptools to their most recent versions. Then, you install the third-party libraries that your project requires, including optional dependencies for development. You constrain their versions to ensure a consistent environment, and you tell pip to disable caching with --no-cache-dir. You won’t need those packages outside your virtual environment, so there’s no need to cache them. That way, you make your Docker image smaller.

Note: Because you installed dependencies before copying your source code into the Docker image, they’ll stay in a cached layer. Therefore, any changes to your source code won’t require reinstalling those dependencies.
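To picture how a change ripples through the cached layers, consider this simplified Python model. It assumes that the first instruction to miss the cache forces every instruction after it to run again. The layers_to_rebuild() function is a hypothetical helper, the instructions are abbreviated, and the last COPY stands in for a later step that adds your source code:

```python
def layers_to_rebuild(instructions: list[str], changed: str) -> list[str]:
    """Return the instructions that would run again in this simplified model."""
    first_miss = instructions.index(changed)
    # Everything from the first cache miss onward gets rebuilt
    return instructions[first_miss:]


dockerfile = [
    "FROM python:3.11.2-slim-bullseye",
    "RUN apt-get update && apt-get upgrade --yes",
    "COPY pyproject.toml constraints.txt ./",
    "RUN python -m pip install -c constraints.txt .",
    "COPY src/ src/",
]

# Editing your source code only affects the last layer
source_change = layers_to_rebuild(dockerfile, "COPY src/ src/")

# Editing the project metadata also triggers the pip install step
metadata_change = layers_to_rebuild(
    dockerfile, "COPY pyproject.toml constraints.txt ./"
)

print(len(source_change), len(metadata_change))
```

With this ordering, a source code change leaves the expensive pip install layer untouched. If the instructions were the other way around, then every code edit would reinstall all dependencies.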

Your Dockerfile is growing and becoming more complex, but don’t worry. There are only a few more steps to complete, so you’re almost there.

Run Tests as Part of the Build Process

Finally, it’s time to copy your source code into the Docker image and run your tests along with linters and other static analysis tools:

# Dockerfile

FROM python:3.11.2-slim-bullseye

RUN apt-get update && \
    apt-get upgrade --yes

RUN useradd --create-home realpython

USER realpython

WORKDIR /home/realpython

ENV VIRTUALENV=/home/realpython/venv

RUN python3 -m venv $VIRTUALENV

ENV PATH="$VIRTUALENV/bin:$PATH"

COPY --chown=realpython pyproject.toml constraints.txt ./

RUN python -m pip install --upgrade pip setuptools && \
    python -m pip install --no-cache-dir -c constraints.txt ".[dev]"

COPY --chown=realpython src/ src/

COPY --chown=realpython test/ test/

RUN python -m pip install . -c constraints.txt && \
    python -m pytest test/unit/ && \
    python -m flake8 src/ && \
    python -m isort src/ --check && \
    python -m black src/ --check --quiet && \
    python -m pylint src/ --disable=C0114,C0116,R1705 && \
    python -m bandit -r src/ --quiet

After copying the src/ and test/ folders from your host machine, you install the page-tracker package into the virtual environment. By baking the automated testing tools into the build process, you ensure that if any one of them returns a non-zero exit status code, then building your Docker image will fail. That’s precisely what you want when implementing a continuous integration pipeline.
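The short-circuit behavior of the && operator is what makes this work. Here’s a small Python sketch that mimics it, with the caveat that run_chain() is a hypothetical helper and the two commands are placeholders for a passing and a failing tool rather than the real linters:

```python
import subprocess
import sys


def run_chain(commands: list[list[str]]) -> int:
    """Run commands in order, stopping at the first failure like && does."""
    for command in commands:
        status = subprocess.run(command).returncode
        if status != 0:
            return status  # The remaining commands never run
    return 0


# Hypothetical stand-ins for a passing and a failing tool
passing = [sys.executable, "-c", "raise SystemExit(0)"]
failing = [sys.executable, "-c", "raise SystemExit(3)"]

all_green = run_chain([passing, passing])
one_red = run_chain([passing, failing, passing])

print(all_green, one_red)
```

The chain reports the exit status of the first command that fails. In a Dockerfile, a non-zero status from the RUN instruction is what makes the build stop.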

Note that you had to disable the low-severity pylint issues C0114, C0116, and R1705, which are of little importance now. Otherwise, they would prevent your Docker image from building successfully.

The reason for combining the individual commands in one RUN instruction is to reduce the number of layers to cache. Remember that the more layers you have, the bigger the resulting Docker image tends to be.

Note: At this point, you can’t execute the integration or end-to-end tests, which require Redis, because your Docker image only concerns the Flask application. You’ll be able to execute them after deploying your application to some environment.

When all the tests pass and none of the static analysis tools report any issues, your Docker image will be almost done. However, you still need to tell Docker what command to run when you create a new container from your image.

Specify the Command to Run in Docker Containers

The last step is specifying the command to execute inside each new Docker container derived from your Docker image. At this stage, you can start your web application on top of Flask’s built-in development server:

# Dockerfile

FROM python:3.11.2-slim-bullseye

RUN apt-get update && \
    apt-get upgrade --yes

RUN useradd --create-home realpython

USER realpython

WORKDIR /home/realpython

ENV VIRTUALENV=/home/realpython/venv

RUN python3 -m venv $VIRTUALENV

ENV PATH="$VIRTUALENV/bin:$PATH"

COPY --chown=realpython pyproject.toml constraints.txt ./

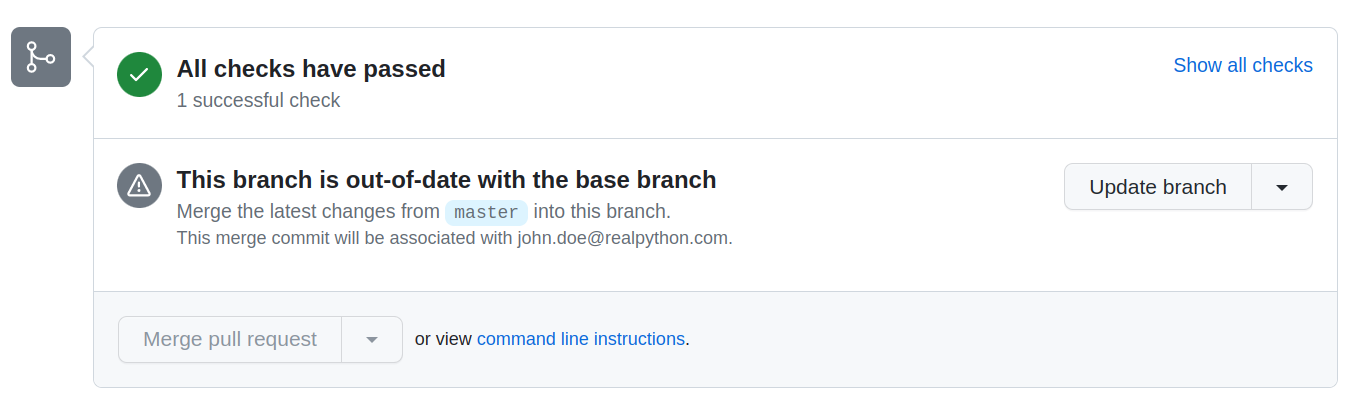

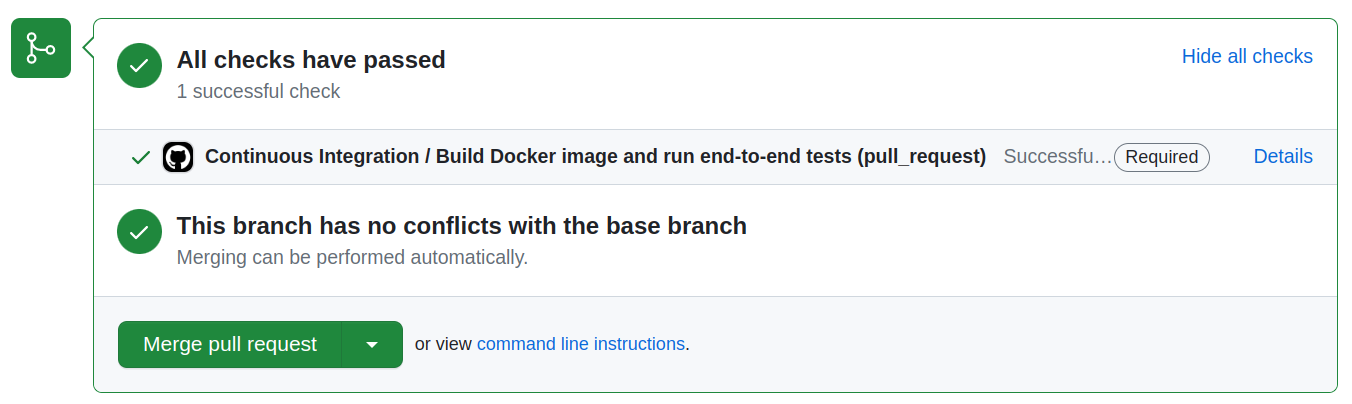

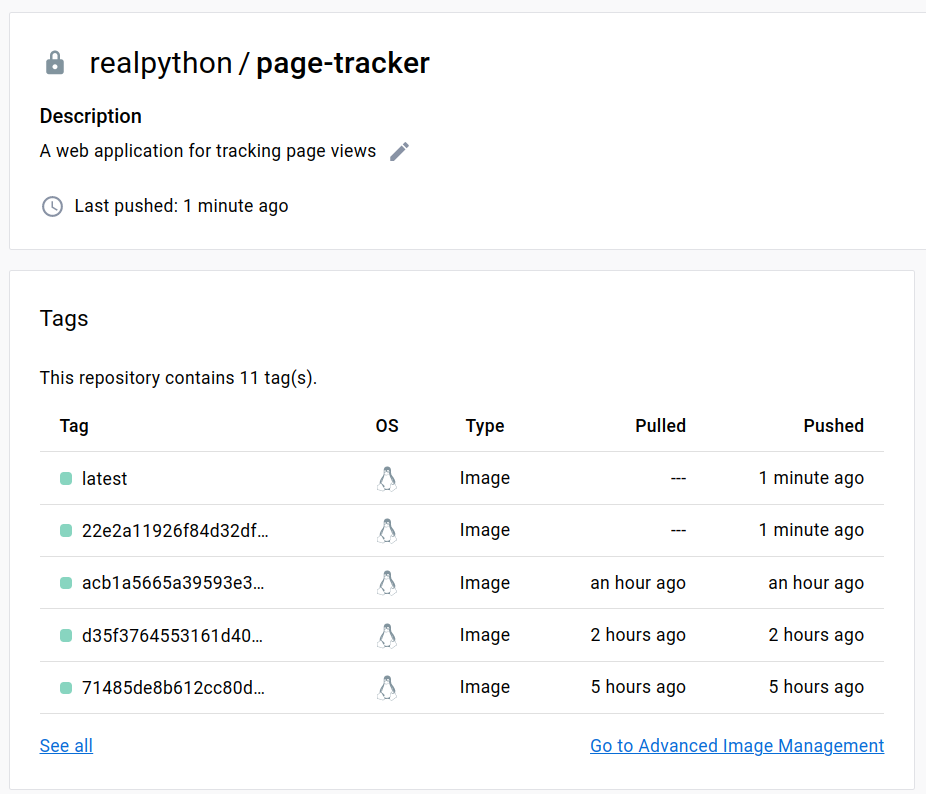

RUN python -m pip install --upgrade pip setuptools && \