Queues are the backbone of numerous algorithms found in games, artificial intelligence, satellite navigation, and task scheduling. They’re among the top abstract data types that computer science students learn early in their education. At the same time, software engineers often leverage higher-level message queues to achieve better scalability of a microservice architecture. Plus, using queues in Python is simply fun!

Python provides a few built-in flavors of queues that you’ll see in action in this tutorial. You’re also going to get a quick primer on the theory of queues and their types. Finally, you’ll take a look at some external libraries for connecting to popular message brokers available on major cloud platform providers.

In this tutorial, you’ll learn how to:

- Differentiate between various types of queues

- Implement the queue data type in Python

- Solve practical problems by applying the right queue

- Use Python’s thread-safe, asynchronous, and interprocess queues

- Integrate Python with distributed message queue brokers through libraries

To get the most out of this tutorial, you should be familiar with Python’s sequence types, such as lists and tuples, and the higher-level collections in the standard library.


Learning About the Types of Queues

A queue is an abstract data type that represents a sequence of elements arranged according to a set of rules. In this section, you’ll learn about the most common types of queues and their corresponding element arrangement rules. At the very least, every queue provides operations for adding and removing elements in constant time, or O(1) in Big O notation. That means the cost of either operation shouldn’t grow with the queue’s size.

Some queues may support other, more specific operations. It’s time to learn more about them!

Queue: First-In, First-Out (FIFO)

The word queue can have different meanings depending on the context. However, when people refer to a queue without using any qualifiers, they usually mean a FIFO queue, which resembles a line that you might find at a grocery checkout or tourist attraction:

Note that unlike the line in the photo, where people are clustering side by side, a queue in a strict sense will be single file, with people admitted one at a time.

FIFO is short for first-in, first-out, which describes the flow of elements through the queue. Elements in such a queue will be processed on a first-come, first-served basis, which is how most real-life queues work. To better visualize the element movement in a FIFO queue, have a look at the following animation:

Notice that, at any given time, a new element is only allowed to join the queue on one end called the tail—which is on the right in this example—while the oldest element must leave the queue from the opposite end. When an element leaves the queue, then all of its followers shift by exactly one position towards the head of the queue. These few rules ensure that elements are processed in the order of their arrival.

Note: You can think of elements in a FIFO queue as cars stopping at a traffic light.

Adding an element to the FIFO queue is commonly referred to as an enqueue operation, while retrieving one from it is known as a dequeue operation. Don’t confuse a dequeue operation with the deque (double-ended queue) data type that you’ll learn about later!

Enqueuing and dequeuing are two independent operations that may be taking place at different speeds. This fact makes FIFO queues the perfect tool for buffering data in streaming scenarios and for scheduling tasks that need to wait until some shared resource becomes available. For example, a web server flooded with HTTP requests might place them in a queue instead of immediately rejecting them with an error.

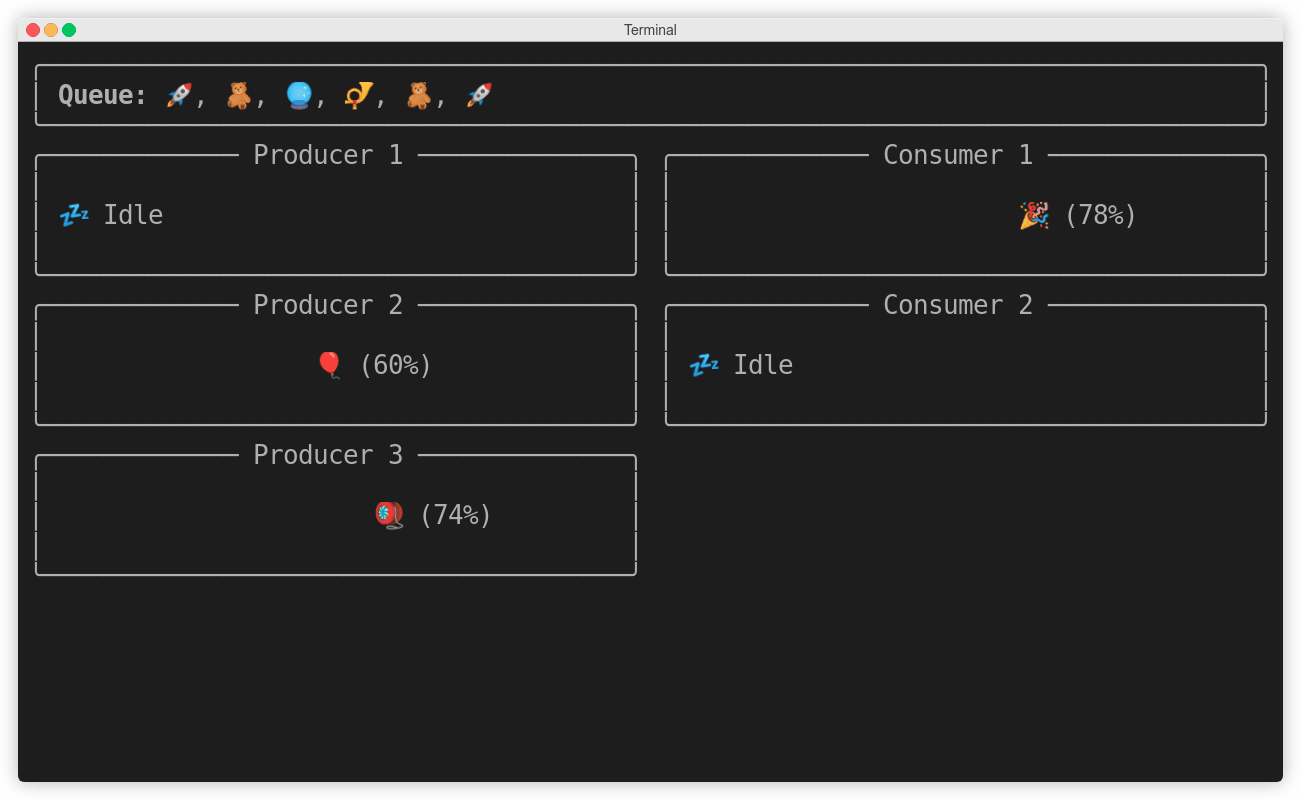

Note: In programs that leverage concurrency, a FIFO queue often becomes the shared resource itself to facilitate two-way communication between asynchronous workers. By temporarily locking the read or write access to its elements, a blocking queue can elegantly coordinate a pool of producers and a pool of consumers. You’ll find more information about this use case in later sections about queues in multithreading and multiprocessing.
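As a rough illustration of that coordination, here’s a minimal sketch with a single producer and a single consumer sharing a bounded queue.Queue from the standard library. The SENTINEL marker and the function names are assumptions made up for this example, and a real pool of workers would need a slightly more careful shutdown:

```python
import queue
import threading

SENTINEL = None  # hypothetical marker that tells the consumer to stop

def producer(buffer, items):
    for item in items:
        buffer.put(item)  # blocks while the bounded queue is full
    buffer.put(SENTINEL)

def consumer(buffer, results):
    while True:
        item = buffer.get()  # blocks while the queue is empty
        if item is SENTINEL:
            break
        results.append(item)

buffer = queue.Queue(maxsize=2)
results = []
threads = [
    threading.Thread(target=producer, args=(buffer, range(5))),
    threading.Thread(target=consumer, args=(buffer, results)),
]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(results)  # [0, 1, 2, 3, 4]
```

Because the queue holds at most two elements, the producer has to wait whenever it gets too far ahead of the consumer.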

Another point worth noting about the queue depicted above is that it can grow without bounds as new elements arrive. Picture a checkout line stretching to the back of the store during a busy shopping season! In some situations, however, you might prefer to work with a bounded queue that has a fixed capacity known up front. A bounded queue can help to keep scarce resources under control in two ways:

- By irreversibly rejecting elements that don’t fit

- By overwriting the oldest element in the queue

Under the first strategy, once a FIFO queue becomes saturated, it won’t take any more elements until others leave the queue to make some space. You can see an animated example of how this works below:

This queue has a capacity of three, meaning it can hold at most three elements. If you try to stuff more elements into it, then they’ll bounce off into oblivion without leaving any trace behind. At the same time, as soon as the number of elements occupying the queue decreases, the queue will start accepting new elements again.
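One way to approximate this rejecting behavior in Python is with a queue.Queue that has a maxsize, using its non-blocking .put_nowait() method. This is only a sketch, and the rejected list exists purely to make the dropped elements visible:

```python
import queue

bounded = queue.Queue(maxsize=3)
rejected = []  # only here to show which elements bounced off

for element in ["1st", "2nd", "3rd", "4th"]:
    try:
        bounded.put_nowait(element)
    except queue.Full:
        rejected.append(element)

print(rejected)  # ['4th']

print(bounded.get_nowait())  # 1st
bounded.put_nowait("5th")  # there's room again after dequeuing
print(bounded.qsize())  # 3
```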

Where would you find such a bounded FIFO queue in the wild?

Most digital cameras support the burst mode for continuously shooting a series of pictures as rapidly as possible in the hope of capturing at least one sharp photo of a moving object. Because saving data onto a memory card is the bottleneck, there’s usually an internal buffer that enables the camera to keep taking new pictures while earlier ones are being compressed and saved.

In older still cameras, the buffer was usually quite small and would fill up within a few seconds. When that happened, holding the shutter button down would no longer have any effect, or the pace of snapping new pictures would reduce noticeably. The camera’s maximum speed would fully recover only after draining the data buffer.

The second strategy for dealing with incoming elements in a bounded FIFO queue lets you implement a basic cache that forgets the oldest element without considering how many times or how frequently you’ve accessed it. A FIFO cache works best when newer elements are more likely to be reused than older ones. For example, you can use the FIFO cache eviction strategy to forcefully log out users who logged in a long time ago, regardless of whether they’re still active.

Note: For a brief comparison of other cache eviction strategies, head over to Caching in Python Using the LRU Cache Strategy.

Here’s a visual depiction of a bounded FIFO queue that can hold up to three elements but, unlike before, won’t prevent you from adding more elements:

When this queue reaches its maximum capacity, then adding a new element will first shift all existing elements by one position towards the head, discarding the oldest element and making room for the new one. Notice that the discarded element gets overwritten by its immediate neighbor.
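Python’s collections.deque behaves this way when you give it a maxlen. The following sketch assumes a capacity of three to match the description above:

```python
from collections import deque

recent = deque(maxlen=3)

for element in ["1st", "2nd", "3rd", "4th"]:
    recent.append(element)  # silently discards the oldest element when full

print(recent)  # deque(['2nd', '3rd', '4th'], maxlen=3)
```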

While the unbounded FIFO queue and its two bounded counterparts cover a wide range of use cases, they all share one common feature—that is, having separate entry and exit points. In the next section, you’ll learn about yet another popular type of queue, which has a slightly different layout.

Stack: Last-In, First-Out (LIFO)

A stack is a more specialized queue, also known as a LIFO or last-in, first-out queue. It works almost exactly like a regular queue, except that elements must now join and leave it through only one end called the top of the stack. The name top reflects the fact that real-world stacks tend to be vertical. A pile of plates in the kitchen sink is an example of a stack:

When the dishwasher is full, employees will push their dirty plates on the top of the stack after having a meal. Once there are no more clean plates in the cabinet, a hungry employee will have to pop the last dirty plate from the top of the stack and clean it with a sponge before microwaving their lunch.

If there’s a much-needed fork at the bottom of the stack, then some poor soul will have to dig through all of the plates above, one by one, to get to the desired utensil. Similarly, when the cleaning personnel comes to the office at the end of a business day, they’ll have to go through the plates in reverse order before getting to the last one at the bottom of the stack.

You’ll see this element movement in the following animated stack:

Even though the LIFO queue above is oriented horizontally, it preserves the general idea of a stack. New elements grow the stack by joining it only on the right end, as in the previous examples. This time, however, only the last element pushed onto the stack can leave it. The rest must wait until there are no more elements that have joined the stack later.

Stacks are widely used in computing for various purposes. Perhaps the most familiar context for a programmer is the call stack containing the functions in the order they were called. Python will reveal this stack to you through a traceback in case of an unhandled exception. It’s usually a bounded stack with a limited capacity, which you’ll find out about when too many recursive calls exhaust it and cause a stack overflow, reported in Python as a RecursionError.

In compiled languages with static type checking, local variables are allocated on the stack, which is a fast memory region. Stacks can help detect unmatched brackets in a code block or evaluate arithmetic expressions represented in reverse Polish notation (RPN). You can also use stacks to solve the Tower of Hanoi puzzle or keep track of the visited nodes in a graph or a tree traversed with the depth-first search (DFS) algorithm.
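To make the bracket-matching use case concrete, here’s a minimal sketch that uses a plain Python list as the stack. The PAIRS mapping and the is_balanced() name are assumptions chosen for this example:

```python
PAIRS = {")": "(", "]": "[", "}": "{"}  # closing bracket -> opening bracket

def is_balanced(text):
    stack = []
    for character in text:
        if character in PAIRS.values():
            stack.append(character)  # push an opening bracket
        elif character in PAIRS:
            # A closing bracket must match the most recently opened one.
            if not stack or stack.pop() != PAIRS[character]:
                return False
    return not stack  # leftover opening brackets mean a mismatch

print(is_balanced("f(a[0], {1: 2})"))  # True
print(is_balanced("(]"))  # False
```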

Note: When you replace the stack, or LIFO queue, with a FIFO queue in the DFS algorithm and make a few minor tweaks, then you’ll get the breadth-first search (BFS) algorithm almost for free! You’ll explore both algorithms in more detail later in this tutorial.

While a stack is a specialization of a queue, the deque or double-ended queue is a generalization that you can use as a basis to implement both FIFO and LIFO queues. You’ll see how deques work and where you can use them in the next section.

Deque: Double-Ended Queue

A double-ended queue or deque (pronounced deck) is a more generic data type that combines and extends the ideas behind the stack and the queue. It allows you to enqueue or dequeue elements from both ends in constant time at any given moment. Therefore, a deque can work as a FIFO or a LIFO queue, as well as anything in between and beyond.

Using the same example of a line of people as before, you can take advantage of a deque to model more sophisticated corner cases. In real life, the last person in the queue might get impatient and decide to leave the queue early or join another queue at a new checkout that has just opened. Conversely, someone who has booked a visit online for a particular date and time in advance may be allowed to join the queue at the front without waiting.

Below is an animation that shows an unbounded deque in action:

In this particular example, most elements generally follow one direction by joining the queue on the right and leaving it on the left, just like in a plain FIFO queue. However, some privileged elements are allowed to join the queue from the left end, while the last element can leave the queue through the opposite end.

Adding an element to a bounded deque that has already reached its full capacity will overwrite the element currently located at the opposite end. That feature might be handy for isolating the first few or the last few elements from a sequence. You may also want to stop anywhere in that sequence and then move to the left or right in smaller steps:

Suppose you were calculating the moving average of pixel intensities in a scan line of a raster image. Moving left or right would give you a preview of the few consecutive pixel values and dynamically calculate their average. This is more or less how convolution kernels work for applying filters in advanced image processing.
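A moving average like that could be sketched with a bounded deque acting as the sliding window. The moving_average() function and the sample scan_line values below are made up for illustration:

```python
from collections import deque

def moving_average(values, window_size):
    window = deque(maxlen=window_size)
    for value in values:
        window.append(value)  # the oldest value drops out automatically
        if len(window) == window_size:
            yield sum(window) / window_size

scan_line = [10, 20, 30, 40, 50]  # hypothetical pixel intensities
print(list(moving_average(scan_line, 3)))  # [20.0, 30.0, 40.0]
```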

Most deques support two additional operations called rotate left and rotate right, which shift the elements a specified number of places in one or the other direction in a circular fashion. Because the deque’s size remains unchanged, elements that would stick out get wrapped around at the ends, as in an analog car odometer:

When rotated right, the last element in the deque becomes first. On the other hand, when rotated left, the first element becomes the last one. Perhaps you could imagine this process more easily by arranging the deque’s elements in a circle so that both ends meet. Then, rotating right and left would correspond to a clockwise and counterclockwise rotation, respectively.
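With Python’s collections.deque, both rotations go through the .rotate() method, where a positive argument rotates right and a negative one rotates left. A short sketch:

```python
from collections import deque

digits = deque([1, 2, 3, 4, 5])

digits.rotate(1)  # rotate right: the last element becomes the first
print(digits)  # deque([5, 1, 2, 3, 4])

digits.rotate(-2)  # rotate left by two places
print(digits)  # deque([2, 3, 4, 5, 1])
```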

Rotations, combined with the deque’s core capabilities, open up interesting possibilities. For example, you could use a deque to implement a load balancer or a task scheduler working in a round-robin fashion. In a GUI application, you could use a deque to store recently opened files, allow a user to undo and redo their actions, or let the user navigate back and forth through their web browsing history.

As you can see, deques find many practical uses, especially in tracking the most recent activity. However, some problems will require you to take advantage of yet another type of queue, which you’ll read about next.

Priority Queue: Sorted From High to Low

A priority queue is different from those you’ve seen so far because it can’t store ordinary elements. Instead, each element must now have an associated priority to compare against other elements. The queue will maintain a sorted order, letting new elements join where necessary while shuffling the existing elements around if needed. When two elements are of equal priority, they’ll follow their insertion order.

Note: Make sure to choose a data type for your priorities whose values are comparable through the comparison operators, such as less than (<). For example, integers and timestamps would be fine, while complex numbers wouldn’t work for indicating priority because they don’t implement any relevant comparison operator.

This kind of queue works in a way that’s analogous to priority boarding on a plane:

Regular passengers will join the queue at the very end unless they’re accompanied by small children, have disabilities, or have earned loyalty points, in which case they’ll be fast-tracked to the front of the line. Business-class travelers usually enjoy the luxury of a separate, much smaller queue, but even they sometimes have to let the first-class travelers pass.

The animation below illustrates a sample flow of elements having three distinct priorities through an unbounded priority queue:

Blue squares represent the lowest priority, yellow triangles are higher in the hierarchy, and red circles are the most important. A new element gets inserted between one with a higher or equal priority and another one with a lower priority. This rule resembles the insertion sort algorithm, which happens to be stable, as elements with the same priority never swap their initial places.
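The insertion rule can be sketched with a plain list kept sorted from high to low priority. This is a naive, linear-time illustration of the rule rather than an efficient implementation, and insert_by_priority() is a hypothetical helper:

```python
def insert_by_priority(elements, priority, value):
    """Insert into a list kept sorted from high to low priority."""
    index = 0
    # Skip elements with a higher or equal priority so that ties
    # keep their arrival order.
    while index < len(elements) and elements[index][0] >= priority:
        index += 1
    elements.insert(index, (priority, value))

line = []
insert_by_priority(line, 1, "blue A")
insert_by_priority(line, 3, "red A")
insert_by_priority(line, 2, "yellow A")
insert_by_priority(line, 3, "red B")
insert_by_priority(line, 1, "blue B")

print([value for _, value in line])
# ['red A', 'red B', 'yellow A', 'blue A', 'blue B']
```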

You could use the priority queue to sort a sequence of elements by a given key or get the top few elements. However, that would be overkill because there are far more efficient sorting algorithms available. The priority queue is better suited for situations when elements can come and go dynamically. One such situation would be searching for the shortest path in a weighted graph using Dijkstra’s algorithm, which you’ll read about later.

Note: Even though the priority queue is conceptually a sequence, its most efficient implementation builds on top of the heap data structure, which is a kind of binary tree. Therefore, the terms heap and priority queue are sometimes used interchangeably.

That was a longish introduction to the theory and taxonomy of queues. Along the way, you’ve learned about FIFO queues, stacks (LIFO queues), deques, and priority queues. You’ve also seen the difference between bounded and unbounded queues, and you’ve gotten an idea about their potential applications. Now, it’s time to take a stab at implementing some of those queues yourself.

Implementing Queues in Python

First of all, should you implement a queue yourself in Python? In most cases, the answer to that question will be a decisive no. The language comes with batteries included, and queues are no exception. In fact, you’ll discover that Python has an abundance of queue implementations suited to solving various problems.

That being said, trying to build something from scratch can be an invaluable learning experience. You might also get asked to provide a queue implementation during a technical interview. So, if you find this topic interesting, then please read on. Otherwise, if you only seek to use queues in practice, then feel free to skip this section entirely.

Representing FIFO and LIFO Queues With a Deque

To represent a FIFO queue in the computer’s memory, you’ll need a sequence that has O(1), or constant time, performance for the enqueue operation on one end, and a similarly efficient dequeue operation on the other end. As you already know by now, a deque or double-ended queue satisfies those requirements. Plus, it’s universal enough to adapt for a LIFO queue as well.

However, because coding one would be out of scope of this tutorial, you’re going to leverage Python’s deque collection from the standard library.

Note: A deque is an abstract data type that you may implement in a few ways. Using a doubly linked list as the underlying implementation will ensure that accessing and removing elements from both ends will have the desired O(1) time complexity. If your deque has a fixed size, then you can use a circular buffer instead, letting you access any element in constant time. Unlike a linked list, a circular buffer is a random-access data structure.

Why not use a Python list instead of collections.deque as a building block for your FIFO queue?

Both sequences allow for enqueuing elements with their .append() methods rather cheaply, with a small reservation for lists, which will occasionally require copying all elements to a new memory location when their number exceeds a certain threshold.

Unfortunately, dequeuing an element from the front of a list with list.pop(0), or equivalently inserting one with list.insert(0, element), is far less efficient. Such operations always shift the remaining elements, resulting in a linear, or O(n), time complexity. In contrast, deque.popleft() and deque.appendleft() avoid that step altogether.
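If you’d like to see the difference for yourself, then a rough timing sketch along these lines may help. The size is arbitrary and the absolute numbers will vary between machines, so treat the output as indicative only:

```python
from collections import deque
from timeit import timeit

def drain_list(size):
    elements = list(range(size))
    return [elements.pop(0) for _ in range(size)]  # O(n) per pop

def drain_deque(size):
    elements = deque(range(size))
    return [elements.popleft() for _ in range(size)]  # O(1) per pop

size = 20_000  # arbitrary size chosen for illustration
list_seconds = timeit(lambda: drain_list(size), number=1)
deque_seconds = timeit(lambda: drain_deque(size), number=1)

print(f"list.pop(0):     {list_seconds:.4f} s")
print(f"deque.popleft(): {deque_seconds:.4f} s")
```

Both functions return the elements in the same order. Only the cost of removing them from the front differs.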

With that, you can proceed to define your custom Queue class based on Python’s deque collection.

Building a Queue Data Type

Now that you’ve chosen a suitable queue representation, you can fire up your favorite code editor, such as Visual Studio Code or PyCharm, and create a new Python module for the different queue implementations. You can call the file queues.py (plural form) to avoid a conflict with the similarly named queue (singular form) module already available in Python’s standard library.

Note: You’ll have a closer look at the built-in queue module in a later section devoted to thread-safe queues in Python.

Because you want your custom FIFO queue to support at least the enqueue and dequeue operations, go ahead and write a bare-bones Queue class that’ll delegate those two operations to deque.append() and deque.popleft() methods, respectively:

# queues.py

from collections import deque

class Queue:

    def __init__(self):

        self._elements = deque()

    def enqueue(self, element):

        self._elements.append(element)

    def dequeue(self):

        return self._elements.popleft()

This class merely wraps a collections.deque instance and calls it ._elements. The leading underscore in the attribute’s name indicates an internal bit of implementation, which only the class should access and modify. Such fields are sometimes called private because they’re not supposed to be visible outside of the class body.

You can test your FIFO queue by importing it from the local module within an interactive Python interpreter session:

>>> from queues import Queue

>>> fifo = Queue()

>>> fifo.enqueue("1st")

>>> fifo.enqueue("2nd")

>>> fifo.enqueue("3rd")

>>> fifo.dequeue()

'1st'

>>> fifo.dequeue()

'2nd'

>>> fifo.dequeue()

'3rd'

As expected, the enqueued elements come back to you in their original order. If you want, you may improve your class by making it iterable and able to report its length and optionally accept initial elements:

# queues.py

from collections import deque

class Queue:

    def __init__(self, *elements):

        self._elements = deque(elements)

    def __len__(self):

        return len(self._elements)

    def __iter__(self):

        while len(self) > 0:

            yield self.dequeue()

    def enqueue(self, element):

        self._elements.append(element)

    def dequeue(self):

        return self._elements.popleft()

A deque takes an optional iterable, which you can provide through a varying number of positional arguments, *elements, in your initializer method. By implementing the special .__iter__() method, you’ll make your class instances usable in a for loop, while implementing .__len__() will make them compatible with the len() function. The .__iter__() method above is a generator function, so calling it returns a generator iterator that yields elements lazily.

Note: The implementation of .__iter__() causes your custom queue to reduce its size by dequeuing elements from itself as you iterate over it.

Restart the Python interpreter and import your class again to see the updated code in action:

>>> from queues import Queue

>>> fifo = Queue("1st", "2nd", "3rd")

>>> len(fifo)

3

>>> for element in fifo:

...     print(element)

...

1st

2nd

3rd

>>> len(fifo)

0

The queue has three elements initially, but its length drops to zero after consuming all elements in a loop. Next up, you’ll implement a stack data type that’ll dequeue elements in reverse order.

Building a Stack Data Type

Building a stack data type is considerably more straightforward because you’ve already done the bulk of the hard work. Since most of the implementation will remain the same, you can extend your Queue class using inheritance and override the .dequeue() method to remove elements from the top of the stack:

# queues.py

# ...

class Stack(Queue):

    def dequeue(self):

        return self._elements.pop()

That’s it! Elements are now popped from the same end of the queue that you pushed them through before. You can quickly verify this in an interactive Python session:

>>> from queues import Stack

>>> lifo = Stack("1st", "2nd", "3rd")

>>> for element in lifo:

...     print(element)

...

3rd

2nd

1st

With an identical setup and test data as before, the elements return to you in reverse order, which is the expected behavior of a LIFO queue.

Note: In this tutorial, you use inheritance as a convenient mechanism to reuse code. However, the current class relationship isn’t semantically correct, because a stack isn’t a subtype of a queue. You could just as well define the stack first and let the queue extend it. In the real world, you should probably make both classes inherit from an abstract base class because they share the same interface.
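Here’s one way that alternative design might look. It’s a sketch under assumptions rather than the tutorial’s actual code, and the BaseQueue name is hypothetical:

```python
from abc import ABC, abstractmethod
from collections import deque

class BaseQueue(ABC):  # hypothetical name, not part of queues.py
    def __init__(self, *elements):
        self._elements = deque(elements)

    def __len__(self):
        return len(self._elements)

    def __iter__(self):
        while len(self) > 0:
            yield self.dequeue()

    def enqueue(self, element):
        self._elements.append(element)

    @abstractmethod
    def dequeue(self):
        """Remove and return the next element."""

class Queue(BaseQueue):
    def dequeue(self):
        return self._elements.popleft()  # oldest element first

class Stack(BaseQueue):
    def dequeue(self):
        return self._elements.pop()  # newest element first

print(list(Queue(1, 2, 3)))  # [1, 2, 3]
print(list(Stack(1, 2, 3)))  # [3, 2, 1]
```

With this layout, neither concrete class pretends to be a subtype of the other, and trying to instantiate BaseQueue directly raises a TypeError.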

In one-off scripts, you could probably get away with using a plain old Python list as a rudimentary stack when you don’t mind the extra overhead of having to copy the values from time to time:

>>> lifo = []

>>> lifo.append("1st")

>>> lifo.append("2nd")

>>> lifo.append("3rd")

>>> lifo.pop()

'3rd'

>>> lifo.pop()

'2nd'

>>> lifo.pop()

'1st'

Python lists are iterable out of the box. They can report their length and have a sensible textual representation. In the next section, you’ll choose them as the foundation for a priority queue.

Representing Priority Queues With a Heap

The last queue that you’ll implement in this tutorial will be a priority queue. Unlike a stack, the priority queue can’t extend the Queue class defined earlier, because it doesn’t belong to the same type hierarchy. The order of elements in a FIFO or LIFO queue is determined solely by the elements’ time of arrival. In a priority queue, it’s an element’s priority and the insertion order that together determine the ultimate position within the queue.

There are many ways to implement a priority queue, such as:

- An unordered list of elements and their priorities, which you search through every time before dequeuing the element with the highest priority

- An ordered list of elements and their priorities, which you sort every time you enqueue a new element

- An ordered list of elements and their priorities, which you keep sorted by finding the right spot for a new element using binary search

- A binary tree that maintains the heap invariant after the enqueue and dequeue operations

You can think of a priority queue as a list that needs to be sorted every time a new element arrives so that you’ll be able to remove the last one with the highest priority when performing the dequeue operation. Alternatively, you could ignore the element order until removing one with the highest priority, which you could find using the linear search algorithm.

Looking up an element in an unordered list has O(n) time complexity. Sorting the entire queue would be even more expensive, especially when exercised often. Python’s list.sort() method employs an algorithm called Timsort, which has O(n log(n)) worst-case time complexity. Inserting an element with bisect.insort() is slightly better because it can take advantage of an already sorted list, but the gain is offset by the slow insertion that follows.

Fortunately, you can be smart about keeping the elements sorted in a priority queue by using a heap data structure under the hood. It provides a more efficient implementation than those listed earlier. Here’s a table with a quick comparison of the time complexity for the enqueue and dequeue operations provided by those different implementations:

| Implementation | Enqueue | Dequeue |
|---|---|---|
| Find Max on Dequeue | O(1) | O(n) |
| Sort on Enqueue | O(n log(n)) | O(1) |
| Bisect and Insert on Enqueue | O(n) | O(1) |
| Push onto a Heap on Enqueue | O(log(n)) | O(log(n)) |

The heap has the best overall performance for large data volumes. Although using the bisection method to find the right spot for a new element is O(log(n)), the actual insertion of that element is O(n), making it less desirable than a heap.
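For comparison, the bisect-and-insert approach could be sketched as follows. The list stays sorted in ascending order, so the element with the highest priority sits at the end, where list.pop() can remove it cheaply. The sample messages are made up for this example:

```python
from bisect import insort

elements = []

for priority, value in [(2, "wipers"), (1, "radio"), (3, "brakes")]:
    # Binary search finds the spot in O(log(n)), but shifting the
    # elements that follow makes the insertion O(n) overall.
    insort(elements, (priority, value))

print(elements)  # [(1, 'radio'), (2, 'wipers'), (3, 'brakes')]
print(elements.pop())  # (3, 'brakes')
```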

Python has the heapq module, which conveniently provides a few functions that can turn a regular list into a heap and manipulate it efficiently. The two functions that’ll help you build a priority queue are:

- heapq.heappush()

- heapq.heappop()

When you push a new element onto a non-empty heap, it’ll end up in the right spot, maintaining the heap invariant. Note that this doesn’t necessarily mean that the resulting elements will become sorted:

>>> from heapq import heappush

>>> fruits = []

>>> heappush(fruits, "orange")

>>> heappush(fruits, "apple")

>>> heappush(fruits, "banana")

>>> fruits

['apple', 'orange', 'banana']

Fruit names in the resulting heap in the example above don’t follow alphabetical order. If you pushed them in a different order, though, they could!

The point of a heap isn’t so much about sorting elements but rather keeping them in a certain relationship to allow for quick lookup. What really matters is that the first element on a heap always has the smallest (min-heap) or the largest (max-heap) value, depending on how you define the condition for the mentioned relationship. Python’s heaps are min-heaps, which means that the first element has the smallest value.
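To see that relationship in action, you can check the heap invariant by hand. In a list-based binary heap, the parent of the element at index i lives at index (i - 1) // 2. The satisfies_min_heap() helper below is a hypothetical function written just for this sketch:

```python
from heapq import heapify

def satisfies_min_heap(heap):
    # Every element must be greater than or equal to its parent.
    return all(
        heap[(child - 1) // 2] <= heap[child]
        for child in range(1, len(heap))
    )

numbers = [5, 3, 8, 1, 9, 2]
heapify(numbers)  # rearranges the list in place

print(numbers[0])  # 1
print(satisfies_min_heap(numbers))  # True
print(satisfies_min_heap([3, 1, 2]))  # False
```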

When you pop an element from a heap, you’ll always get the first one, while the remaining elements might shuffle a little bit:

>>> from heapq import heappop

>>> heappop(fruits)

'apple'

>>> fruits

['banana', 'orange']

Notice how the banana and orange swapped places to ensure the first element continues to be the smallest. When you tell Python to compare two string objects by value, it starts looking at their characters pairwise from left to right and checks each pair one by one. The character with a lower Unicode code point is considered smaller, which resolves the word order.

Now, how do you throw priorities into the mix? The heap compares elements by value rather than by their priority, after all. To work around this, you can leverage Python’s tuple comparison, which takes into account the tuple’s components, looking from left to right until the outcome is known:

>>> person1 = ("John", "Brown", 42)

>>> person2 = ("John", "Doe", 42)

>>> person3 = ("John", "Doe", 24)

>>> person1 < person2

True

>>> person2 < person3

False

Here, you have three tuples representing different people. Each has a first name, last name, and age. Python determines that person1 should go before person2 based on their last names since they share the same first name, but Python doesn’t look at their ages because the ordering is already known. The age becomes important in the second comparison between person2 and person3, who happen to have the same first and last names.

You can enforce a prioritized order on the heap by storing tuples whose first element is a priority. However, there will be a few fine details that you need to be careful about. You’ll learn more about them in the next section.

Building a Priority Queue Data Type

Imagine you were building software for an automotive company. Modern vehicles are practically computers on wheels, which leverage a controller area network (CAN) bus to broadcast messages about various events going on in your car, such as unlocking the doors or inflating an airbag. Clearly, some of those events are more important than others and should be prioritized accordingly.

Fun Fact: You can download a mobile app for your smartphone, such as Torque, that’ll let you connect to the CAN bus of your car over Bluetooth or an ad hoc WiFi network through a small scanner device hooked up to your car’s on-board diagnostics (OBD) port.

This setup will allow you to monitor your vehicle’s parameters in real time, even if they’re not exposed on the dashboard! This includes things like coolant temperature, battery voltage, miles per gallon, and emissions. Moreover, you’ll be able to check if your car’s ECUs report any fault codes.

It’s okay to miss a faulty headlight message or wait a little longer for the audio volume level to go down. However, when you press the brake pedal, you expect it to have an immediate effect because it’s a safety-critical subsystem. Each message has a priority in the CAN bus protocol, which tells the intermediate units whether they should relay the message further or disregard it completely.

Even though this is an oversimplification of the problem, you can think of the CAN bus as a priority queue that sorts the messages according to their importance. Now, return to your code editor and define the following class in the Python module that you created before:

# queues.py

from collections import deque

from heapq import heappop, heappush

# ...

class PriorityQueue:

    def __init__(self):

        self._elements = []

    def enqueue_with_priority(self, priority, value):

        heappush(self._elements, (priority, value))

    def dequeue(self):

        return heappop(self._elements)

It’s a basic priority queue implementation, which defines a heap of elements using a Python list and two methods that manipulate it. The .enqueue_with_priority() method takes two arguments, a priority and a corresponding value, which it then wraps in a tuple and pushes onto the heap using the heapq module. Notice that the priority comes before the value to take advantage of how Python compares tuples.

Unfortunately, there are a few problems with the above implementation that become apparent when you try to use it:

>>> from queues import PriorityQueue

>>> CRITICAL = 3

>>> IMPORTANT = 2

>>> NEUTRAL = 1

>>> messages = PriorityQueue()

>>> messages.enqueue_with_priority(IMPORTANT, "Windshield wipers turned on")

>>> messages.enqueue_with_priority(NEUTRAL, "Radio station tuned in")

>>> messages.enqueue_with_priority(CRITICAL, "Brake pedal depressed")

>>> messages.enqueue_with_priority(IMPORTANT, "Hazard lights turned on")

>>> messages.dequeue()

(1, 'Radio station tuned in')

You defined three priority levels: critical, important, and neutral. Next, you instantiated a priority queue and used it to enqueue a few messages with those priorities. However, instead of dequeuing the message with the highest priority, you got a tuple corresponding to the message with the lowest priority.

Note: Ultimately, it’s up to you how you want to define the order of your priorities. In this tutorial, a lower priority corresponds to a lower numeric value, while a higher priority has a greater value.

That said, it can be more convenient to reverse this order in some cases. For example, in Dijkstra’s shortest path algorithm, you’ll want to prioritize paths with a smaller total cost over those with a high cost. To handle such a situation, you’ll implement another class later.

Because Python’s heap is a min-heap, its first element always has the lowest value. To fix this, you can flip the sign of a priority when pushing a tuple onto the heap, making the priority a negative number so that the highest one becomes the lowest:

# queues.py

# ...

class PriorityQueue:
    def __init__(self):
        self._elements = []

    def enqueue_with_priority(self, priority, value):
        heappush(self._elements, (-priority, value))

    def dequeue(self):
        return heappop(self._elements)[1]

With this small change, you’ll push critical messages ahead of important and neutral ones. Additionally, when performing a dequeue operation, you’ll unpack the value from the tuple by accessing its second component, located at index one using the square bracket ([]) syntax.
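
The sign-flipping trick can be tried in isolation. The following sketch, which uses made-up priorities and plain integers instead of tuples, shows how a min-heap behaves like a max-heap once you negate the values on the way in and on the way out:

```python
from heapq import heappop, heappush

priorities = [2, 1, 3, 2]

heap = []
for priority in priorities:
    heappush(heap, -priority)  # Store the negated priority

# Popping yields the smallest negated value, which is the highest priority.
# Negating it again recovers the original number.
drained = [-heappop(heap) for _ in range(len(priorities))]
print(drained)  # [3, 2, 2, 1]
```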

Now, if you head back to your interactive Python interpreter, import the updated code, and enqueue the same messages once again, then they’ll come back to you in a more sensible order:

>>> messages.dequeue()

'Brake pedal depressed'

>>> messages.dequeue()

'Hazard lights turned on'

>>> messages.dequeue()

'Windshield wipers turned on'

>>> messages.dequeue()

'Radio station tuned in'

You get the critical message first, followed by the two important ones, and then the neutral message. So far, so good, right? However, there are two problems with your implementation. One of them you can already see in the output, while the other will only manifest itself under specific circumstances. Can you spot these problems?

Handling Corner Cases in Your Priority Queue

Your queue can correctly order elements by priority, but at the same time, it violates sort stability when comparing elements with equal priorities. This means that in the example above, flashing the hazard lights takes precedence over engaging the windshield wipers, even though this ordering doesn’t follow the chronology of events. Both messages have the same priority, important, so the queue should sort them by their insertion order.

To be clear, this is a direct consequence of tuple comparison in Python, which moves to the next component in a tuple if the earlier ones didn’t resolve the comparison. So, if two messages have equal priorities, then Python will compare them by value, which would be a string in your example. Strings follow the lexicographic order, in which the word Hazard comes before the word Windshield, hence the inconsistent order.
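
You can reproduce this fallback without a heap. In the sketch below, the two tuples mirror what the queue stores for the two important messages:

```python
important = 2

hazard = (-important, "Hazard lights turned on")
wipers = (-important, "Windshield wipers turned on")

# The priorities are equal, so Python falls through to the strings.
assert "Hazard" < "Windshield"
assert hazard < wipers

# A min-heap would therefore pop the hazard lights message first,
# even though the wipers message was enqueued earlier.
first = min(wipers, hazard)
print(first[1])  # Hazard lights turned on
```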

There’s another problem related to that, which would completely break the tuple comparison in rare cases. Specifically, it’d fail if you tried to enqueue an element that doesn’t support any comparison operators, such as an instance of a custom class, and the queue already contained at least one element with the same priority that you wanted to use. Consider the following data class to represent messages in your queue:

>>> from dataclasses import dataclass

>>> @dataclass
... class Message:
...     event: str
...
>>> wipers = Message("Windshield wipers turned on")
>>> hazard_lights = Message("Hazard lights turned on")

>>> wipers < hazard_lights
Traceback (most recent call last):
  ...
TypeError: '<' not supported between instances of 'Message' and 'Message'

Message objects might be more convenient to work with than plain strings, but unlike strings, they aren’t comparable unless you tell Python how to perform the comparison. As you can see, custom class instances don’t provide the implementation for the less than (<) operator by default.
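
One way to tell Python how to perform the comparison is the order=True flag of the @dataclass decorator, which generates the comparison methods from the fields in their declaration order. This tutorial doesn’t take that route, and the OrderedMessage name below is made up for illustration:

```python
from dataclasses import dataclass


@dataclass(order=True)
class OrderedMessage:  # Hypothetical name, not part of queues.py
    event: str


wipers = OrderedMessage("Windshield wipers turned on")
hazard_lights = OrderedMessage("Hazard lights turned on")

# Instances now compare like tuples of their fields, here (event,).
print(wipers < hazard_lights)  # False
print(hazard_lights < wipers)  # True
```

Making the values comparable would avoid the TypeError, but equal-priority messages would still be ordered alphabetically rather than by their time of arrival.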

As long as you enqueue messages with different priorities, Python won’t compare them by value:

>>> messages = PriorityQueue()

>>> messages.enqueue_with_priority(CRITICAL, wipers)

>>> messages.enqueue_with_priority(IMPORTANT, hazard_lights)

For example, when you enqueue a critical message and an important message, Python determines their order unambiguously by looking at the corresponding priorities. However, as soon as you try enqueuing another critical message, you’ll get a familiar error:

>>> messages.enqueue_with_priority(CRITICAL, Message("ABS engaged"))

Traceback (most recent call last):

...

TypeError: '<' not supported between instances of 'Message' and 'Message'

This time around, the comparison fails because two of the messages are of equal priority and Python falls back to comparing them by value, which you haven’t defined for your custom Message class instances.

You can solve both problems—that is, the sort instability and the broken tuple comparison—by introducing another component to the elements stored on the heap. This extra component should be comparable and represent the time of arrival. When placed between the element’s priority and value in a tuple, it’ll resolve the order if two elements have the same priority, regardless of their values.

The most straightforward way of representing the arrival time in a priority queue is perhaps a monotonically increasing counter. In other words, you want to count the number of enqueue operations performed without considering the potential dequeue operations that might be taking place. Then, you’ll store the current value of the counter in every enqueued element to reflect the state of your queue at that instant.

You can use the count() iterator from the itertools module to count from zero to infinity in a concise way:

# queues.py

from collections import deque
from heapq import heappop, heappush
from itertools import count

# ...

class PriorityQueue:
    def __init__(self):
        self._elements = []
        self._counter = count()

    def enqueue_with_priority(self, priority, value):
        element = (-priority, next(self._counter), value)
        heappush(self._elements, element)

    def dequeue(self):
        return heappop(self._elements)[-1]

The counter gets initialized when you create a new PriorityQueue instance. Whenever you enqueue a value, the counter increments and retains its current state in a tuple pushed onto the heap. So, if you enqueue another value with the same priority later, then the earlier one will take precedence because you enqueued it with a smaller counter.
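
Here’s a standalone sketch of the same idea, which works directly on a heap rather than through your PriorityQueue class. The Unorderable class and the short labels are made up for illustration:

```python
from heapq import heappop, heappush
from itertools import count


class Unorderable:
    """A stand-in for any value without comparison operators."""

    def __init__(self, label):
        self.label = label


counter = count()
heap = []
for priority, label in [(2, "wipers"), (1, "radio"), (3, "brakes"), (2, "hazard")]:
    # The counter sits between the priority and the value, so the
    # comparison never reaches the unorderable third component.
    heappush(heap, (-priority, next(counter), Unorderable(label)))

order = [heappop(heap)[-1].label for _ in range(4)]
print(order)  # ['brakes', 'wipers', 'hazard', 'radio']
```

Both messages with priority 2 come out in their insertion order, and no TypeError is raised, because every counter value is unique.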

The last tiny detail to keep in mind after introducing this extra counter component into the tuple is updating the popped value index during a dequeue operation. Because elements are tuples with three components now, you ought to return the value located at index two instead of one. However, it’d be safer to use negative one (-1) as the index, which always points to the last component of the tuple, regardless of its length.

Your priority queue is almost ready, but it’s missing the two special methods, .__len__() and .__iter__(), which you implemented in the other two queue classes. While you can’t reuse their code through inheritance, as the priority queue is not a subtype of the FIFO queue, Python provides a powerful mechanism that lets you work around that issue.

Refactoring the Code Using a Mixin Class

To reuse code across unrelated classes, you can identify their least common denominator and then extract that code into a mixin class. A mixin class is like a spice. It can’t stand on its own, so you wouldn’t typically instantiate it, but it can add that extra flavor once you mix it into another class. Here’s how it would work in practice:

# queues.py

# ...

class IterableMixin:
    def __len__(self):
        return len(self._elements)

    def __iter__(self):
        while len(self) > 0:
            yield self.dequeue()

class Queue(IterableMixin):
    # ...

class Stack(Queue):
    # ...

class PriorityQueue(IterableMixin):
    # ...

You moved the .__len__() and .__iter__() methods from the Queue class to a separate IterableMixin class and made the former extend that mixin. You also made the PriorityQueue inherit from the same mixin class. How is this different from the standard inheritance?

Unlike programming languages like Scala that support mixins directly with traits, Python uses multiple inheritance to implement the same concept. However, extending a mixin class is semantically different from extending a regular class, because it’s no longer a form of type specialization. To emphasize this difference, some people call it the inclusion of a mixin class rather than pure inheritance.

Notice that your mixin class refers to an ._elements attribute, which you haven’t defined yet! It’s provided by the concrete classes, such as Queue and PriorityQueue, that you throw into the mix much later. Mixins are great for encapsulating behavior rather than state, much like default methods in Java interfaces. By composing a class with one or more mixins, you can change or augment its original behavior.
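
To illustrate how a mixin borrows state from its host class, consider this small sketch. The SizedMixin, Inbox, and Backlog names are hypothetical and aren’t part of your queues module:

```python
from collections import deque


class SizedMixin:  # Hypothetical mixin, for illustration only
    def __len__(self):
        # Relies on the host class to define ._elements
        return len(self._elements)

    def is_empty(self):
        return len(self) == 0


class Inbox(SizedMixin):
    def __init__(self, *elements):
        self._elements = deque(elements)


class Backlog(SizedMixin):
    def __init__(self, *elements):
        self._elements = list(elements)


inbox = Inbox("a", "b")
backlog = Backlog()
print(len(inbox), inbox.is_empty())      # 2 False
print(len(backlog), backlog.is_empty())  # 0 True
```

The two host classes store their elements in different containers, yet they share the same behavior. Calling len() on a bare SizedMixin instance would fail with an AttributeError, because nothing provides the ._elements attribute.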

Here’s the complete source code of the queues module at this point:

# queues.py

from collections import deque
from heapq import heappop, heappush
from itertools import count

class IterableMixin:
    def __len__(self):
        return len(self._elements)

    def __iter__(self):
        while len(self) > 0:
            yield self.dequeue()

class Queue(IterableMixin):
    def __init__(self, *elements):
        self._elements = deque(elements)

    def enqueue(self, element):
        self._elements.append(element)

    def dequeue(self):
        return self._elements.popleft()

class Stack(Queue):
    def dequeue(self):
        return self._elements.pop()

class PriorityQueue(IterableMixin):
    def __init__(self):
        self._elements = []
        self._counter = count()

    def enqueue_with_priority(self, priority, value):
        element = (-priority, next(self._counter), value)
        heappush(self._elements, element)

    def dequeue(self):
        return heappop(self._elements)[-1]

With the three queue classes in place, you can finally apply them to solving a real problem!

Using Queues in Practice

As mentioned in the introduction to this tutorial, queues are the backbone of many important algorithms. One particularly interesting area of application is visiting nodes in a graph, which might represent a map of roads between cities, for example. Queues can be useful in finding the shortest or otherwise optimal path between two places.

In this section, you’re going to use the queues that you just built to implement classic graph traversal algorithms. There are numerous ways to represent graphs in code and an equal number of Python libraries that already do that well. For the sake of simplicity, you’ll take advantage of the networkx and pygraphviz libraries, as well as the widely used DOT graph description language.

You can install those libraries into your virtual environment using pip:

(venv) $ python -m pip install networkx pygraphviz

Alternatively, you can install all dependencies needed for the rest of this tutorial in one step by following the instructions in the README file that you’ll find in the supplemental materials. Note that installing pygraphviz can be a bit challenging because it requires a C compiler toolchain. Check the official installation guide for more details.

Sample Data: Road Map of the United Kingdom

Once you’ve installed the required libraries, you’ll read a weighted and undirected graph of the cities in the United Kingdom from a DOT file, which you can find in the accompanying materials.

This graph has 70 nodes representing UK cities and 137 edges weighted by the estimated distance in miles between the connected cities:

Note that the graph depicted above is a simplified model of the road network in the UK, as it doesn’t account for the road types, their capacity, speed limits, traffic, or bypasses. It also ignores the fact that there’s usually more than one road connecting two cities. So, the shortest path determined by satellite navigation or Google Maps will most likely differ from the one that you’ll find with queues in this tutorial.

That said, the graph above represents actual road connections between the cities as opposed to straight lines as the crow flies. Even though the edges might look like straight lines in the visualization, they most certainly aren’t in real life. Graphically, you can represent the same graph in a multitude of ways.

Next up, you’ll use the networkx library to read this graph into Python.

Object Representation of the Cities and Roads

While networkx can’t read DOT files by itself, the library provides a few helper functions that delegate this task to other third-party libraries. You’ll use pygraphviz to read the sample DOT file in this tutorial:

>>> import networkx as nx

>>> print(nx.nx_agraph.read_dot("roadmap.dot"))

MultiGraph named 'Cities in the United Kingdom' with 70 nodes and 137 edges

While pygraphviz might be a bit challenging to install on some operating systems, it’s by far the fastest and most compliant with the DOT format’s advanced features. By default, networkx represents graph nodes using textual identifiers that can optionally have an associated dictionary of attributes:

>>> import networkx as nx
>>> graph = nx.nx_agraph.read_dot("roadmap.dot")
>>> graph.nodes["london"]
{'country': 'England',
 'latitude': '51.507222',
 'longitude': '-0.1275',
 'pos': '80,21!',
 'xlabel': 'City of London',
 'year': 0}

For example, the "london" string maps to a corresponding dictionary of key-value pairs. The pos attribute, which contains normalized coordinates after applying the Mercator projection to latitude and longitude, is respected by Graphviz for the placement of nodes in the graph visualization. The year attribute indicates when a city got its status. When equal to zero, it means time immemorial.

Because that isn’t the most convenient way to think about graphs, you’ll define a custom data type representing a city in your road map. Go ahead, create a new file called graph.py and implement the following class in it:

# graph.py

from typing import NamedTuple

class City(NamedTuple):
    name: str
    country: str
    year: int | None
    latitude: float
    longitude: float

    @classmethod
    def from_dict(cls, attrs):
        return cls(
            name=attrs["xlabel"],
            country=attrs["country"],
            year=int(attrs["year"]) or None,
            latitude=float(attrs["latitude"]),
            longitude=float(attrs["longitude"]),
        )

You extend a named tuple to ensure that your node objects are hashable, which is required by networkx. You could use a properly configured data class instead, but a named tuple is hashable out of the box. It’s also comparable, which you might need later to determine the graph traversal order. The .from_dict() class method takes a dictionary of attributes extracted from a DOT file and returns a new instance of your City class.
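
The difference between the two options can be sketched with a trimmed-down stand-in for City. The Place and PlaceData names, as well as the sample values, are made up for illustration:

```python
from dataclasses import dataclass
from typing import NamedTuple, Optional


class Place(NamedTuple):  # Hypothetical, trimmed-down stand-in for City
    name: str
    year: Optional[int]


@dataclass(frozen=True, order=True)
class PlaceData:  # A data class configured to behave similarly
    name: str
    year: Optional[int]


alpha = Place("Alpha", 1900)
beta = Place("Beta", None)

# Hashable: usable as set members and dictionary keys
visited = {alpha, beta}
print(alpha in visited)  # True

# Comparable: ordered field by field, starting with the name
print(alpha < beta)  # True

# The data class needs frozen=True for hashing and order=True for "<"
same_hash = hash(PlaceData("Alpha", 1900)) == hash(PlaceData("Alpha", 1900))
print(same_hash)  # True
print(PlaceData("Alpha", 1900) < PlaceData("Beta", None))  # True
```

Keep in mind that the comparison only stays safe as long as an earlier field settles it. Two places with the same name, where one year is None and the other is an integer, would raise a TypeError when compared with the less than operator.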

To take advantage of your new class, you’re going to need to create a new graph instance and take note of the mapping of node identifiers to city instances. Add the following helper function to your graph module:

# graph.py

import networkx as nx

# ...

def load_graph(filename, node_factory):
    graph = nx.nx_agraph.read_dot(filename)
    nodes = {
        name: node_factory(attributes)
        for name, attributes in graph.nodes(data=True)
    }
    return nodes, nx.Graph(
        (nodes[name1], nodes[name2], weights)
        for name1, name2, weights in graph.edges(data=True)
    )

The function takes a filename and a callable factory for the node objects, such as your City.from_dict() class method. It starts by reading a DOT file and building a mapping of node identifiers to the object-oriented representation of the graph nodes. Finally, it returns that mapping and a new graph comprising nodes and weighted edges.

You can now start playing with the UK road map again in an interactive Python interpreter session:

>>> from graph import City, load_graph

>>> nodes, graph = load_graph("roadmap.dot", City.from_dict)

>>> nodes["london"]
City(
    name='City of London',
    country='England',
    year=None,
    latitude=51.507222,
    longitude=-0.1275
)

>>> print(graph)
Graph with 70 nodes and 137 edges

After importing the helper function and the City class from your module, you load the graph from a sample DOT file and store the result in two variables. The nodes variable lets you obtain a reference to an instance of the City class by the specified name, whereas the graph variable holds the entire networkx Graph object.

When looking for the shortest path between two cities, you’ll want to identify the immediate neighbors of a given city to find the available routes to follow. You can do that in a few ways with a networkx graph. In the simplest case, you’ll call the .neighbors() method on a graph with the specified node as an argument:

>>> for neighbor in graph.neighbors(nodes["london"]):
...     print(neighbor.name)
...
Bath
Brighton & Hove
Bristol
Cambridge
Canterbury
Chelmsford
Coventry
Oxford
Peterborough
Portsmouth
Southampton
Southend-on-Sea
St Albans
Westminster
Winchester

This only reveals the neighboring nodes without the possible weights of the connecting edges, such as distances or the estimated travel times, which you might need to know about to pick the best path. If you’d like to include the weights, then access a node using the square bracket syntax:

>>> for neighbor, weights in graph[nodes["london"]].items():
...     print(weights["distance"], neighbor.name)
...
115 Bath
53 Brighton & Hove
118 Bristol
61 Cambridge
62 Canterbury
40 Chelmsford
100 Coventry
58 Oxford
85 Peterborough
75 Portsmouth
79 Southampton
42 Southend-on-Sea
25 St Albans
1 Westminster
68 Winchester

The neighbors are always listed in the same order in which you defined them in the DOT file. To sort them by one or more weights, you can use the following code snippet:

>>> def sort_by(neighbors, strategy):
...     return sorted(neighbors.items(), key=lambda item: strategy(item[1]))
...
>>> def by_distance(weights):
...     return float(weights["distance"])
...
>>> for neighbor, weights in sort_by(graph[nodes["london"]], by_distance):
...     print(f"{weights['distance']:>3} miles, {neighbor.name}")
...
  1 miles, Westminster
 25 miles, St Albans
 40 miles, Chelmsford
 42 miles, Southend-on-Sea
 53 miles, Brighton & Hove
 58 miles, Oxford
 61 miles, Cambridge
 62 miles, Canterbury
 68 miles, Winchester
 75 miles, Portsmouth
 79 miles, Southampton
 85 miles, Peterborough
100 miles, Coventry
115 miles, Bath
118 miles, Bristol

First, you define a helper function that returns a list of neighbors and their weights sorted by the specified strategy. The strategy takes a dictionary of all the weights associated with an edge and returns a sorting key. Next, you define a concrete strategy that produces a floating-point distance based on the input dictionary. Finally, you iterate over the neighbors of London, sorted by distance in ascending order.

With this elementary knowledge of the networkx library, you can now move on to implementing graph traversal algorithms based on the custom queue data types that you built earlier.

Breadth-First Search Using a FIFO Queue

In the breadth-first search algorithm, you look for a node that satisfies a particular condition by exploring the graph in concentric layers or levels. You start traversing the graph at an arbitrarily chosen source node unless the graph is a tree data structure, in which case you typically start at the root node of that tree. At each step, you visit all immediate neighbors of the current node before going deeper.

Note: To avoid getting stuck in a loop when the graph contains cycles, keep track of the neighbors that you’ve visited and skip them the next time you encounter them. For example, you can add the visited nodes to a Python set and later use the in operator to check if the set contains a given node.

For example, say you wanted to find any place in the United Kingdom that was granted city status in the twentieth century, starting your search in Edinburgh. The networkx library already has many algorithms implemented, including the breadth-first search, which can help cross-check your future implementation. Call the nx.bfs_tree() function on your graph to reveal the breadth-first order of traversal:

>>> import networkx as nx
>>> from graph import City, load_graph

>>> def is_twentieth_century(year):
...     return year and 1901 <= year <= 2000
...
>>> nodes, graph = load_graph("roadmap.dot", City.from_dict)
>>> for node in nx.bfs_tree(graph, nodes["edinburgh"]):
...     print("📍", node.name)
...     if is_twentieth_century(node.year):
...         print("Found:", node.name, node.year)
...         break
... else:
...     print("Not found")
...
📍 Edinburgh
📍 Dundee
📍 Glasgow
📍 Perth
📍 Stirling
📍 Carlisle
📍 Newcastle upon Tyne
📍 Aberdeen
📍 Inverness
📍 Lancaster
Found: Lancaster 1937

The six cities printed right after Edinburgh, from Dundee through Newcastle upon Tyne, are all the immediate neighbors of Edinburgh, which is your source node. Notice that they’re visited in sequence without interruption before moving to the next layer of the graph. The subsequent layer consists of the second-level neighbors starting from the source node.

Next, you explore the unvisited neighbors of those six cities. The first one is Dundee, whose neighbors include Aberdeen and Perth, but you’ve already visited Perth, so you skip it and only visit Aberdeen. Glasgow doesn’t have any unvisited neighbors, while Perth has only Inverness. Similarly, you visited Stirling’s neighbors but not Carlisle’s, which connects with Lancaster. You stop the search because Lancaster is your answer.

The result of your search may sometimes vary depending on your choice of the starting point, as well as the order of neighbors if there’s more than one node satisfying a condition. To ensure consistent results, you can sort the neighbors according to some criteria. For example, you could visit cities with a higher latitude first:

>>> def order(neighbors):
...     def by_latitude(city):
...         return city.latitude
...     return iter(sorted(neighbors, key=by_latitude, reverse=True))
...
>>> for node in nx.bfs_tree(graph, nodes["edinburgh"], sort_neighbors=order):
...     print("📍", node.name)
...     if is_twentieth_century(node.year):
...         print("Found:", node.name, node.year)
...         break
... else:
...     print("Not found")
...
📍 Edinburgh
📍 Dundee
📍 Perth
📍 Stirling
📍 Glasgow
📍 Newcastle upon Tyne
📍 Carlisle
📍 Aberdeen
📍 Inverness
📍 Sunderland
Found: Sunderland 1992

Now, the answer is different because Newcastle is visited before Carlisle due to having a slightly higher latitude. In turn, this makes the breadth-first search algorithm find Sunderland before Lancaster, which is an alternative node matching your condition.

Note: In case you were wondering why order() wraps a list of sorted neighbors in a call to iter(), it’s because nx.bfs_tree() expects the function passed as its sort_neighbors argument to return an iterator object rather than a list.

Now that you’ve gotten the general idea of the breadth-first search algorithm, it’s time to implement it yourself. Because the breadth-first traversal is the basis for other interesting algorithms, you’ll extract its logic into a separate function that you can delegate to:

# graph.py

from queues import Queue

# ...

def breadth_first_traverse(graph, source):
    queue = Queue(source)
    visited = {source}
    while queue:
        yield (node := queue.dequeue())
        for neighbor in graph.neighbors(node):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.enqueue(neighbor)

def breadth_first_search(graph, source, predicate):
    for node in breadth_first_traverse(graph, source):
        if predicate(node):
            return node

The first function takes a networkx graph and the source node as arguments while yielding nodes visited with the breadth-first traversal. Note that it uses your FIFO queue from the queues module to keep track of the node neighbors, ensuring that you’ll explore them in sequence on each layer. The function also marks visited nodes by adding them to a Python set, so that each neighbor is visited at most once.

Note: Instead of using a while loop along with the walrus operator (:=) to yield a dequeued node in one expression, you could take advantage of the fact that your custom queue is iterable by dequeuing elements using a for loop:

def breadth_first_traverse(graph, source):
    queue = Queue(source)
    visited = {source}
    for node in queue:
        yield node
        for neighbor in graph.neighbors(node):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.enqueue(neighbor)

However, this relies on a non-obvious implementation detail in your Queue class, so you’ll stick with the more conventional while loop throughout the rest of this tutorial.
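
The implementation detail in question is that the queue’s .__iter__() method checks the length again before each step, so it notices elements enqueued while the loop is running. The following sketch demonstrates this with MiniQueue, a hypothetical and trimmed-down stand-in for your Queue class:

```python
from collections import deque


class MiniQueue:  # Trimmed-down stand-in for the tutorial's Queue
    def __init__(self, *elements):
        self._elements = deque(elements)

    def __len__(self):
        return len(self._elements)

    def __iter__(self):
        # The length is checked again before every step, so elements
        # enqueued in the middle of the iteration are still picked up.
        while len(self) > 0:
            yield self._elements.popleft()

    def enqueue(self, element):
        self._elements.append(element)


queue = MiniQueue(1)
seen = []
for number in queue:
    seen.append(number)
    if number < 4:
        queue.enqueue(number + 1)  # Grow the queue while looping over it

print(seen)        # [1, 2, 3, 4]
print(len(queue))  # 0
```

By contrast, looping directly over a deque while appending to it raises a RuntimeError, which is why the for loop variant only works thanks to the way the custom iterator is written.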

The second function builds on top of the first one by looping over the yielded nodes, and stops once the current node meets the expected criteria. If none of the nodes make the predicate truthy, then the function implicitly returns None.
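
If you don’t have networkx at hand, then you can try out the same two-function design on a plain dictionary. The sketch below makes a few assumptions: it swaps your custom Queue for collections.deque, represents the graph as a made-up adjacency dictionary, and uses the shorter names traverse() and search():

```python
from collections import deque

# Hypothetical undirected graph with a cycle: A-B, A-C, B-D, C-D, D-E
graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}


def traverse(graph, source):
    queue = deque([source])
    visited = {source}
    while queue:
        yield (node := queue.popleft())
        for neighbor in graph[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(neighbor)


def search(graph, source, predicate):
    for node in traverse(graph, source):
        if predicate(node):
            return node


print(list(traverse(graph, "A")))              # ['A', 'B', 'C', 'D', 'E']
print(search(graph, "A", lambda n: n > "C"))   # D
print(search(graph, "A", lambda n: n == "Z"))  # None
```

Even though the graph contains a cycle, each node shows up exactly once because of the visited set. The last call returns None because no node satisfies the predicate.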

To test your breadth-first search and traversal implementations in action, you can replace the convenience function built into networkx with your own:

>>> from graph import (
...     City,
...     load_graph,
...     breadth_first_traverse,
...     breadth_first_search as bfs,
... )

>>> def is_twentieth_century(city):
...     return city.year and 1901 <= city.year <= 2000
...
>>> nodes, graph = load_graph("roadmap.dot", City.from_dict)
>>> city = bfs(graph, nodes["edinburgh"], is_twentieth_century)
>>> city.name
'Lancaster'

>>> for city in breadth_first_traverse(graph, nodes["edinburgh"]):
...     print(city.name)
...
Edinburgh
Dundee
Glasgow
Perth
Stirling
Carlisle
Newcastle upon Tyne
Aberdeen
Inverness
Lancaster
⋮

As you can see, the traversal order is identical to your first attempt with networkx, confirming that your algorithm works correctly for this data set. However, your functions don’t allow sorting the neighbors in a particular order. Try modifying the code so that it accepts an optional sorting strategy. Here’s one possible solution:

# graph.py

# ...

def breadth_first_traverse(graph, source, order_by=None):
    queue = Queue(source)
    visited = {source}
    while queue:
        yield (node := queue.dequeue())
        neighbors = list(graph.neighbors(node))
        if order_by:
            neighbors.sort(key=order_by)
        for neighbor in neighbors:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.enqueue(neighbor)

def breadth_first_search(graph, source, predicate, order_by=None):
    for node in breadth_first_traverse(graph, source, order_by):
        if predicate(node):
            return node

The breadth-first search algorithm ensures that you’ll eventually explore all connected nodes in a graph while searching for one that satisfies the desired condition. You could use it to solve a maze, for example. The breadth-first traversal is also the foundation for finding the shortest path between two nodes in an undirected and unweighted graph. In the next section, you’ll adapt your code to do just that.

Shortest Path Using Breadth-First Traversal

In many cases, the fewer the nodes on the path from source to destination, the shorter the distance. You could take advantage of this observation to estimate the shortest distance if the connections between your cities didn’t have a weight. That would be equivalent to having equal weight on every edge.

Traversing the graph using the breadth-first approach will produce a path guaranteed to have the fewest nodes. Sometimes there might be more than one shortest path between two nodes. For example, there are two such shortest paths between Aberdeen and Perth when you disregard the road distances. As before, the actual result in such a case will depend on how you order the neighboring nodes.

You can use networkx to reveal all the shortest paths between two cities, which will have the same minimal length:

>>> import networkx as nx
>>> from graph import City, load_graph

>>> nodes, graph = load_graph("roadmap.dot", City.from_dict)

>>> city1 = nodes["aberdeen"]
>>> city2 = nodes["perth"]

>>> for i, path in enumerate(nx.all_shortest_paths(graph, city1, city2), 1):
...     print(f"{i}.", " → ".join(city.name for city in path))
...
1. Aberdeen → Dundee → Perth
2. Aberdeen → Inverness → Perth

After loading the graph, you enumerate the shortest paths between two cities and print them onto the screen. You can see there are only two shortest paths between Aberdeen and Perth. In contrast, London and Edinburgh have four distinct shortest paths with nine nodes each, but many longer paths exist between them.

How does breadth-first traversal help you find the shortest path exactly?

Whenever you visit a node, you must keep track of the previous node that led you to it by saving this information as a key-value pair in a dictionary. Later, you’ll be able to trace back your way from the destination to the source by following the previous nodes. Go back to your code editor and create another function by copying and adapting the code from your earlier breadth_first_traverse() function:

# graph.py

# ...

def shortest_path(graph, source, destination, order_by=None):
    queue = Queue(source)
    visited = {source}
    previous = {}
    while queue:
        node = queue.dequeue()
        neighbors = list(graph.neighbors(node))
        if order_by:
            neighbors.sort(key=order_by)
        for neighbor in neighbors:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.enqueue(neighbor)
                previous[neighbor] = node
                if neighbor == destination:
                    return retrace(previous, source, destination)

This new function takes another node as an argument and optionally lets you order the neighbors using a custom strategy. It also defines an empty dictionary, which you populate when visiting a neighbor by associating it with the previous node on your path. All key-value pairs in this dictionary are immediate neighbors without any nodes between them.

If a path exists between your source and destination, then the function returns a list of nodes built with another helper function instead of yielding the individual nodes like breadth_first_traverse().

Note: You could try refactoring this code by combining shortest_path() and breadth_first_traverse() into one function if you wanted to. However, experienced programmers generally agree that having a bit of repetition can sometimes be okay as long as it keeps your code easier to understand and focused on one responsibility.

To recreate the shortest path between your source and destination, you can iteratively look up the dictionary built earlier when you traversed the graph with the breadth-first approach:

# graph.py

from collections import deque

# ...

def retrace(previous, source, destination):
    path = deque()

    current = destination
    while current != source:
        path.appendleft(current)
        current = previous.get(current)
        if current is None:
            return None

    path.appendleft(source)
    return list(path)

Because you start from the destination and work your way back, using the Python deque collection with a fast append operation on the left can be helpful. At each iteration, you add the current node to the path and move to the previous node. You repeat these steps until you reach the source node or there’s no previous node.
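
To watch the dictionary of previous nodes at work without networkx, here’s a self-contained variant on a made-up adjacency dictionary. It’s a sketch rather than a copy of your shortest_path() function: it uses collections.deque instead of your Queue, lets the previous dictionary double as the set of visited nodes, and checks for the destination when dequeuing a node. The fewest_hops() name is hypothetical:

```python
from collections import deque

# Hypothetical unweighted graph with two equally short routes from A to D
graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}


def fewest_hops(graph, source, destination):
    queue = deque([source])
    previous = {source: None}  # Doubles as the set of visited nodes
    while queue:
        node = queue.popleft()
        if node == destination:
            break
        for neighbor in graph[node]:
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    else:
        return None  # The queue ran empty without reaching the destination

    # Walk back from the destination to the source
    path = deque()
    while node is not None:
        path.appendleft(node)
        node = previous[node]
    return list(path)


print(fewest_hops(graph, "A", "E"))  # ['A', 'B', 'D', 'E']
print(fewest_hops(graph, "E", "A"))  # ['E', 'D', 'B', 'A']
```

The route through B wins over the equally short route through C only because B is listed first among the neighbors, which mirrors how the neighbor order influences the result in your own implementation.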

When you call the queue-based implementation of the shortest path, you get the same results as with networkx:

>>> from graph import shortest_path

>>> " → ".join(city.name for city in shortest_path(graph, city1, city2))
'Aberdeen → Dundee → Perth'

>>> def by_latitude(city):
...     return -city.latitude
...
>>> " → ".join(
...     city.name
...     for city in shortest_path(graph, city1, city2, by_latitude)
... )
'Aberdeen → Inverness → Perth'

The first path follows the natural order of neighbors from the DOT file, whereas the second one prefers neighbors with a higher latitude, which you specify through a custom sort strategy. To enforce a descending order, you add the minus sign (-) in front of the .latitude attribute.
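The effect of negating the sort key is easy to check on its own. In the minimal sketch below, the City named tuple is a simplified stand-in for the tutorial's class, and the latitudes are rough values chosen only for illustration:

```python
from typing import NamedTuple


class City(NamedTuple):
    # Simplified, hypothetical stand-in for the tutorial's City class
    name: str
    latitude: float


# Approximate latitudes, for illustration only
neighbors = [
    City("Dundee", 56.46),
    City("Inverness", 57.48),
    City("Perth", 56.40),
]

ascending = sorted(neighbors, key=lambda city: city.latitude)
descending = sorted(neighbors, key=lambda city: -city.latitude)

print([city.name for city in ascending])   # ['Perth', 'Dundee', 'Inverness']
print([city.name for city in descending])  # ['Inverness', 'Dundee', 'Perth']
```

Because order_by is passed straight through as the key function, flipping the sign of a numeric key is a simple way to reverse the order without changing shortest_path() itself.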

Note that a path may not exist at all for some nodes. For example, Belfast and Glasgow don’t have a land connection, because they’re located on two separate islands. You need to take a ferry to get from one city to the other. The breadth-first traversal can tell you whether two nodes remain connected or not. Here’s how to implement such a check:

# graph.py

# ...

def connected(graph, source, destination):
    return shortest_path(graph, source, destination) is not None

After starting at the source node and traversing the entire subgraph of connected nodes, such as Northern Ireland, the traversal runs out of nodes without ever encountering your destination. Because shortest_path() only retraces the path once it reaches the destination, it returns None in this case, letting you know there’s no path between source and destination.

You can verify this in an interactive Python interpreter session:

>>> from graph import connected
>>> connected(graph, nodes["belfast"], nodes["glasgow"])
False

>>> connected(graph, nodes["belfast"], nodes["derry"])
True
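If you don't have the road map at hand, you can reproduce the same behavior on a toy graph. The following sketch is only an approximation of the tutorial's code. It assumes a plain dictionary of adjacency lists instead of a networkx graph and a collections.deque instead of the custom Queue class, and the node names are invented:

```python
from collections import deque


def shortest_path(graph, source, destination):
    """Breadth-first shortest path on a dict of adjacency lists."""
    queue = deque([source])
    visited = {source}
    previous = {}
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(neighbor)
                previous[neighbor] = node
                if neighbor == destination:
                    # Walk back from the destination to the source
                    path = [destination]
                    while path[-1] != source:
                        path.append(previous[path[-1]])
                    return path[::-1]
    return None  # The queue ran dry without reaching the destination


def connected(graph, source, destination):
    return shortest_path(graph, source, destination) is not None


# Hypothetical graph with two separate "islands": {A, B, C} and {X, Y}
graph = {
    "A": ["B", "C"],
    "B": ["A", "C"],
    "C": ["A", "B"],
    "X": ["Y"],
    "Y": ["X"],
}

print(shortest_path(graph, "A", "C"))  # ['A', 'C']
print(connected(graph, "A", "C"))      # True
print(connected(graph, "A", "Y"))      # False
```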

Awesome! With your custom FIFO queue, you can traverse the graph, find the shortest path between two nodes, and even determine whether they’re connected. By adding a small tweak to your code, you’ll be able to change the traversal from breadth-first to depth-first order, which you’ll do now.

Depth-First Search Using a LIFO Queue

As the name implies, the depth-first traversal follows a path from the source node by plunging into the graph as deeply as possible before backtracking to the last edge crossing and trying another branch. Notice the difference in the traversal order when you modify an earlier example by replacing nx.bfs_tree() with nx.dfs_tree():

>>> import networkx as nx
>>> from graph import City, load_graph

>>> def is_twentieth_century(year):
...     return year and 1901 <= year <= 2000
...
>>> nodes, graph = load_graph("roadmap.dot", City.from_dict)
>>> for node in nx.dfs_tree(graph, nodes["edinburgh"]):
...     print("📍", node.name)
...     if is_twentieth_century(node.year):
...         print("Found:", node.name, node.year)
...         break
... else:
...     print("Not found")
...
📍 Edinburgh
📍 Dundee
📍 Aberdeen
📍 Inverness
📍 Perth
📍 Stirling
📍 Glasgow
📍 Carlisle
📍 Lancaster
Found: Lancaster 1937

Now, the immediate neighbors of the source node are no longer explored in sequence. After reaching Dundee, the algorithm continues down the same path instead of visiting the next neighbor of Edinburgh on the first graph layer.

To facilitate backtracking, you can essentially replace the FIFO queue with a LIFO queue in your breadth-first traversal function, and you’ll arrive very close to a depth-first traversal. However, it’ll only behave correctly when traversing tree data structures. There’s a subtle difference in graphs with cycles, which requires an additional change in your code. Otherwise, you’ll implement a stack-based graph traversal, which works quite differently.

Note: In binary tree traversal, the depth-first search algorithm defines a few well-known orderings for the child nodes to visit—for example, pre-order, in-order, and post-order.
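As a quick illustration of those three orderings, here's a minimal sketch on a five-node binary tree. The Node class and the generator names are hypothetical helpers written for this example only:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def pre_order(node):
    if node is not None:
        yield node.value
        yield from pre_order(node.left)
        yield from pre_order(node.right)


def in_order(node):
    if node is not None:
        yield from in_order(node.left)
        yield node.value
        yield from in_order(node.right)


def post_order(node):
    if node is not None:
        yield from post_order(node.left)
        yield from post_order(node.right)
        yield node.value


#       A
#      / \
#     B   C
#    / \
#   D   E
tree = Node("A", Node("B", Node("D"), Node("E")), Node("C"))

print(list(pre_order(tree)))   # ['A', 'B', 'D', 'E', 'C']
print(list(in_order(tree)))    # ['D', 'B', 'E', 'A', 'C']
print(list(post_order(tree)))  # ['D', 'E', 'B', 'C', 'A']
```

All three generators descend depth-first. They differ only in when the current node is yielded relative to its children.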

In the classic depth-first traversal, in addition to replacing the queue with a stack, you initially won’t mark the source node as visited:

# graph.py

from queues import Queue, Stack

# ...

def depth_first_traverse(graph, source, order_by=None):
    stack = Stack(source)
    visited = set()
    while stack:
        if (node := stack.dequeue()) not in visited:
            yield node
            visited.add(node)
            neighbors = list(graph.neighbors(node))
            if order_by:
                neighbors.sort(key=order_by)
            for neighbor in reversed(neighbors):
                stack.enqueue(neighbor)

Notice that your visited nodes are initialized to an empty set before you start popping elements from the stack. You also check if the node was already visited much earlier than you would in the breadth-first traversal. When iterating the neighbors, you reverse their order to account for the LIFO queue’s reversal. Finally, you don’t mark the neighbors as visited immediately after pushing them onto the stack.
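To see why the moment of marking a node as visited matters, you can compare both variants on a small graph with a cycle. The sketch below makes a few assumptions: it uses a plain Python list as the stack and a dictionary of adjacency lists as the graph, and stack_based_traverse() is a hypothetical name for the breadth-first code with only the queue swapped out:

```python
def stack_based_traverse(graph, source):
    """Breadth-first code with the FIFO queue swapped for a LIFO stack."""
    stack = [source]
    visited = {source}
    while stack:
        node = stack.pop()
        yield node
        for neighbor in reversed(graph[node]):
            if neighbor not in visited:
                visited.add(neighbor)  # Marked when pushed
                stack.append(neighbor)


def depth_first_traverse(graph, source):
    stack = [source]
    visited = set()
    while stack:
        node = stack.pop()
        if node not in visited:
            yield node
            visited.add(node)  # Marked when popped
            for neighbor in reversed(graph[node]):
                stack.append(neighbor)


# Hypothetical graph with the cycle A - B - D - A and a dead end at C
graph = {
    "A": ["B", "C", "D"],
    "B": ["A", "D"],
    "C": ["A"],
    "D": ["A", "B"],
}

print(list(stack_based_traverse(graph, "A")))  # ['A', 'B', 'C', 'D']
print(list(depth_first_traverse(graph, "A")))  # ['A', 'B', 'D', 'C']
```

In the first variant, D is claimed as soon as A pushes it, so the traversal can't continue from B to D and jumps to C instead. The second variant keeps going deeper from B to D before backtracking to C, at the cost of pushing some nodes onto the stack more than once.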

Because the depth-first traversal relies on the stack data structure, you can take advantage of the built-in call stack to save the current search path for later backtracking and rewrite your function recursively:

# graph.py

# ...

def recursive_depth_first_traverse(graph, source, order_by=None):
    visited = set()

    def visit(node):
        yield node
        visited.add(node)
        neighbors = list(graph.neighbors(node))
        if order_by:
            neighbors.sort(key=order_by)
        for neighbor in neighbors:
            if neighbor not in visited:
                yield from visit(neighbor)

    return visit(source)

By doing so, you avoid maintaining a stack of your own, as Python pushes each function call on a stack behind the scenes for you. It pops one when the corresponding function returns. You only need to keep track of the visited nodes. Another advantage of the recursive implementation is the fact that you don’t have to reverse the neighbors when iterating over them, and you don’t push already visited neighbors onto the stack.
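You can check that both styles visit nodes in the same order with a short experiment. This is a simplified sketch that assumes a dictionary of adjacency lists rather than a networkx graph, and the function names iterative_dfs() and recursive_dfs() are made up for the comparison:

```python
def iterative_dfs(graph, source):
    stack = [source]
    visited = set()
    while stack:
        node = stack.pop()
        if node not in visited:
            yield node
            visited.add(node)
            # Reverse so that the first neighbor ends up on top of the stack
            stack.extend(reversed(graph[node]))


def recursive_dfs(graph, source):
    visited = set()

    def visit(node):
        yield node
        visited.add(node)
        for neighbor in graph[node]:
            if neighbor not in visited:
                yield from visit(neighbor)

    return visit(source)


# Hypothetical graph forming the cycle A - B - D - C - A
graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C"],
}

print(list(iterative_dfs(graph, "A")))  # ['A', 'B', 'D', 'C']
print(list(recursive_dfs(graph, "A")))  # ['A', 'B', 'D', 'C']
```

One trade-off to keep in mind is that the recursive version nests one call per node on the current path, so a very deep graph may run into Python's recursion limit, which you can inspect with sys.getrecursionlimit().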

With the traversal function in place, you can now implement the depth-first search algorithm. Because both breadth-first and depth-first search algorithms look almost identical and only differ in the traversal order, you can refactor your code by delegating the common parts of both algorithms to a template function:

# graph.py

# ...

def breadth_first_search(graph, source, predicate, order_by=None):
    return search(breadth_first_traverse, graph, source, predicate, order_by)

# ...

def depth_first_search(graph, source, predicate, order_by=None):
    return search(depth_first_traverse, graph, source, predicate, order_by)

def search(traverse, graph, source, predicate, order_by=None):
    for node in traverse(graph, source, order_by):
        if predicate(node):
            return node

Now, your breadth_first_search() and depth_first_search() functions call search() with the corresponding traversal strategy. Go ahead and test them in an interactive Python interpreter session:

>>> from graph import (
...     City,
...     load_graph,
...     depth_first_traverse,
...     depth_first_search as dfs,
... )

>>> def is_twentieth_century(city):
...     return city.year and 1901 <= city.year <= 2000
...
>>> nodes, graph = load_graph("roadmap.dot", City.from_dict)
>>> city = dfs(graph, nodes["edinburgh"], is_twentieth_century)
>>> city.name
'Lancaster'

>>> for city in depth_first_traverse(graph, nodes["edinburgh"]):
...     print(city.name)
...
Edinburgh
Dundee
Aberdeen
Inverness
Perth
Stirling
Glasgow
Carlisle
Lancaster
⋮

Even though the search result happens to be the same as with your breadth-first search algorithm, you can clearly see that the order of traversal is now different and follows a linear path.
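The two strategies won't always agree on the answer, though. The self-contained sketch below plugs simplified traversal functions into the same kind of search() template. It assumes a dictionary of adjacency lists with integer nodes, and both predicates are invented for the example:

```python
from collections import deque


def breadth_first_traverse(graph, source):
    queue = deque([source])
    visited = {source}
    while queue:
        node = queue.popleft()
        yield node
        for neighbor in graph[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(neighbor)


def depth_first_traverse(graph, source):
    stack = [source]
    visited = set()
    while stack:
        node = stack.pop()
        if node not in visited:
            yield node
            visited.add(node)
            stack.extend(reversed(graph[node]))


def search(traverse, graph, source, predicate):
    for node in traverse(graph, source):
        if predicate(node):
            return node
    return None


# Hypothetical graph whose nodes are plain integers
graph = {
    1: [2, 3],
    2: [1, 4],
    3: [1, 6],
    4: [2, 8],
    6: [3],
    8: [4],
}


def is_multiple_of_three(number):
    return number % 3 == 0


def is_greater_than_five(number):
    return number > 5


print(list(breadth_first_traverse(graph, 1)))  # [1, 2, 3, 4, 6, 8]
print(list(depth_first_traverse(graph, 1)))    # [1, 2, 4, 8, 3, 6]

# Same result, because 3 comes before 6 in both traversal orders
print(search(breadth_first_traverse, graph, 1, is_multiple_of_three))  # 3
print(search(depth_first_traverse, graph, 1, is_multiple_of_three))    # 3

# Different results, because each strategy meets a different match first
print(search(breadth_first_traverse, graph, 1, is_greater_than_five))  # 6
print(search(depth_first_traverse, graph, 1, is_greater_than_five))    # 8
```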

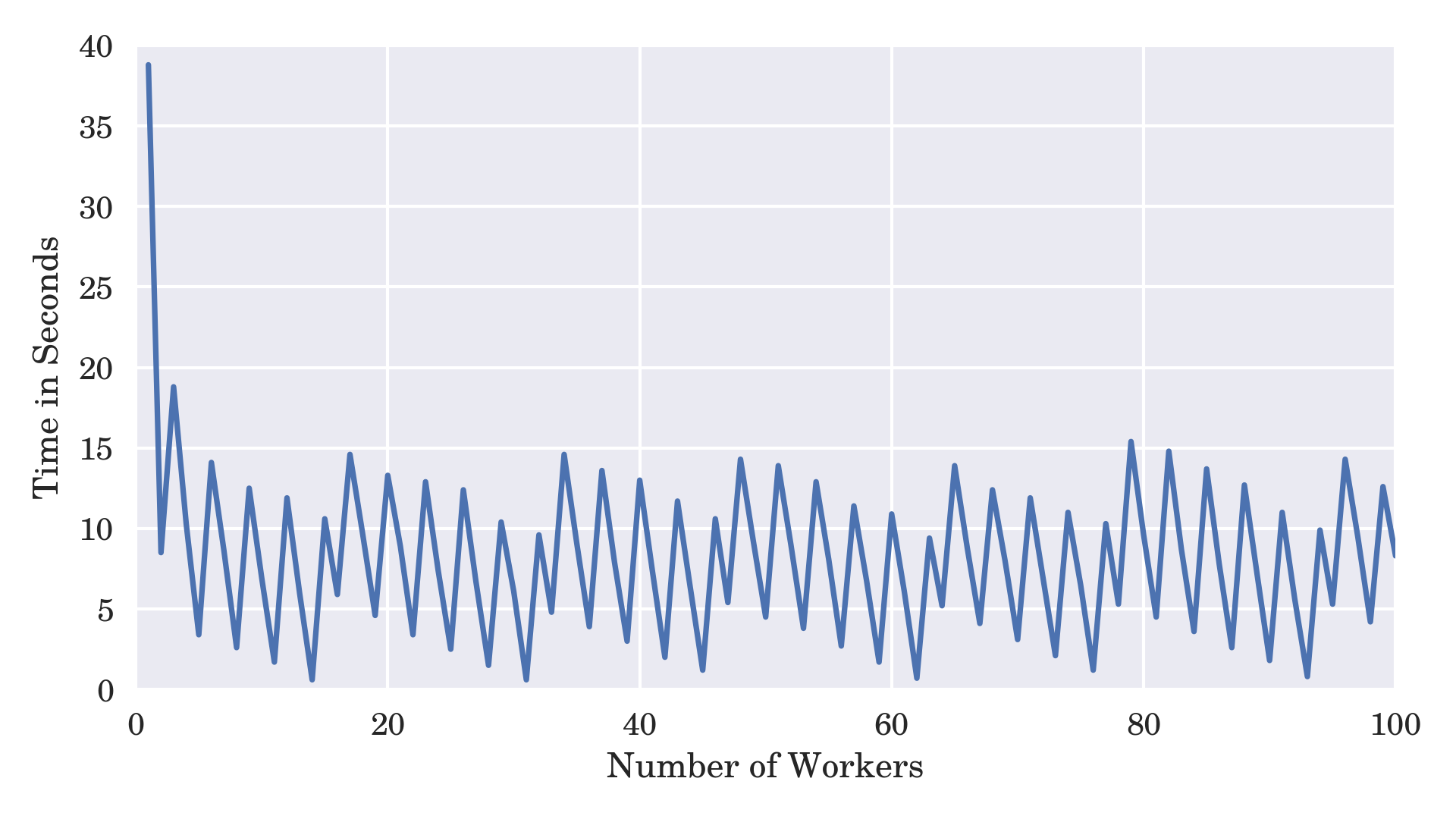

You’ve seen how choosing between a FIFO and a LIFO queue can affect the underlying graph traversal algorithm. So far, you’ve only considered the number of intermediate nodes when looking for the shortest path between two cities. In the next section, you’ll take it one step further by leveraging a priority queue to find the optimal route, which may sometimes contain more nodes.

Dijkstra’s Algorithm Using a Priority Queue

According to the graph in the sample DOT file, the paths with the fewest nodes between London and Edinburgh have exactly nine stops and a total distance ranging from 451 to 574 miles. There are four such paths:

| 451 miles | 460 miles | 465 miles | 574 miles |

|---|---|---|---|

| City of London | City of London | City of London | City of London |

| Coventry | Peterborough | Peterborough | Bristol |

| Birmingham | Lincoln | Nottingham | Newport |

| Stoke-on-Trent | Sheffield | Sheffield | St Asaph |

| Liverpool | Wakefield | Wakefield | Liverpool |

| Preston | York | York | Preston |

| Lancaster | Durham | Durham | Lancaster |

| Carlisle | Newcastle upon Tyne | Newcastle upon Tyne | Carlisle |

| Edinburgh | Edinburgh | Edinburgh | Edinburgh |

There’s a significant overlap between these paths, as they quickly merge at a few intersections before your destination. To some degree, they also overlap with the only path with the shortest distance between London and Edinburgh, equal to 436 miles, despite having two more stops:

- City of London

- St Albans

- Coventry

- Birmingham

- Stoke-on-Trent

- Manchester

- Salford

- Preston

- Lancaster

- Carlisle

- Edinburgh

Sometimes, it’s worthwhile to take a detour on your route to save time, fuel, or miles, even if it means going through more places along the way.

When you throw edge weights into the mix, interesting possibilities open up in front of you. For example, you can implement rudimentary artificial intelligence in a video game by assigning negative weights to edges that lead to a virtual enemy and positive weights that point you toward some reward. You may also represent moves in a game like the Rubik’s Cube as a decision tree to find the optimal solution.

Perhaps the most common use for traversing a weighted graph is when planning a route. A recipe to find the shortest path in a weighted graph, or a multigraph with many parallel connections, is Dijkstra’s algorithm, which builds on top of the breadth-first search algorithm. However, Dijkstra’s algorithm uses a special priority queue instead of the regular FIFO queue.

Explaining Dijkstra’s shortest path algorithm is beyond the scope of this tutorial. However, in a nutshell, you can break it down into the following two steps:

- Build the shortest-path tree from a fixed source node to every other node in the graph.

- Trace back the path from the destination to the source node in the same way as you did before with the plain shortest-path algorithm.

The first part is about sweeping the weighted edges of every unvisited node in a greedy manner by checking whether they provide a cheaper connection from the source to one of the current neighbors. The total cost of a path from the source to the neighbor is the sum of the edge’s weight and the cumulative cost from the source to the currently visited node. Sometimes, a path consisting of more nodes will have a smaller total cost.
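The greedy sweep described above can be sketched in a few lines. Note that this isn't the tutorial's implementation: it assumes Python's heapq module as the priority queue, a dictionary of dictionaries as the weighted graph, and made-up nodes and weights:

```python
import heapq
from math import inf


def dijkstra(graph, source):
    """Return (previous, cost) mappings describing the shortest-path tree."""
    cost = {node: inf for node in graph}
    cost[source] = 0
    previous = {}
    visited = set()
    heap = [(0, source)]  # Entries are (cumulative cost, node)
    while heap:
        current_cost, node = heapq.heappop(heap)
        if node in visited:
            continue  # Stale entry left behind by an earlier, costlier path
        visited.add(node)
        for neighbor, weight in graph[node].items():
            # Cumulative cost to the current node plus the edge's weight
            new_cost = current_cost + weight
            if new_cost < cost[neighbor]:
                cost[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(heap, (new_cost, neighbor))
    return previous, cost


# Hypothetical weighted, undirected graph
graph = {
    "A": {"B": 7, "C": 2},
    "B": {"A": 7, "C": 3, "D": 1},
    "C": {"A": 2, "B": 3, "D": 8},
    "D": {"B": 1, "C": 8},
}

previous, cost = dijkstra(graph, "A")
print(cost)      # {'A': 0, 'B': 5, 'C': 2, 'D': 6}
print(previous)  # {'B': 'C', 'C': 'A', 'D': 'B'}
```

In this toy graph, the cheapest way from A to D goes through C and B for a total cost of 6, even though A → B → D has fewer stops but costs 8.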

Here’s a sample result of the first step of Dijkstra’s algorithm for the paths originating in Belfast:

| City | Previous | Total Cost |

|---|---|---|

| Armagh | Lisburn | 41 |

| Belfast | - | 0 |

| Derry | Belfast | 71 |

| Lisburn | Belfast | 9 |

| Newry | Lisburn | 40 |

The first column in the table above indicates a destination city on the shortest path from the source. The second column shows the previous city on the shortest path from the source through which you’ll arrive at your destination. The last column contains information about the total distance to a city from the source.
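Reading a route off such a table amounts to following the Previous column until you hit the source. Here's a minimal sketch that copies the rows from the table above into a dictionary, where route_to() is a hypothetical helper name:

```python
# Rows copied from the table above: city -> (previous city, total cost)
table = {
    "Armagh": ("Lisburn", 41),
    "Belfast": (None, 0),
    "Derry": ("Belfast", 71),
    "Lisburn": ("Belfast", 9),
    "Newry": ("Lisburn", 40),
}


def route_to(destination):
    """Follow the Previous column back to the source, then reverse."""
    path = []
    current = destination
    while current is not None:
        path.append(current)
        current = table[current][0]
    return path[::-1]


print(route_to("Armagh"))  # ['Belfast', 'Lisburn', 'Armagh']
print(route_to("Derry"))   # ['Belfast', 'Derry']

# The last leg's weight is the difference between two total costs
last_leg = table["Armagh"][1] - table["Lisburn"][1]
print(last_leg)  # 32
```

Because the total cost of a path is the previous city's cumulative cost plus the weight of the connecting edge, the table implies that the leg from Lisburn to Armagh accounts for 32 of the 41 miles.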