Have you ever wanted to compose music without expensive gear or a professional studio? Maybe you’ve tried to play a musical instrument before but found the manual dexterity required too daunting or time-consuming. If so, you might be interested in harnessing the power of Python to create a guitar synthesizer. By following a few relatively simple steps, you’ll be able to turn your computer into a virtual guitar that can play any song.

In this tutorial, you’ll:

- Implement the Karplus-Strong plucked string synthesis algorithm

- Mimic different types of string instruments and their tunings

- Combine multiple vibrating strings into polyphonic chords

- Simulate realistic guitar picking and strumming finger techniques

- Use impulse responses of real instruments to replicate their unique timbre

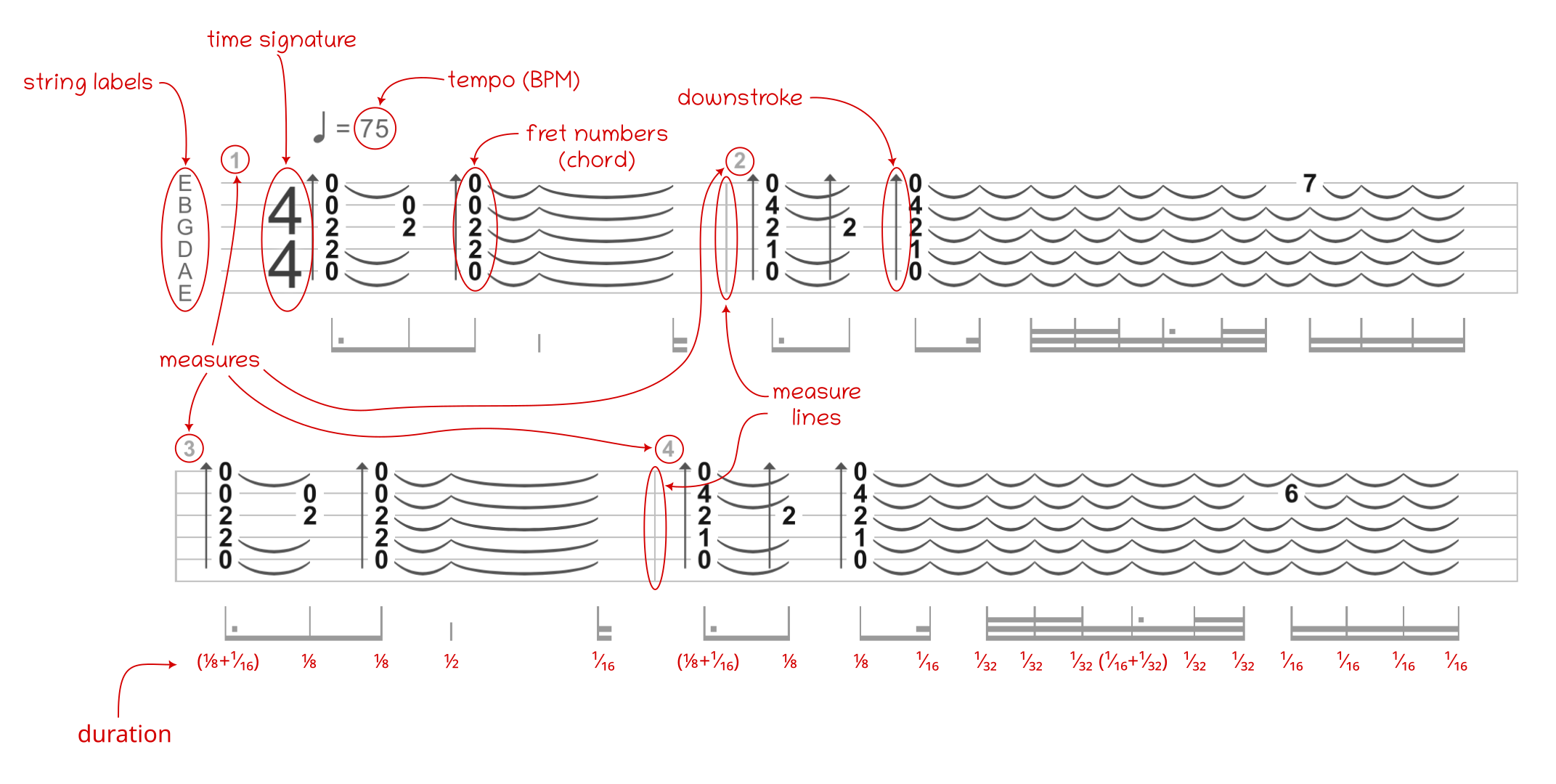

- Read musical notes from scientific pitch notation and guitar tablature

At any point, you’re welcome to download the complete source code of the guitar synthesizer, as well as the sample tablature and other resources that you’ll use throughout this tutorial. They might prove useful in case you want to explore the code in more detail or get a head start. To download the bonus materials now, visit the following link:

Get Your Code: Click here to download the free sample code that you’ll use to build a guitar synthesizer in Python.


Demo: Guitar Synthesizer in Python

In this step-by-step guide, you’ll build a plucked string instrument synthesizer based on the Karplus-Strong algorithm in Python. Along the way, you’ll create an ensemble of virtual instruments, including an acoustic, bass, and electric guitar, as well as a banjo and ukulele. Then, you’ll implement a custom guitar tab reader so that you can play your favorite songs.

By the end of this tutorial, you’ll be able to synthesize music from guitar tablature, or guitar tabs for short, which is a simplified form of musical notation that allows you to play music without having to learn how to read standard sheet music. Finally, you’ll store the result in an MP3 file for playback.

Below is a short demonstration of the synthesizer, re-creating the iconic soundtracks of classic video games like Doom and Diablo. Click the play button to listen to the sample output:

Once you find a guitar tab that you like, you can plug it into your Python guitar synthesizer and bring the music to life. For example, the Songsterr website is a fantastic resource with a wide range of songs you can choose from.

Project Overview

For your convenience, the project that you’re about to build, along with its third-party dependencies, will be managed by Poetry. The project will contain two Python packages with distinctly different areas of responsibility:

- digitar: For the synthesis of the digital guitar sound

- tablature: For reading and interpreting guitar tablature from a file

You’ll also design and implement a custom data format to store guitar tabs on disk or in memory. This will allow you to play music based on a fairly standard tablature notation, which you’ll find in various places on the Internet. Your project will also provide a Python script to tie everything together, which will let you interpret the tabs with a single command right from your terminal.

Now, you can dive into the details of what you’ll need to set up your development environment and start coding.

Prerequisites

Although you don’t need to be a musician to follow along with this tutorial, a basic understanding of musical concepts such as notes, semitones, octaves, and chords will help you grasp the information more quickly. It’d also be nice if you had a rough idea of how computers represent and process digital audio in terms of sampling rate, bit depth, and file formats like WAV.

But don’t worry if you’re new to these ideas! You’ll be guided through each step in small increments with clear explanations and examples. So, even if you’ve never done any music synthesis before, you’ll have a working digital guitar, or digitar, by the end of this tutorial.

Note: You can learn music theory in half an hour by watching an excellent and free video by Andrew Huang.

The project that you’ll build was tested against Python 3.12 but should work fine in earlier Python versions, too, down to Python 3.10. In case you need a quick refresher, here’s a list of helpful resources covering the most important language features that you’ll take advantage of in your digital guitar journey:

- Assignment expressions

- Data classes

- Enumerations

- Protocols (static duck typing)

- Structural pattern matching

- Type hints

Other than that, you’ll use the following third-party Python packages in your project:

- NumPy to simplify and speed up the underlying sound synthesis

- Pedalboard to apply special effects akin to electric guitar amplifiers

- Pydantic and PyYAML to parse musical tablature representing finger movements on a guitar neck

Familiarity with these will definitely help, but you can also learn as you go and treat this project as an opportunity to practice and improve your Python skills.

Step 1: Set Up the Digital Guitar Project

The first step is to prepare your development environment. To start, you’ll create a new Python project and install the required third-party libraries. Then, you’ll load it into an editor, where you’ll continue to write the necessary code for your guitar synthesizer.

Create a New Project and Install Dependencies

There are many ways to create and manage Python projects. In this tutorial, you’ll use Poetry as a convenient tool for dependency management. If you haven’t already, then install Poetry—for example, with pipx—and start a new project using the src/ folder layout to keep your code organized:

$ poetry new --src --name digitar digital-guitar/

Created package digitar in digital-guitar

This will result in the folder structure below, which includes placeholder files with your project’s metadata and source code that you’ll fill out later:

digital-guitar/
│
├── src/
│   └── digitar/
│       └── __init__.py
│
├── tests/
│   └── __init__.py
│
├── pyproject.toml
└── README.md

Then, change the directory to your new project and add a few dependencies that you’ll rely on later:

$ cd digital-guitar/

$ poetry add numpy pedalboard pydantic pyyaml

After you run this command, Poetry will create an isolated virtual environment in a designated location for your project and install the listed third-party Python packages into it. You should also see a new poetry.lock file in your project’s root folder.

You can now open the digital-guitar/ folder in the Python IDE or code editor of your choice. If you use Visual Studio Code or PyCharm, then both programs will discover the virtual environment created by Poetry. The latter will also associate it with the project, letting you access the installed packages right away.

In VS Code, you may need to manually select the Poetry-managed virtual environment. To do this, bring up the Command Palette, type Python: Select Interpreter, and choose the desired interpreter. Conversely, after you open the folder in PyCharm, confirm the prompt asking you to set up a Poetry environment. The corresponding Python interpreter will appear in the bottom-right corner of the window.

Alternatively, if you’re a die-hard Vim or Sublime Text user, then you can continue to use Poetry in the command line:

$ poetry install

$ poetry run play-tab demo/tabs/doom.yaml

Saved file /home/user/digital-guitar/doom.mp3

The first command will install your project, along with its dependencies, defined in the pyproject.toml file. The second command, which you’ll implement later, will execute a script from within the associated virtual environment managed by Poetry. Note that you’ll use these commands anyway, regardless of which code editor you choose.

Embrace Immutable Data Types in Your Project

With only a few exceptions, you’ll define immutable data types almost exclusively in this project. Immutable objects are those that you can’t alter once you create them. While that may sound limiting at first, it actually brings a host of advantages. Therefore, it’s a good idea to familiarize yourself with the concept of immutability and its impact on your program’s behavior before you get started.

First of all, most immutable objects in Python are hashable, making them valid dictionary keys. Later, this will become essential for caching argument values to avoid repetitive computation. In the long run, it’ll help you reduce the overall time needed for sound synthesis.
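To make the caching argument concrete, here’s a minimal sketch. The Pitch class and the describe() function are hypothetical stand-ins that aren’t part of the project. They only illustrate that two equal instances of a frozen data class hash the same, so a cached function computes its result once:

```python
from dataclasses import dataclass
from functools import cache


@dataclass(frozen=True)
class Pitch:  # Hypothetical type used only for illustration
    name: str
    octave: int


call_count = 0


@cache
def describe(pitch: Pitch) -> str:
    """Stand-in for an expensive computation keyed by an immutable argument."""
    global call_count
    call_count += 1
    return f"{pitch.name}{pitch.octave}"


first = describe(Pitch("A", 4))
second = describe(Pitch("A", 4))  # Equal value, same hash: served from the cache

print(first == second)  # True
print(call_count)  # 1
```

If you removed frozen=True, then the class would become unhashable by default, and the cached function would raise a TypeError when called.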

Other than that, you can safely use immutable objects as default argument values without worrying about unintended side effects. In contrast, mutable default arguments are one of the most common pitfalls in Python, which can lead to surprising and difficult-to-track bugs. By sticking to immutable types where possible, you’ll save yourself a lot of headaches.
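The pitfall is easier to recognize once you’ve seen it. In the sketch below, both functions are made up for illustration. The first one shares a single list across calls, while the second one uses an immutable tuple as its default value:

```python
def add_string_bad(name, strings=[]):
    # The default list is created once and shared by every call
    strings.append(name)
    return strings


def add_string_good(name, strings=()):
    # A tuple can't be modified, so each call builds a new one
    return (*strings, name)


add_string_bad("E")
leaked = list(add_string_bad("A"))

add_string_good("E")
clean = add_string_good("A")

print(leaked)  # ['E', 'A']
print(clean)  # ('A',)
```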

Also, you can think of immutable objects as simple values like integers or strings. When you assign an immutable object to another variable, both names refer to the same object in memory. But because the object’s state can’t change, any operation that appears to modify it through one of these variables actually produces a new object, leaving the original one intact. Thus, your code becomes more predictable and resilient.

Immutable objects are also thread-safe and make it easier to reason about your code. These traits make them especially suitable for the functional programming paradigm, but you’ll enjoy their benefits in the object-oriented realm, too.

Now, it’s time to put this theory into practice by implementing your first immutable data type for this guitar synthesizer project.

Represent Time Instants, Durations, and Intervals

Music is an ephemeral form of art that you can only appreciate for a short period of time when it’s being played or performed. Because music inherently exists in time, it’s crucial that you’re able to properly represent time instants, durations, and intervals if you want to build a robust synthesizer.

Python’s float data type isn’t precise enough for musical timing due to the representation and rounding errors ingrained in the IEEE 754 standard. When you need greater precision, the recommended practice in Python is to replace floating-point numbers with either a Decimal or Fraction data type. However, using these types directly can be cumbersome, and they don’t carry the necessary information about the time units involved.
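As a quick illustration of that rounding error, the sketch below adds up ten durations of a tenth of a second each using all three numeric types. The variable names are arbitrary:

```python
from decimal import Decimal
from fractions import Fraction

float_total = 0.0
decimal_total = Decimal(0)
fraction_total = Fraction(0)

# Ten notes lasting a tenth of a second each should add up to one second
for _ in range(10):
    float_total += 0.1
    decimal_total += Decimal("0.1")
    fraction_total += Fraction(1, 10)

print(float_total == 1.0)  # False
print(decimal_total == 1)  # True
print(fraction_total == 1)  # True
```

The error in a single addition is tiny, but it can accumulate when you chain many durations together, which is what happens when you lay out notes on a timeline.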

To alleviate these nuisances, you’ll implement a few custom classes, starting with the versatile Time data type. Go ahead and create a new Python module named temporal inside your digitar package, and define the following data class in it:

src/digitar/temporal.py

from dataclasses import dataclass
from decimal import Decimal
from fractions import Fraction
from typing import Self

type Numeric = int | float | Decimal | Fraction

@dataclass(frozen=True)
class Time:
    seconds: Decimal

    @classmethod
    def from_milliseconds(cls, milliseconds: Numeric) -> Self:
        return cls(Decimal(str(float(milliseconds))) / 1000)

This class has only one attribute, representing the number of seconds as a Decimal object for improved accuracy. You can create instances of your new class by providing the seconds through its constructor or by calling a class method that expects milliseconds and converts them to seconds wrapped in an appropriate data type.

Note: Since Python 3.12, you should use the type statement to create an explicit type alias. In previous Python releases, you can fall back to the deprecated TypeAlias annotation:

from typing import TypeAlias

Numeric: TypeAlias = int | float | Decimal | Fraction

Alternatively, if you’re running an even earlier version of Python, then just use a plain assignment statement without any keywords or annotations.

Due to Python’s dynamic nature, the default constructor generated by the interpreter for your data class won’t enforce type hints that you annotated your attributes with. In other words, the interpreter won’t verify whether the supplied values are of the expected types. So, in this case, if you pass an integer or a floating-point number instead of a Decimal object, then you’ll inadvertently create an instance with an incorrect attribute type.

Fortunately, you can prevent this issue by implementing your own initializer method in the class that will replace the one that Python generates by default:

src/digitar/temporal.py

from dataclasses import dataclass
from decimal import Decimal
from fractions import Fraction
from typing import Self

type Numeric = int | float | Decimal | Fraction

@dataclass(frozen=True)
class Time:
    seconds: Decimal

    @classmethod
    def from_milliseconds(cls, milliseconds: Numeric) -> Self:
        return cls(Decimal(str(float(milliseconds))) / 1000)

    def __init__(self, seconds: Numeric) -> None:
        match seconds:
            case int() | float():
                object.__setattr__(self, "seconds", Decimal(str(seconds)))
            case Decimal():
                object.__setattr__(self, "seconds", seconds)
            case Fraction():
                object.__setattr__(
                    self, "seconds", Decimal(str(float(seconds)))
                )
            case _:
                raise TypeError(
                    f"unsupported type '{type(seconds).__name__}'"
                )

You use structural pattern matching to detect the type of argument passed to your method at runtime and branch off accordingly. Then, you ensure that the instance attribute, .seconds, is always set to a Decimal object, regardless of the input type. If you pass a Decimal instance to your constructor, then there’s nothing more to do. Otherwise, you use the appropriate conversion or raise an exception to signal the misuse of the constructor.

Because you defined a frozen data class, which makes its instances immutable, you can’t set the attribute value directly or call the built-in setattr() function on an existing object. That would violate the immutability contract. If you ever need to forcefully change the state of a frozen data class instance, then you can resort to a hack by explicitly calling object.__setattr__(), as in the code snippet above.
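Here’s a small sketch of that behavior in isolation. The Fret class is hypothetical and exists only to show the difference between a regular assignment and the explicit call to object.__setattr__():

```python
from dataclasses import FrozenInstanceError, dataclass


@dataclass(frozen=True)
class Fret:  # Hypothetical class used only for illustration
    number: int


fret = Fret(3)
blocked = False

try:
    fret.number = 5  # Regular assignment is rejected by the frozen class
except FrozenInstanceError:
    blocked = True

# Calling the base implementation bypasses the frozen class's own check
object.__setattr__(fret, "number", 5)

print(blocked)  # True
print(fret.number)  # 5
```

Outside of an initializer, it’s best to treat this as a last resort, since it silently breaks the guarantee that the rest of your code relies on.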

You might recall that data classes support a special method, .__post_init__(), for precisely this kind of customization. However, the advantage of overriding the default initializer method instead of implementing .__post_init__() is that you take complete control of the object creation process. As a result, an object can either exist and be in a valid state or not exist at all.

Note: When creating an invalid object is essentially impossible, you can say that your code conforms to the fail-fast philosophy.

Finally, you can implement a convenience method that you’ll use later for translating a duration in seconds into the corresponding number of audio samples:

src/digitar/temporal.py

from dataclasses import dataclass
from decimal import Decimal
from fractions import Fraction
from typing import Self

type Numeric = int | float | Decimal | Fraction
type Hertz = int | float

@dataclass(frozen=True)
class Time:
    # ...

    def get_num_samples(self, sampling_rate: Hertz) -> int:
        return round(self.seconds * round(sampling_rate))

This method takes a sampling rate in hertz (Hz) as an argument, which represents the number of samples per second. Multiplying the duration in seconds by the sampling rate in hertz yields the required number of samples, which you can round to return an integer.

Here’s a short Python REPL session demonstrating how you can take advantage of your new data class:

>>> from digitar.temporal import Time

>>> Time(seconds=0.15)

Time(seconds=Decimal('0.15'))

>>> Time.from_milliseconds(2)

Time(seconds=Decimal('0.002'))

>>> _.get_num_samples(sampling_rate=44100)

88

The underscore (_) in the REPL is an implicit variable that holds the value of the last evaluated expression. In this case, it refers to your Time instance representing two milliseconds.

With the Time class in place, you’re ready to move on to the next step. You’ll dip your toes into the physics of a vibrating string and see how it produces a sound.

Step 2: Model the Acoustic Wave of a Vibrating String

At the end of the day, every sound that you hear is a local disturbance of air pressure made by a vibrating object. Whether it’s your vocal cords, a guitar string, or a loudspeaker, these vibrations push and pull on the air molecules around them. That movement then travels through the air as an acoustic wave until it reaches your eardrum, which vibrates in response.

In this step, you’ll take a closer look at the Karplus-Strong synthesis algorithm, which models the vibration of a plucked string. Then, you’ll implement it in Python using NumPy and produce your first synthetic sound resembling that of a plucked string.

Get to Know the Karplus-Strong Algorithm

The Karplus-Strong algorithm is surprisingly straightforward, given the complex sounds it can produce. In a nutshell, it starts by filling a very short buffer with a burst of random noise or another signal that has rich energy or many frequency components. That noise corresponds to the excitation of an actual string, which initially vibrates in several incoherent patterns of motion.

These seemingly random vibrations gradually become more and more sinusoidal, with a clear sine-like period and frequency that you perceive as a distinctive pitch. While the amplitudes of all vibrations weaken over time due to energy dissipation caused by internal friction and energy transfer, a certain fundamental frequency remains stronger than most of the overtones and harmonics that fade away more quickly.

Note: The string’s physical properties, including its length and mass, favor certain modes of vibration over others. That’s what inherently determines the particular frequency that persists and resonates most prominently.

In a guitar, you can shorten a string by pressing it against one of the frets on the guitar neck to produce a higher-pitched sound. Conversely, some strings are thicker and heavier than others, vibrating slower and resulting in lower-frequency sounds.

Additionally, the guitar’s bridge and nut are the anchor points that reflect the energy traveling back and forth along the string. These reflections interfere with waves that travel in opposite directions, contributing to the formation of standing waves, which are the basis of the musical notes that you hear.

Check out a slow-motion recording of a plucked string to see this phenomenon in action.

The Karplus-Strong algorithm applies a low-pass filter to the signal to simulate the decay of higher frequencies at a faster pace than the fundamental frequency. It does so by calculating a moving average of two consecutive amplitude levels in the buffer, effectively acting as a bare-bones convolution filter. It removes the short-term fluctuations while leaving the longer-term trend.
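To get a feel for this filter, consider the sketch below. It uses made-up sample values, and np.convolve() stands in for the averaging step, which isn’t necessarily how you’d implement it in the synthesizer. A slow trend is mixed with a fast fluctuation that flips its sign on every sample, and the two-point moving average cancels the fluctuation completely:

```python
import numpy as np

# A slowly changing trend plus a fast fluctuation that alternates in sign
slow = np.array([0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5])
fast = np.array([0.2, -0.2] * 4)
signal = slow + fast

# Averaging two consecutive samples is a convolution with [0.5, 0.5]
kernel = [0.5, 0.5]
smoothed = np.convolve(signal, kernel, mode="valid")
trend_only = np.convolve(slow, kernel, mode="valid")

print(np.allclose(smoothed, trend_only))  # True
```

The alternating component is the highest frequency that the sampled signal can carry, which is why it vanishes entirely. Lower frequencies are attenuated less and less the slower they are.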

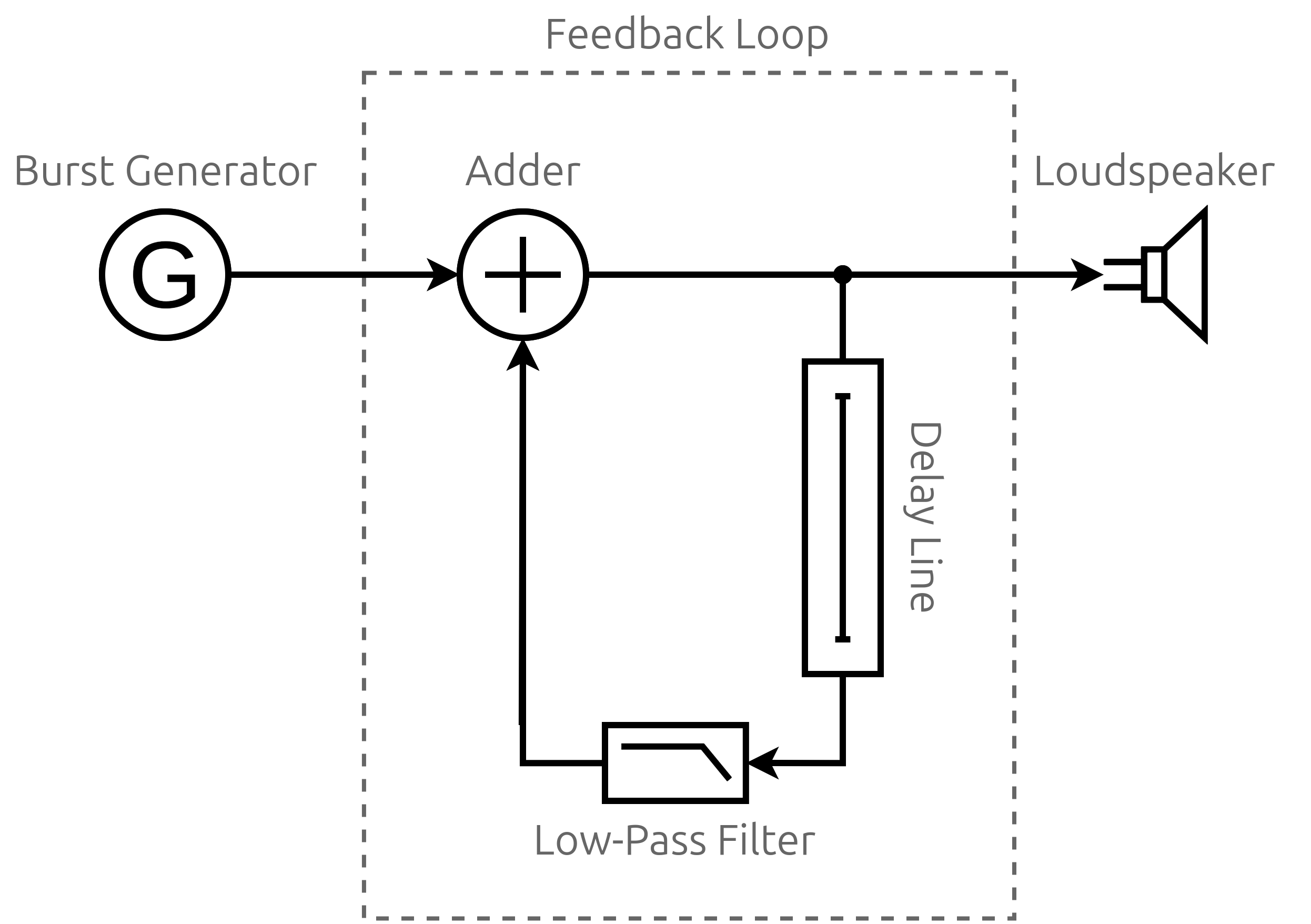

Additionally, the algorithm feeds the averaged values back into the buffer to reinforce and continue the vibration, albeit with gradual energy loss. Take a look at the diagram below to have a better picture of how this positive feedback loop works:

The generator on the left serves as the input to the algorithm, providing the initial burst of noise. It’ll typically be white noise with a uniform probability distribution so that, on average, no particular frequency is emphasized over another. The analogy is similar to white light, which contains all frequencies of the visible spectrum at roughly equal intensities.

The generator shuts down after filling a circular buffer, also known as the delay line, which delays the signal by a certain amount of time before feeding it back to the loop. The phase-shifted signal from the past is then mixed with the current signal. Think of it as the wave’s reflection propagating along the string in the opposite direction.

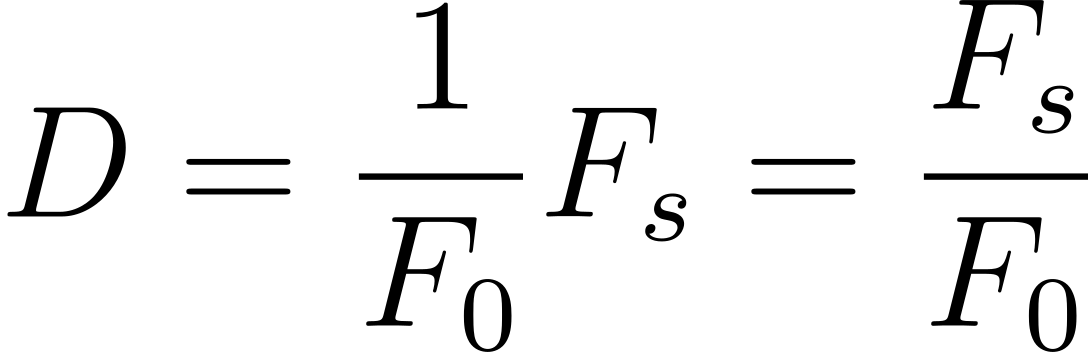

The amount of delay determines the frequency of the virtual string’s vibration. Just like with the guitar’s string length, a shorter delay results in a higher pitch, while a longer delay produces a lower pitch. You can calculate the required size of the buffer—in terms of the number of audio samples—using the following formula:

To get the number of samples, D, multiply the vibration’s period or the reciprocal of the desired fundamental frequency, F0, by your signal’s sampling frequency, Fs. Simply put, divide the sampling frequency by the fundamental frequency.
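As a worked example of this calculation, the sketch below computes the buffer size for a few notes at a 44.1 kHz sampling rate. The delay_length() helper is a hypothetical name, and rounding to the nearest integer is one possible choice, which slightly detunes notes whose period isn’t a whole number of samples:

```python
SAMPLING_RATE = 44100  # Samples per second


def delay_length(fundamental: float, sampling_rate: int = SAMPLING_RATE) -> int:
    """Return the buffer size D = Fs / F0, rounded to whole samples."""
    return round(sampling_rate / fundamental)


print(delay_length(440.0))  # 100 (A4, concert pitch)
print(delay_length(110.0))  # 401 (A2, two octaves lower)
print(delay_length(82.41))  # 535 (E2, the lowest string in standard tuning)
```

Notice how the lower notes need a longer buffer, just like they need a longer or heavier string on a real guitar.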

Then, the delayed signal goes through a low-pass filter before being added to the next sample from the buffer. You can implement both the filter and the adder by applying a weighted average to both samples as long as their weights sum to one or less. Otherwise, you’d be boosting the signal instead of attenuating it. By adjusting the weights, you can control the decay or damping of your virtual string’s vibration.
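You can check the claim about the weights with a rough experiment. The sketch below isn’t the synthesizer’s implementation. It applies the same weight to both samples of a tiny, arbitrary buffer and reports the peak amplitude after fifty passes:

```python
def peak_after_feedback(weight: float, num_passes: int = 50) -> float:
    """Apply the same weight to both samples and return the final peak level."""
    buffer = [1.0, -0.5, 0.25, 0.75]  # Arbitrary starting amplitudes
    for _ in range(num_passes):
        for i in range(len(buffer)):
            neighbor = buffer[(i + 1) % len(buffer)]
            buffer[i] = weight * buffer[i] + weight * neighbor
    return max(abs(sample) for sample in buffer)


print(peak_after_feedback(0.5) <= 1.0)  # True: the weights sum to 1.0
print(peak_after_feedback(0.6) > 1.0)  # True: the weights sum to 1.2
```

When the weights add up to more than one, every pass through the buffer boosts the signal, so the amplitude keeps growing instead of fading out.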

As the processed signal cycles through the buffer, it loses more high-frequency content and settles into a pattern that closely resembles the sound of a plucked string. Thanks to the feedback loop, you get the illusion of a vibrating string that gradually fades out.

Finally, on the far right of the diagram, you can see the output, which could be a loudspeaker or an audio file that you write the resulting audio samples to.

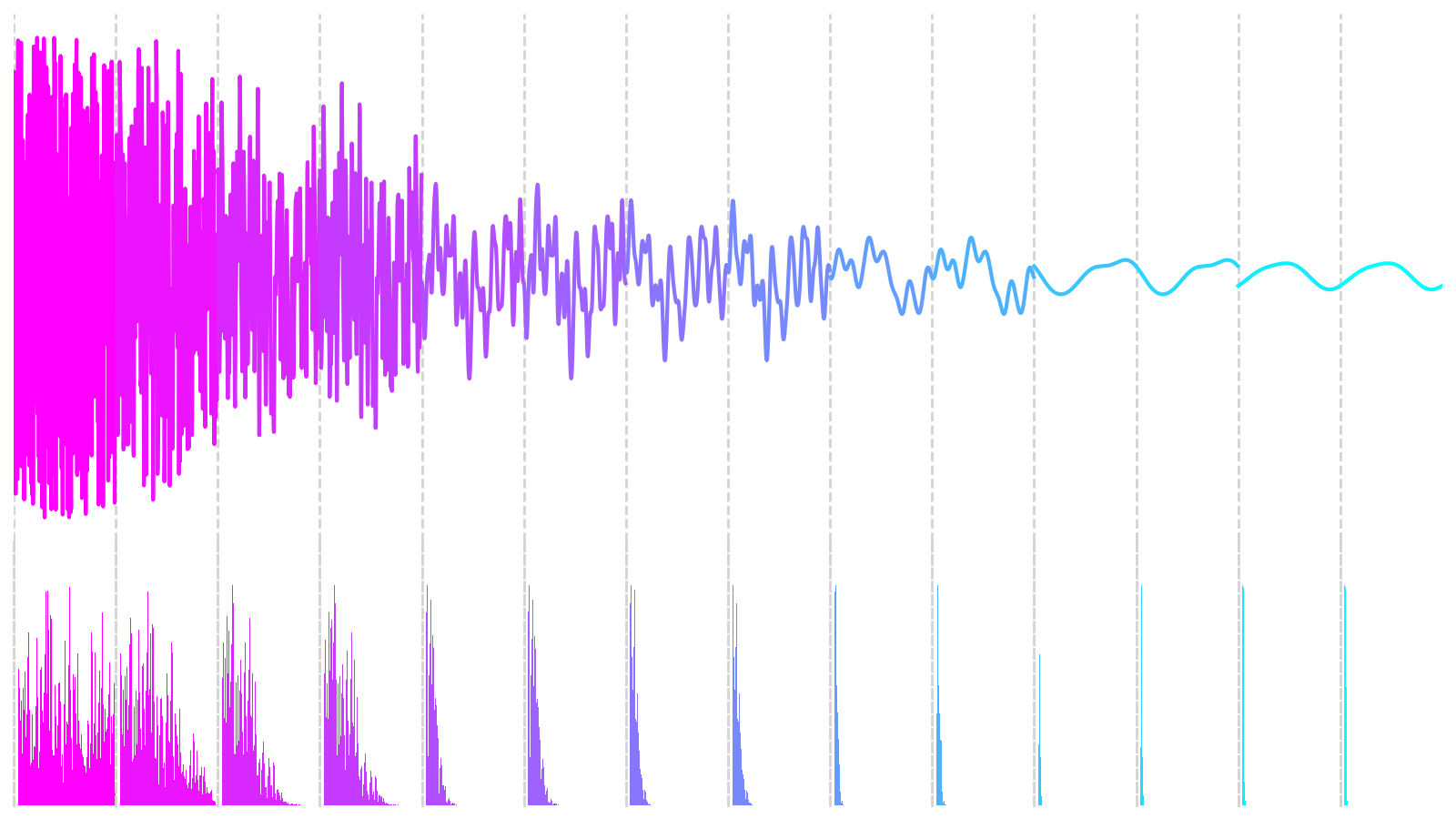

When you plot the waveforms and their corresponding frequency spectra from successive cycles of the feedback loop, you’ll observe the following pattern emerge:

The top graph shows amplitude oscillations over time. The graph just below it depicts the signal’s frequency content at specific moments. Initially, the buffer is filled with random samples, whose frequency distribution is roughly equal across the spectrum. As time goes by, the signal’s amplitude decreases, and the frequency of oscillations starts to concentrate at a particular spectral band. The shape of the waveform resembles a sine wave now.

Since you now understand the principles of the Karplus-Strong algorithm, you can implement the first element of the diagram shown earlier.

Use Random Values as the Initial Noise Burst

There are many kinds of signal generators that you can choose from in sound synthesis. Some of the most popular ones include periodic functions like the square wave, triangle wave, and sawtooth wave. However, in the Karplus-Strong synthesis algorithm, you’ll get the best results with an aperiodic function, such as random noise, due to its rich harmonic content that you can filter over time.

Noise comes in different colors, like pink or white. The difference lies in their spectral power density across frequencies. In white noise, for example, each frequency band has approximately the same power. So, it’s perfect for an initial noise burst because it contains a wide range of harmonics that you can shape through a filter.

To allow for experimenting with the different kinds of signal generators, you’ll define a custom protocol class in a new Python module named burst:

src/digitar/burst.py

from typing import Protocol

import numpy as np

from digitar.temporal import Hertz

class BurstGenerator(Protocol):
    def __call__(self, num_samples: int, sampling_rate: Hertz) -> np.ndarray:
        ...

The point of a protocol class is to specify the desired behavior through method signatures without implementing those methods. In Python, you typically use an ellipsis (...) to indicate that you’ve intentionally left the method body undefined. Therefore, a protocol class acts like an interface in Java, where concrete classes implementing that particular interface provide the underlying logic.

Note: In Python, you’ll need a static type checker like mypy to enforce the protocol adherence by concrete classes.

In this case, you declared the special method .__call__() to make instances of classes that adhere to the protocol callable. Your method expects two arguments:

- The number of audio samples to produce

- The number of samples per second

Additionally, burst generators are meant to return a NumPy array of amplitude levels, which should be floating-point numbers normalized to an interval between minus one and plus one. Such normalization will make the subsequent audio processing more convenient.

Your first concrete generator class will produce white noise, as you’ve already established that it’s most appropriate in this context:

src/digitar/burst.py

# ...

class WhiteNoise:
    def __call__(self, num_samples: int, sampling_rate: Hertz) -> np.ndarray:
        return np.random.uniform(-1.0, 1.0, num_samples)

Even though your new class doesn’t inherit from BurstGenerator, it still conforms to the protocol you defined earlier by providing a .__call__() method with the correct signature. Notice that the method takes the sampling rate as the second argument despite not referencing it anywhere in the body. That’s required to satisfy the protocol.

Note: You’ll need to know the sampling rate for other types of burst generators, such as the periodic functions mentioned at the beginning of this section, which you might want to implement in the future.
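For example, a periodic generator could look roughly like the sketch below. The SineWave class is hypothetical and isn’t part of the project. It only shows why the sampling rate is needed to convert sample indices into time instants:

```python
from dataclasses import dataclass

import numpy as np


@dataclass(frozen=True)
class SineWave:  # Hypothetical generator, not part of the project
    frequency: float  # In hertz

    def __call__(self, num_samples: int, sampling_rate: float) -> np.ndarray:
        # Time instant of each sample, in seconds
        time = np.arange(num_samples) / sampling_rate
        return np.sin(2 * np.pi * self.frequency * time)


# At 44.1 kHz, one period of a 441 Hz sine wave spans exactly 100 samples
samples = SineWave(frequency=441.0)(num_samples=100, sampling_rate=44100)

print(samples.size)  # 100
print(np.isclose(samples[25], 1.0))  # True: a quarter period is the peak
```

Because this class has a .__call__() method with a matching signature, it would conform to a protocol like BurstGenerator without inheriting from it.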

Instances of your WhiteNoise generator class are callable now:

>>> from digitar.burst import WhiteNoise

>>> burst_generator = WhiteNoise()

>>> samples = burst_generator(num_samples=1_000_000, sampling_rate=44100)

>>> samples.min()

-0.9999988055552775

>>> samples.max()

0.999999948864092

>>> samples.mean()

-0.0001278112173601203

The resulting samples are constrained to the range between -1 and 1, with the minimum and maximum values lying very close to these bounds. Also, the mean value is near zero because, over a large number of samples, the positive and negative amplitudes balance each other out, which is what you’d expect from a uniform distribution that’s symmetric around zero.

Okay. The next big component in the diagram of the Karplus-Strong algorithm is the feedback loop itself. You’re going to break it down into smaller pieces now.

Filter Higher Frequencies With a Feedback Loop

An elegant way to simulate a feedback loop in Python entails wiring generator functions together and sending values to them. You can also define asynchronous functions and hook them up as cooperative coroutines to achieve a similar effect. However, in this tutorial, you’ll use a much more straightforward and slightly more efficient implementation based on iteration.

Create another module named synthesis in your Python package and define the following class placeholder:

src/digitar/synthesis.py

from dataclasses import dataclass

from digitar.burst import BurstGenerator, WhiteNoise

AUDIO_CD_SAMPLING_RATE = 44100

@dataclass(frozen=True)
class Synthesizer:
    burst_generator: BurstGenerator = WhiteNoise()
    sampling_rate: int = AUDIO_CD_SAMPLING_RATE

This frozen data class consists of two optional attributes, which let you specify the expected burst generator implementation and the sampling rate. If you skip those parameters when creating a new instance of the class, then you’ll rely on the defaults, which use the white noise generator at a 44.1 kHz sampling rate defined as a Python constant.

Note: The 44.1 kHz sampling rate is a common choice for audio applications like audio CDs. It means there are 44,100 samples of audio data recorded every second. That corresponds to a Nyquist frequency of 22.05 kHz, which is just above the roughly 20 kHz upper limit of human hearing, so audible frequencies can be reproduced accurately without aliasing artifacts.
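If you’d like to see aliasing in numbers, then here’s a small sketch with arbitrarily chosen frequencies. It samples a 30 kHz tone, which lies above the Nyquist frequency, and shows that the resulting samples are indistinguishable from those of a phase-inverted 14.1 kHz tone:

```python
import numpy as np

SAMPLING_RATE = 44100  # Hz, the same as on audio CDs
nyquist = SAMPLING_RATE / 2
print(nyquist)  # 22050.0

# Time instants of the first 100 samples, in seconds
time = np.arange(100) / SAMPLING_RATE

# A 30 kHz tone exceeds the Nyquist frequency...
too_high = np.sin(2 * np.pi * 30_000 * time)

# ...so its samples coincide with an inverted tone at 44,100 - 30,000 Hz
alias = -np.sin(2 * np.pi * (SAMPLING_RATE - 30_000) * time)

print(np.allclose(too_high, alias))  # True
```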

Using the standard-library itertools package, you can now implement an infinite iterator that will cycle() through the buffer of audio samples. The following code snippet mirrors the Karplus-Strong diagram that you saw in an earlier section:

src/digitar/synthesis.py

from dataclasses import dataclass
from itertools import cycle
from typing import Iterator

import numpy as np

from digitar.burst import BurstGenerator, WhiteNoise
from digitar.temporal import Hertz, Time

AUDIO_CD_SAMPLING_RATE = 44100

@dataclass(frozen=True)
class Synthesizer:
    burst_generator: BurstGenerator = WhiteNoise()
    sampling_rate: int = AUDIO_CD_SAMPLING_RATE

    def vibrate(
        self, frequency: Hertz, duration: Time, damping: float = 0.5
    ) -> np.ndarray:
        assert 0 < damping <= 0.5

        def feedback_loop() -> Iterator[float]:
            buffer = self.burst_generator(
                num_samples=round(self.sampling_rate / frequency),
                sampling_rate=self.sampling_rate
            )
            for i in cycle(range(buffer.size)):
                yield (current_sample := buffer[i])
                next_sample = buffer[(i + 1) % buffer.size]
                buffer[i] = (current_sample + next_sample) * damping

You define the .vibrate() method that takes the vibration’s fundamental frequency, duration, and an optional damping coefficient as arguments. When left unspecified, the coefficient’s default value halves the sum of two adjacent samples with each cycle, which is analogous to computing a moving average. It simulates energy loss as the vibration fades out.

Note: To prevent passing in an incorrect damping coefficient, which would cause the algorithm to amplify the samples instead of damping them, you placed an assert statement with an appropriate condition at the beginning of your method. It’ll reject values outside the expected range, although you should only rely on such assertions during development. There are better ways to enforce invariants in your code that you’ll use later.

So far, your method defines an inner function that returns a generator iterator when called. The resulting generator object allocates and fills a buffer using the provided burst generator. The function then enters an infinite for loop that keeps yielding values from the buffer indefinitely in a round-robin fashion because it has no stopping condition.

You use the walrus operator (:=) to simultaneously yield and intercept the current amplitude value in each cycle. When the generator resumes, you calculate the average of the current sample and its neighbor to simulate the damping effect, and you write the result back to the buffer. The modulo operator (%) ensures that the index wraps around to the beginning of the buffer once it reaches the end, creating a circular buffer effect.
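To trace these mechanics by hand, you can run the same loop on a tiny list with made-up values. This is only a sketch that swaps the NumPy array for a plain Python list and uses islice() to take the first six samples:

```python
from itertools import cycle, islice

buffer = [1.0, 0.0, -1.0]  # Made-up samples instead of a noise burst


def feedback_loop():
    for i in cycle(range(len(buffer))):
        yield (current := buffer[i])  # Hand out the sample and remember it
        neighbor = buffer[(i + 1) % len(buffer)]  # Wraps around at the end
        buffer[i] = (current + neighbor) * 0.5  # Overwrite with the average


first_six = list(islice(feedback_loop(), 6))
print(first_six)  # [1.0, 0.0, -1.0, 0.5, -0.5, -0.25]
```

The first three values come straight from the initial buffer, while the next three are the averaged samples from the second trip around it. Note that the last sample of the first trip is averaged with the already updated first element.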

To consume a finite number of samples determined by the duration parameter, you can wrap your feedback_loop() with a call to NumPy’s fromiter() function:

src/digitar/synthesis.py

# ...

@dataclass(frozen=True)
class Synthesizer:
    # ...

    def vibrate(
        self, frequency: Hertz, duration: Time, damping: float = 0.5
    ) -> np.ndarray:
        assert 0 < damping <= 0.5

        def feedback_loop() -> Iterator[float]:
            buffer = self.burst_generator(
                num_samples=round(self.sampling_rate / frequency),
                sampling_rate=self.sampling_rate
            )
            for i in cycle(range(buffer.size)):
                yield (current_sample := buffer[i])
                next_sample = buffer[(i + 1) % buffer.size]
                buffer[i] = (current_sample + next_sample) * damping

        return np.fromiter(
            feedback_loop(),
            np.float64,
            duration.get_num_samples(self.sampling_rate),
        )

As long as the duration parameter is an instance of the Time data class that you defined earlier, you can convert the number of seconds into the corresponding number of audio samples by calling .get_num_samples(). Just remember to pass the correct sampling rate. You should also specify float64 as the data type for the elements of your NumPy array to ensure high precision and avoid unnecessary type conversions.

Note: You want to take advantage of a high-precision numeric type during audio processing. However, you’ll usually encode the resulting audio samples with a more memory-efficient type, such as a 16-bit signed integer.
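As a rough sketch of such an encoding step, you could scale normalized samples to the 16-bit range like below. The sample values are made up, and scaling by the largest positive level is just one common convention:

```python
import numpy as np

samples = np.array([-1.0, -0.25, 0.0, 0.25, 1.0])  # float64 by default

# Scale to the 16-bit range, round to the nearest integer, then cast
max_level = np.iinfo(np.int16).max  # 32767
pcm = np.rint(samples * max_level).astype(np.int16)

print(pcm.tolist())  # [-32767, -8192, 0, 8192, 32767]
print(samples.nbytes, pcm.nbytes)  # 40 10
```

The integer version takes a quarter of the memory, at the cost of a small rounding error in each sample.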

You’re almost done implementing the Karplus-Strong synthesis algorithm, but your code has two minor issues that you need to address first.

Remove the DC Bias and Normalize Audio Samples

Depending on the initial burst and the damping coefficient, you may end up with values outside the expected amplitude range, or the values may drift away from zero, introducing a DC bias. That could result in audible clicks or other unpleasant artifacts. To fix these potential problems, you’ll remove the bias by subtracting the signal’s mean value, and you’ll normalize the resulting samples afterward.

NumPy doesn’t provide built-in functions for these tasks, but making your own isn’t too complicated. Start by creating a new module named processing in your package with these two functions:

src/digitar/processing.py

import numpy as np

def remove_dc(samples: np.ndarray) -> np.ndarray:

return samples - samples.mean()

def normalize(samples: np.ndarray) -> np.ndarray:

return samples / np.abs(samples).max()

Both functions take advantage of NumPy’s vectorization capabilities. The first one subtracts the mean value from each element, and the second divides all samples by the maximum absolute value in the input array.
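If you’d like to check the arithmetic by hand, then here’s a small sketch of the same two steps on plain Python lists, so it runs without NumPy. The sample values are made up, and the guard against silent input is an assumption that isn’t part of the tutorial’s code:

```python
from statistics import fmean


def remove_dc(samples: list[float]) -> list[float]:
    """Center the signal around zero by subtracting its mean."""
    mean = fmean(samples)
    return [sample - mean for sample in samples]


def normalize(samples: list[float]) -> list[float]:
    """Scale the signal so that its largest absolute value becomes one."""
    peak = max(abs(sample) for sample in samples)
    if peak == 0:
        # Assumption: return silence unchanged instead of dividing by zero.
        return list(samples)
    return [sample / peak for sample in samples]


biased = [1.0, 3.0, 5.0, 3.0]  # Hypothetical samples hovering around 3.0
centered = remove_dc(biased)
scaled = normalize(centered)

print(centered)
print(scaled)
```

After removing the mean of 3.0, the samples swing symmetrically between -2.0 and 2.0, and normalization maps them onto the range from -1.0 to 1.0.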

Now, you can import and call your helper functions in the synthesizer before returning the array of computed audio samples:

src/digitar/synthesis.py

from dataclasses import dataclass

from itertools import cycle

from typing import Iterator

import numpy as np

from digitar.burst import BurstGenerator, WhiteNoise

from digitar.processing import normalize, remove_dc

from digitar.temporal import Hertz, Time

AUDIO_CD_SAMPLING_RATE = 44100

@dataclass(frozen=True)

class Synthesizer:

# ...

def vibrate(

self, frequency: Hertz, duration: Time, damping: float = 0.5

) -> np.ndarray:

# ...

return normalize(

remove_dc(

np.fromiter(

feedback_loop(),

np.float64,

duration.get_num_samples(self.sampling_rate),

)

)

)

Although the order may not make a significant difference, it’s customary to remove the DC bias before performing the normalization. Removing the DC component ensures that your signal is centered around zero. Otherwise, it might still have a DC component, which could affect the overall scale of the normalization.

Great! You’ve just implemented the Karplus-Strong synthesis algorithm in Python. Why not put it to the test to hear the results?

Pluck the String to Produce Monophonic Sounds

Strictly speaking, your synthesizer returns a NumPy array of normalized amplitude levels instead of audio samples directly corresponding to digital sound. At the same time, you can choose from several data formats, compression schemes, and encodings to determine how to store and transmit your audio data.

For example, Linear Pulse-Code Modulation (LPCM) is a standard encoding in uncompressed WAV files, which typically use 16-bit signed integers to represent audio samples. Other formats like MP3 employ lossy compression algorithms that reduce file size by removing information that’s less perceivable by the human ear. These formats can offer constant or variable bitrates depending on the desired quality and file size.
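To get a feel for what 16-bit LPCM encoding involves, consider this rough sketch, which relies only on Python’s standard library. The to_int16() helper and the sine wave test signal are hypothetical names and data chosen for illustration, and the clipping strategy is just one of several possible choices:

```python
import io
import math
import struct
import wave

SAMPLING_RATE = 44100  # Same rate as the tutorial's AUDIO_CD_SAMPLING_RATE


def to_int16(samples: list[float]) -> list[int]:
    """Map amplitudes from [-1.0, 1.0] onto 16-bit signed integers."""
    return [round(max(-1.0, min(1.0, sample)) * 32767) for sample in samples]


# Hypothetical test signal: a tenth of a second of a 440 Hz sine wave.
tone = [
    math.sin(2 * math.pi * 440 * n / SAMPLING_RATE)
    for n in range(SAMPLING_RATE // 10)
]
pcm = to_int16(tone)

# Write a mono, 16-bit LPCM stream into an in-memory WAV container.
wav_bytes = io.BytesIO()
with wave.open(wav_bytes, "wb") as wav_file:
    wav_file.setnchannels(1)
    wav_file.setsampwidth(2)  # Two bytes per sample
    wav_file.setframerate(SAMPLING_RATE)
    wav_file.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))

print(len(pcm), "samples,", len(wav_bytes.getvalue()), "bytes")
```

Each sample takes up exactly two bytes regardless of its value, which is why uncompressed audio grows linearly with the duration.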

To avoid getting bogged down by the technicalities, you’ll use Spotify’s Pedalboard library, which can handle these low-level details for you. You’ll supply the normalized amplitude levels from your synthesizer, and Pedalboard will encode them accordingly depending on your preferred data format:

>>> from pedalboard.io import AudioFile

>>> from digitar.synthesis import Synthesizer

>>> from digitar.temporal import Time

>>> frequencies = [261.63, 293.66, 329.63, 349.23, 392, 440, 493.88, 523.25]

>>> duration = Time(seconds=0.5)

>>> damping = 0.495

>>> synthesizer = Synthesizer()

>>> with AudioFile("monophonic.mp3", "w", synthesizer.sampling_rate) as file:

... for frequency in frequencies:

... file.write(synthesizer.vibrate(frequency, duration, damping))

In this case, you save the synthesized sounds as an MP3 file using the library’s default parameters. The code snippet above produces an MP3 file with a mono channel sampled at 44.1 kHz and a constant bitrate of 320 kilobits per second, which is the highest quality supported by this format. Remember to run the code from within your project’s virtual environment to access the required modules.

To confirm some of these audio properties, you can open the file for reading and check a few of its attributes:

>>> with AudioFile("monophonic.mp3") as file:

... print(f"{file.num_channels = }")

... print(f"{file.samplerate = }")

... print(f"{file.file_dtype = }")

...

file.num_channels = 1

file.samplerate = 44100

file.file_dtype = 'float32'

Because MP3 files are compressed, you can’t calculate their bitrate from these parameters. The actual bitrate is stored in the file’s header along with other metadata, which you can verify using an external program like MediaInfo:

$ mediainfo monophonic.mp3

General

Complete name : monophonic.mp3

Format : MPEG Audio

File size : 159 KiB

Duration : 4 s 48 ms

Overall bit rate mode : Constant

Overall bit rate : 320 kb/s

Writing library : LAME3.100

(...)
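As a sanity check, you can compare the reported numbers against some back-of-the-envelope arithmetic. The two helper functions below are hypothetical and only estimate the audio payload, ignoring any headers, padding, or metadata that the encoder adds:

```python
def pcm_bitrate(sampling_rate: int, bit_depth: int, num_channels: int) -> int:
    """Return the bitrate of uncompressed LPCM audio in bits per second."""
    return sampling_rate * bit_depth * num_channels


def cbr_audio_bytes(bitrate: int, duration_ms: int) -> int:
    """Estimate the audio payload of a constant-bitrate file in bytes."""
    return bitrate * duration_ms // 8000  # 8 bits per byte, 1000 ms per second


print(pcm_bitrate(44100, 16, 1))  # 16-bit mono at 44.1 kHz
print(cbr_audio_bytes(320_000, 4048) / 1024)  # Payload in KiB
```

Uncompressed 16-bit mono audio at 44.1 kHz would need 705,600 bits per second, more than twice the MP3 bitrate. At a constant 320 kb/s, four seconds and 48 milliseconds of audio take up about 158 KiB, which is close to the file size that MediaInfo reports.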

The generated file contains a series of musical tones based on the frequencies that you supplied. Each tone is sustained for half a second, resulting in a melody that progresses through the notes do-re-mi-fa-sol-la-ti-do. These tones are the solfeggio notes, often used to teach the musical scale. Below is what they look like when plotted as a waveform:

Notice that each tone stops abruptly before getting a chance to fade out completely. You can experiment with a longer or shorter duration and adjust the damping parameter. But, no matter how hard you try, you can only produce monophonic sounds without the possibility of overlaying multiple notes.

In the next section, you’ll learn how to synthesize more complex sounds, getting one step closer to simulating a full-fledged guitar.

Step 3: Simulate Strumming Multiple Guitar Strings

At this point, you can generate audio files consisting of monophonic sounds. This means that as soon as the next sound starts playing, the previous one stops, resulting in a series of discrete tones. That’s fine for old-school cellphone ringtones or retro video game soundtracks. However, when a guitarist strums several strings at once, they produce a chord with notes that resonate together.

In this section, you’ll tweak your synthesizer class to produce polyphonic sounds by allowing the individual notes to overlap and interfere with each other.

Blend Multiple Notes Into a Polyphonic Sound

To play multiple notes simultaneously, you can mix the corresponding acoustic waves. Go ahead and define another method in your synthesizer class, which will be responsible for overlaying samples from multiple sounds on top of each other:

src/digitar/synthesis.py

from dataclasses import dataclass

from itertools import cycle

from typing import Iterator, Sequence

# ...

@dataclass(frozen=True)

class Synthesizer:

# ...

def overlay(self, sounds: Sequence[np.ndarray]) -> np.ndarray:

return np.sum(sounds, axis=0)

This method takes a sequence of equal-sized NumPy arrays comprising the amplitudes of several sounds to mix. The method then returns the element-wise arithmetic sum of the input sound waves.

Note: While you should generally remain flexible by allowing your functions and methods to take any iterable as their input arguments, in this case, NumPy expects the iterable to have a fixed size.

Assuming you’ve already removed the DC bias from the individual sounds you want to mix, you no longer need to worry about it. Additionally, you don’t want to normalize the overlaid sounds at this stage because their number may vary greatly within a single song. Doing so now could lead to inconsistent volume levels, making certain musical chords barely audible. Instead, you must apply normalization before writing the entire song into the file.

Suppose you wanted to simulate a performer plucking all the strings of a guitar at the same time. Here’s how you could do that using your new method:

>>> from pedalboard.io import AudioFile

>>> from digitar.processing import normalize

>>> from digitar.synthesis import Synthesizer

>>> from digitar.temporal import Time

>>> frequencies = [329.63, 246.94, 196.00, 146.83, 110.00, 82.41]

>>> duration = Time(seconds=3.5)

>>> damping = 0.499

>>> synthesizer = Synthesizer()

>>> sounds = [

... synthesizer.vibrate(frequency, duration, damping)

... for frequency in frequencies

... ]

>>> with AudioFile("polyphonic.mp3", "w", synthesizer.sampling_rate) as file:

... file.write(normalize(synthesizer.overlay(sounds)))

You define the frequencies corresponding to the standard tuning of a six-string guitar and set the duration of an individual note to three and a half seconds. Additionally, you adjust the damping coefficient to a slightly greater value than before to make the strings vibrate longer. Then, you synthesize each string’s sound in a list comprehension and combine them using your .overlay() method.

This will be the resulting waveform of the audio file that you’ll create after you run the code listed above:

It’s unquestionably an improvement over the monophonic version. However, the synthesized file still sounds a bit artificial when you play it. That’s because, with a real guitar, the strings are never plucked at precisely the same moment. There’s always a slight delay between each string being plucked. The resulting wave interactions create complex resonances, adding to the richness and authenticity of the sound.

Next, you’ll introduce an adjustable delay between the subsequent strokes to give your polyphonic sound a more realistic feel. As a result, you’ll be able to discern the strumming direction!

Adjust the Stroke Speed to Control the Rhythm

When you stroke the strings of a guitar quickly, the delay between successive plucks is relatively short, making the overall sound loud and sharp. Conversely, the delay increases as you pluck the strings more slowly and gently. You can take this technique to the extreme by playing an arpeggio or a broken chord where you play the notes one after the other rather than simultaneously.

Now, modify your .overlay() method so that it accepts an additional delay parameter representing the time interval between each stroke:

src/digitar/synthesis.py

# ...

@dataclass(frozen=True)

class Synthesizer:

# ...

def overlay(

self, sounds: Sequence[np.ndarray], delay: Time

) -> np.ndarray:

num_delay_samples = delay.get_num_samples(self.sampling_rate)

num_samples = max(

i * num_delay_samples + sound.size

for i, sound in enumerate(sounds)

)

samples = np.zeros(num_samples, dtype=np.float64)

for i, sound in enumerate(sounds):

offset = i * num_delay_samples

samples[offset : offset + sound.size] += sound

return samples

Based on the current sampling frequency of your synthesizer, you convert the delay in seconds into the corresponding number of samples. Then, you find the total number of samples to allocate for the resulting array, which you initialize with zeros. Finally, you iterate over the sounds, adding them into your samples array with the appropriate offset.

Note: This modified version of .overlay() lets you mix variable-length sounds whose arrays no longer have to be of equal size. That can be particularly handy if you want to allow one or more strings to ring out longer than the rest, creating an interesting texture.
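To see how the offsets line up, you can trace the same logic on a few short lists. This is only a sketch under simplifying assumptions: it takes the delay directly as a number of samples rather than a Time instance, and it uses plain Python lists with made-up values:

```python
def overlay(sounds: list[list[float]], num_delay_samples: int) -> list[float]:
    """Mix sounds of any length, starting each one a fixed delay after the last."""
    num_samples = max(
        i * num_delay_samples + len(sound) for i, sound in enumerate(sounds)
    )
    samples = [0.0] * num_samples
    for i, sound in enumerate(sounds):
        offset = i * num_delay_samples
        for j, value in enumerate(sound):
            samples[offset + j] += value
    return samples


sounds = [[1.0, 1.0, 1.0], [2.0, 2.0], [3.0]]

print(overlay(sounds, 0))  # All sounds start together
print(overlay(sounds, 2))  # Each sound starts two samples after the previous one

# A forty-millisecond delay expressed in samples at 44.1 kHz:
print(round(0.040 * 44100))
```

With no delay, the result is as long as the longest sound. With a delay of two samples, the third sound starts at index four, so the output grows to five samples.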

Here’s the same example you saw in the previous section. However, you now have a forty-millisecond delay between the individual plucks, and you vary each string’s vibration duration depending on its frequency:

>>> from pedalboard.io import AudioFile

>>> from digitar.processing import normalize

>>> from digitar.synthesis import Synthesizer

>>> from digitar.temporal import Time

>>> frequencies = [329.63, 246.94, 196.00, 146.83, 110.00, 82.41]

>>> delay = Time.from_milliseconds(40)

>>> damping = 0.499

>>> synthesizer = Synthesizer()

>>> sounds = [

... synthesizer.vibrate(frequency, Time(3.5 + 0.25 * i), damping)

... for i, frequency in enumerate(frequencies)

... ]

>>> with AudioFile("arpeggio.mp3", "w", synthesizer.sampling_rate) as file:

... file.write(normalize(synthesizer.overlay(sounds, delay)))

Notes with a lower frequency will have a slightly longer duration than their higher-frequency counterparts. This simulates the inertia of real strings, which tend to vibrate longer if they are thicker or longer.

Below is the corresponding waveform, which appears to have more variation and complexity:

If you look closely at this waveform, then you’ll see the individual peaks at the beginning, indicating where the subsequent notes start. They’re equally spaced, as determined by your delay parameter.

By changing the delay, you can adjust the stroke speed to create a faster and more dynamic rhythm or a slower, more mellow sound. You’ll use this parameter to enhance the expressiveness of your virtual instrument and mimic the musical phrasings that a guitarist might naturally use.

Now that you have control over the timing of each note in a chord, you can experiment further by changing the order in which you play them.

Reverse the Strumming Direction to Alter the Timbre

Guitarists often vary not just the speed but also the strumming direction as they play. By alternating between downstrokes and upstrokes, they can emphasize different strings and change the timbre of the same chord. Downstrokes tend to sound more powerful and are usually louder because the pick—or your finger—hits the lower, thicker strings first. Conversely, upstrokes often highlight the higher, thinner strings, producing a lighter sound.

You can express both the strumming speed and direction with custom data types. Create a Python module named stroke in your digitar package and define these two classes in it:

src/digitar/stroke.py

import enum

from dataclasses import dataclass

from typing import Self

from digitar.temporal import Time

class Direction(enum.Enum):

DOWN = enum.auto()

UP = enum.auto()

@dataclass(frozen=True)

class Velocity:

direction: Direction

delay: Time

@classmethod

def down(cls, delay: Time) -> Self:

return cls(Direction.DOWN, delay)

@classmethod

def up(cls, delay: Time) -> Self:

return cls(Direction.UP, delay)

The first class is a Python enumeration, which assigns unique values to the mutually exclusive stroke directions, of which there are two. The following class, Velocity, uses that enumeration as its member, combining it with the delay or the interval between the subsequent plucks.

You can quickly instantiate objects to represent guitar strokes by calling convenient class methods on your Velocity class:

>>> from digitar.stroke import Direction, Velocity

>>> from digitar.temporal import Time

>>> slow = Time.from_milliseconds(40)

>>> fast = Time.from_milliseconds(20)

>>> Velocity.down(slow)

Velocity(direction=<Direction.DOWN: 1>, delay=Time(seconds=Decimal('0.04')))

>>> Velocity.up(fast)

Velocity(direction=<Direction.UP: 2>, delay=Time(seconds=Decimal('0.02')))

The first stroke is slow and directed downward, while the second is faster and directed upward. You’ll use these new data types in the project to control the musical feel of your digital guitar.
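One way to picture what a stroke velocity implies is to turn it into a schedule of plucks. The stroke_schedule() function below is a hypothetical helper rather than part of the digitar package, and it takes the delay in whole milliseconds to stay self-contained:

```python
import enum


class Direction(enum.Enum):
    DOWN = enum.auto()
    UP = enum.auto()


def stroke_schedule(
    num_strings: int, direction: Direction, delay_ms: int
) -> list[tuple[int, int]]:
    """Return (string number, onset in milliseconds) pairs for one stroke."""
    string_numbers = list(range(1, num_strings + 1))
    if direction is Direction.DOWN:
        # A downstroke starts at the thickest string, which has the highest number.
        string_numbers.reverse()
    return [(number, i * delay_ms) for i, number in enumerate(string_numbers)]


print(stroke_schedule(6, Direction.DOWN, 40))
print(stroke_schedule(6, Direction.UP, 20))
```

A slow downstroke reaches the first string after 200 milliseconds, while a fast upstroke covers all six strings in half that time, starting from the opposite side.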

But there are many kinds of guitars in the wild. Some have fewer strings, others are bigger or smaller, and some need an electronic amplifier. On top of that, you can tune each instrument to different notes. So, before you can properly take advantage of the stroke velocity, you need to build a virtual instrument and learn how to handle it.

Step 4: Play Musical Notes on the Virtual Guitar

At this point, you can produce monophonic as well as polyphonic sounds based on specific frequencies with your digital guitar. In this step, you’ll model the relationship between those frequencies and the musical notes they correspond to. Additionally, you’ll simulate the tuning of the guitar strings and the interaction with the fretboard to create a realistic playing experience.

Press a Vibrating String to Change Its Pitch

Most guitars have between four and twelve strings, each capable of producing a variety of pitches. When you pluck an open string without touching the guitar neck, the string starts to vibrate at its fundamental frequency. However, once you press the string against one of the metal strips or frets along the fingerboard, you effectively shorten the string, changing its vibration frequency when plucked.

Note: The shorter the string, the higher the frequency of vibration or pitch that you can hear. Conversely, the longer the string, the lower the pitch. That’s why a double bass has such a long neck, and a ukulele has a shorter one.

Each guitar fret represents an increase in pitch by a single semitone or a half-step on the chromatic scale—the standard scale in Western music. The chromatic scale divides each octave, or a set of eight musical notes, into twelve equally spaced semitones, with a ratio of the twelfth root of two between them. When you go all the way up to the twelfth semitone, you’ll double the frequency of the note that marks the beginning of an octave.

The distances between adjacent frets in a fretted instrument follow the same principle, reflecting the logarithmic nature of the frequency increase at each step. As you move along the fretboard and press down on successive frets, you’ll notice the pitch of the string progressively increasing, one semitone at a time.
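For an ideal string, the frequency is inversely proportional to the vibrating length, so you can estimate where each fret sits along the neck. The following sketch assumes a scale length of 650 millimeters, which is only an example value because real instruments vary:

```python
def fret_position(scale_length: float, fret_number: int) -> float:
    """Return the distance from the nut to the given fret."""
    # Pressing fret n leaves a vibrating length of scale_length / 2 ** (n / 12).
    return scale_length * (1 - 2 ** (-fret_number / 12))


SCALE_LENGTH_MM = 650.0  # Assumed scale length; real instruments vary

positions = [fret_position(SCALE_LENGTH_MM, n) for n in range(13)]
spacings = [b - a for a, b in zip(positions, positions[1:])]

for fret_number, spacing in enumerate(spacings, start=1):
    print(f"Fret {fret_number:>2}: {positions[fret_number]:6.1f} mm (+{spacing:.1f} mm)")
```

The twelfth fret lands exactly halfway along the string, which doubles the frequency, and the gaps between successive frets keep shrinking as you move toward the body.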

On a typical six-string guitar, you’ll usually find about twenty or more frets, amounting to over a hundred pitches! However, when you account for the duplicates due to overlapping octaves, the actual number of distinctive pitches decreases. In reality, you can play about four octaves of musical notes, which is short of fifty unique pitches. On the other hand, the virtual guitar that you’re about to build has no such limits!
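You can verify these estimates with a quick count. The sketch below assumes a six-string guitar in standard tuning with exactly twenty frets and expresses every open string as a number of semitones above the lowest one:

```python
# Open strings E2, A2, D3, G3, B3, and E4 as semitones above E2
OPEN_STRING_OFFSETS = (0, 5, 10, 15, 19, 24)
NUM_FRETS = 20  # Assumed fret count; fret zero stands for the open string

fingerings = [
    (string_index, fret)
    for string_index in range(len(OPEN_STRING_OFFSETS))
    for fret in range(NUM_FRETS + 1)
]
unique_pitches = {
    OPEN_STRING_OFFSETS[string_index] + fret
    for string_index, fret in fingerings
}

print(len(fingerings))
print(len(unique_pitches))
print(max(unique_pitches) / 12)
```

Under these assumptions, there are 126 ways to pluck a string but only 45 distinct pitches, spanning a little under four octaves.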

In Python, you can implement a semitone-based pitch adjustment like this:

src/digitar/pitch.py

from dataclasses import dataclass

from typing import Self

from digitar.temporal import Hertz

@dataclass(frozen=True)

class Pitch:

frequency: Hertz

def adjust(self, num_semitones: int) -> Self:

return Pitch(self.frequency * 2 ** (num_semitones / 12))

Once you create a new pitch, you can modify the corresponding fundamental frequency by calling .adjust() with the desired number of semitones. A positive number of semitones will increase the frequency, a negative number will decrease it, while zero will keep it intact. Note that you use Python’s exponentiation operator (**) to raise two to the power of the number of semitones divided by twelve, which is equivalent to multiplying the frequency by the twelfth root of two once per semitone.

To confirm that your code is working as expected, you can run the following test:

>>> from digitar.pitch import Pitch

>>> pitch = Pitch(frequency=110.0)

>>> semitones = [-12, 12, 24] + list(range(12))

>>> for num_semitones in sorted(semitones):

... print(f"{num_semitones:>3}: {pitch.adjust(num_semitones)}")

...

-12: Pitch(frequency=55.0)

0: Pitch(frequency=110.0)

1: Pitch(frequency=116.54094037952248)

2: Pitch(frequency=123.47082531403103)

3: Pitch(frequency=130.8127826502993)

4: Pitch(frequency=138.59131548843604)

5: Pitch(frequency=146.8323839587038)

6: Pitch(frequency=155.56349186104046)

7: Pitch(frequency=164.81377845643496)

8: Pitch(frequency=174.61411571650194)

9: Pitch(frequency=184.9972113558172)

10: Pitch(frequency=195.99771799087463)

11: Pitch(frequency=207.65234878997256)

12: Pitch(frequency=220.0)

24: Pitch(frequency=440.0)

You start by defining a pitch produced by a string vibrating at 110 Hz, which corresponds to the A note in the second octave. Then, you iterate over a list of semitone numbers to adjust the pitch accordingly.

Depending on whether the given number is negative or positive, adjusting the frequency by exactly twelve semitones (one octave) either halves or doubles the original frequency of that pitch. Anything in between sets the frequency to the corresponding semitone within that octave.
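The relationship also works in reverse. Given two frequencies, you can recover the number of semitones between them with a base-2 logarithm. The semitones_between() function is a hypothetical helper that isn’t part of the Pitch class:

```python
import math


def semitones_between(from_frequency: float, to_frequency: float) -> float:
    """Return the signed distance between two frequencies in semitones."""
    return 12 * math.log2(to_frequency / from_frequency)


print(semitones_between(110.0, 440.0))  # Two octaves up
print(semitones_between(220.0, 110.0))  # One octave down
print(round(semitones_between(196.0, 220.0), 2))  # From G3 to A3
```

Because the input frequencies are often rounded, the result may come out slightly off a whole number, so you’d typically round it before using it as a fret number.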

Note: In all cases, you get a new instance of the Pitch class, which is immutable.

Being able to adjust the frequency is useful, but the Pitch class forces you to think in terms of pitches, semitones, and octaves, which isn’t the most convenient. You’ll wrap the pitch in a higher-level class inside a new module named instrument:

src/digitar/instrument.py

from dataclasses import dataclass

from digitar.pitch import Pitch

@dataclass(frozen=True)

class VibratingString:

pitch: Pitch

def press_fret(self, fret_number: int | None = None) -> Pitch:

if fret_number is None:

return self.pitch

return self.pitch.adjust(fret_number)

To simulate plucking an open string, pass None or leave the fret_number parameter out when calling your .press_fret() method. By doing so, you’ll return the string’s unaltered pitch. Alternatively, you can pass zero as the fret number.

And here’s how you can interact with your new class:

>>> from digitar.instrument import VibratingString

>>> from digitar.pitch import Pitch

>>> a2_string = VibratingString(Pitch(frequency=110))

>>> a2_string.pitch

Pitch(frequency=110)

>>> a2_string.press_fret(None)

Pitch(frequency=110)

>>> a2_string.press_fret(0)

Pitch(frequency=110.0)

>>> a2_string.press_fret(1)

Pitch(frequency=116.54094037952248)

>>> a2_string.press_fret(12)

Pitch(frequency=220.0)

You can now treat pitches and guitar strings independently, which lets you assign a different pitch to the same string if you want to. This mapping of pitches to open strings is known as guitar tuning in music. Tuning systems require you to understand a specific notation of musical notes, which you’ll learn about in the next section.

Read Musical Notes From Scientific Pitch Notation

In scientific pitch notation, every musical note appears as a letter followed by an optional symbol, such as a sharp (♯) or flat (♭) denoting accidentals, as well as an octave number. The sharp symbol raises the note’s pitch by a semitone, while the flat symbol lowers it by a semitone. If you omit the octave number, then zero is assumed implicitly.

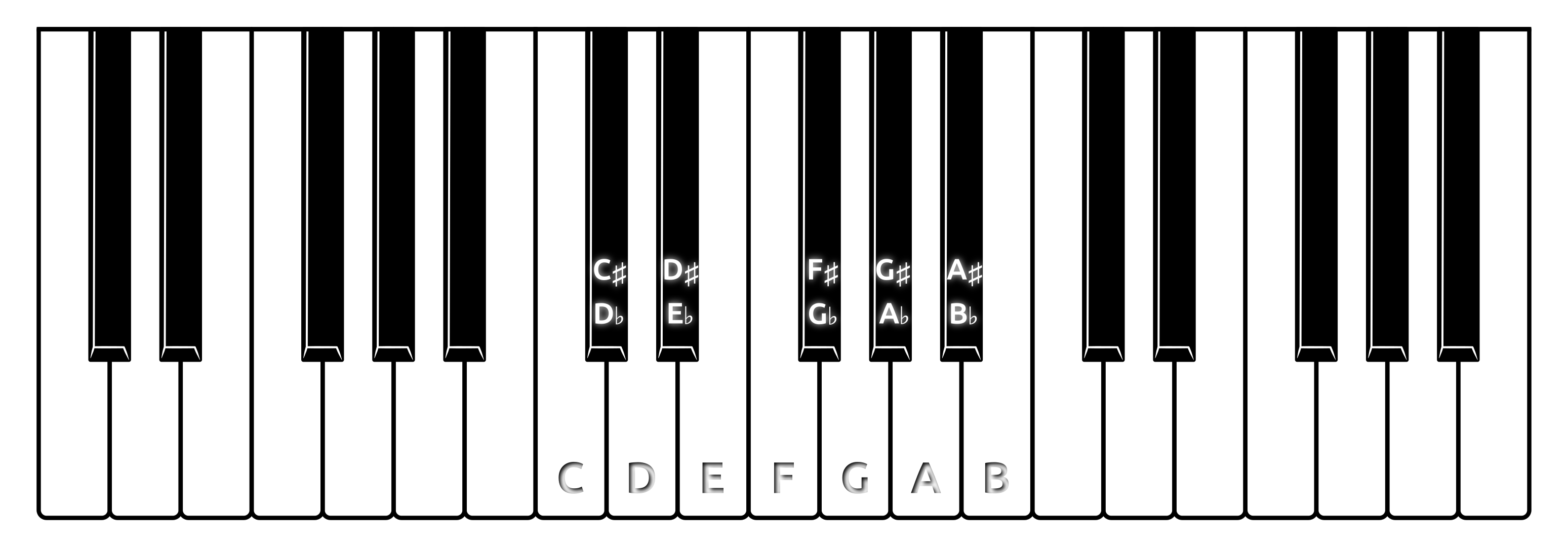

There are seven letters in this notation, with C marking the boundaries of each octave:

| Semitone | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sharp | | C♯0 | | D♯0 | | | F♯0 | | G♯0 | | A♯0 | | |
| Tone | C0 | | D0 | | E0 | F0 | | G0 | | A0 | | B0 | C1 |
| Flat | | D♭0 | | E♭0 | | | G♭0 | | A♭0 | | B♭0 | | |

In this case, you’re looking at the first octave comprising eight notes: C0, D0, E0, F0, G0, A0, B0, and C1. The system starts at C0 or just C, which is approximately 16.3516 Hz. When you go all the way up to C1 on the right, which also starts the next octave, you’ll double that frequency.

Note: While most letters are a whole tone apart, not all of them have sharp or flat equivalents. The letters E and F, as well as B and C, are already one half-tone apart, so there’s no separate E♯ or F♭, nor B♯ or C♭, between them. This is for historical reasons related to the design of the early piano keyboards.

When you overlay the notes in scientific pitch notation on top of a piano keyboard, you’ll notice that accidentals correspond to the five black keys. Conversely, the natural tones correspond to the seven white keys in each octave that repeat in both directions across the keyboard.

You can now decipher scientific pitch notation. For example, A4 indicates the musical note A in the fourth octave, with a frequency of 440 Hz, which is the concert pitch reference. Similarly, C♯4 represents the C-sharp note in the fourth octave, located one semitone above the middle C on a standard piano keyboard.

In Python, you can leverage regular expressions to programmatically translate this notation into numeric pitches. Add the following class method to the Pitch class in the pitch module:

src/digitar/pitch.py

import re

from dataclasses import dataclass

from typing import Self

from digitar.temporal import Hertz

@dataclass(frozen=True)

class Pitch:

frequency: Hertz

@classmethod

def from_scientific_notation(cls, notation: str) -> Self:

if match := re.fullmatch(r"([A-G]#?)(-?\d+)?", notation):

note = match.group(1)

octave = int(match.group(2) or 0)

semitones = "C C# D D# E F F# G G# A A# B".split()

index = octave * 12 + semitones.index(note) - 57

return cls(frequency=440.0 * 2 ** (index / 12))

else:

raise ValueError(

f"Invalid scientific pitch notation: {notation}"

)

def adjust(self, num_semitones: int) -> Self:

return Pitch(self.frequency * 2 ** (num_semitones / 12))

This method calculates the frequency for a given note based on its distance in semitones from A4. Note that this is a simplified implementation, which only takes sharp notes into account. If you need to represent a flat note, then you can rewrite it in terms of its equivalent sharp note, provided that it exists. For instance, B♭ is the same as A♯.
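If you want to accept flat notes as well, then one option is to translate them into their sharp equivalents before parsing. The sketch below is an assumption about how you might do that, not part of the tutorial’s Pitch class. Both to_sharp_notation() and frequency_of() are hypothetical standalone functions, and they accept either ♭ or a lowercase b as the flat symbol:

```python
import re

SEMITONES = "C C# D D# E F F# G G# A A# B".split()
FLAT_TO_SHARP = {"Db": "C#", "Eb": "D#", "Gb": "F#", "Ab": "G#", "Bb": "A#"}


def to_sharp_notation(notation: str) -> str:
    """Rewrite a flat note as its equivalent sharp, leaving other notes as is."""
    notation = notation.replace("♭", "b").replace("♯", "#")
    if match := re.fullmatch(r"([A-G]b)(-?\d+)?", notation):
        flat, octave = match.group(1), match.group(2) or ""
        if flat not in FLAT_TO_SHARP:
            # C♭ and F♭ have no sharp equivalent within the same octave.
            raise ValueError(f"Unsupported flat note: {notation}")
        return FLAT_TO_SHARP[flat] + octave
    return notation


def frequency_of(notation: str) -> float:
    """Return the frequency in hertz, measured in semitones from A4."""
    match = re.fullmatch(r"([A-G]#?)(-?\d+)?", to_sharp_notation(notation))
    if match is None or match.group(1) not in SEMITONES:
        raise ValueError(f"Invalid scientific pitch notation: {notation}")
    octave = int(match.group(2) or 0)
    index = octave * 12 + SEMITONES.index(match.group(1)) - 57
    return 440.0 * 2 ** (index / 12)


print(to_sharp_notation("B♭3"))
print(frequency_of("Bb4") == frequency_of("A#4"))
```

Flats that would cross an octave boundary or land on a natural note, such as C♭ and F♭, are rejected here to keep the mapping simple.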

Here’s the sample usage of your new class method:

>>> from digitar.pitch import Pitch

>>> for note in "C", "C0", "A#", "C#4", "A4":

... print(f"{note:>3}", Pitch.from_scientific_notation(note))

...

C Pitch(frequency=16.351597831287414)

C0 Pitch(frequency=16.351597831287414)

A# Pitch(frequency=29.13523509488062)

C#4 Pitch(frequency=277.1826309768721)

A4 Pitch(frequency=440.0)

As you can see, the code accepts and interprets a few variants of scientific pitch notation. That’s perfect! You’re now ready to tune up your digital guitar.

Perform String Tuning of the Virtual Guitar

In the real world, musicians adjust the tension of guitar strings by tightening or loosening the respective tuning pegs to achieve a perfectly tuned sound. Doing so allows them to assign different sets of musical notes or pitches to their instrument’s strings. They’ll occasionally reuse the same pitch for two or more strings to create a fuller sound.

Depending on the number of strings in a guitar, you’ll assign the musical notes differently. Apart from the standard tuning, which is the most typical choice of notes for a given instrument, you can apply several alternative guitar tunings, even when you have the same number of strings at your disposal.

The traditional six-string guitar tuning, from the thinnest string (highest pitch) to the thickest one (lowest pitch), is the following:

| String | Note | Frequency |
|---|---|---|
| 1st | E4 | 329.63 Hz |
| 2nd | B3 | 246.94 Hz |
| 3rd | G3 | 196.00 Hz |
| 4th | D3 | 146.83 Hz |
| 5th | A2 | 110.00 Hz |
| 6th | E2 | 82.41 Hz |

If you’re right-handed, you’d typically use your right hand to strum or pluck the strings near the sound hole while your left hand frets the notes on the neck. In this orientation, the first string (E4) is closest to the bottom, while the sixth string (E2) is closest to the top.

It’s customary to denote guitar tunings in ascending frequency order. For example, the standard guitar tuning is usually presented as: E2-A2-D3-G3-B3-E4. At the same time, some guitar tabs follow the string numbering depicted in the table above, which reverses this order. Therefore, the top line in a six-string guitar tablature will usually represent the first string (E4) and the bottom line the sixth string (E2).

To avoid confusion, you’ll respect both conventions. Add the following class to your instrument module so that you can represent a string tuning:

src/digitar/instrument.py

from dataclasses import dataclass

from typing import Self

from digitar.pitch import Pitch

# ...

@dataclass(frozen=True)

class StringTuning:

strings: tuple[VibratingString, ...]

@classmethod

def from_notes(cls, *notes: str) -> Self:

return cls(

tuple(

VibratingString(Pitch.from_scientific_notation(note))

for note in reversed(notes)

)

)

An object of this class contains a tuple of VibratingString instances sorted by the string number in ascending order. In other words, the first element in the tuple corresponds to the first string (E4) and the last element to the sixth string (E2). Note that the number of strings can be less than or greater than six should you need to represent other types of stringed instruments, such as a banjo, which has just five strings.

In practice, you’ll create new instances of the StringTuning class by calling the .from_notes() class method and passing a variable number of musical notes in scientific pitch notation. When you do, you must follow the string tuning order, starting with the lowest pitch. This is because the method reverses the input notes to match the typical string arrangement on a guitar tab.

Here’s how you might use the StringTuning class to represent various tuning systems for different plucked string instruments:

>>> from digitar.instrument import StringTuning

>>> StringTuning.from_notes("E2", "A2", "D3", "G3", "B3", "E4")

StringTuning(

strings=(

VibratingString(pitch=Pitch(frequency=329.6275569128699)),

VibratingString(pitch=Pitch(frequency=246.94165062806206)),

VibratingString(pitch=Pitch(frequency=195.99771799087463)),

VibratingString(pitch=Pitch(frequency=146.8323839587038)),

VibratingString(pitch=Pitch(frequency=110.0)),

VibratingString(pitch=Pitch(frequency=82.4068892282175)),

)

)

>>> StringTuning.from_notes("E1", "A1", "D2", "G2")

StringTuning(

strings=(

VibratingString(pitch=Pitch(frequency=97.99885899543733)),

VibratingString(pitch=Pitch(frequency=73.41619197935188)),

VibratingString(pitch=Pitch(frequency=55.0)),

VibratingString(pitch=Pitch(frequency=41.20344461410875)),

)

)

The first object represents the standard six-string guitar tuning, while the second one represents the four-string bass guitar tuning. You can use the same approach to model the tuning of other stringed instruments by providing the appropriate notes for each string.

With this, you can achieve the effect of fretting the guitar with your fingers to play a particular chord:

>>> tuning = StringTuning.from_notes("E2", "A2", "D3", "G3", "B3", "E4")

>>> frets = (None, None, 2, None, 0, None)

>>> for string, fret_number in zip(tuning.strings, frets):

... if fret_number is not None:

... string.press_fret(fret_number)

...

Pitch(frequency=220.0)

Pitch(frequency=110.0)

In this case, you use the standard guitar tuning. Then, you simulate pressing the second fret on the third string (G3) and leaving the fifth string (A2) open while strumming both of them. You don’t stroke or fret the remaining strings, as indicated by None in the tuple. The zip() function combines strings and the corresponding fret numbers into pairs that you iterate over.

Note: The number of fret values must match the number of guitar strings. Otherwise, zip() silently stops at the shorter of the two sequences, and you end up with an inaccurate representation of the chord being played.

The third string is tuned to note G3 or 196 Hz. But, since you press it on the second fret, you increase its pitch by two semitones, resulting in a frequency of 220 Hz. The fifth string is tuned to A2 or 110 Hz, which you play open or without fretting. When you mix both frequencies, you’ll produce a chord consisting of notes A3 and A2, which are one octave apart.

Note: Such a combination of musical notes creates a perfect octave interval, which is harmonically consonant and pleasing to the ear.

Next up, you’ll create a custom data type to represent musical chords more conveniently.

Represent Chords on a Fretted Instrument

Previously, you defined a plain tuple to express the fret numbers in a particular chord. You can be a tad bit more explicit by extending the tuple class and restricting the types of values that are allowed in it:

src/digitar/chord.py

from typing import Self

class Chord(tuple[int | None, ...]):

@classmethod

def from_numbers(cls, *numbers: int | None) -> Self:

return cls(numbers)

With type hints, you declare that your tuple should only contain integers representing the fret numbers or empty values (None) indicating strings that you don’t play. You also provide a class method, .from_numbers(), allowing you to create a Chord instance by passing in the fret numbers directly. This method takes a variable number of arguments, each of which can be an integer or None.

Note: Python tuples are immutable, so you don’t need to take extra steps to follow your initial design goals.

Here’s how you might define a chord from the previous section of this tutorial using the Chord class:

>>> from digitar.chord import Chord

>>> Chord.from_numbers(None, None, 2, None, 0, None)

(None, None, 2, None, 0, None)

>>> Chord([None, None, 2, None, 0, None])

(None, None, 2, None, 0, None)

When you create a Chord instance using the class method, you pass the fret numbers as arguments. You can also instantiate the class by passing an iterable object of values, such as a list, to the constructor. However, it’s generally more explicit to use the .from_numbers() method.

To sum up, these are the most important points to remember:

- The value’s position in the tuple determines the string number, so the first element corresponds to the highest pitch.

- An empty value (None) means that you don’t pluck the string at all.

- Zero represents an open string, which you pluck without pressing any frets.

- Other integers correspond to fret numbers on a guitar neck that you press.

These are also finger patterns on guitar tabs that you’ll leverage later in the tutorial. Now, it’s time to define another custom data type with which you’ll represent different kinds of plucked string instruments in code.

Model Any Plucked String Instrument

The primary properties that influence how a plucked string instrument sounds are the number of strings, their tuning, and the material they’re made of. While not the only factor, the material affects how long a string sustains its vibration and how strongly its energy is damped.

You can conveniently express those attributes by defining a data class in your instrument module:

src/digitar/instrument.py

from dataclasses import dataclass
from typing import Self

from digitar.pitch import Pitch
from digitar.temporal import Time

# ...

@dataclass(frozen=True)
class PluckedStringInstrument:
    tuning: StringTuning
    vibration: Time
    damping: float = 0.5

    def __post_init__(self) -> None:
        if not (0 < self.damping <= 0.5):
            raise ValueError(
                "string damping must be in the range of (0, 0.5]"
            )

The string tuning determines how many strings an instrument has and what their fundamental frequencies of vibration are. For simplicity, all strings in an instrument will share the same vibration time and damping coefficient that defaults to one-half. If you’d like to override them individually on a string-by-string basis, then you’ll need to tweak the code yourself.

The .__post_init__() method verifies whether the damping is within the acceptable range of values.
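Here's a minimal sketch of how that validation behaves in practice. To keep it self-contained, it uses a hypothetical DampedString stand-in with only the damping field, plus an is_valid() helper that isn't part of the tutorial's code:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DampedString:  # Hypothetical stand-in for PluckedStringInstrument
    damping: float = 0.5

    def __post_init__(self) -> None:
        # Runs right after the generated __init__() assigns the fields
        if not (0 < self.damping <= 0.5):
            raise ValueError("string damping must be in the range of (0, 0.5]")

def is_valid(damping: float) -> bool:
    """Report whether an instance can be created with this damping."""
    try:
        DampedString(damping)
    except ValueError:
        return False
    return True

print(is_valid(0.498), is_valid(0.0), is_valid(0.75))  # True False False
```

Because the check runs during initialization, an instance with an invalid damping coefficient never comes into existence.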

You can define a convenient property in your class to quickly find out the number of strings in an instrument without reaching out for the tuning object:

src/digitar/instrument.py

from dataclasses import dataclass
from functools import cached_property
from typing import Self

from digitar.pitch import Pitch
from digitar.temporal import Time

# ...

@dataclass(frozen=True)
class PluckedStringInstrument:
    # ...

    @cached_property
    def num_strings(self) -> int:
        return len(self.tuning.strings)

It’s a cached property for more efficient access. After you access such a property for the first time, Python remembers the computed value, so subsequent accesses won’t recompute it since the value doesn’t change during an object’s lifetime.
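If you'd like to observe that behavior, then the following sketch counts how many times the property's body runs. The Tuning class and the calls list are hypothetical names introduced only for this demonstration:

```python
from dataclasses import dataclass
from functools import cached_property

calls = []  # Records every time the property body actually runs

@dataclass(frozen=True)
class Tuning:  # Hypothetical stand-in, not the tutorial's StringTuning
    notes: tuple[str, ...]

    @cached_property
    def num_strings(self) -> int:
        calls.append("computed")
        return len(self.notes)

tuning = Tuning(("E2", "A2", "D3", "G3", "B3", "E4"))
first = tuning.num_strings   # Runs the method body
second = tuning.num_strings  # Reads the stored value instead
print(first, second, len(calls))  # 6 6 1
```

The cached value is written straight into the instance's attribute dictionary, which is why this works even though the data class is frozen.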

Next, you may add methods that will take a Chord instance, which you built earlier, and turn it into a tuple of pitches that you can later use to synthesize a polyphonic sound:

src/digitar/instrument.py

from dataclasses import dataclass
from functools import cache, cached_property
from typing import Self

from digitar.chord import Chord
from digitar.pitch import Pitch
from digitar.temporal import Time

# ...

@dataclass(frozen=True)
class PluckedStringInstrument:
    # ...

    @cache
    def downstroke(self, chord: Chord) -> tuple[Pitch, ...]:
        return tuple(reversed(self.upstroke(chord)))

    @cache
    def upstroke(self, chord: Chord) -> tuple[Pitch, ...]:
        if len(chord) != self.num_strings:
            raise ValueError(
                "chord and instrument must have the same string count"
            )
        return tuple(
            string.press_fret(fret_number)
            for string, fret_number in zip(self.tuning.strings, chord)
            if fret_number is not None
        )

Since the order of fret numbers in a chord agrees with the order of guitar strings (bottom-up), stroking a chord simulates an upstroke. Your .upstroke() method uses a generator expression with a filtering condition, which looks almost identical to the loop you saw earlier when you performed the string tuning. The .downstroke() method delegates execution to .upstroke(), intercepts the resulting tuple of Pitch objects, and reverses it.
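To make the two stroke directions more tangible, here's a simplified sketch that works on note names instead of Pitch objects. The OPEN_STRINGS constant and both module-level functions are hypothetical stand-ins, and the sketch assumes that strings are listed from the highest pitch to the lowest:

```python
# Assumption: strings ordered from the highest pitch to the lowest
OPEN_STRINGS = ("E4", "B3", "G3", "D3", "A2", "E2")

def upstroke(chord):
    if len(chord) != len(OPEN_STRINGS):
        raise ValueError("chord and instrument must have the same string count")
    # Pair each string with its fret and skip the strings that aren't played
    return tuple(
        (string, fret)
        for string, fret in zip(OPEN_STRINGS, chord)
        if fret is not None
    )

def downstroke(chord):
    # Same strings as in the upstroke, only in the opposite order
    return tuple(reversed(upstroke(chord)))

chord = (None, None, 2, None, 0, None)
print(upstroke(chord))    # (('G3', 2), ('A2', 0))
print(downstroke(chord))  # (('A2', 0), ('G3', 2))
```

Only the played strings make it into the result, so the length of the returned tuple can be smaller than the number of strings.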

Because most chords repeat over and over again in similar patterns within a single song, you don’t want to calculate each one of them every time. Instead, you annotate both methods with the @cache decorator to avoid redundant computations. By storing the computed tuples, Python will return the cached result when the same inputs occur again.
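The sketch below illustrates how @cache behaves on a method of a frozen data class. The Transposer class is a hypothetical example rather than part of the tutorial's code. It relies on the fact that a frozen data class is hashable, so the instance itself can become a part of the cache key along with the tuple argument:

```python
from dataclasses import dataclass
from functools import cache

@dataclass(frozen=True)
class Transposer:  # Hypothetical class used only for this demonstration
    offset: int

    @cache
    def shift(self, frets: tuple[int, ...]) -> tuple[int, ...]:
        return tuple(fret + self.offset for fret in frets)

capo = Transposer(offset=2)
first = capo.shift((0, 2, 2))   # Computed and stored in the cache
second = capo.shift((0, 2, 2))  # Returned from the cache

print(first)  # (2, 4, 4)
print(first is second)  # True
print(Transposer.shift.cache_info().hits)  # 1
```

Keep in mind that the cache holds references to the instances and arguments that it has seen, which is a reasonable trade-off when you only create a handful of instruments.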

You can now model different types of plucked string instruments to reproduce their unique acoustic characteristics. Here are a few examples using the standard tuning of each instrument:

>>> from digitar.instrument import PluckedStringInstrument, StringTuning

>>> from digitar.temporal import Time

>>> acoustic_guitar = PluckedStringInstrument(

... tuning=StringTuning.from_notes("E2", "A2", "D3", "G3", "B3", "E4"),

... vibration=Time(seconds=10),

... damping=0.498,

... )

>>> bass_guitar = PluckedStringInstrument(

... tuning=StringTuning.from_notes("E1", "A1", "D2", "G2"),

... vibration=Time(seconds=10),

... damping=0.4965,

... )

>>> electric_guitar = PluckedStringInstrument(

... tuning=StringTuning.from_notes("E2", "A2", "D3", "G3", "B3", "E4"),

... vibration=Time(seconds=0.09),

... damping=0.475,

... )

>>> banjo = PluckedStringInstrument(

... tuning=StringTuning.from_notes("G4", "D3", "G3", "B3", "D4"),

... vibration=Time(seconds=2.5),

... damping=0.4965,

... )

>>> ukulele = PluckedStringInstrument(

... tuning=StringTuning.from_notes("A4", "E4", "C4", "G4"),

... vibration=Time(seconds=5.0),

... damping=0.498,

... )

Right now, they’re only abstract containers for logically related data. Before you can take full advantage of these virtual instruments and actually hear them, you must integrate them into your Karplus-Strong synthesizer, which you’ll do next.

Combine the Synthesizer With an Instrument

You want to parameterize your synthesizer with a plucked string instrument so that you can synthesize sounds that are characteristic of that particular instrument. Open the synthesis module in your Python project now and add an instrument field to the Synthesizer class:

src/digitar/synthesis.py

from dataclasses import dataclass
from itertools import cycle
from typing import Sequence

import numpy as np

from digitar.burst import BurstGenerator, WhiteNoise
from digitar.instrument import PluckedStringInstrument
from digitar.processing import normalize, remove_dc
from digitar.temporal import Hertz, Time

AUDIO_CD_SAMPLING_RATE = 44100

@dataclass(frozen=True)
class Synthesizer:
    instrument: PluckedStringInstrument
    burst_generator: BurstGenerator = WhiteNoise()
    sampling_rate: int = AUDIO_CD_SAMPLING_RATE

    # ...

By using the properties defined in the PluckedStringInstrument class, the synthesizer can generate sounds that mimic the timbre and expression of a plucked string instrument, such as an acoustic guitar or a banjo.

Now that you have an instrument in your synthesizer, you can leverage its tuned strings to play a chord with the given speed and direction:

src/digitar/synthesis.py

# ...

from digitar.burst import BurstGenerator, WhiteNoise
from digitar.chord import Chord
from digitar.instrument import PluckedStringInstrument
from digitar.processing import normalize, remove_dc
from digitar.stroke import Direction, Velocity
from digitar.temporal import Hertz, Time

AUDIO_CD_SAMPLING_RATE = 44100

@dataclass(frozen=True)
class Synthesizer:
    instrument: PluckedStringInstrument
    burst_generator: BurstGenerator = WhiteNoise()
    sampling_rate: int = AUDIO_CD_SAMPLING_RATE

    def strum_strings(
        self, chord: Chord, velocity: Velocity, vibration: Time | None = None
    ) -> np.ndarray:
        if vibration is None:
            vibration = self.instrument.vibration

        if velocity.direction is Direction.UP:
            stroke = self.instrument.upstroke
        else:
            stroke = self.instrument.downstroke

        sounds = tuple(
            self.vibrate(pitch.frequency, vibration, self.instrument.damping)
            for pitch in stroke(chord)
        )

        return self.overlay(sounds, velocity.delay)

    # ...

Your new .strum_strings() method expects a Chord and a Velocity instance at the minimum. You can optionally pass the vibration duration, but if you don’t, then the method falls back on the instrument’s default duration. Depending on the desired stroke direction, it synthesizes the pitches in ascending or descending string order. Finally, it overlays them with the required delay or arpeggiation.
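To build an intuition for what overlaying sounds with a delay means, consider the following rough sketch. It's not the synthesizer's actual .overlay() method. Instead, it assumes plain Python lists of samples in place of NumPy arrays and a delay expressed as a number of samples, and it skips any normalization:

```python
def overlay(sounds: list[list[float]], delay_samples: int) -> list[float]:
    """Mix sounds, starting each one delay_samples after the previous one."""
    if not sounds:
        return []
    # The mix must be long enough to hold the sound that ends last
    total = max(
        index * delay_samples + len(sound)
        for index, sound in enumerate(sounds)
    )
    mixed = [0.0] * total
    for index, sound in enumerate(sounds):
        offset = index * delay_samples
        for position, sample in enumerate(sound):
            mixed[offset + position] += sample
    return mixed

first_string = [1.0, 1.0, 1.0]
second_string = [0.5, 0.5, 0.5]
print(overlay([first_string, second_string], delay_samples=2))
# [1.0, 1.0, 1.5, 0.5, 0.5]
```

The order of the sounds decides which string you hear first, which is why the stroke direction matters when the delay is greater than zero.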

Note: You could introduce two separate methods, one for plucking an individual string and another one for strumming multiple strings at once. However, it’s more convenient to treat both cases uniformly, especially when you have to deal with hundreds of chords in a guitar tablature.

Because .strum_strings() has become the only part of the public interface of your class, you can signal that the other two methods, .vibrate() and .overlay(), are intended for internal use only. A common convention in Python to denote non-public methods is to prefix their names with a single underscore (_):

src/digitar/synthesis.py

# ...

@dataclass(frozen=True)
class Synthesizer:
    # ...

    def strum_strings(...) -> np.ndarray:
        # ...

        sounds = tuple(
            self._vibrate(pitch.frequency, vibration, self.instrument.damping)
            for pitch in stroke(chord)
        )

        return self._overlay(sounds, velocity.delay)

    def _vibrate(...) -> np.ndarray:
        # ...

    def _overlay(...) -> np.ndarray:
        # ...

It’s clear now that ._vibrate() and ._overlay() are implementation details that can change without notice, so you shouldn’t access them from an external scope.

Your synthesizer is almost complete, but it’s missing one crucial detail. If you were to synthesize a complete music piece, like the original Diablo soundtrack, then over ninety percent of the synthesis time would be spent on redundant computation. That’s because most songs consist of repeating patterns and motifs. It’s these repeated sequences of chords that create a recognizable rhythm.

To bring down the total synthesis time from minutes to seconds, you can incorporate caching of the intermediate results. Ideally, you’d want to decorate all methods in your Synthesizer class with the @cache decorator to compute them once for each unique list of arguments. However, caching requires that all method arguments are hashable.
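You can check the hashability requirement with a quick experiment. The sketch below uses a hypothetical peak() function and compares a tuple against a list, which serves here as a stand-in for any mutable, unhashable argument:

```python
from functools import cache

@cache
def peak(samples) -> float:
    """Return the largest absolute value among the samples."""
    return max(abs(sample) for sample in samples)

def can_cache(samples) -> bool:
    """Report whether peak() accepts the argument as a cache key."""
    try:
        peak(samples)
    except TypeError:  # Raised when the argument can't be hashed
        return False
    return True

print(can_cache((0.25, -0.5)))  # True
print(can_cache([0.25, -0.5]))  # False
```

The cached function needs to hash its arguments in order to look up a previously stored result, so an unhashable argument makes the call fail before the function body even runs.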

While you diligently used immutable objects, which also happen to be hashable, NumPy arrays aren’t. Therefore, you can’t cache the results of your ._overlay() method, which takes a sequence of arrays as an argument. Instead, you can cache the other two methods that only rely on immutable objects:

src/digitar/synthesis.py

from dataclasses import dataclass
from functools import cache
from itertools import cycle
from typing import Sequence

# ...

@dataclass(frozen=True)
class Synthesizer:
    # ...

    @cache
    def strum_strings(...) -> np.ndarray:
        # ...

    @cache
    def _vibrate(...) -> np.ndarray:
        # ...

    def _overlay(...) -> np.ndarray:
        # ...