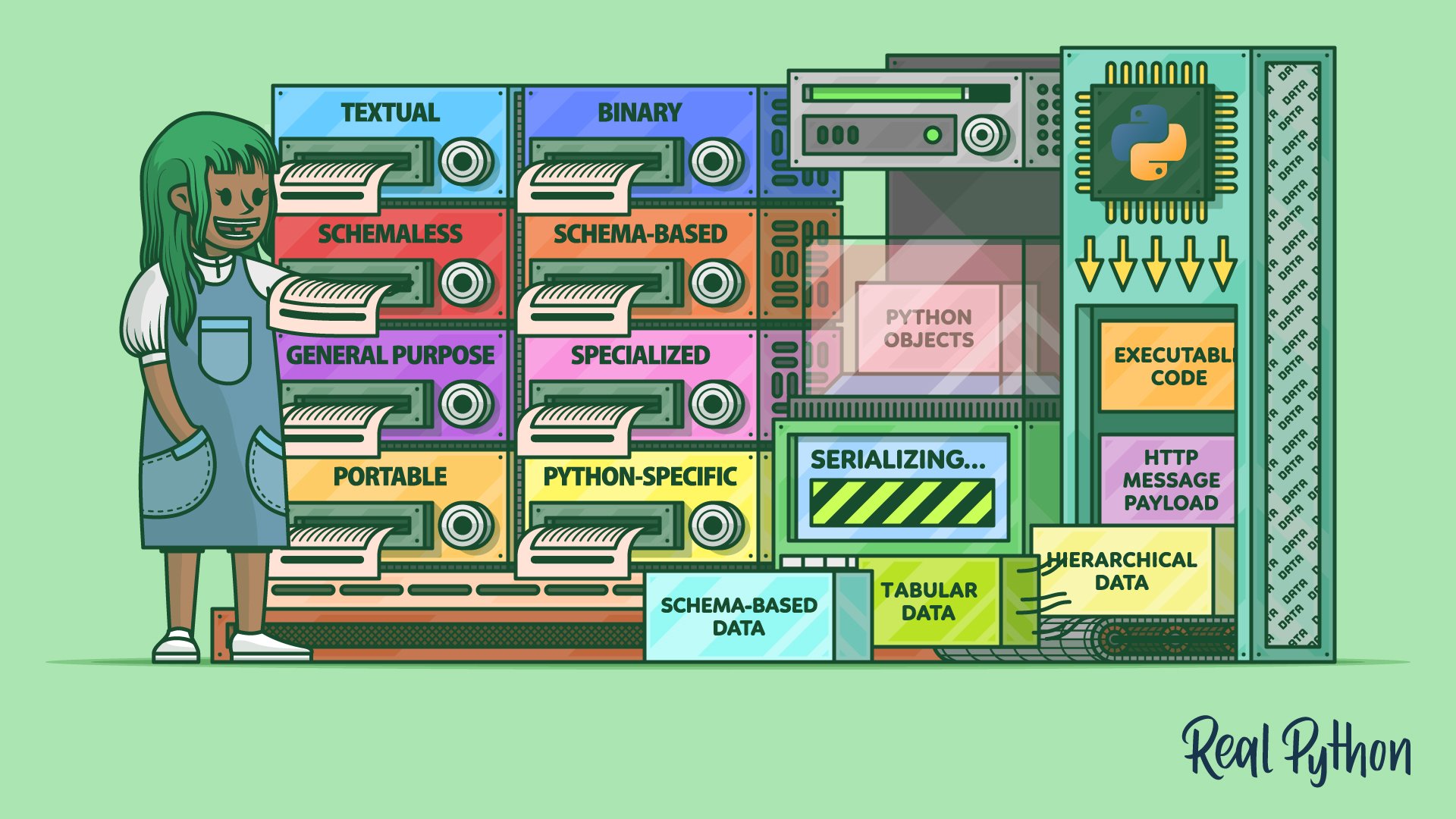

Whether you’re a data scientist crunching big data in a distributed cluster, a back-end engineer building scalable microservices, or a front-end developer consuming web APIs, you should understand data serialization. In this comprehensive guide, you’ll move beyond XML and JSON to explore several data formats that you can use to serialize data in Python. You’ll explore them based on their use cases, learning about their distinct categories.

By the end of this tutorial, you’ll have a deep understanding of the many data interchange formats available. You’ll master the ability to persist and transfer stateful objects, effectively making them immortal and transportable through time and space. Finally, you’ll learn to send executable code over the network, unlocking the potential of remote computation and distributed processing.

In this tutorial, you’ll learn how to:

- Choose a suitable data serialization format

- Take snapshots of stateful Python objects

- Send executable code over the wire for distributed processing

- Adopt popular data formats for HTTP message payloads

- Serialize hierarchical, tabular, and other shapes of data

- Employ schemas for validating and evolving the structure of data

To get the most out of this tutorial, you should have a good understanding of object-oriented programming principles, including classes and data classes, as well as type hinting in Python. Additionally, familiarity with the HTTP protocol and Python web frameworks would be a plus. This knowledge will make it easier for you to follow along with the tutorial.

You can download all the code samples accompanying this tutorial by clicking the link below:

Get Your Code: Click here to download the free sample code that shows you how to serialize your data with Python.

Feel free to skip ahead and focus on the part that interests you the most, or buckle up and get ready to catapult your data management skills to a whole new level!

Get an Overview of Data Serialization

Serialization, also known as marshaling, is the process of translating a piece of data into an interim representation that’s suitable for transmission through a network or persistent storage on a medium like an optical disk. Because the serialized form isn’t useful on its own, you’ll eventually want to restore the original data. The inverse operation, which can occur on a remote machine, is called deserialization or unmarshaling.

Note: Although the terms serialization and marshaling are often used interchangeably, they can have slightly different meanings for different people. In some circles, serialization is only concerned with the translation part, while marshaling is also about moving data from one place to another.

The precise meaning of each term depends on whom you ask. For example, Java programmers tend to use the word marshaling in the context of remote method invocation (RMI). In Python, marshaling refers almost exclusively to the format used for storing the compiled bytecode instructions.

Check out the comparison of serialization and marshaling on Wikipedia for more details.

The name serialization implies that your data, which may be structured as a dense graph of objects in the computer’s memory, becomes a linear sequence—or a series—of bytes. Such a linear representation is perfect to transmit or store. Raw bytes are universally understood by various programming languages, operating systems, and hardware architectures, making it possible to exchange data between otherwise incompatible systems.

When you visit an online store using your web browser, chances are it runs a piece of JavaScript code in the background to communicate with a back-end system. That back end might be implemented in Flask, Django, or FastAPI, which are Python web frameworks. Because JavaScript and Python are two different languages with distinct syntax and data types, they must share information using an interchange format that both sides can understand.

In other words, parties on opposite ends of a digital conversation may deserialize the same piece of information into wildly different internal representations due to their technical constraints and specifications. However, it would still be the same information from a semantic point of view.

Tools like Node.js make it possible to run JavaScript on the back end, including isomorphic JavaScript that can run on both the client and the server in an unmodified form. This eliminates language discrepancies altogether but doesn’t address more subtle nuances, such as big-endian vs little-endian differences in hardware.

Other than that, transporting data from one machine to another still requires converting it into a network-friendly format. Specifically, the format should allow the sender to partition and put the data into network packets, which the receiving machine can later correctly reassemble. Network protocols are fairly low-level, so they deal with streams of bytes rather than high-level data types.

Depending on your use case, you’ll want to pick a data serialization format that offers the best trade-off between its pros and cons. In the next section, you’ll learn about various categories of data formats used in serialization. If you already have prior knowledge about these formats and would like to explore their respective scenarios, then feel free to skip the basic introduction coming up next.

Compare Data Serialization Formats

There are many ways to classify data serialization formats. Some of these categories aren’t mutually exclusive, making certain formats fall under a few of them simultaneously. In this section, you’ll find an overview of the different categories, their trade-offs, and use cases, as well as examples of popular data serialization formats.

Later, you’ll get your hands on some practical applications of these data serialization formats under different programming scenarios. To follow along, download the sample code mentioned in the introduction and install the required dependencies from the included requirements.txt file into an active virtual environment by issuing the following command:

(venv) $ python -m pip install -r requirements.txt

This will install several third-party libraries, frameworks, and tools that will allow you to navigate through the remaining part of this tutorial smoothly.

Textual vs Binary

At the end of the day, all serialized data becomes a stream of bytes regardless of its original shape or form. But some byte values—or their specific arrangement—may correspond to Unicode code points with a meaningful and human-readable representation. Data serialization formats whose syntax consists purely of characters visible to the naked eye are called textual data formats, as opposed to binary data formats meant for machines to read.

The main benefit of a textual data format is that people like you can read serialized messages, make sense of them, and even edit them by hand when needed. In many cases, these data formats are self-explanatory, with descriptive element or attribute names. For example, take a look at this excerpt from the Real Python web feed with information about the latest tutorials and courses published:

<?xml version="1.0" encoding="utf-8"?>

<feed xmlns="http://www.w3.org/2005/Atom">

<title>Real Python</title>

<link href="https://realpython.com/atom.xml" rel="self"/>

<link href="https://realpython.com/"/>

<updated>2023-09-15T12:00:00+00:00</updated>

<id>https://realpython.com/</id>

<author>

<name>Real Python</name>

</author>

<entry>

<title>Bypassing the GIL for Parallel Processing in Python</title>

<id>https://realpython.com/python-parallel-processing/</id>

<link href="https://realpython.com/python-parallel-processing/"/>

<updated>2023-09-13T14:00:00+00:00</updated>

<summary>In this tutorial, you'll take a deep dive (...)</summary>

<content type="html">

<div><p>Unlocking Python's true potential (...)

</content>

</entry>

(...)

</feed>

The Real Python feed uses the XML-based Atom Syndication Format, which is a form of a data serialization format. You can open the document above in any text editor without needing specialized software or libraries. Furthermore, just by looking at this feed, you can immediately break down its structure and probably guess the meaning of the individual elements without checking the format’s specification.

Now, compare the text-based Atom feed above with an equivalent binary feed. You can generate one by parsing atom.xml with xmltodict and dumping the resulting dictionary to a file using MongoDB’s BSON format. When you do, the result will look something like this:

$ hexdump -C atom.bson | head

00000000 51 d2 01 00 03 66 65 65 64 00 46 d2 01 00 02 40 |Q....feed.F....@|

00000010 78 6d 6c 6e 73 00 1c 00 00 00 68 74 74 70 3a 2f |xmlns.....http:/|

00000020 2f 77 77 77 2e 77 33 2e 6f 72 67 2f 32 30 30 35 |/www.w3.org/2005|

00000030 2f 41 74 6f 6d 00 02 74 69 74 6c 65 00 0c 00 00 |/Atom..title....|

00000040 00 52 65 61 6c 20 50 79 74 68 6f 6e 00 04 6c 69 |.Real Python..li|

00000050 6e 6b 00 72 00 00 00 03 30 00 3f 00 00 00 02 40 |nk.r....0.?....@|

00000060 68 72 65 66 00 20 00 00 00 68 74 74 70 73 3a 2f |href. ...https:/|

00000070 2f 72 65 61 6c 70 79 74 68 6f 6e 2e 63 6f 6d 2f |/realpython.com/|

00000080 61 74 6f 6d 2e 78 6d 6c 00 02 40 72 65 6c 00 05 |atom.xml..@rel..|

00000090 00 00 00 73 65 6c 66 00 00 03 31 00 28 00 00 00 |...self...1.(...|

In this case, you use the command-line tool hexdump to view the contents of your binary file, but it’s rather difficult to understand. While there are bits of text here and there, much of the data looks like gibberish.

Another great advantage of a textual data format is that text has a uniform and mostly unambiguous interpretation. As long as you know the correct character encoding, which is almost always the ubiquitous UTF-8 these days, then you’ll be able to read your serialized messages everywhere on any hardware and system imaginable. No wonder so many popular serialization formats like XML, JSON, YAML, and CSV are all based on text.

Note: As a Python programmer, you might be accustomed to using TOML for your project’s metadata in the pyproject.toml file. Build systems like setuptools and Poetry know how to read this file to build your Python package before you can publish it on PyPI.

While there’s some resemblance to other text-based formats, TOML is different. It’s not a data serialization format, primarily due to its rigid syntax and limited data types. Instead, TOML advertises itself as a configuration file format based on INI files, intended to make editing the configuration easier for humans.

Unfortunately, there’s no rose without a thorn. Text-based data serialization formats are slower to process than their binary counterparts because text has to be translated to native data types. They also use the available space less efficiently, which can limit their usefulness in larger datasets. Therefore, textual formats aren’t as common in data science and other fields that have to deal with big data.

Moreover, textual formats tend to become overly verbose and may contain a lot of redundant boilerplate. Notice how each element in the Atom feed above, such as the author of the feed, is wrapped in at least one pair of opening and closing XML tags, adding to the bloat. You can try compressing the serialized data to mitigate that, but it’ll add some overhead without providing predictable message sizes.

If you’re able to describe your data using plain English, then a textual data format is all you need. But what if you need to mix both text and binary assets in one text message? It’s possible. After all, email uses a text-based protocol, yet it lets you include binary attachments like pictures or PDF documents.

Embedding binary data in a text-based format requires expressing arbitrary bytes using a limited number of characters. One such technique involves Base64 encoding, which turns bytes into ASCII characters. The downside is that it increases the size of the binary asset by about thirty percent. So, sharing your vacation photos with colleagues at work through email can quickly bring your department’s network down!

Note: A common work-around to this problem is to strip the message from binary assets, send them separately using a different communication channel, and only include their handles in the serialized text.

For example, some email providers implement this feature behind the scenes by uploading binary assets to the cloud while linking to them in your message. The same is true for many web APIs, whose responses include hyperlinks to files stored elsewhere instead of directly embedding them in the serialized message.

There’s also the issue of security risks associated with leaking sensitive information due to the ease of reading textual data formats. In contrast, making sense of a message serialized to a binary data format is more challenging but certainly not impossible. Therefore, serializing private information like passwords or credit card numbers always requires strong encryption regardless of the data format!

To sum up, here’s how textual and binary data formats stack up against each other:

| Textual | Binary | |

|---|---|---|

| Examples | CSV, JSON, XML, YAML | Avro, BSON, Parquet, Protocol Buffers |

| Readability | Human and machine-readable | Machine-readable |

| Processing Speed | Slow with bigger datasets | Fast |

| Size | Large due to wasteful verbosity and redundancy | Compact |

| Portability | High | May require extra care to ensure platform-independence |

| Structure | Fixed or evolving, often self-documenting | Usually fixed, which must be agreed on beforehand |

| Types of Data | Mostly text, less efficient when embedding binary data | Text or binary data |

| Privacy and Security | Exposes sensitive information | Makes it more difficult to extract information, but not completely immune |

With this table, you can understand the key differences between textual and binary data serialization formats before deciding which one fits your specific needs. Once you know the answer, the next question should be whether to use a schema or not.

Schemaless vs Schema-Based

Regardless of whether they’re textual or binary, many data serialization formats require a schema document, which is a formal description of the expected structure of serialized data. At the same time, some formats are schemaless, while others can work with or without a schema:

| Schemaless | Schema-Based | |

|---|---|---|

| Textual | JSON, XML, YAML | JSON+JSON Schema, XML+XML Schema (XSD), XML+Document Type Definition (DTD) |

| Binary | BSON, pickle | Avro, Protocol Buffers |

Depending on the format at hand, you can express the corresponding schema differently. For example, it’s common to provide an XML-based XSD schema for XML documents, while the binary Avro format relies on JSON for its schemas. Protocol Buffers use their own interface definition language (IDL), on the other hand.

The schema for the Atom feed that you saw earlier leverages a somewhat dated RELAX NG format, which stands for regular language for XML next generation. Unlike the more widespread XML Schema (XSD), it’s not based on XML itself:

# -*- rnc -*-

# RELAX NG Compact Syntax Grammar for the

# Atom Format Specification Version 11

namespace atom = "http://www.w3.org/2005/Atom"

namespace xhtml = "http://www.w3.org/1999/xhtml"

namespace s = "http://www.ascc.net/xml/schematron"

namespace local = ""

start = atomFeed | atomEntry

# Common attributes

atomCommonAttributes =

attribute xml:base { atomUri }?,

attribute xml:lang { atomLanguageTag }?,

undefinedAttribute*

# ...

A schema typically defines the allowed set of elements and attributes, their arrangement, relationships, and the associated constraints, such as whether an element is required or what range of values it can take. You can think of it as the vocabulary and grammar of a data serialization language.

This concept is analogous to a relational database schema, which specifies the tables, their column types, foreign keys, and so on. It’s a blueprint for re-creating the database from scratch, which can also act as a form of documentation. At runtime, the database schema governs your data’s referential integrity. Lastly, it helps facilitate object-relational mapping (ORM) in frameworks like Django.

Note: Within the realm of web services, two popular technologies that rely heavily on data serialization schemas are SOAP and WSDL. These days, however, modern alternatives like GraphQL, REST combined with JSON, and gRPC have mostly superseded them.

The benefits of using a schema in a data serialization format include:

- Automation: The formal specification of data allows you to generate code stubs in different programming languages to handle automatic serialization and deserialization of each language’s native data types. This is also known as data binding when you use the XML format.

- Consistency: The schema enforces a standard structure for serialized data, ensuring its integrity and consistency across different systems.

- Documentation: The schema provides a clear definition of the structure of the data, which can help you quickly understand how the information is organized.

- Efficiency: Referencing the schema instead of including explicit field names reduces the size of serialized data. The schema can be known ahead of time or be embedded in the serialized message.

- Interoperability: Sharing the schema between different applications or services can facilitate their integration by allowing them to communicate with each other.

- Validation: The schema can be used to validate serialized data in an automated way, catching potential errors early on.

While there are many benefits to employing a schema, it also comes with some drawbacks, which you should weigh before deciding whether or not to use one. The highest price to pay for adopting a schema is the limited flexibility of the serialized data. The schema enforces a rigid structure, which may not be desirable if your data evolves over time or is dynamic in the sense that it doesn’t have a fixed layout.

Moreover, it can be difficult to alter a schema once you commit to one. Even though some schema-based formats, such as Avro, allow for schema versioning, this may generally break the consistency of data that you previously serialized. To mitigate this problem, some tools in the relational database domain offer schema migration, which gradually transforms data from an old schema to a new one.

For rapid prototyping or when working with unstructured data with unpredictable layouts, a schemaless data serialization format may be more suitable. Conceptually, this is like having a NoSQL database that can accept and process data from multiple sources. New types of elements or unknown attributes would be ignored without breaking the system instead of failing the schema validation.

All in all, these are the most important pros and cons of schemaless and schema-based data serialization formats:

| Schemaless | Schema-Based | |

|---|---|---|

| Flexibility | High | Can’t handle unstructured data or easily modify its shape |

| Consistency | Data integrity can become a problem | High |

| Size | Large due to repetitive inclusion of metadata | Compact, especially when the schema is separate |

| Efficiency | Fast storage, slow lookup | Querying the data is fast thanks to its uniform structure |

| Simplicity | Straightforward to implement | Requires more effort and planning up front |

All right, you’ve successfully narrowed down your options regarding the numerous types of data serialization formats. You’ve determined whether choosing a binary format over a textual one is more suitable in your case. Additionally, you understand when to use a schema-based format. Nonetheless, there are still a few choices left on the table, so you must ask yourself some more questions.

General-Purpose vs Specialized

Some data serialization formats are expressive enough to represent arbitrary data, making them universal formats. For example, JSON has become the prevailing data serialization format, especially in web development and REST API design. This format has a minimalistic syntax with only a few essential building blocks that are straightforward to map onto numerous data types in the vast landscape of programming languages.

Note: In the past, the ubiquitous XML format played a similar role but lost its popularity due to the sudden rise of JSON. The biggest argument for using JSON was its cheap deserialization cost on the client side, thanks to being modeled after JavaScript literals. Moreover, people started using XML for purposes that it wasn’t designed for, which led to a growing dislike and a reputation for being notoriously problematic.

On the opposite end of the spectrum, you’ll find specialized formats that can only represent a particular type of data. For example, XML continues to be an excellent format for describing deeply nested hierarchical data like user interfaces. After all, XML belongs to the same family of markup languages as HTML, which is widely used to structure content on the Internet.

Another example of a specialized data serialization format is the comma-separated values (CSV) format. It works best with flat tabular data like spreadsheets, database tables, or DataFrames. While you might be able to serialize a data record by mapping its attributes to table columns, modeling hierarchical data with CSV is less convenient than with XML. But, as a textual format, CSV can reach its limits even when handling tabular data.

Note: When researching data formats, you may come across an alternative nomenclature. Some people refer to tabular data formats as flat, while they call hierarchical formats structured.

In data science, you often have to process enormous amounts of data. To optimize performance and reduce storage costs, it’s usually preferable to choose a binary data serialization format dedicated to such large datasets.

These days, Parquet and Feather are gaining popularity in the data science space. They’re both compatible with Arrow, which is an in-memory specification that allows data-sharing between different libraries and even different programming languages. A couple of older but still popular ones are HDF5 and NetCDF. Their newer counterpart, Zarr, offers better support for distributed data storage and computation.

Special data serialization formats emerged in other domains, as well. Some examples include the following:

- DICOM: A binary format for storing and transmitting medical images

- GeoJSON: A specialized flavor of JSON for serializing geographic features

- GPX: An XML-based format for exchanging GPS coordinates

- MusicXML: An XML-based format for storing musical notation

- OBJ: A textual format for storing three-dimensional models

Whether you can use a textual or binary format, with or without a schema, may actually depend on your use case. Some specialized data serialization formats give you little choice in that regard. But there’s one final question that you must ask yourself before choosing the right data serialization format for you. You’ll read about it in the next section.

Portable vs Python-Specific

Another criterion to consider when choosing a data serialization format for your use case is where you’re going to use it. If you wish to exchange information between foreign systems, then opt for a popular data serialization format that’s globally understood. For example, JSON and Protocol Buffers are widely adopted across different programming languages and platforms.

On the other hand, if you only intend to serialize and deserialize data within Python, then you may choose a Python-specific format for practicality reasons. It’ll be a more efficient and convenient option, provided that you’re not planning to share the serialized data with other systems.

Python ships with the following modules in the standard library, which provide binary data serialization formats for different purposes:

pickle: Python object serializationmarshal: Internal object serializationshelve: Python object persistencedbm: An interface to Unix databases

In practice, you’ll almost always want to serialize your objects with pickle, which is the standard data serialization format in Python. The rest on the list are either low-level formats used internally by the interpreter or legacy formats kept for compatibility. You’ll review them now to get a complete picture of the available options.

Python uses marshal behind the scenes to read and write special files containing the bytecode of the imported modules. When you import a module for the first time, the interpreter builds a corresponding .pyc file with compiled instructions to speed up subsequent imports. Here’s a short code snippet that roughly demonstrates what happens under the hood when you import a module:

>>> import marshal

>>> def import_pyc(path):

... with path.open(mode="rb") as file:

... _ = file.read(16) # Skip the file header

... code = marshal.loads(file.read())

... exec(code, globals())

...

>>> import sysconfig

>>> from pathlib import Path

>>> cache_dir = Path(sysconfig.get_path("stdlib")) / "__pycache__"

>>> module_path = cache_dir / "decimal.cpython-312.pyc"

>>> import_pyc(module_path)

>>> Decimal(3.14)

Decimal('3.140000000000000124344978758017532527446746826171875')

First, you locate the compiled .pyc file of the decimal module in Python’s standard library and then use marshal to execute its bytecode. As a result, you can access the Decimal class in the highlighted line without having to import it explicitly.

Note: The filename decimal.cpython-312.pyc contains your Python version and flavor. Depending on which Python interpreter you use, the corresponding file may be named slightly differently. Head over to the supporting materials to see how you can locate the correct .pyc file in your system.

The marshal module is fairly limited in functionality. It can only serialize a few primitive values like integers and strings, but not user-defined or built-in classes. The implementation details of the format are left intentionally undocumented to deter you from using it. The module authors don’t give any guarantees about its backward compatibility. Your marshaled data could become unreadable or incompatible with newer Python versions one day.

The official documentation makes it clear that you’re not supposed to use marshal in your code. Again, you should generally prefer pickle to transmit or persistently store Python objects. But sometimes, you want a simple key-value store for your objects, which might be the case for caching purposes. In such a case, you can take advantage of shelve, which combines pickle and a lower-level dbm module:

>>> import shelve

>>> with shelve.open("/tmp/cache.db") as shelf:

... shelf["last_updated"] = 1696846049.8469703

... shelf["user_sessions"] = {

... "jdoe@domain.com": {

... "user_id": 4185395169,

... "roles": {"admin", "editor"},

... "preferences": {

... "language": "en_US",

... "dark_theme": False

... }

... }

... }

...

The resulting shelf is a dictionary-like object or, more specifically, a hash table whose keys must be Python strings and whose values must be picklable objects. The module automatically calls .encode() on the keys to apply the default character encoding and uses pickle to convert the corresponding values to byte sequences.

Note: As a rule of thumb, only one program should have a shelf open for writing, because the module doesn’t support concurrent read and write access. However, simultaneous reads are thread-safe.

Because shelve is a convenience module that builds on top of pickle and dbm, you can use the latter to take a peek at what’s inside the created file:

>>> import dbm

>>> with dbm.open("/tmp/cache.db") as db:

... for key in db.keys():

... print(f"{key} = {db[key]}")

...

b'user_sessions' = b'\x80\x04\x95{\x00\x00\x00\x00\x00\x00\x00}…'

b'last_updated' = b'\x80\x04\x95\n\x00\x00\x00\x00\x00\x00\x00G…'

Both keys and values in the file are stored as raw bytes. Additionally, the values comply with the pickle protocol, which shelve uses internally to serialize Python objects.

Python’s dbm module is an interface to DBM databases popular on Unix. For instance, the manual pages on Unix-like operating systems use this format to index the individual documentation pages. Note that a DBM instance only supports a few basic operations of the regular Python dictionary, so it might not expose methods like .copy() or support the union operators and iteration.

If you have special needs that the standard pickle format can’t fulfill, then consider installing a third-party library like dill, which extends its capabilities while remaining compatible with pickle. It can handle more sophisticated data types, including lambda expressions and Python modules.

Fun Fact: The name dill is a play on the word pickle, continuing the theme of preserving and storing food items for later use. Dill is a common herb used in pickling, which adds a distinctive flavor to pickled cucumbers.

One key point to keep in mind when choosing a Python-specific serialization format, regardless of which one, is the security ramifications. Because serialized data may contain malicious code that could compromise or damage your system, you should only deserialize data from trusted sources! This applies to pickle and other formats that allow arbitrary code execution during deserialization.

As you can see, there’s no one-size-fits-all data serialization format, as each comes with its own pros and cons. While this section gave you a comprehensive overview of the various categories, it’s now time dive deeper into concrete formats to understand them better.

Serialize Python Objects

Over the following sections, you’ll practice serializing various types of Python objects that mainly carry data, using popular binary and textual formats. After dipping your toe into the pickle module and tweaking its inner workings, you’ll learn about its limitations. Finally, you’ll represent some of your objects using the widely adopted JSON format.

Pickle Your Python Objects

As you’ve learned, pickle is the standard data serialization format in Python, and it’s capable of representing a wide range of native data types as well as user-defined classes. It’s a binary format specific to Python, which requires no schema definition and can handle data in almost any shape or form. If you need to persist your Python objects for later use or send them to another Python interpreter, then this should be your preferred choice.

Note: The pickle module ships with the interpreter’s standard library, making the corresponding serialization format ubiquitous. As a result, you can call data serialization and deserialization in Python pickling and unpickling, respectively.

Getting started with pickle is fairly straightforward:

>>> import pickle

>>> data = 255

>>> with open("filename.pkl", mode="wb") as file:

... pickle.dump(data, file)

...

>>> pickle.dumps(data)

b'\x80\x04K\xff.'

First, you need to import the pickle module and then call either dump() or dumps() to turn an arbitrary Python object into a bytes instance. The first function expects a file-like object open in binary mode for writing, and the latter returns the sequence of bytes to the caller. Both functions allow you to choose the underlying protocol version, which might produce slightly different output:

>>> for protocol in range(pickle.HIGHEST_PROTOCOL + 1):

... print(f"v{protocol}:", pickle.dumps(data, protocol))

...

v0: b'I255\n.'

v1: b'K\xff.'

v2: b'\x80\x02K\xff.'

v3: b'\x80\x03K\xff.'

v4: b'\x80\x04K\xff.'

v5: b'\x80\x05K\xff.'

>>> pickle.DEFAULT_PROTOCOL

4

At the time of writing, protocol version 5 was the highest, and version 4 was the default. In general, higher versions are more efficient but may not work in older Python releases. Therefore, it’s usually good practice to stick with the relatively conservative defaults if you want your code to remain portable across Python versions. You can always try compressing the resulting byte stream to reduce its size.

Note: If you’d like to analyze the pickled byte sequence to get more information, then you can disassemble it into opcodes using the pickletools module, which also comes with Python.

Deserializing pickled data boils down to calling the module’s load() or loads(), which are counterparts of the two functions mentioned earlier:

>>> with open("filename.pkl", mode="rb") as file:

... pickle.load(file)

...

255

>>> pickle.loads(b"\x80\x04K\xff.")

255

When unpickling data, you don’t get the option to specify the protocol version because it gets baked into the pickled byte sequence during serialization. This ensures that you can correctly unpickle your data using the right protocol.

Note: While pickling data always results in an immutable bytes object, you can unpickle any byte-like object, including an array.array, bytearray, bytes, or memoryview:

>>> import array

>>> import pickle

>>> pickle.loads(array.array("B", [128, 4, 75, 255, 46]))

255

In this case, you deserialize pickled data that was stored in a binary array of unsigned bytes.

The pickle module can deal with most data types that you’ll ever work with. Moreover, it can handle objects with reference cycles, such as graphs, including recursive data structures and even deeply nested ones up to the recursion limit in Python:

>>> cycle = {}

>>> cycle["sibling"] = {"sibling": cycle}

>>> pickle.loads(pickle.dumps(cycle))

{'sibling': {'sibling': {...}}}

>>> recursive = []

>>> recursive.append(recursive)

>>> pickle.loads(pickle.dumps(recursive))

[[...]]

>>> import sys

>>> deeply_nested = []

>>> for _ in range(sys.getrecursionlimit() // 2):

... deeply_nested = [deeply_nested]

...

>>> pickle.loads(pickle.dumps(deeply_nested))

[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[...]]]]]]]]]]]]]]]]]]]]]]]]]]]]]]]]]]]]

The variable cycle in the code snippet above is a dictionary whose only key-value pair holds another dictionary, which in turn contains a reference back to the original dictionary, forming a cycle. The recursive list above has only one element, which is a reference to itself. Finally, the deeply_nested list uses half of Python’s maximum recursion limit to wrap lists inside one another like a Matryoshka doll.

However, there are a few notable exceptions that will cause an error when you try to pickle an object. In particular, instances of the following data types aren’t picklable by design:

- Very deeply nested data structures approaching Python’s recursion limit

- Lambda expressions

- Generator objects

- Python modules

- File objects

- Network sockets

- Database connections

- Threads

- Stack frames

For example, these are the kinds of errors that you’ll get when you try to pickle a lambda expression, a Python module, and a very deeply nested list:

>>> pickle.dumps(lambda: None)

Traceback (most recent call last):

...

_pickle.PicklingError: Can't pickle <function <lambda> at 0x7fdd14107920>:

⮑ attribute lookup <lambda> on __main__ failed

>>> pickle.dumps(pickle)

Traceback (most recent call last):

...

TypeError: cannot pickle 'module' object

>>> very_deeply_nested = []

>>> for _ in range(sys.getrecursionlimit()):

... very_deeply_nested = [very_deeply_nested]

...

>>> pickle.dumps(very_deeply_nested)

Traceback (most recent call last):

...

RecursionError: maximum recursion depth exceeded while pickling an object

This limitation particularly affects inter-process communication (IPC) in Python, which uses pickle to serialize and deserialize data exchanged between the running interpreter processes:

from concurrent.futures import ProcessPoolExecutor

def worker(function, parameter):

return function(parameter)

if __name__ == "__main__":

with ProcessPoolExecutor() as pool:

future = pool.submit(worker, lambda x: x**2, 10)

future.result()

This code will result in a pickling error because of the attempt to send a lambda expression to another system process. When you use a process pool from concurrent.futures or the equivalent pool from the multiprocessing module, then you won’t be able to share certain types of data between your workers running in parallel.

Note: There’s a third-party fork of multiprocessing called multiprocess, which uses dill instead of pickle under the hood, giving you much more flexibility. You’ll learn more about dill throughout this tutorial.

Similarly, copying objects in Python relies on the pickle module, which suffers from the same deficiencies:

>>> def generator():

... yield

...

>>> import copy

>>> copy.copy(generator())

Traceback (most recent call last):

...

TypeError: cannot pickle 'generator' object

If that’s too prohibitive for your needs, then consider using the third-party dill package, which acts as a drop-in replacement for pickle. It has the same interface as pickle and adheres to its underlying protocol while extending it to some extent:

>>> import pickle

>>> pickle.dumps(42)

b'\x80\x04K*.'

>>> import dill

>>> dill.dumps(42)

b'\x80\x04K*.'

>>> dill.loads(dill.dumps(lambda: None))

<function <lambda> at 0x7f78e793e660>

>>> dill.dumps(generator())

Traceback (most recent call last):

...

TypeError: cannot pickle 'generator' object

For basic data types, dill behaves similarly to pickle but lets you serialize some of the more exotic types, such as lambda expressions. At the same time, it can’t serialize everything—including generator objects.

Note: You can use the following idiom in your code to seamlessly fall back on pickle when the third-party dill isn’t available:

try:

import dill as pickle

except ModuleNotFoundError:

import pickle

Because both modules have a similar interface, you can use them interchangeably if you don’t need the extra features that dill provides.

Anyway, as long as you stay within most of the native data types in Python, classes from the standard library, or even classes of your own, then pickle is all you need. However, in some cases, you’ll want more control over the serialization and deserialization processes.

Customize the Pickling Process

When you serialize a custom object, Python uses type introspection to discover the underlying class attributes and instance attributes. Whether these attributes are stored in .__dict__ or .__slots__, the pickle module can correctly recognize them. Their names and the corresponding values will be encoded on the target byte stream along with the fully qualified name of your class, which includes the module name where you defined the class.

Note: Pickling a class instance serializes the data—that is, the names and values of its attributes. It doesn’t serialize any Python code that your class methods encapsulate. In other words, you need the class definition on the other end to unpickle the serialized object. What’s more, you must define your class at the top level of an importable module, as opposed to inside a function.

Deserializing a pickled class instance resembles cloning an object, as it bypasses the initializer method completely. This may not be ideal when your initializer method contains important setup code for the object.

Other situations that may require customizing the pickling or unpickling process include:

- Adding metadata: During pickling, you can introduce extra attributes that aren’t part of your object to augment the serialized byte stream with additional information, like timestamps.

- Hiding sensitive information: To avoid serializing passwords and other secrets, you can exclude one or more attributes from pickling.

- Unpickling stateful objects: If your object has a non-picklable state, such as a database connection or an open file that can’t be directly serialized, then you might need to include some extra logic to handle the state during pickling and unpickling.

- Providing default values: When the structure of your class evolves, it may gain new attributes that old instances lack. In such cases, you can provide default values for missing attributes when unpickling old objects.

- Reducing pickled size: During unpickling, you can recalculate some attributes instead of persisting them on the byte stream if they take a lot of space in serialized form.

To hook into the pickling process, you can specify these two special methods in your class:

Python will call the first one before pickling an instance of your class, expecting the method to return a dictionary of attribute names and their values. Conversely, during unpickling, Python will call the second method and pass a dictionary of attributes to let you properly initialize your object after its state has been deserialized.

Say that you have the following data class representing a user in your system, which has the .name and .password attributes:

customize-pickle/models.py

1import time

2from dataclasses import dataclass

3

4@dataclass

5class User:

6 name: str

7 password: str

8

9 def __getstate__(self):

10 state = self.__dict__.copy()

11 state["timestamp"] = int(time.time())

12 del state["password"]

13 return state

14

15 def __setstate__(self, state):

16 self.__dict__.update(state)

17 with open("/dev/random", mode="rb") as file:

18 self.password = file.read(8).decode("ascii", errors="ignore")

To control the pickling and unpickling of your instances, you defined the two special methods above. Here’s how they work line by line:

- Line 10 makes a copy of the object’s internal dictionary of attributes comprising the user’s name and password.

- Line 11 injects an extra attribute named

timestampwith the current Unix timestamp into the copied dictionary. - Line 12 removes the key-value pair corresponding to the user’s password from the new dictionary.

- Line 13 returns the new dictionary with the modified state of your object to the

picklemodule. - Line 16 populates the object’s internal dictionary using the deserialized state provided by the

picklemodule. - Lines 17 and 18 set a new password based on random bytes loaded from the

/dev/randomfile on a Unix-like operating system.

As you can see, pickle leaves the door open to overriding its default behavior, giving you plenty of flexibility. If you need even more freedom, then consider defining a custom Pickler object to serialize references of objects persisted externally—for example, in a database. You can also create a private dispatch table to decide when to use a custom serialization code and when to fall back to the default one.

With the protocol version 5 proposed and described in PEP 574, you can now efficiently pickle out-of-bound data. This can help serialize very large datasets, such as NumPy arrays or pandas DataFrames, meant for transferring between processes or distributed machines rather than persisting on disk. By leveraging buffers managed outside the usual pickling process, data engineers can avoid unnecessary copies and significantly reduce memory use.

So far, you’ve seen examples of serializing Python objects to a byte stream using the standard pickle module. It’s the most versatile, efficient, and battle-proven method for object serialization in Python. But it won’t always be the best choice due to its lack of cross-language compatibility and potential security risks, which you’ll learn how to mitigate later.

And now, it’s time to look into serializing Python objects using the text-based JSON format, which enjoys widespread use beyond the Python ecosystem.

Encode Objects Using JSON

Unlike the binary protocols that the pickle module uses, JSON is a textual serialization format readable by humans. Thanks to its huge popularity and simplicity, it’s become a universally accepted standard for data exchange across various programming languages and platforms.

Note: Because JSON is just text, deserializing it from an untrusted source doesn’t pose the risk of arbitrary code execution. However, it’s possible for an attacker to craft a malicious JSON string that will bring your computer down by exhausting the available CPU and memory resources. The idea behind it is similar to the infamous XML bomb attack.

Unfortunately, you can only represent a few Python data types with pure JSON, whereas pickle is able to handle nearly all Python data types out of the box, including more advanced cases like user-defined classes. That said, you’ll learn how to customize the serialization of various types with JSON in this section.

The interface of the json module looks similar to pickle and other serialization-related modules like PyYAML. You get the familiar dump() and dumps() methods, along with their load() and loads() counterparts.

Note: One notable difference between json and pickle is that you can only dump your data to a JSON file once without corrupting the file. With pickle, on the other hand, you may repeatedly dump multiple objects one after the other and then retrieve them in the same order later.

In the following example, you dump a Python object to a JSON string:

>>> import json

>>> data = {

... "email": None,

... "name": "John Doe",

... "age": 42.5,

... "married": True,

... "children": ["Alice", "Bob"],

... }

>>> print(json.dumps(data, indent=4, sort_keys=True))

{

"age": 42.5,

"children": [

"Alice",

"Bob"

],

"email": null,

"married": true,

"name": "John Doe"

}

In this case, you serialize a Python dictionary whose keys are strings mapped to a few different data types. What you get as a result is a Python string formatted according to the grammar rules of JSON. Notice that you can optionally request to pretty-print the output and sort the keys alphabetically to improve the readability of larger objects.

The JSON format supports only six native data types:

- Array:

[1, 2, 3] - Boolean:

true,false - Null:

null - Number:

42,3.14 - Object:

{"key1": "value", "key2": 42} - String:

"Hello, World!"

In some cases, the json module will be able to convert your Python values to their closest JSON equivalents. At other times, it’ll fail with the following error:

>>> json.dumps({"Saturday", "Sunday"})

Traceback (most recent call last):

...

TypeError: Object of type set is not JSON serializable

The error message above tells you that a Python set isn’t serializable using the JSON format. However, you can teach the json module how to deal with such non-standard data types or even custom classes.

There are two ways to do so. You can provide callback functions to the dumping and loading functions, or you can extend the encoder and decoder classes from the json module.

To serialize a Python set as a JSON string using the first approach, you could define the following callback function and pass its reference to json.dump() or json.dumps() through the default parameter:

>>> def serialize_custom(value):

... if isinstance(value, set):

... return {

... "type": "set",

... "elements": list(value)

... }

...

>>> data = {"weekend_days": {"Saturday", "Sunday"}}

>>> json.dumps(data, default=serialize_custom)

'{"weekend_days": {"type": "set", "elements": ["Sunday", "Saturday"]}}'

Python will now call serialize_custom() for every object that it can’t serialize as JSON by itself. It’ll pass down that object as an argument to your function, which should return one of the known data types. In this case, the function represents a Python set as a dictionary with elements encoded as a list and a type field denoting the intended Python data type.

If you’re happy with this new representation, then you’re essentially done. Otherwise, you can implement the corresponding deserialization callback to retrieve the original Python set from its current JSON representation:

>>> def deserialize_custom(value):

... match value:

... case {"type": "set", "elements": elements}:

... return set(elements)

... case _:

... return value

...

>>> json_string = """

... {

... "weekend_days": {

... "type": "set",

... "elements": ["Sunday", "Saturday"]

... }

... }

... """

>>> json.loads(json_string, object_hook=deserialize_custom)

{'weekend_days': {'Sunday', 'Saturday'}}

Here, you pass a reference to deserialize_custom() through the object_hook parameter of json.loads(). Your custom function takes a Python dictionary as an argument, letting you convert it into an object of your choice. Using structural pattern matching, you identify if the dictionary describes a set, and if so, return a Python set instance. Otherwise, you return the dictionary as is.

Note: Alternatively, you can specify another callback through the object_pairs_hook parameter, in which case you’ll get an ordered list of key-value tuples instead of a dictionary. This was preferable when you wanted to preserve the original order of key-value pairs before Python 3.7 made dictionaries maintain insertion order as a language feature.

In addition to these generic callbacks, you can specify a few specialized ones to override how to deserialize integer and floating-point number literals in JSON:

>>> json.loads("42", parse_int=float)

42.0

>>> from decimal import Decimal

>>> json.loads("3.14", parse_float=Decimal)

Decimal('3.14')

>>> json.loads("[NaN, Infinity, -Infinity]", parse_constant=str)

['NaN', 'Infinity', '-Infinity']

In the code snippet above, you convert all integers to Python floats and all floating-point number literals to decimal numbers. You also treat the special constants defined in the IEEE 754 standard, such as NaN, as regular strings.

If you prefer a more object-oriented approach to customizing the logic behind JSON serialization in Python, then extend the JSONEncoder and JSONDecoder classes. They’ll let you keep your reusable code in one place while giving you even more fine-grained control, including a streaming API. However, the general principles are the same as with the callback-based approach.

When you decide to use JSON over the binary pickle format, remember that you’ll have to take care of many corner cases manually. One such area is the handling of reference cycles, which pickle accounts for automatically:

>>> cycle = {}

>>> cycle["sibling"] = {"sibling": cycle}

>>> json.dumps(cycle)

Traceback (most recent call last):

...

ValueError: Circular reference detected

>>> json.dumps(cycle, check_circular=False)

Traceback (most recent call last):

...

RecursionError: maximum recursion depth exceeded while encoding a JSON object

When an object contains a cycle, the pure json module is unable to serialize it. You must implement some mechanism of tracking the objects that you’ve already serialized and reconstruct the original object graph in memory during deserialization.

This exhausts the list of data serialization formats supported natively by Python. Next, you’ll look into some third-party alternatives.

Use Formats Foreign to Python

Both pickle and JSON have their pros and cons. The first one can automatically serialize many types of Python objects out of the box but isn’t portable or human-readable. On the other hand, the text-based JSON is widely supported outside of Python but comes with only a few basic data types. Fortunately, you can combine the best of both worlds with the help of the jsonpickle library.

Note: Don’t confuse jsonpickle—a mature, well-documented, and maintained library—with a separate picklejson package.

Contrary to what the name suggests, jsonpickle doesn’t actually use pickle under the hood to serialize Python objects. That would defeat the purpose of creating a more portable and human-readable format. Instead, it uses a custom serialization format on top of JSON to allow you to serialize complex Python objects.

If you still have your User data class around, then this is how you can serialize it to a JSON-formatted string with jsonpickle:

>>> import jsonpickle

>>> from models import User

>>> user = User(name="John", password="*%!U8n9erx@GdqK(@J")

>>> user_json = jsonpickle.dumps(user, indent=4)

>>> print(user_json)

{

"py/object": "models.User",

"py/state": {

"name": "John",

"timestamp": 1699805990

}

}

>>> jsonpickle.loads(user_json)

User(name='John', password='\\\x06,\x19')

As you can see, the library respects the standard .__getstate__() and .__setstate__() methods that pickle uses. Moreover, it retains the type’s fully qualified name as a plain string in the resulting JSON under the "py/object" key. This means that the corresponding class must be importable when you try deserializing one of its instances.

That idea sounds remarkably similar to application-specific tags that some YAML parsers use to extend their capabilities. For example, this is what the same user object will look like when you serialize it using the PyYAML library:

>>> print(yaml.dump(user))

!!python/object:models.User

name: John

timestamp: 1699807790

The first line, which starts with a double exclamation mark (!!), uses a custom tag to let you store a User object. YAML is an excellent data serialization format because it supports a large number of data types that you’ll find in many programming languages. Even so, you can extend it with custom data types like the User class above for your specific needs.

Now that you know how to serialize Python objects representing data, you can tackle the task of serializing executable Python code.

Serialize Executable Code

Modern computers are designed with von Neumann architecture in mind, allowing low-level code and data to coexist in the same memory space. On the one hand, this played a role in the emergence of computer viruses, but on the other hand, it was a revolutionary and innovative idea that made compilers possible.

While the low-level instructions can only run on the specific type of hardware that they were compiled for, high-level languages like Python enjoy greater portability. Python code is interpreted, which means it can run on any device with the necessary interpreter installed. This makes it worthwhile to serialize Python code. But why would you want to do that in the first place?

Serializing executable code can be useful when you need to distribute chunks of work over a number of remote workers to achieve parallel processing. When you have a cluster of computers, you can let each one run a different function, for example.

Note: In theory, you can treat program instructions as ordinary data when, for example, you want to serialize them. However, accessing executable units of code inside a running program is only possible when the underlying programming language allows for that.

Fortunately, Python has first-class functions, which is a fancy way of saying that functions are objects that you can manipulate just like any other value. In particular, you can assign them to variables, pass them around, and even return them from other functions. You’ll see examples of this in action soon.

In the following sections, you’ll take a closer look at some of the challenges associated with the serialization of Python code.

Pickle Importable Symbols

In Python, modules, functions, and classes are the most fundamental building blocks of any program, encapsulating its logic. The pickle module can serialize functions and classes but with certain limitations.

If you followed along with the earlier section on customizing the pickling process, then you might recall that pickle only retains the names of your functions and classes. Later, it uses those names to look up the corresponding source code in your virtual environment.

Say you have a Python module with the following definitions, which you’d like to serialize using pickle:

pickle-importable/plus.py

def create_plus(x):

def plus(y):

return x + y

return plus

plus_one = create_plus(1)

plus_two = lambda x: x + 2

The create_plus() function is an example of a higher-order function, or more specifically, a factory function that returns a brand-new function closure created dynamically at runtime. The outer function takes a number, x, as input and returns an inner function, which takes another number, y, as an argument and adds that number to the first one.

The two variables at the bottom, plus_one and plus_two, represent callable objects. The first is a function closure returned by the factory function defined earlier, while the other one is a lambda expression.

Now, because Python modules aren’t picklable, you can’t serialize the entire module using pickle:

>>> import pickle

>>> import plus

>>> pickle.dumps(plus)

Traceback (most recent call last):

...

TypeError: cannot pickle 'module' object

By the same token, you can’t serialize inner functions and lambda expressions imported from your module, as well as code objects of regular functions. To get the code object associated with your function, you can access its .__code__ attribute, as shown at the bottom of the following code snippet:

>>> pickle.dumps(plus.plus_one)

Traceback (most recent call last):

...

AttributeError: Can't pickle local object 'create_plus.<locals>.plus'

>>> pickle.dumps(plus.plus_two)

Traceback (most recent call last):

...

_pickle.PicklingError: Can't pickle <function <lambda> at 0x7f6f32f2a480>:

⮑ attribute lookup <lambda> on plus failed

>>> pickle.dumps(plus.create_plus.__code__)

Traceback (most recent call last):

...

TypeError: cannot pickle code objects

The first error message tells you that you can’t pickle a local object, which is a function defined inside another function in this case. Python must be able to reference your object from the global namespace to serialize it. Similarly, a lambda is an anonymous function defined on the fly as an expression that doesn’t belong to the global scope.

You can only pickle top-level functions, such as create_plus(), that you defined with the def keyword in the global namespace of a module:

>>> pickle.dumps(plus.create_plus)

b'\x80\x04\x95\x18\x00\x00\x00\x00\x00\x00\x00\x8c

⮑ \x04plus\x94\x8c\x0bcreate_plus\x94\x93\x94.'

Notice that the resulting byte stream is fairly short and contains the fully qualified name of your function, including the enclosing module name, plus. You can now store this byte sequence on disk or transfer it to another Python interpreter over a network. However, if the receiving end has no access to the corresponding functions or class definitions, then you still won’t be able to unpickle the serialized code:

>>> # This runs on a remote computer without the "plus" module

>>> import pickle

>>> pickle.loads(

... b"\x80\x04\x95\x18\x00\x00\x00\x00\x00\x00\x00\x8c"

... b"\x04plus\x94\x8c\x0bcreate_plus\x94\x93\x94."

... )

Traceback (most recent call last):

...

ModuleNotFoundError: No module named 'plus'

As you can see, Python is able to deserialize your byte stream correctly, knowing which module to import, but it fails to find the missing plus module.

In contrast, when the enclosing module of your serialized function or class is on the import path, making the module importable, then you’ll be able to retrieve the original object from the byte stream:

>>> # This runs where the "plus" module is importable

>>> import pickle

>>> pickle.loads(

... b"\x80\x04\x95\x18\x00\x00\x00\x00\x00\x00\x00\x8c"

... b"\x04plus\x94\x8c\x0bcreate_plus\x94\x93\x94."

... )

<function create_plus at 0x7f89d84a2700>

Here, you get a reference to the create_plus() function, which Python imported for you behind the scenes. It’s as if you had imported it yourself.

The lack of a built-in mechanism for serializing Python source code is a bit disappointing. A quick and dirty way to work around this limitation of the pickle module is to share the code in literal form for the exec() function to execute later:

>>> import importlib

>>> import inspect

>>> def get_module_source(module_name):

... module = importlib.import_module(module_name)

... return inspect.getsource(module)

...

>>> source_code = get_module_source("plus")

>>> print(source_code)

def create_plus(x):

def plus(y):

return x + y

return plus

plus_one = create_plus(1)

plus_two = lambda x: x + 2

>>> exec(source_code)

>>> plus_two(3)

5

You define get_module_source() for dynamically importing a Python module by name with the help of importlib. Additionally, you rely on inspect to return the Python source code of the imported module as a string. Then, you use your new helper function to obtain the source code of the plus module that you defined earlier and execute the associated code with its function definitions. Finally, you call one of those functions without importing it.

This trick avoids serializing the code altogether, but it comes with its own set of problems. What if you only wanted to share a specific function or class without revealing the rest of your module? Perhaps executing the module causes unwanted side effects. Maybe you don’t have access to the high-level Python source code because it’s a compiled C extension module. Do you trust that the code will remain intact and secure when you execute it again?

Note: If you use dill instead of pickle, then you can conveniently narrow down the source code to extract from a module:

>>> import dill.source

>>> from plus import plus_two

>>> dill.source.getsource(plus_two)

'plus_two = lambda x: x + 2\n'

You only get the source code of the plus_two() callable, which you defined as a lambda expression. Nevertheless, dill offers a better way to serialize code, which you’ll explore now.

The next few sections will address these concerns and provide alternative solutions to serializing executable code in Python using the third-party dill library.

Serialize Python Modules

You’ve already heard of the third-party dill library a few times in this tutorial. One of its main advantages over the standard library’s pickle module is the ability to serialize Python source code without requiring an importable enclosing module. However, there’s a catch that you need to be aware of, which you’ll learn about soon.

First of all, you can call dump_module(), which is specific to dill, in order to retain and later restore the state of global variables defined in a module. Unless you specify otherwise, dill serializes the special __main__ module by default. This can be useful when you’re experimenting in the interactive Python REPL and would like to continue the session where you left off, potentially on another computer:

>>> from dataclasses import dataclass

>>> @dataclass

... class Person:

... first_name: str

... last_name: str

...

>>> jdoe = Person("John", "Doe")

>>> import dill

>>> dill.dump_module("session.pkl")

Here, you define a data class, create an instance of it, and assign the resulting object to a global variable. Then, you dump the current session to a binary file, which you can load in a new Python REPL session later:

>>> import dill

>>> dill.load_module("session.pkl")

>>> jdoe

Person(first_name='John', last_name='Doe')

>>> Person("Jack", "Ryan")

Person(first_name='Jack', last_name='Ryan')

After loading the serialized module, you continue working with the object that you created before and the class that you defined earlier as if you’d never left the original session.

Note: If your module depends on external modules, or if you dump a custom module other than the default __main__, then you must ensure that these modules are importable before loading the associated binary file. Otherwise, you’ll get the ModuleNotFoundError exception:

>>> import dill

>>> dill.load_module("plus.pkl")

Traceback (most recent call last):

...

ModuleNotFoundError: No module named 'plus'

In this case, you dumped your custom plus module, which Python can’t find anymore during an attempt to load its serialized state.

Okay. Dumping Python modules with dill allows you to share your functions and classes between Python interpreters, but what if you wanted to be more selective about what you serialize? What about a situation where your recipient doesn’t have access to your source code? In such cases, you can use dill to dump a specific code object associated with a function or class.

Serialize Code Objects

Somewhat surprisingly, when you try serializing a function imported from another module, such as your create_plus() factory function from the plus module, dill and pickle may sometimes produce an identical binary sequence:

>>> import pickle

>>> import dill

>>> import plus

>>> pickle.dumps(plus.create_plus)

b'\x80\x04\x95\x18\x00\x00\x00\x00\x00\x00\x00\x8c

⮑ \x04plus\x94\x8c\x0bcreate_plus\x94\x93\x94.'

>>> dill.dumps(plus.create_plus)

b'\x80\x04\x95\x18\x00\x00\x00\x00\x00\x00\x00\x8c

⮑ \x04plus\x94\x8c\x0bcreate_plus\x94\x93\x94.'

This seems to break the promise made by dill to be capable of serializing such code without needing the enclosing module.

However, if you dump your function from within that module, then you’ll get the expected result. To do so, you can run your module through the Python interpreter with the -i option, which lets you inspect the finished program’s state in an interactive Python shell:

$ python -i plus.py

>>> import dill

>>> dill.dumps(create_plus)

b'\x80\x04\x95U\x01\x00\x00\x00\x00\x00\x00\x8c

⮑ \ndill._dill\x94\x8c\x10_create_function\x94

⮑ \x93\x94(h\x00\x8c\x0c_create_code\x94\x93

⮑ \x94(C\x04\x04\x01\n\x03\x94K\x01K\x00K\x00K

⮑ \x02K\x02K\x03C\x12\x87\x00\x97\x00\x88\x00f

⮑ \x01d\x01\x84\x08}\x01|\x01S\x00\x94Nh\x04(C

⮑ \x02\x04\x01\x94K\x01K\x00K\x00K\x01K\x02K

⮑ \x13C\x0e\x95\x01\x97\x00\x89\x01|\x00z\x00

⮑ \x00\x00S\x00\x94N\x85\x94)\x8c\x01y\x94\x85

⮑ \x94\x8c\x0c/tmp/plus.py\x94\x8c\x04plus\x94

⮑ \x8c\x19create_plus.<locals>.plus\x94K\x02C

⮑ \x0c\xf8\x80\x00\xd8\x0f\x10\x901\x89u\x88

⮑ \x0c\x94C\x00\x94\x8c\x01x\x94\x85\x94)t\x94R

⮑ \x94\x86\x94)h\x11h\r\x86\x94h\x0c\x8c

⮑ \x0bcreate_plus\x94h\x17K\x01C\x0f\xf8\x80\x00

⮑ \xf4\x02\x01\x05\x15\xf0\x06\x00\x0c\x10\x80K

⮑ \x94h\x10)h\x11\x85\x94t\x94R\x94c__builtin__

⮑ \n__main__\nh\x17NNt\x94R\x94}\x94}\x94\x8c

⮑ \x0f__annotations__\x94}\x94s\x86\x94b.'

With this option, Python executes the code in your module, including the function definitions, while letting you stay in the interactive shell to inspect global variables and other symbols afterward.

Because create_plus() is now a part of the global namespace, dill can serialize the function properly. It finally outputs a much longer byte sequence, which includes the serialized code object that you can deserialize without having the plus module in your virtual environment:

>>> # This runs on a remote computer without the "plus" module

>>> import dill

>>> create_plus = dill.loads(

... b"\x80\x04\x95U\x01\x00\x00\x00\x00\x00\x00\x8c"

... b"\ndill._dill\x94\x8c\x10_create_function\x94"

... b"\x93\x94(h\x00\x8c\x0c_create_code\x94\x93"

... b"\x94(C\x04\x04\x01\n\x03\x94K\x01K\x00K\x00K"

... b"\x02K\x02K\x03C\x12\x87\x00\x97\x00\x88\x00f"

... b"\x01d\x01\x84\x08}\x01|\x01S\x00\x94Nh\x04(C"

... b"\x02\x04\x01\x94K\x01K\x00K\x00K\x01K\x02K"

... b"\x13C\x0e\x95\x01\x97\x00\x89\x01|\x00z\x00"

... b"\x00\x00S\x00\x94N\x85\x94)\x8c\x01y\x94\x85"

... b"\x94\x8c\x0c/tmp/plus.py\x94\x8c\x04plus\x94"

... b"\x8c\x19create_plus.<locals>.plus\x94K\x02C"

... b"\x0c\xf8\x80\x00\xd8\x0f\x10\x901\x89u\x88"

... b"\x0c\x94C\x00\x94\x8c\x01x\x94\x85\x94)t\x94R"

... b"\x94\x86\x94)h\x11h\r\x86\x94h\x0c\x8c"

... b"\x0bcreate_plus\x94h\x17K\x01C\x0f\xf8\x80\x00"

... b"\xf4\x02\x01\x05\x15\xf0\x06\x00\x0c\x10\x80K"

... b"\x94h\x10)h\x11\x85\x94t\x94R\x94c__builtin__"

... b"\n__main__\nh\x17NNt\x94R\x94}\x94}\x94\x8c"

... b"\x0f__annotations__\x94}\x94s\x86\x94b."

... )

>>> create_plus

<function create_plus at 0x7f0f88324220>

>>> plus_three = create_plus(3)

>>> plus_three

<function create_plus.<locals>.plus at 0x7f0f883242c0>

>>> plus_three(2)

5

When you pass the same byte sequence to dill.loads(), it returns a new function object that works exactly like your original create_plus() factory function. Even if the plus module with the corresponding Python source code can’t be found, the deserialized function still works as expected.

Note: If you’re curious about how dill reconstructs functions, lambda expressions, classes, and other kinds of code elements from their serialized form, then have a look at the types module in the Python standard library. It provides classes like FunctionType that let you dynamically create types based on compiled code objects augmented with bits of metadata. Thanks to this, dill can serialize local functions defined inside other functions.

Inspecting a module to serialize one of its functions or classes is a lot of hassle. Sure, you could always slap the call to dill.dumps() at the bottom of your module and call it a day:

pickle-importable/plus.py

import dill

def create_plus(x):

def plus(y):

return x + y

return plus

plus_one = create_plus(1)

plus_two = lambda x: x + 2

print(dill.dumps(create_plus))

This might work in some cases, but you generally prefer your code to be flexible so that you can decide later which function or class to serialize. Fortunately, you have another trick up your sleeve. You can manually substitute the special .__module__ attribute and instruct dill to recursively traverse the globals() dictionary:

>>> import dill

>>> import plus

>>> plus.create_plus.__module__

'plus'

>>> dill.dumps(plus.create_plus)

b'\x80\x04\x95\x18\x00\x00\x00\x00\x00\x00\x00\x8c

⮑ \x04plus\x94\x8c\x0bcreate_plus\x94\x93\x94.'

>>> plus.create_plus.__module__ = None

>>> dill.dumps(plus.create_plus, recurse=True)

b'\x80\x04\x95N\x01\x00\x00\x00\x00\x00\x00\x8c

⮑ \ndill._dill\x94\x8c\x10_create_function\x94

⮑ \x93\x94(h\x00\x8c\x0c_create_code\x94\x93\x94

⮑ (C\x04\x04\x01\n\x03\x94K\x01K\x00K\x00K\x02K

⮑ \x02K\x03C\x12\x87\x00\x97\x00\x88\x00f\x01d

⮑ \x01\x84\x08}\x01|\x01S\x00\x94Nh\x04(C\x02

⮑ \x04\x01\x94K\x01K\x00K\x00K\x01K\x02K\x13C

⮑ \x0e\x95\x01\x97\x00\x89\x01|\x00z\x00\x00\x00S

⮑ \x00\x94N\x85\x94)\x8c\x01y\x94\x85\x94\x8c

⮑ \x0c/tmp/plus.py\x94\x8c\x04plus\x94\x8c\x19

⮑ create_plus.<locals>.plus\x94K\x02C\x0c\xf8\x80

⮑ \x00\xd8\x0f\x10\x901\x89u\x88\x0c\x94C\x00\x94

⮑ \x8c\x01x\x94\x85\x94)t\x94R\x94\x86\x94)h\x11h

⮑ \r\x86\x94h\x0c\x8c\x0bcreate_plus\x94h\x17K

⮑ \x01C\x0f\xf8\x80\x00\xf4\x02\x01\x05\x15\xf0

⮑ \x06\x00\x0c\x10\x80K\x94h\x10)h\x11\x85\x94t

⮑ \x94R\x94}\x94\x8c\x08__name__\x94Nsh\x17NNt

⮑ \x94R\x94}\x94}\x94\x8c\x0f__annotations__\x94}

⮑ \x94s\x86\x94b.'

The .__module__ attribute of a function or a class indicates the name of the Python module it belongs to. As you might expect, the initial value of the create_plus() function’s .__module__ attribute is plus. This triggers the fallback mechanism, which delegates the serialization to pickle, resulting in a short byte sequence comprising only the fully qualified name of your function instead of the code object.

By artificially changing this attribute’s value to None, you detach the function from its module. However, callable objects have another special attribute, .__globals__, which is a dictionary representing the global namespace where you defined them. Some of its elements may be redundant, forcing dill to serialize more than necessary and potentially making the enclosing module required for deserialization.

The .__globals__ attribute is read-only, so you can’t easily override it. What you can do, though, is instruct dill to recursively traverse that namespace and cherry-pick objects to serialize as needed. In contrast, when you remove the recurse=True parameter from one of the highlighted lines above, the resulting byte sequence will be a few times longer!

Finally, it’s worth noting that recursive functions need special attention when you deserialize them. Here’s a sample function, which calculates the nth Fibonacci number, represented as a byte stream produced by dill:

>>> import dill

>>> fn = dill.loads(

... b"\x80\x04\x95-\x01\x00\x00\x00\x00\x00\x00\x8c\ndill._dill"

... b"\x94\x8c\x10_create_function\x94\x93\x94(h\x00\x8c\x0c"

... b"_create_code\x94\x93\x94(C\x02\x02\x01\x94K\x01K\x00K\x00K"

... b"\x01K\x05K\x03CJ\x97\x00|\x00d\x01k\x02\x00\x00r\x02d\x02S"

... b"\x00t\x01\x00\x00\x00\x00\x00\x00\x00\x00|\x00d\x01z\n\x00"

... b"\x00\xab\x01\x00\x00\x00\x00\x00\x00t\x01\x00\x00\x00\x00"

... b"\x00\x00\x00\x00|\x00d\x02z\n\x00\x00\xab\x01\x00\x00\x00"

... b"\x00\x00\x00z\x00\x00\x00S\x00\x94NK\x02K\x01\x87\x94\x8c"

... b"\x03fib\x94\x85\x94\x8c\x01n\x94\x85\x94\x8c\x0b/tmp/fib.py"

... b"\x94h\x08h\x08K\x01C(\x80\x00\xd8\x10\x11\x90A\x92\x05\x881"

... b"\xd0\x042\x9c3\x98q\xa01\x99u\x9b:\xac\x03\xa8A\xb0\x01\xa9E"

... b"\xab\n\xd1\x1b2\xd0\x042\x94C\x00\x94))t\x94R\x94c__builtin__"

... b"\n__main__\nh\x08NNt\x94R\x94}\x94}\x94\x8c\x0f__annotations__"

... b"\x94}\x94s\x86\x94b."

... )

>>> fn

<function fib at 0x7fd6b68dc220>

>>> fn(5)

Traceback (most recent call last):

...

NameError: name 'fib' is not defined

You successfully load the function from that byte stream and assign it to a variable named fn. Later, you use your new variable to call the deserialized function, which fails with a NameError due to referencing an undefined symbol, fib. What’s going on?

A recursive function calls itself until the base condition is met. However, to call itself, the function must know its own name. In the serialized version, the function was named fib, as indicated by the code object that you evaluated in the Python REPL. But there’s no such name in your current scope.

To fix this problem, you can create an alias for the function, like so:

>>> fib = fn

>>> [fib(n) for n in range(10)]

[1, 1, 2, 3, 5, 8, 13, 21, 34, 55]

Great! With the function assigned to fib, the recursive calls now succeed because the correct function name exists in your scope.

You’ve covered a lot of ground when it comes to serializing executable code. Before closing this topic, you should address the elephant in the room, which is the potential security risk associated with deserializing code that can run on your computer.

Protect From Malicious Code

When you browse Python’s documentation of the various data serialization formats that allow you to serialize code, you’ll notice prominent warnings about the potential security risks involved. These can enable the execution of arbitrary code during deserialization, exposing you to hacker attacks.

The official documentation page of pickle demonstrates an example of a handmade byte stream, which results in running a system command when unpickled:

>>> import pickle

>>> pickle.loads(b"cos\nsystem\n(S'echo hello world'\ntR.")

hello world

0

After unpickling this cleverly crafted byte sequence, Python automatically imports the os module and calls the system() function, passing a command to run in your shell. It merely executes the innocent echo command, which prints hello world on the screen. However, it could potentially do anything, including deleting files from your system, stealing sensitive data, installing malware, or even giving unauthorized access to your computer.

As a rule of thumb, you should always double-check that you can trust the source of the code before allowing it to run. But even that may not be enough when someone in the middle tampers with your code. The recommended practice is to either use a safer serialization format that requires input validation or implement countermeasures to prevent malicious code injection.

When using pickle, you can restrict which functions and classes are allowed to be deserialized by extending the Unpickler class and overriding its .find_class() method:

digital-signature/safe_unpickler.py

import importlib

import io

import pickle

class SafeUnpickler(pickle.Unpickler):

ALLOWED = {

"builtins": ["print"],

"sysconfig": ["get_python_version"],

}

@classmethod

def safe_loads(cls, serialized_data):

file = io.BytesIO(serialized_data)

return cls(file).load()

def find_class(self, module_name, name):

if module_name in self.ALLOWED:

if name in self.ALLOWED[module_name]:

module = importlib.import_module(module_name)

return getattr(module, name)